Operations Guide

- 1 Operations Overview

- 2 Tutorials

- 3 Third-Party Integrations

- 4 Managing Identity

- 5 Managing Compute

- 6 Managing ESX

- 7 Managing Block Storage

- 8 Managing Object Storage

- 9 Managing Networking

- 10 Managing the Dashboard

- 11 Managing Orchestration

- 12 Managing Monitoring, Logging, and Usage Reporting

- 13 System Maintenance

- 14 Backup and Restore

- 15 Troubleshooting Issues

12 Managing Monitoring, Logging, and Usage Reporting

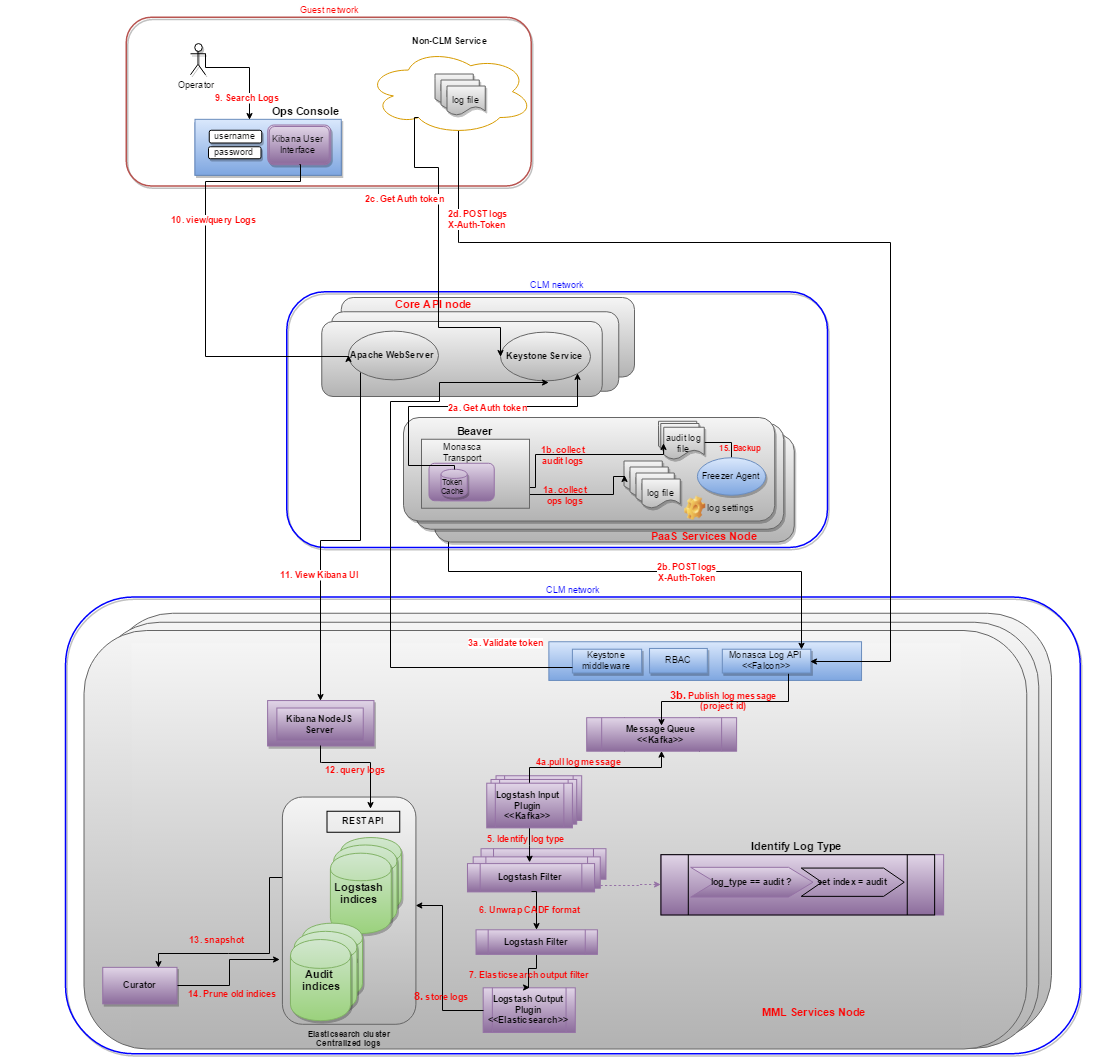

Information about the monitoring, logging, and metering services included with your HPE Helion OpenStack.

12.1 Monitoring #

The HPE Helion OpenStack Monitoring service leverages OpenStack Monasca, which is a multi-tenant, scalable, fault tolerant monitoring service.

12.1.1 Getting Started with Monitoring #

You can use the HPE Helion OpenStack Monitoring service to monitor the health of your cloud and, if necessary, to troubleshoot issues.

Monasca data can be extracted and used for a variety of legitimate purposes, and different purposes require different forms of data sanitization or encoding to protect against invalid or malicious data. Any data pulled from Monasca should be considered untrusted data, so users are advised to apply appropriate encoding and/or sanitization techniques to ensure safe and correct usage and display of data in a web browser, database scan, or any other use of the data.

12.1.1.1 Monitoring Service Overview #

12.1.1.1.1 Installation #

The monitoring service is automatically installed as part of the HPE Helion OpenStack installation.

No specific configuration is required to use Monasca. However, you can configure the database for storing metrics as explained in Section 12.1.2, “Configuring the Monitoring Service”.

12.1.1.1.2 Differences Between Upstream and HPE Helion OpenStack Implementations #

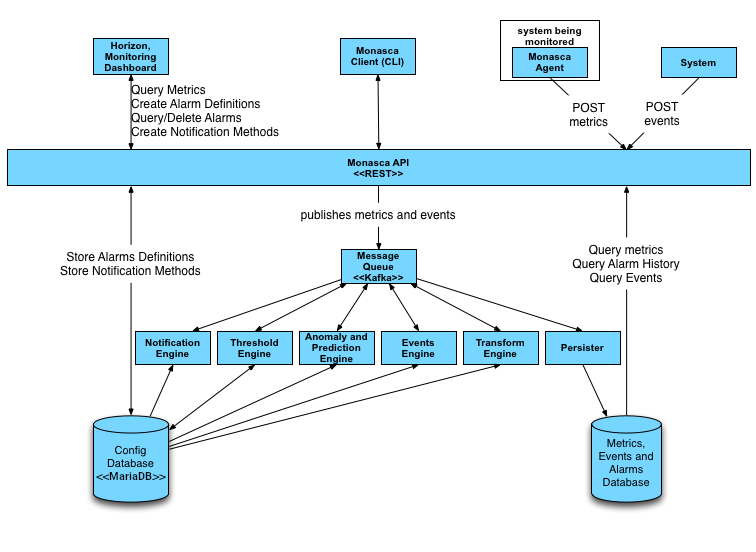

In HPE Helion OpenStack, the OpenStack monitoring service, Monasca, is included as the monitoring solution, except for the following which are not included:

Transform Engine

Events Engine

Anomaly and Prediction Engine

Note

Icinga was supported in previous HPE Helion OpenStack versions but it has been deprecated in HPE Helion OpenStack 8.

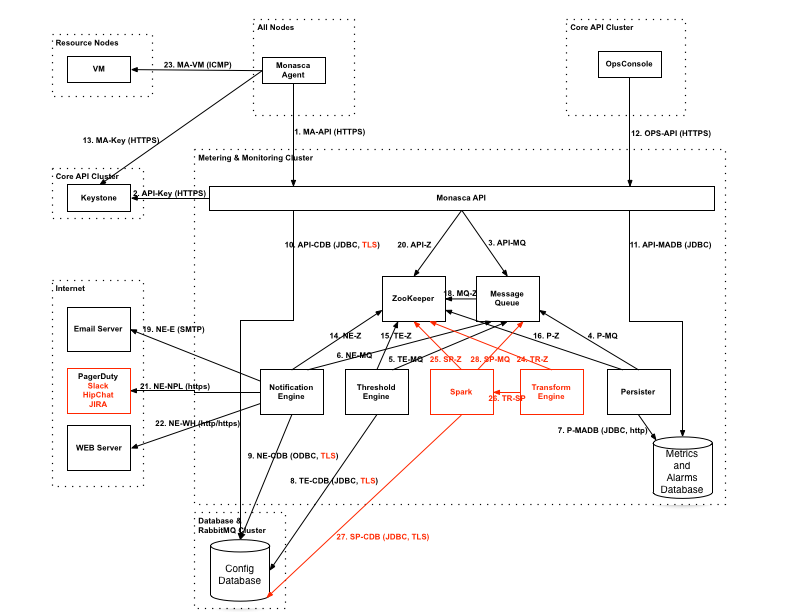

12.1.1.1.3 Diagram of Monasca Service #

12.1.1.1.4 For More Information #

For more details on OpenStack Monasca, see Monasca.io

12.1.1.1.5 Back-end Database #

The monitoring service default metrics database is Cassandra, which is a highly-scalable analytics database and the recommended database for HPE Helion OpenStack.

You can learn more about Cassandra at Apache Cassandra.

12.1.1.2 Working with Monasca #

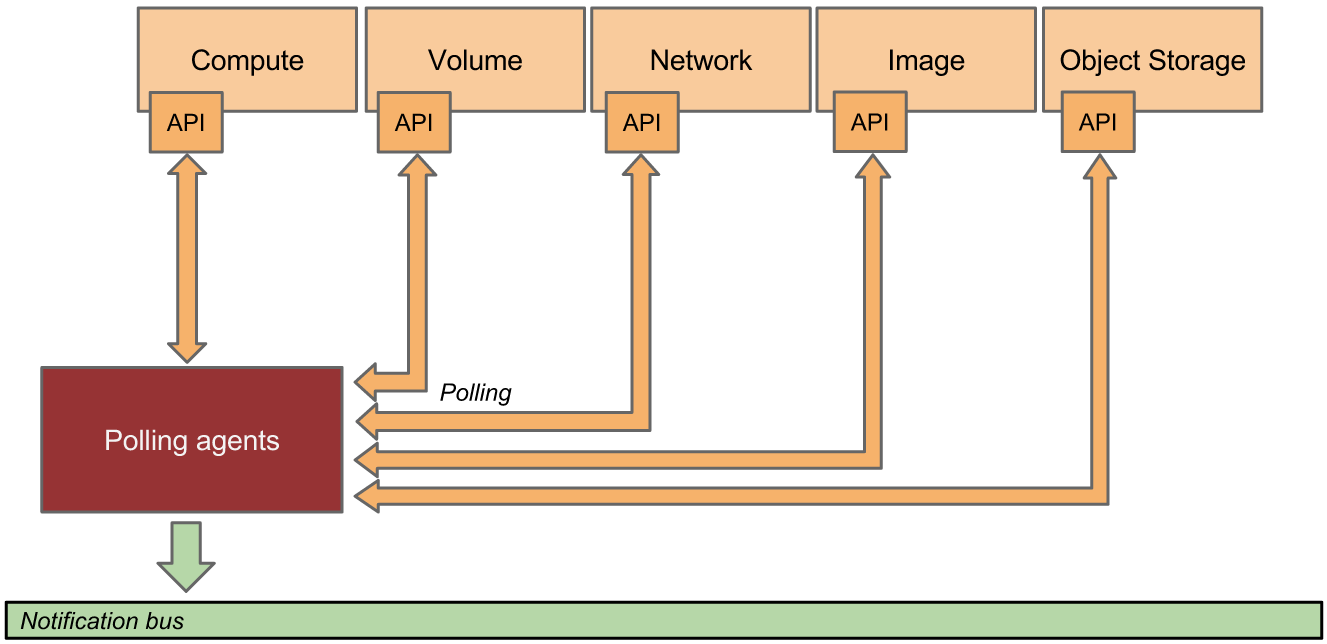

Monasca-Agent

The monasca-agent is a Python program that runs on the control plane nodes. It runs the defined checks and then sends data onto the API. The checks that the agent runs include:

System Metrics: CPU utilization, memory usage, disk I/O, network I/O, and filesystem utilization on the control plane and resource nodes.

Service Metrics: the agent supports plugins such as MySQL, RabbitMQ, Kafka, and many others.

VM Metrics: CPU utilization, disk I/O, network I/O, and memory usage of hosted virtual machines on compute nodes. Full details of these can be found https://github.com/openstack/monasca-agent/blob/master/docs/Plugins.md#per-instance-metrics.

For a full list of packaged plugins that are included HPE Helion OpenStack, see Monasca Plugins

You can further customize the monasca-agent to suit your needs, see Customizing the Agent

12.1.1.3 Accessing the Monitoring Service #

Access to the Monitoring service is available through a number of different interfaces.

12.1.1.3.1 Command-Line Interface #

For users who prefer using the command line, there is the python-monascaclient, which is part of the default installation on your Cloud Lifecycle Manager node.

For details on the CLI, including installation instructions, see Python-Monasca Client

Monasca API

If low-level access is desired, there is the Monasca REST API.

Full details of the Monasca API can be found on GitHub.

12.1.1.3.2 Operations Console GUI #

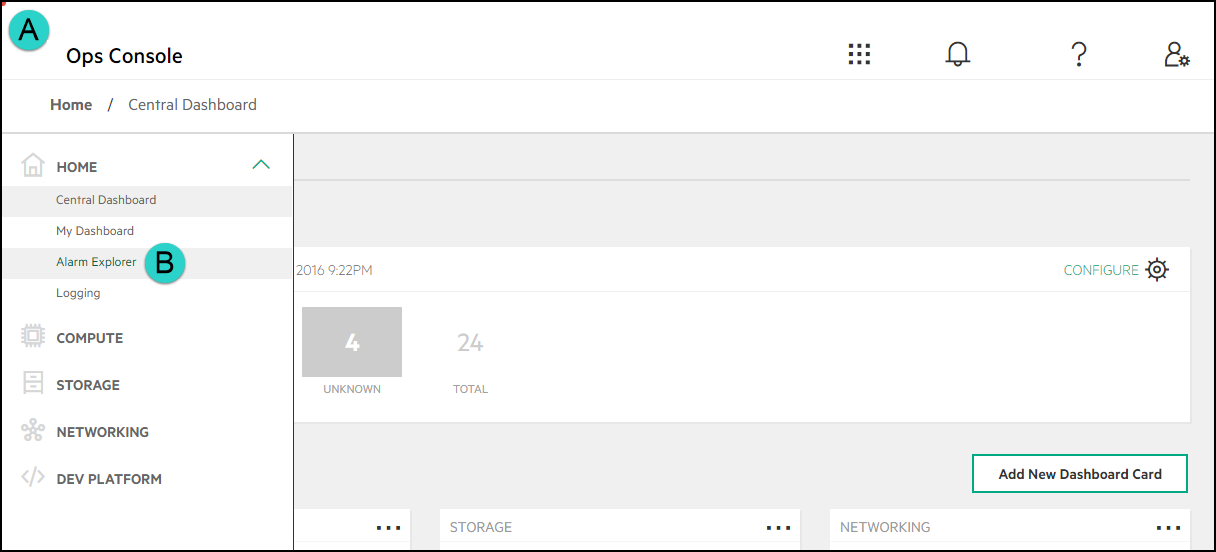

You can use the Operations Console (Ops Console) for HPE Helion OpenStack to view data about your HPE Helion OpenStack cloud infrastructure in a web-based graphical user interface (GUI) and ensure your cloud is operating correctly. By logging on to the console, HPE Helion OpenStack administrators can manage data in the following ways: Triage alarm notifications.

Alarm Definitions and notifications now have their own screens and are collected under the Alarm Explorer menu item which can be accessed from the Central Dashboard. Central Dashboard now allows you to customize the view in the following ways:

Rename or re-configure existing alarm cards to include services different from the defaults

Create a new alarm card with the services you want to select

Reorder alarm cards using drag and drop

View all alarms that have no service dimension now grouped in an Uncategorized Alarms card

View all alarms that have a service dimension that does not match any of the other cards -now grouped in an Other Alarms card

You can also easily access alarm data for a specific component. On the Summary page for the following components, a link is provided to an alarms screen specifically for that component:

Compute Instances: Book “User Guide”, Chapter 1 “Using the Operations Console”, Section 1.3 “Managing Compute Hosts”

Object Storage: Book “User Guide”, Chapter 1 “Using the Operations Console”, Section 1.4 “Managing Swift Performance”, Section 1.4.4 “Alarm Summary”

12.1.1.3.3 Connecting to the Operations Console #

To connect to Operations Console, perform the following:

Ensure your login has the required access credentials: Book “User Guide”, Chapter 1 “Using the Operations Console”, Section 1.2 “Connecting to the Operations Console”, Section 1.2.1 “Required Access Credentials”

Connect through a browser: Book “User Guide”, Chapter 1 “Using the Operations Console”, Section 1.2 “Connecting to the Operations Console”, Section 1.2.2 “Connect Through a Browser”

Optionally use a Host name OR virtual IP address to access Operations Console: Book “User Guide”, Chapter 1 “Using the Operations Console”, Section 1.2 “Connecting to the Operations Console”, Section 1.2.3 “Optionally use a Hostname OR virtual IP address to access Operations Console”

Operations Console will always be accessed over port 9095.

12.1.1.3.4 For More Information #

For more details about the Operations Console, see Book “User Guide”, Chapter 1 “Using the Operations Console”, Section 1.1 “Operations Console Overview”.

12.1.1.4 Service Alarm Definitions #

HPE Helion OpenStack comes with some predefined monitoring alarms for the services installed.

Full details of all service alarms can be found here: Section 15.1.1, “Alarm Resolution Procedures”.

Each alarm will have one of the following statuses:

- Open alarms, identified by red indicator.

- Open alarms, identified by yellow indicator.

- Open alarms, identified by gray indicator. Unknown will be the status of an alarm that has stopped receiving a metric. This can be caused by the following conditions:

An alarm exists for a service or component that is not installed in the environment.

An alarm exists for a virtual machine or node that previously existed but has been removed without the corresponding alarms being removed.

There is a gap between the last reported metric and the next metric.

- Complete list of open alarms.

- Complete list of alarms, may include Acknowledged and Resolved alarms.

When alarms are triggered it is helpful to review the service logs.

12.1.2 Configuring the Monitoring Service #

The monitoring service, based on Monasca, allows you to configure an external SMTP server for email notifications when alarms trigger. You also have options for your alarm metrics database should you choose not to use the default option provided with the product.

In HPE Helion OpenStack you have the option to specify a SMTP server for email notifications and a database platform you want to use for the metrics database. These steps will assist in this process.

12.1.2.1 Configuring the Monitoring Email Notification Settings #

The monitoring service, based on Monasca, allows you to configure an external SMTP server for email notifications when alarms trigger. In HPE Helion OpenStack, you have the option to specify a SMTP server for email notifications. These steps will assist in this process.

If you are going to use the email notifiication feature of the monitoring service, you must set the configuration options with valid email settings including an SMTP server and valid email addresses. The email server is not provided by HPE Helion OpenStack, but must be specified in the configuration file described below. The email server must support SMTP.

12.1.2.1.1 Configuring monitoring notification settings during initial installation #

Log in to the Cloud Lifecycle Manager.

To change the SMTP server configuration settings edit the following file:

~/openstack/my_cloud/definition/cloudConfig.yml

Enter your email server settings. Here is an example snippet showing the configuration file contents, uncomment these lines before entering your environment details.

smtp-settings: # server: mailserver.examplecloud.com # port: 25 # timeout: 15 # These are only needed if your server requires authentication # user: # password:This table explains each of these values:

Value Description Server (required) The server entry must be uncommented and set to a valid hostname or IP Address.

Port (optional) If your SMTP server is running on a port other than the standard 25, then uncomment the port line and set it your port.

Timeout (optional) If your email server is heavily loaded, the timeout parameter can be uncommented and set to a larger value. 15 seconds is the default.

User / Password (optional) If your SMTP server requires authentication, then you can configure user and password. Use double quotes around the password to avoid issues with special characters.

To configure the sending email addresses, edit the following file:

~/openstack/ardana/ansible/roles/monasca-notification/defaults/main.yml

Modify the following value to add your sending email address:

email_from_addr

Note

The default value in the file is

email_from_address: notification@exampleCloud.comwhich you should edit.[optional] To configure the receiving email addresses, edit the following file:

~/openstack/ardana/ansible/roles/monasca-default-alarms/defaults/main.yml

Modify the following value to configure a receiving email address:

notification_address

Note

You can also set the receiving email address via the Operations Console. Instructions for this are in the last section.

If your environment requires a proxy address then you can add that in as well:

# notification_environment can be used to configure proxies if needed. # Below is an example configuration. Note that all of the quotes are required. # notification_environment: '"http_proxy=http://<your_proxy>:<port>" "https_proxy=http://<your_proxy>:<port>"' notification_environment: ''Commit your configuration to the local Git repository (see Book “Installing with Cloud Lifecycle Manager”, Chapter 10 “Using Git for Configuration Management”), as follows:

ardana >cd ~/openstack/ardana/ansibleardana >git add -Aardana >git commit -m "Updated monitoring service email notification settings"Continue with your installation.

12.1.2.1.2 Monasca and Apache Commons validator #

The Monasca notification uses a standard Apache Commons validator to validate the configured HPE Helion OpenStack domain names before sending the notification over webhook. Monasca notification supports some non-standard domain names, but not all. See the Domain Validator documentation for more information: https://commons.apache.org/proper/commons-validator/apidocs/org/apache/commons/validator/routines/DomainValidator.html

You should ensure that any domains that you use are supported by IETF and IANA. As an example, .local is not listed by IANA and is invalid but .gov and .edu are valid.

Internet Assigned Numbers Authority (IANA): https://www.iana.org/domains/root/db

Failure to use supported domains will generate an unprocessable exception in Monasca notification create:

HTTPException code=422 message={"unprocessable_entity":

{"code":422,"message":"Address https://myopenstack.sample:8000/v1/signal/test is not of correct format","details":"","internal_code":"c6cf9d9eb79c3fc4"}12.1.2.1.3 Configuring monitoring notification settings after the initial installation #

If you need to make changes to the email notification settings after your initial deployment, you can change the "From" address using the configuration files but the "To" address will need to be changed in the Operations Console. The following section will describe both of these processes.

To change the sending email address:

Log in to the Cloud Lifecycle Manager.

To configure the sending email addresses, edit the following file:

~/openstack/ardana/ansible/roles/monasca-notification/defaults/main.yml

Modify the following value to add your sending email address:

email_from_addr

Note

The default value in the file is

email_from_address: notification@exampleCloud.comwhich you should edit.Commit your configuration to the local Git repository (Book “Installing with Cloud Lifecycle Manager”, Chapter 10 “Using Git for Configuration Management”), as follows:

ardana >cd ~/openstack/ardana/ansibleardana >git add -Aardana >git commit -m "Updated monitoring service email notification settings"Run the configuration processor:

ardana >cd ~/openstack/ardana/ansibleardana >ansible-playbook -i hosts/localhost config-processor-run.ymlUpdate your deployment directory:

ardana >cd ~/openstack/ardana/ansibleardana >ansible-playbook -i hosts/localhost ready-deployment.ymlRun the Monasca reconfigure playbook to deploy the changes:

ardana >cd ~/scratch/ansible/next/ardana/ansibleardana >ansible-playbook -i hosts/verb_hosts monasca-reconfigure.yml --tags notificationNote

You may need to use the

--ask-vault-passswitch if you opted for encryption during the initial deployment.

To change the receiving email address via the Operations Console:

To configure the "To" email address, after installation,

Connect to and log in to the Operations Console. See Book “User Guide”, Chapter 1 “Using the Operations Console”, Section 1.2 “Connecting to the Operations Console” for assistance.

On the Home screen, click the menu represented by 3 horizontal lines (

).

).

From the menu that slides in on the left side, click Home, and then Alarm Explorer.

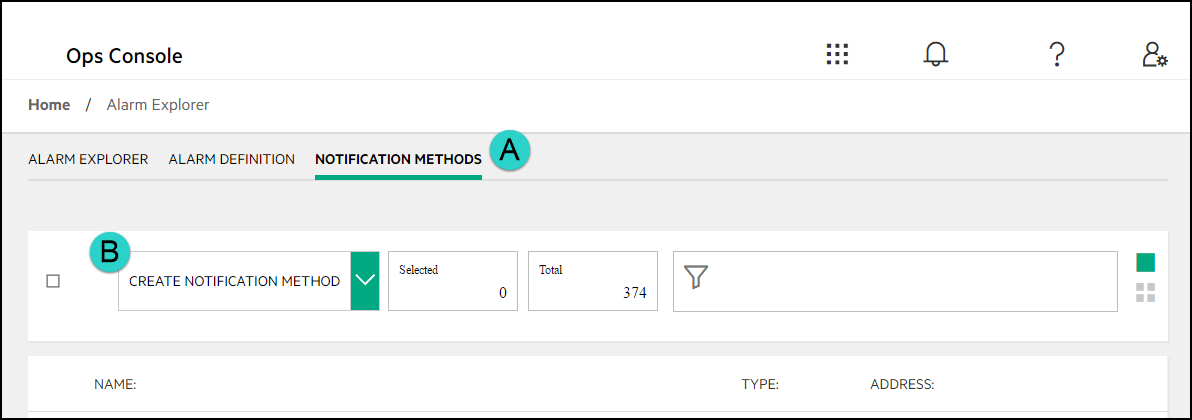

On the Alarm Explorer page, at the top, click the Notification Methods text.

On the Notification Methods page, find the row with the Default Email notification.

In the Default Email row, click the details icon (

), then click

Edit.

), then click

Edit.

On the Edit Notification Method: Default Email page, in Name, Type, and Address/Key, type in the values you want to use.

On the Edit Notification Method: Default Email page, click Update Notification.

Important

Once the notification has been added, using the procedures using the Ansible playbooks will not change it.

12.1.2.2 Managing Notification Methods for Alarms #

12.1.2.2.1 Enabling a Proxy for Webhook or Pager Duty Notifications #

If your environment requires a proxy in order for communications to function then these steps will show you how you can enable one. These steps will only be needed if you are utilizing the webhook or pager duty notification methods.

These steps will require access to the Cloud Lifecycle Manager in your cloud deployment so you may need to contact your Administrator. You can make these changes during the initial configuration phase prior to the first installation or you can modify your existing environment, the only difference being the last step.

Log in to the Cloud Lifecycle Manager.

Edit the

~/openstack/ardana/ansible/roles/monasca-notification/defaults/main.ymlfile and edit the line below with your proxy address values:notification_environment: '"http_proxy=http://<proxy_address>:<port>" "https_proxy=<http://proxy_address>:<port>"'

Note

There are single quotation marks around the entire value of this entry and then double quotation marks around the individual proxy entries. This formatting must exist when you enter these values into your configuration file.

If you are making these changes prior to your initial installation then you are done and can continue on with the installation. However, if you are modifying an existing environment, you will need to continue on with the remaining steps below.

Commit your configuration to the local Git repository (see Book “Installing with Cloud Lifecycle Manager”, Chapter 10 “Using Git for Configuration Management”), as follows:

ardana >cd ~/openstack/ardana/ansibleardana >git add -Aardana >git commit -m "My config or other commit message"Run the configuration processor:

ardana >cd ~/openstack/ardana/ansibleardana >ansible-playbook -i hosts/localhost config-processor-run.ymlGenerate an updated deployment directory:

ardana >cd ~/openstack/ardana/ansibleardana >ansible-playbook -i hosts/localhost ready-deployment.ymlRun the Monasca reconfigure playbook to enable these changes:

ardana >cd ~/scratch/ansible/next/ardana/ansibleardana >ansible-playbook -i hosts/verb_hosts monasca-reconfigure.yml --tags notification

12.1.2.2.2 Creating a New Notification Method #

Log in to the Operations Console. For more information, see Book “User Guide”, Chapter 1 “Using the Operations Console”, Section 1.2 “Connecting to the Operations Console”.

Use the navigation menu to go to the Alarm Explorer page:

Select the Notification Methods menu and then click the Create Notification Method button:

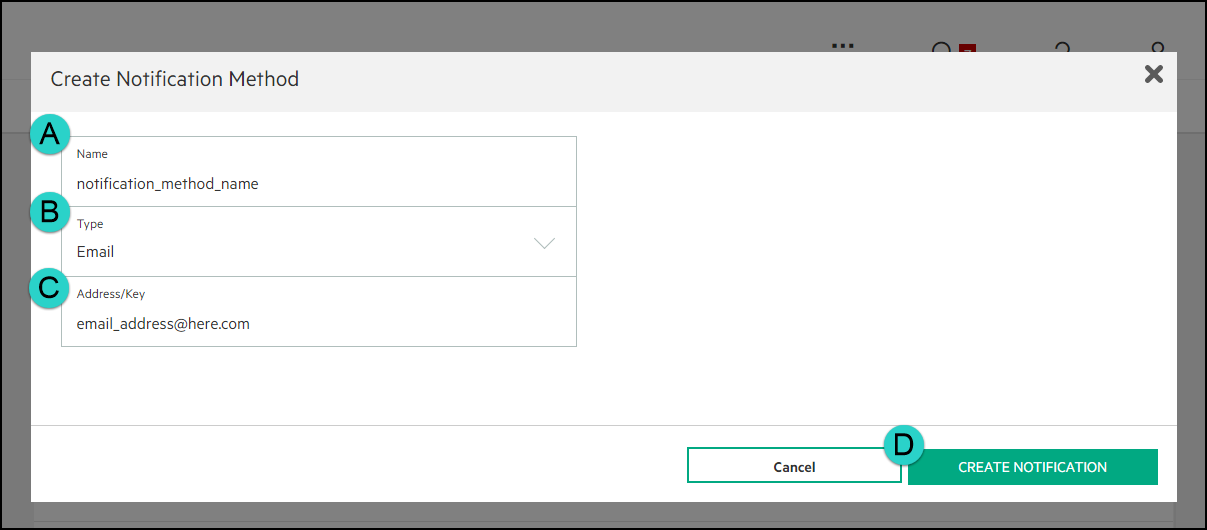

On the Create Notification Method window you will select your options and then click the Create Notification button.

A description of each of the fields you use for each notification method:

Field Description Name Enter a unique name value for the notification method you are creating.

Type Choose a type. Available values are Webhook, Email, or Pager Duty.

Address/Key Enter the value corresponding to the type you chose.

12.1.2.2.3 Applying a Notification Method to an Alarm Definition #

Log in to the Operations Console. For more informalfigure, see Book “User Guide”, Chapter 1 “Using the Operations Console”, Section 1.2 “Connecting to the Operations Console”.

Use the navigation menu to go to the Alarm Explorer page:

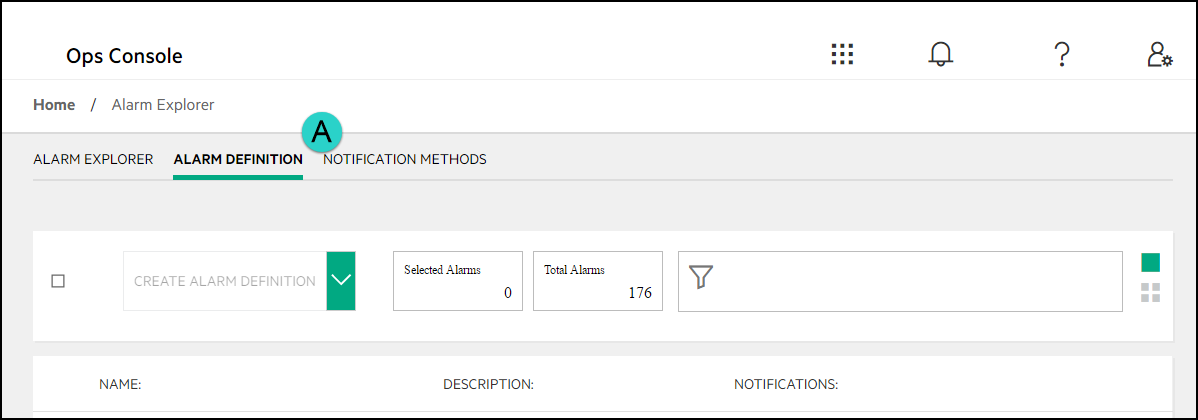

Select the Alarm Definition menu which will give you a list of each of the alarm definitions in your environment.

Locate the alarm you want to change the notification method for and click on its name to bring up the edit menu. You can use the sorting methods for assistance.

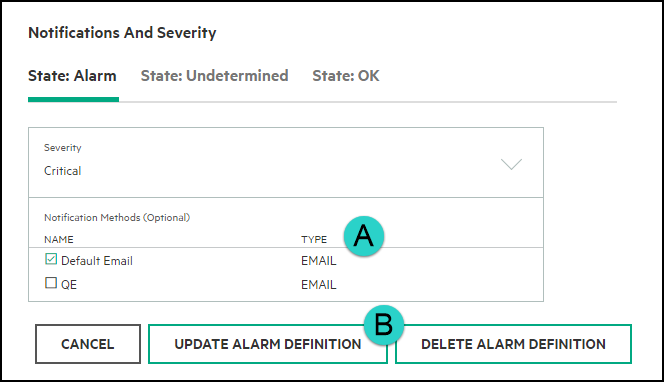

In the edit menu, scroll down to the Notifications and Severity section where you will select one or more Notification Methods before selecting the Update Alarm Definition button:

Repeat as needed until all of your alarms have the notification methods you desire.

12.1.2.3 Enabling the RabbitMQ Admin Console #

The RabbitMQ Admin Console is off by default in HPE Helion OpenStack. You can turn on the console by following these steps:

Log in to the Cloud Lifecycle Manager.

Edit the

~/openstack/my_cloud/config/rabbitmq/main.ymlfile. Under therabbit_plugins:line, uncomment- rabbitmq_management

Commit your configuration to the local Git repository (see Book “Installing with Cloud Lifecycle Manager”, Chapter 10 “Using Git for Configuration Management”), as follows:

ardana >cd ~/openstack/ardana/ansibleardana >git add -Aardana >git commit -m "Enabled RabbitMQ Admin Console"Run the configuration processor:

ardana >cd ~/openstack/ardana/ansibleardana >ansible-playbook -i hosts/localhost config-processor-run.ymlUpdate your deployment directory:

ardana >cd ~/openstack/ardana/ansibleardana >ansible-playbook -i hosts/localhost ready-deployment.ymlRun the RabbitMQ reconfigure playbook to deploy the changes:

ardana >cd ~/scratch/ansible/next/ardana/ansibleardana >ansible-playbook -i hosts/verb_hosts rabbitmq-reconfigure.yml

To turn the RabbitMQ Admin Console off again, add the comment back and repeat steps 3 through 6.

12.1.2.4 Capacity Reporting and Monasca Transform #

Capacity reporting is a new feature in HPE Helion OpenStack which will provide cloud operators overall capacity (available, used, and remaining) information via the Operations Console so that the cloud operator can ensure that cloud resource pools have sufficient capacity to meet the demands of users. The cloud operator is also able to set thresholds and set alarms to be notified when the thresholds are reached.

For Compute

Host Capacity - CPU/Disk/Memory: Used, Available and Remaining Capacity - for the entire cloud installation or by host

VM Capacity - CPU/Disk/Memory: Allocated, Available and Remaining - for the entire cloud installation, by host or by project

For Object Storage

Disk Capacity - Used, Available and Remaining Capacity - for the entire cloud installation or by project

In addition to overall capacity, roll up views with appropriate slices provide views by a particular project, or compute node. Graphs also show trends and the change in capacity over time.

12.1.2.4.1 Monasca Transform Features #

Monasca Transform is a new component in Monasca which transforms and aggregates metrics using Apache Spark

Aggregated metrics are published to Kafka and are available for other monasca components like monasca-threshold and are stored in monasca datastore

Cloud operators can set thresholds and set alarms to receive notifications when thresholds are met.

These aggregated metrics are made available to the cloud operators via Operations Console's new Capacity Summary (reporting) UI

Capacity reporting is a new feature in HPE Helion OpenStack which will provides cloud operators an overall capacity (available, used and remaining) for Compute and Object Storage

Cloud operators can look at Capacity reporting via Operations Console's Compute Capacity Summary and Object Storage Capacity Summary UI

Capacity reporting allows the cloud operators the ability to ensure that cloud resource pools have sufficient capacity to meet demands of users. See table below for Service and Capacity Types.

A list of aggregated metrics is provided in Section 12.1.2.4.4, “New Aggregated Metrics”.

Capacity reporting aggregated metrics are aggregated and published every hour

In addition to the overall capacity, there are graphs which show the capacity trends over time range (for 1 day, for 7 days, for 30 days or for 45 days)

Graphs showing the capacity trends by a particular project or compute host are also provided.

Monasca Transform is integrated with centralized monitoring (Monasca) and centralized logging

Flexible Deployment

Upgrade & Patch Support

| Service | Type of Capacity | Description |

|---|---|---|

| Compute | Host Capacity |

CPU/Disk/Memory: Used, Available and Remaining Capacity - for entire cloud installation or by compute host |

| VM Capacity |

CPU/Disk/Memory: Allocated, Available and Remaining - for entire cloud installation, by host or by project | |

| Object Storage | Disk Capacity |

Used, Available and Remaining Disk Capacity - for entire cloud installation or by project |

| Storage Capacity |

Utilized Storage Capacity - for entire cloud installation or by project |

12.1.2.4.2 Architecture for Monasca Transform and Spark #

Monasca Transform is a new component in Monasca. Monasca Transform uses Spark for data aggregation. Both Monasca Transform and Spark are depicted in the example diagram below.

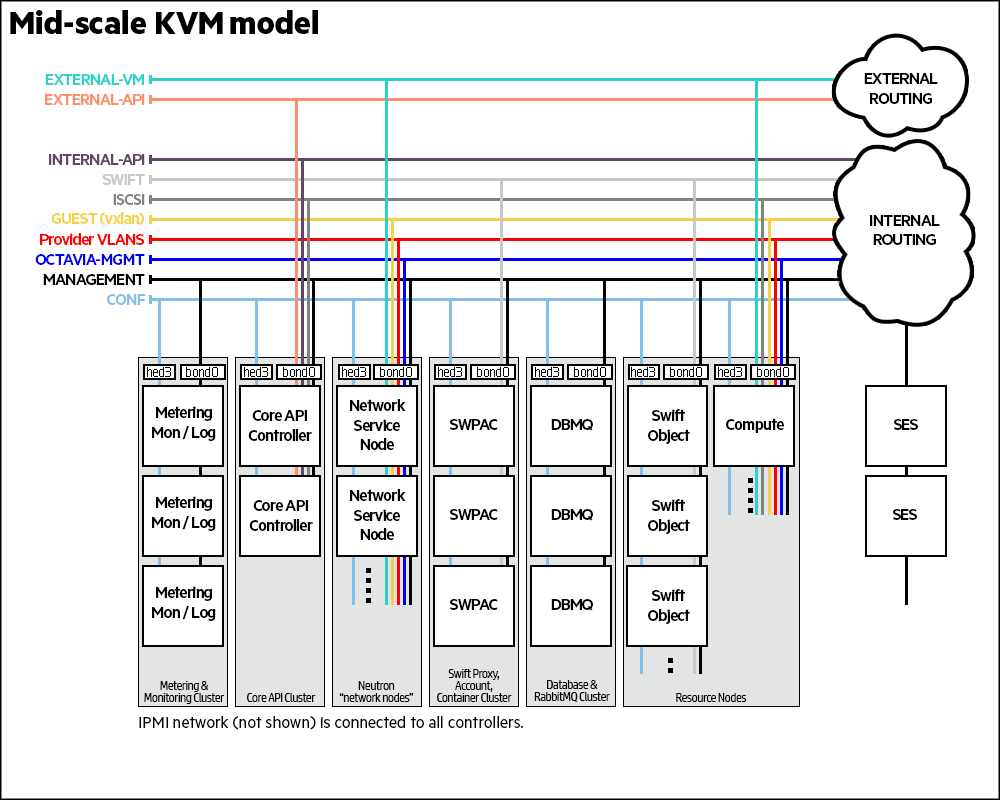

You can see that the Monasca components run on the Cloud Controller nodes, and the Monasca agents run on all nodes in the Mid-scale Example configuration.

12.1.2.4.3 Components for Capacity Reporting #

12.1.2.4.3.1 Monasca Transform: Data Aggregation Reporting #

Monasca-transform is a new component which provides mechanism to aggregate or transform metrics and publish new aggregated metrics to Monasca.

Monasca Transform is a data driven Apache Spark based data aggregation engine which collects, groups and aggregates existing individual Monasca metrics according to business requirements and publishes new transformed (derived) metrics to the Monasca Kafka queue.

Since the new transformed metrics are published as any other metric in Monasca, alarms can be set and triggered on the transformed metric, just like any other metric.

12.1.2.4.3.2 Object Storage and Compute Capacity Summary Operations Console UI #

A new "Capacity Summary" tab for Compute and Object Storage will displays all the aggregated metrics under the "Compute" and "Object Storage" sections.

Operations Console UI makes calls to Monasca API to retrieve and display various tiles and graphs on Capacity Summary tab in Compute and Object Storage Summary UI pages.

12.1.2.4.3.3 Persist new metrics and Trigger Alarms #

New aggregated metrics will be published to Monasca's Kafka queue and will be ingested by monasca-persister. If thresholds and alarms have been set on the aggregated metrics, Monasca will generate and trigger alarms as it currently does with any other metric. No new/additional change is expected with persisting of new aggregated metrics or setting threshold/alarms.

12.1.2.4.4 New Aggregated Metrics #

Following is the list of aggregated metrics produced by monasca transform in HPE Helion OpenStack

Table 12.1: Aggregated Metrics #

| Metric Name | For | Description | Dimensions | Notes | |

|---|---|---|---|---|---|

| 1 |

cpu.utilized_logical_cores_agg | compute summary |

utilized physical host cpu core capacity for one or all hosts by time interval (defaults to a hour) |

aggregation_period: hourly host: all or <host name> project_id: all | Available as total or per host |

| 2 | cpu.total_logical_cores_agg | compute summary |

total physical host cpu core capacity for one or all hosts by time interval (defaults to a hour) |

aggregation_period: hourly host: all or <host name> project_id: all | Available as total or per host |

| 3 | mem.total_mb_agg | compute summary |

total physical host memory capacity by time interval (defaults to a hour) |

aggregation_period: hourly host: all project_id: all | |

| 4 | mem.usable_mb_agg | compute summary |

usable physical host memory capacity by time interval (defaults to a hour) |

aggregation_period: hourly host: all project_id: all | |

| 5 | disk.total_used_space_mb_agg | compute summary |

utilized physical host disk capacity by time interval (defaults to a hour) |

aggregation_period: hourly host: all project_id: all | |

| 6 | disk.total_space_mb_agg | compute summary |

total physical host disk capacity by time interval (defaults to a hour) |

aggregation_period: hourly host: all project_id: all | |

| 7 | nova.vm.cpu.total_allocated_agg | compute summary |

cpus allocated across all VMs by time interval (defaults to a hour) |

aggregation_period: hourly host: all project_id: all | |

| 8 | vcpus_agg | compute summary |

virtual cpus allocated capacity for VMs of one or all projects by time interval (defaults to a hour) |

aggregation_period: hourly host: all project_id: all or <project ID> | Available as total or per project |

| 9 | nova.vm.mem.total_allocated_mb_agg | compute summary |

memory allocated to all VMs by time interval (defaults to a hour) |

aggregation_period: hourly host: all project_id: all | |

| 10 | vm.mem.used_mb_agg | compute summary |

memory utilized by VMs of one or all projects by time interval (defaults to an hour) |

aggregation_period: hourly host: all project_id: <project ID> | Available as total or per project |

| 11 | vm.mem.total_mb_agg | compute summary |

memory allocated to VMs of one or all projects by time interval (defaults to an hour) |

aggregation_period: hourly host: all project_id: <project ID> | Available as total or per project |

| 12 | vm.cpu.utilization_perc_agg | compute summary |

cpu utilized by all VMs by project by time interval (defaults to an hour) |

aggregation_period: hourly host: all project_id: <project ID> | |

| 13 | nova.vm.disk.total_allocated_gb_agg | compute summary |

disk space allocated to all VMs by time interval (defaults to a hour) |

aggregation_period: hourly host: all project_id: all | |

| 14 | vm.disk.allocation_agg | compute summary |

disk allocation for VMs of one or all projects by time interval (defaults to a hour) |

aggregation_period: hourly host: all project_id: all or <project ID> | Available as total or per project |

| 15 | swiftlm.diskusage.val.size_agg | object storage summary |

total available object storage capacity by time interval (defaults to a hour) |

aggregation_period: hourly host: all or <host name> project_id: all | Available as total or per host |

| 16 | swiftlm.diskusage.val.avail_agg | object storage summary |

remaining object storage capacity by time interval (defaults to a hour) |

aggregation_period: hourly host: all or <host name> project_id: all | Available as total or per host |

| 17 | swiftlm.diskusage.rate_agg | object storage summary |

rate of change of object storage usage by time interval (defaults to a hour) |

aggregation_period: hourly host: all project_id: all | |

| 18 | storage.objects.size_agg | object storage summary |

used object storage capacity by time interval (defaults to a hour) |

aggregation_period: hourly host: all project_id: all |

12.1.2.4.5 Deployment #

Monasca Transform and Spark will be deployed on the same control plane nodes along with Logging and Monitoring Service (Monasca).

Security Consideration during deployment of Monasca Transform and Spark

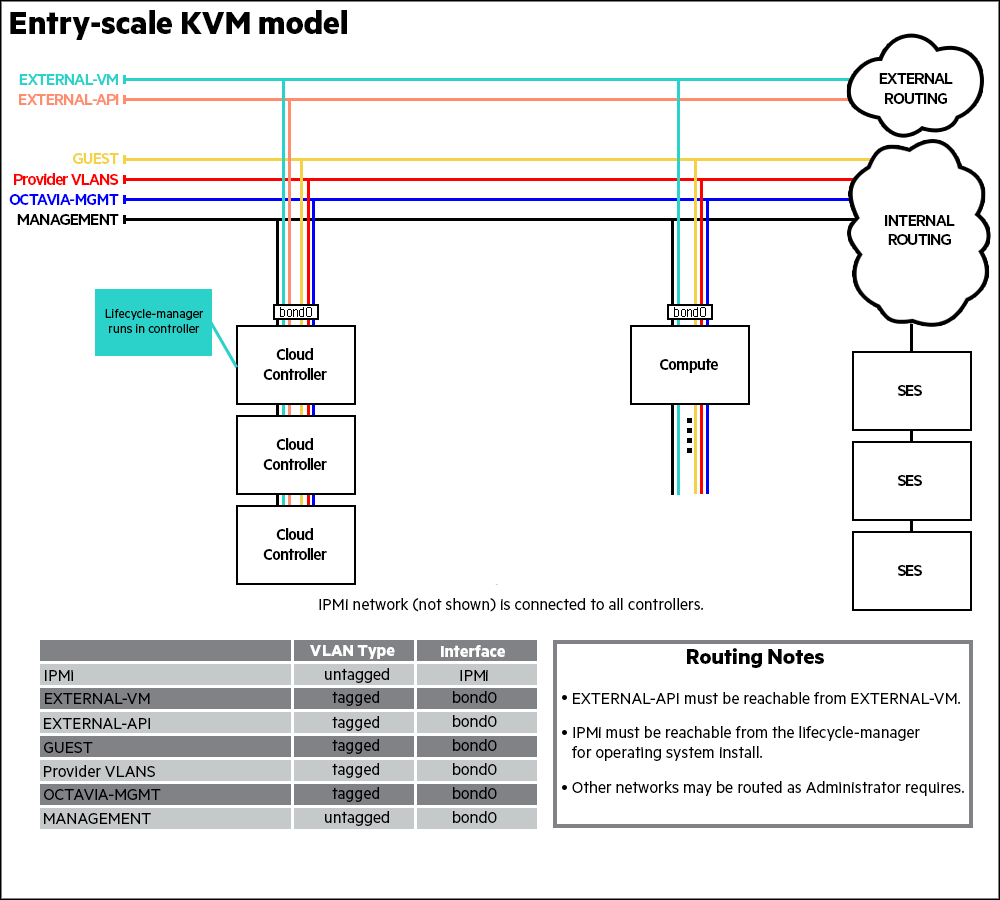

The HPE Helion OpenStack Monitoring system connects internally to the Kafka and Spark technologies without authentication. If you choose to deploy Monitoring, configure it to use only trusted networks such as the Management network, as illustrated on the network diagrams below for Entry Scale Deployment and Mid Scale Deployment.

Entry Scale Deployment

In Entry Scale Deployment Monasca Transform and Spark will be deployed on Shared Control Plane along with other Openstack Services along with Monitoring and Logging

Mid scale Deployment

In a Mid Scale Deployment Monasca Transform and Spark will be deployed on dedicated Metering Monitoring and Logging (MML) control plane along with other data processing intensive services like Metering, Monitoring and Logging

Multi Control Plane Deployment

In a Multi Control Plane Deployment, Monasca Transform and Spark will be deployed on the Shared Control plane along with rest of Monasca Components.

Start, Stop and Status for Monasca Transform and Spark processes

The service management methods for monasca-transform and spark follow the convention for services in the OpenStack platform. When executing from the deployer node, the commands are as follows:

Status

ardana >cd ~/scratch/ansible/next/ardana/ansibleardana >ansible-playbook -i hosts/verb_hosts spark-status.ymlardana >ansible-playbook -i hosts/verb_hosts monasca-transform-status.yml

Start

As monasca-transform depends on spark for the processing of the metrics spark will need to be started before monasca-transform.

ardana >cd ~/scratch/ansible/next/ardana/ansibleardana >ansible-playbook -i hosts/verb_hosts spark-start.ymlardana >ansible-playbook -i hosts/verb_hosts monasca-transform-start.yml

Stop

As a precaution, stop the monasca-transform service before taking spark down. Interruption to the spark service altogether while monasca-transform is still running can result in a monasca-transform process that is unresponsive and needing to be tidied up.

ardana >cd ~/scratch/ansible/next/ardana/ansibleardana >ansible-playbook -i hosts/verb_hosts monasca-transform-stop.ymlardana >ansible-playbook -i hosts/verb_hosts spark-stop.yml

12.1.2.4.6 Reconfigure #

The reconfigure process can be triggered again from the deployer. Presuming that changes have been made to the variables in the appropriate places execution of the respective ansible scripts will be enough to update the configuration. The spark reconfigure process alters the nodes serially meaning that spark is never down altogether, each node is stopped in turn and zookeeper manages the leaders accordingly. This means that monasca-transform may be left running even while spark is upgraded.

ardana >cd ~/scratch/ansible/next/ardana/ansibleardana >ansible-playbook -i hosts/verb_hosts spark-reconfigure.ymlardana >ansible-playbook -i hosts/verb_hosts monasca-transform-reconfigure.yml

12.1.2.4.7 Adding Monasca Transform and Spark to HPE Helion OpenStack Deployment #

Since Monasca Transform and Spark are optional components, the users might elect to not install these two components during their initial HPE Helion OpenStack install. The following instructions provide a way the users can add Monasca Transform and Spark to their existing HPE Helion OpenStack deployment.

Steps

Add Monasca Transform and Spark to the input model. Monasca Transform and Spark on a entry level cloud would be installed on the common control plane, for mid scale cloud which has a MML (Metering, Monitoring and Logging) cluster, Monasca Transform and Spark will should be added to MML cluster.

ardana >cd ~/openstack/my_cloud/definition/data/Add spark and monasca-transform to input model, control_plane.yml

clusters - name: core cluster-prefix: c1 server-role: CONTROLLER-ROLE member-count: 3 allocation-policy: strict service-components: [...] - zookeeper - kafka - cassandra - storm - spark - monasca-api - monasca-persister - monasca-notifier - monasca-threshold - monasca-client - monasca-transform [...]Run the Configuration Processor

ardana >cd ~/openstack/my_cloud/definitionardana >git add -Aardana >git commit -m "Adding Monasca Transform and Spark"ardana >cd ~/openstack/ardana/ansibleardana >ansible-playbook -i hosts/localhost config-processor-run.ymlRun Ready Deployment

ardana >cd ~/openstack/ardana/ansibleardana >ansible-playbook -i hosts/localhost ready-deployment.ymlRun Cloud Lifecycle Manager Deploy

ardana >cd ~/scratch/ansible/next/ardana/ansibleardana >ansible-playbook -i hosts/verb_hosts ardana-deploy.yml

Verify Deployment

Login to each controller node and run

tux >sudo service monasca-transform statustux >sudo service spark-master statustux >sudo service spark-worker status

tux >sudo service monasca-transform status ● monasca-transform.service - Monasca Transform Daemon Loaded: loaded (/etc/systemd/system/monasca-transform.service; disabled) Active: active (running) since Wed 2016-08-24 00:47:56 UTC; 2 days ago Main PID: 7351 (bash) CGroup: /system.slice/monasca-transform.service ├─ 7351 bash /etc/monasca/transform/init/start-monasca-transform.sh ├─ 7352 /opt/stack/service/monasca-transform/venv//bin/python /opt/monasca/monasca-transform/lib/service_runner.py ├─27904 /bin/sh -c export SPARK_HOME=/opt/stack/service/spark/venv/bin/../current && spark-submit --supervise --master spark://omega-cp1-c1-m1-mgmt:7077,omega-cp1-c1-m2-mgmt:7077,omega-cp1-c1... ├─27905 /usr/lib/jvm/java-8-openjdk-amd64/jre/bin/java -cp /opt/stack/service/spark/venv/lib/drizzle-jdbc-1.3.jar:/opt/stack/service/spark/venv/bin/../current/conf/:/opt/stack/service/spark/v... └─28355 python /opt/monasca/monasca-transform/lib/driver.py Warning: Journal has been rotated since unit was started. Log output is incomplete or unavailable.tux >sudo service spark-worker status ● spark-worker.service - Spark Worker Daemon Loaded: loaded (/etc/systemd/system/spark-worker.service; disabled) Active: active (running) since Wed 2016-08-24 00:46:05 UTC; 2 days ago Main PID: 63513 (bash) CGroup: /system.slice/spark-worker.service ├─ 7671 python -m pyspark.daemon ├─28948 /usr/lib/jvm/java-8-openjdk-amd64/jre/bin/java -cp /opt/stack/service/spark/venv/bin/../current/conf/:/opt/stack/service/spark/venv/bin/../current/lib/spark-assembly-1.6.1-hadoop2.6.0... ├─63513 bash /etc/spark/init/start-spark-worker.sh & └─63514 /usr/bin/java -cp /opt/stack/service/spark/venv/bin/../current/conf/:/opt/stack/service/spark/venv/bin/../current/lib/spark-assembly-1.6.1-hadoop2.6.0.jar:/opt/stack/service/spark/ven... Warning: Journal has been rotated since unit was started. Log output is incomplete or unavailable.tux >sudo service spark-master status ● spark-master.service - Spark Master Daemon Loaded: loaded (/etc/systemd/system/spark-master.service; disabled) Active: active (running) since Wed 2016-08-24 00:44:24 UTC; 2 days ago Main PID: 55572 (bash) CGroup: /system.slice/spark-master.service ├─55572 bash /etc/spark/init/start-spark-master.sh & └─55573 /usr/bin/java -cp /opt/stack/service/spark/venv/bin/../current/conf/:/opt/stack/service/spark/venv/bin/../current/lib/spark-assembly-1.6.1-hadoop2.6.0.jar:/opt/stack/service/spark/ven... Warning: Journal has been rotated since unit was started. Log output is incomplete or unavailable.

12.1.2.4.8 Increase Monasca Transform Scale #

Monasca Transform in the default configuration can scale up to estimated data for 100 node cloud deployment. Estimated maximum rate of metrics from a 100 node cloud deployment is 120M/hour.

You can further increase the processing rate to 180M/hour. Making the Spark configuration change will increase the CPU's being used by Spark and Monasca Transform from average of around 3.5 to 5.5 CPU's per control node over a 10 minute batch processing interval.

To increase the processing rate to 180M/hour the customer will have to make following spark configuration change.

Steps

Edit /var/lib/ardana/openstack/my_cloud/config/spark/spark-defaults.conf.j2 and set spark.cores.max to 6 and spark.executor.cores 2

Set spark.cores.max to 6

spark.cores.max {{ spark_cores_max }}to

spark.cores.max 6

Set spark.executor.cores to 2

spark.executor.cores {{ spark_executor_cores }}to

spark.executor.cores 2

Edit ~/openstack/my_cloud/config/spark/spark-env.sh.j2

Set SPARK_WORKER_CORES to 2

export SPARK_WORKER_CORES={{ spark_worker_cores }}to

export SPARK_WORKER_CORES=2

Edit ~/openstack/my_cloud/config/spark/spark-worker-env.sh.j2

Set SPARK_WORKER_CORES to 2

export SPARK_WORKER_CORES={{ spark_worker_cores }}to

export SPARK_WORKER_CORES=2

Run Configuration Processor

ardana >cd ~/openstack/my_cloud/definitionardana >git add -Aardana >git commit -m "Changing Spark Config increase scale"ardana >cd ~/openstack/ardana/ansibleardana >ansible-playbook -i hosts/localhost config-processor-run.ymlRun Ready Deployment

ardana >cd ~/openstack/ardana/ansibleardana >ansible-playbook -i hosts/localhost ready-deployment.ymlRun spark-reconfigure.yml and monasca-transform-reconfigure.yml

ardana >cd ~/scratch/ansible/next/ardana/ansibleardana >ansible-playbook -i hosts/verb_hosts spark-reconfigure.ymlardana >ansible-playbook -i hosts/verb_hosts monasca-transform-reconfigure.yml

12.1.2.4.9 Change Compute Host Pattern Filter in Monasca Transform #

Monasca Transform identifies compute host metrics by pattern matching on

hostname dimension in the incoming monasca metrics. The default pattern is of

the form compNNN. For example,

comp001, comp002, etc. To filter for it

in the transformation specs, use the expression

-comp[0-9]+-. In case the compute

host names follow a different pattern other than the standard pattern above,

the filter by expression when aggregating metrics will have to be changed.

Steps

On the deployer: Edit

~/openstack/my_cloud/config/monasca-transform/transform_specs.json.j2Look for all references of

-comp[0-9]+-and change the regular expression to the desired pattern say for example-compute[0-9]+-.{"aggregation_params_map":{"aggregation_pipeline":{"source":"streaming", "usage":"fetch_quantity", "setters":["rollup_quantity", "set_aggregated_metric_name", "set_aggregated_period"], "insert":["prepare_data","insert_data_pre_hourly"]}, "aggregated_metric_name":"mem.total_mb_agg", "aggregation_period":"hourly", "aggregation_group_by_list": ["host", "metric_id", "tenant_id"], "usage_fetch_operation": "avg", "filter_by_list": [{"field_to_filter": "host", "filter_expression": "-comp[0-9]+", "filter_operation": "include"}], "setter_rollup_group_by_list":[], "setter_rollup_operation": "sum", "dimension_list":["aggregation_period", "host", "project_id"], "pre_hourly_operation":"avg", "pre_hourly_group_by_list":["default"]}, "metric_group":"mem_total_all", "metric_id":"mem_total_all"}to

{"aggregation_params_map":{"aggregation_pipeline":{"source":"streaming", "usage":"fetch_quantity", "setters":["rollup_quantity", "set_aggregated_metric_name", "set_aggregated_period"], "insert":["prepare_data", "insert_data_pre_hourly"]}, "aggregated_metric_name":"mem.total_mb_agg", "aggregation_period":"hourly", "aggregation_group_by_list": ["host", "metric_id", "tenant_id"],"usage_fetch_operation": "avg","filter_by_list": [{"field_to_filter": "host","filter_expression": "-compute[0-9]+", "filter_operation": "include"}], "setter_rollup_group_by_list":[], "setter_rollup_operation": "sum", "dimension_list":["aggregation_period", "host", "project_id"], "pre_hourly_operation":"avg", "pre_hourly_group_by_list":["default"]}, "metric_group":"mem_total_all", "metric_id":"mem_total_all"}Note

The filter_expression has been changed to the new pattern.

To change all host metric transformation specs in the same JSON file, repeat Step 2.

Transformation specs will have to be changed for following metric_ids namely "mem_total_all", "mem_usable_all", "disk_total_all", "disk_usable_all", "cpu_total_all", "cpu_total_host", "cpu_util_all", "cpu_util_host"

Run the Configuration Processor:

ardana >cd ~/openstack/my_cloud/definitionardana >git add -Aardana >git commit -m "Changing Monasca Transform specs"ardana >cd ~/openstack/ardana/ansibleardana >ansible-playbook -i hosts/localhost config-processor-run.ymlRun Ready Deployment:

ardana >cd ~/openstack/ardana/ansibleardana >ansible-playbook -i hosts/localhost ready-deployment.ymlRun Monasca Transform Reconfigure:

ardana >cd ~/scratch/ansible/next/ardana/ansibleardana >ansible-playbook -i hosts/verb_hosts monasca-transform-reconfigure.yml

12.1.2.5 Configuring Availability of Alarm Metrics #

Using the Monasca agent tuning knobs, you can choose which alarm metrics are available in your environment.

The addition of the libvirt and OVS plugins to the Monasca agent provides a number of additional metrics that can be used. Most of these metrics are included by default, but others are not. You have the ability to use tuning knobs to add or remove these metrics to your environment based on your individual needs in your cloud.

We will list these metrics along with the tuning knob name and instructions for how to adjust these.

12.1.2.5.1 Libvirt plugin metric tuning knobs #

The following metrics are added as part of the libvirt plugin:

| Tuning Knob | Default Setting | Admin Metric Name | Project Metric Name |

|---|---|---|---|

| vm_cpu_check_enable | True | vm.cpu.time_ns | cpu.time_ns |

| vm.cpu.utilization_norm_perc | cpu.utilization_norm_perc | ||

| vm.cpu.utilization_perc | cpu.utilization_perc | ||

| vm_disks_check_enable |

True Creates 20 disk metrics per disk device per virtual machine. | vm.io.errors | io.errors |

| vm.io.errors_sec | io.errors_sec | ||

| vm.io.read_bytes | io.read_bytes | ||

| vm.io.read_bytes_sec | io.read_bytes_sec | ||

| vm.io.read_ops | io.read_ops | ||

| vm.io.read_ops_sec | io.read_ops_sec | ||

| vm.io.write_bytes | io.write_bytes | ||

| vm.io.write_bytes_sec | io.write_bytes_sec | ||

| vm.io.write_ops | io.write_ops | ||

| vm.io.write_ops_sec | io.write_ops_sec | ||

| vm_network_check_enable |

True Creates 16 network metrics per NIC per virtual machine. | vm.net.in_bytes | net.in_bytes |

| vm.net.in_bytes_sec | net.in_bytes_sec | ||

| vm.net.in_packets | net.in_packets | ||

| vm.net.in_packets_sec | net.in_packets_sec | ||

| vm.net.out_bytes | net.out_bytes | ||

| vm.net.out_bytes_sec | net.out_bytes_sec | ||

| vm.net.out_packets | net.out_packets | ||

| vm.net.out_packets_sec | net.out_packets_sec | ||

| vm_ping_check_enable | True | vm.ping_status | ping_status |

| vm_extended_disks_check_enable |

True Creates 6 metrics per device per virtual machine. | vm.disk.allocation | disk.allocation |

| vm.disk.capacity | disk.capacity | ||

| vm.disk.physical | disk.physical | ||

|

True Creates 6 aggregate metrics per virtual machine. | vm.disk.allocation_total | disk.allocation_total | |

| vm.disk.capacity_total | disk.capacity.total | ||

| vm.disk.physical_total | disk.physical_total | ||

| vm_disks_check_enable vm_extended_disks_check_enable |

True Creates 20 aggregate metrics per virtual machine. | vm.io.errors_total | io.errors_total |

| vm.io.errors_total_sec | io.errors_total_sec | ||

| vm.io.read_bytes_total | io.read_bytes_total | ||

| vm.io.read_bytes_total_sec | io.read_bytes_total_sec | ||

| vm.io.read_ops_total | io.read_ops_total | ||

| vm.io.read_ops_total_sec | io.read_ops_total_sec | ||

| vm.io.write_bytes_total | io.write_bytes_total | ||

| vm.io.write_bytes_total_sec | io.write_bytes_total_sec | ||

| vm.io.write_ops_total | io.write_ops_total | ||

| vm.io.write_ops_total_sec | io.write_ops_total_sec |

12.1.2.5.1.1 Configuring the libvirt metrics using the tuning knobs #

Use the following steps to configure the tuning knobs for the libvirt plugin metrics.

Log in to the Cloud Lifecycle Manager.

Edit the following file:

~/openstack/my_cloud/config/nova/libvirt-monitoring.yml

Change the value for each tuning knob to the desired setting,

Trueif you want the metrics created andFalseif you want them removed. Refer to the table above for which metrics are controlled by each tuning knob.vm_cpu_check_enable: <true or false> vm_disks_check_enable: <true or false> vm_extended_disks_check_enable: <true or false> vm_network_check_enable: <true or false> vm_ping_check_enable: <true or false>

Commit your configuration to the local Git repository (see Book “Installing with Cloud Lifecycle Manager”, Chapter 10 “Using Git for Configuration Management”), as follows:

ardana >cd ~/openstack/ardana/ansibleardana >git add -Aardana >git commit -m "configuring libvirt plugin tuning knobs"Update your deployment directory:

ardana >cd ~/openstack/ardana/ansibleardana >ansible-playbook -i hosts/localhost ready-deployment.ymlRun the Nova reconfigure playbook to implement the changes:

ardana >cd ~/scratch/ansible/next/ardana/ansibleardana >ansible-playbook -i hosts/verb_hosts nova-reconfigure.yml

Note

If you modify either of the following files, then the monasca tuning parameters should be adjusted to handle a higher load on the system.

~/openstack/my_cloud/config/nova/libvirt-monitoring.yml ~/openstack/my_cloud/config/neutron/monasca_ovs_plugin.yaml.j2

Tuning parameters are located in

~/openstack/my_cloud/config/monasca/configuration.yml.

The parameter monasca_tuning_selector_override should be

changed to the extra-large setting.

12.1.2.5.2 OVS plugin metric tuning knobs #

The following metrics are added as part of the OVS plugin:

Note

For a description of each of these metrics, see Section 12.1.4.16, “Open vSwitch (OVS) Metrics”.

| Tuning Knob | Default Setting | Admin Metric Name | Project Metric Name |

|---|---|---|---|

| use_rate_metrics | False | ovs.vrouter.in_bytes_sec | vrouter.in_bytes_sec |

| ovs.vrouter.in_packets_sec | vrouter.in_packets_sec | ||

| ovs.vrouter.out_bytes_sec | vrouter.out_bytes_sec | ||

| ovs.vrouter.out_packets_sec | vrouter.out_packets_sec | ||

| use_absolute_metrics | True | ovs.vrouter.in_bytes | vrouter.in_bytes |

| ovs.vrouter.in_packets | vrouter.in_packets | ||

| ovs.vrouter.out_bytes | vrouter.out_bytes | ||

| ovs.vrouter.out_packets | vrouter.out_packets | ||

| use_health_metrics with use_rate_metrics | False | ovs.vrouter.in_dropped_sec | vrouter.in_dropped_sec |

| ovs.vrouter.in_errors_sec | vrouter.in_errors_sec | ||

| ovs.vrouter.out_dropped_sec | vrouter.out_dropped_sec | ||

| ovs.vrouter.out_errors_sec | vrouter.out_errors_sec | ||

| use_health_metrics with use_absolute_metrics | False | ovs.vrouter.in_dropped | vrouter.in_dropped |

| ovs.vrouter.in_errors | vrouter.in_errors | ||

| ovs.vrouter.out_dropped | vrouter.out_dropped | ||

| ovs.vrouter.out_errors | vrouter.out_errors |

12.1.2.5.2.1 Configuring the OVS metrics using the tuning knobs #

Use the following steps to configure the tuning knobs for the libvirt plugin metrics.

Log in to the Cloud Lifecycle Manager.

Edit the following file:

~/openstack/my_cloud/config/neutron/monasca_ovs_plugin.yaml.j2

Change the value for each tuning knob to the desired setting,

Trueif you want the metrics created andFalseif you want them removed. Refer to the table above for which metrics are controlled by each tuning knob.init_config: use_absolute_metrics: <true or false> use_rate_metrics: <true or false> use_health_metrics: <true or false>

Commit your configuration to the local Git repository (see Book “Installing with Cloud Lifecycle Manager”, Chapter 10 “Using Git for Configuration Management”), as follows:

ardana >cd ~/openstack/ardana/ansibleardana >git add -Aardana >git commit -m "configuring OVS plugin tuning knobs"Update your deployment directory:

ardana >cd ~/openstack/ardana/ansibleardana >ansible-playbook -i hosts/localhost ready-deployment.ymlRun the Neutron reconfigure playbook to implement the changes:

ardana >cd ~/scratch/ansible/next/ardana/ansibleardana >ansible-playbook -i hosts/verb_hosts neutron-reconfigure.yml

12.1.3 Integrating HipChat, Slack, and JIRA #

Monasca, the HPE Helion OpenStack monitoring and notification service, includes three default notification methods, email, PagerDuty, and webhook. Monasca also supports three other notification plugins which allow you to send notifications to HipChat, Slack, and JIRA. Unlike the default notification methods, the additional notification plugins must be manually configured.

This guide details the steps to configure each of the three non-default notification plugins. This guide also assumes that your cloud is fully deployed and functional.

12.1.3.1 Configuring the HipChat Plugin #

To configure the HipChat plugin you will need the following four pieces of information from your HipChat system.

The URL of your HipChat system.

A token providing permission to send notifications to your HipChat system.

The ID of the HipChat room you wish to send notifications to.

A HipChat user account. This account will be used to authenticate any incoming notifications from your HPE Helion OpenStack cloud.

Obtain a token

Use the following instructions to obtain a token from your Hipchat system.

Log in to HipChat as the user account that will be used to authenticate the notifications.

Navigate to the following URL:

https://<your_hipchat_system>/account/api. Replace<your_hipchat_system>with the fully-qualified-domain-name of your HipChat system.Select the Create token option. Ensure that the token has the "SendNotification" attribute.

Obtain a room ID

Use the following instructions to obtain the ID of a HipChat room.

Log in to HipChat as the user account that will be used to authenticate the notifications.

Select My account from the application menu.

Select the Rooms tab.

Select the room that you want your notifications sent to.

Look for the API ID field in the room information. This is the room ID.

Create HipChat notification type

Use the following instructions to create a HipChat notification type.

Begin by obtaining the API URL for the HipChat room that you wish to send notifications to. The format for a URL used to send notifications to a room is as follows:

/v2/room/{room_id_or_name}/notificationUse the Monasca API to create a new notification method. The following example demonstrates how to create a HipChat notification type named MyHipChatNotification, for room ID 13, using an example API URL and auth token.

ardana >monasca notification-create NAME TYPE ADDRESSardana >monasca notification-create MyHipChatNotification HIPCHAT https://hipchat.hpe.net/v2/room/13/notification?auth_token=1234567890The preceding example creates a notification type with the following characteristics

NAME: MyHipChatNotification

TYPE: HIPCHAT

ADDRESS: https://hipchat.hpe.net/v2/room/13/notification

auth_token: 1234567890

Note

The Horizon dashboard can also be used to create a HipChat notification type.

12.1.3.2 Configuring the Slack Plugin #

Configuring a Slack notification type requires four pieces of information from your Slack system.

Slack server URL

Authentication token

Slack channel

A Slack user account. This account will be used to authenticate incoming notifications to Slack.

Identify a Slack channel

Log in to your Slack system as the user account that will be used to authenticate the notifications to Slack.

In the left navigation panel, under the CHANNELS section locate the channel that you wish to receive the notifications. The instructions that follow will use the example channel #general.

Create a Slack token

Log in to your Slack system as the user account that will be used to authenticate the notifications to Slack

Navigate to the following URL: https://api.slack.com/docs/oauth-test-tokens

Select the Create token button.

Create a Slack notification type

Begin by identifying the structure of the API call to be used by your notification method. The format for a call to the Slack Web API is as follows:

https://slack.com/api/METHODYou can authenticate a Web API request by using the token that you created in the previous Create a Slack Tokensection. Doing so will result in an API call that looks like the following.

https://slack.com/api/METHOD?token=auth_tokenYou can further refine your call by specifying the channel that the message will be posted to. Doing so will result in an API call that looks like the following.

https://slack.com/api/METHOD?token=AUTH_TOKEN&channel=#channelThe following example uses the

chat.postMessagemethod, the token1234567890, and the channel#general.https://slack.com/api/chat.postMessage?token=1234567890&channel=#general

Find more information on the Slack Web API here: https://api.slack.com/web

Use the CLI on your Cloud Lifecycle Manager to create a new Slack notification type, using the API call that you created in the preceding step. The following example creates a notification type named MySlackNotification, using token 1234567890, and posting to channel #general.

ardana >monasca notification-create MySlackNotification SLACK https://slack.com/api/chat.postMessage?token=1234567890&channel=#general

Note

Notification types can also be created in the Horizon dashboard.

12.1.3.3 Configuring the JIRA Plugin #

Configuring the JIRA plugin requires three pieces of information from your JIRA system.

The URL of your JIRA system.

Username and password of a JIRA account that will be used to authenticate the notifications.

The name of the JIRA project that the notifications will be sent to.

Create JIRA notification type

You will configure the Monasca service to send notifications to a particular JIRA project. You must also configure JIRA to create new issues for each notification it receives to this project, however, that configuration is outside the scope of this document.

The Monasca JIRA notification plugin supports only the following two JIRA issue fields.

PROJECT. This is the only supported “mandatory” JIRA issue field.

COMPONENT. This is the only supported “optional” JIRA issue field.

The JIRA issue type that your notifications will create may only be configured with the "Project" field as mandatory. If your JIRA issue type has any other mandatory fields, the Monasca plugin will not function correctly. Currently, the Monasca plugin only supports the single optional "component" field.

Creating the JIRA notification type requires a few more steps than other notification types covered in this guide. Because the Python and YAML files for this notification type are not yet included in HPE Helion OpenStack 8, you must perform the following steps to manually retrieve and place them on your Cloud Lifecycle Manager.

Configure the JIRA plugin by adding the following block to the

/etc/monasca/notification.yamlfile, under thenotification_typessection, and adding the username and password of the JIRA account used for the notifications to the respective sections.plugins: - monasca_notification.plugins.jira_notifier:JiraNotifier jira: user: password: timeout: 60After adding the necessary block, the

notification_typessection should look like the following example. Note that you must also add the username and password for the JIRA user related to the notification type.notification_types: plugins: - monasca_notification.plugins.jira_notifier:JiraNotifier jira: user: password: timeout: 60 webhook: timeout: 5 pagerduty: timeout: 5 url: "https://events.pagerduty.com/generic/2010-04-15/create_event.json"Create the JIRA notification type. The following command example creates a JIRA notification type named

MyJiraNotification, in the JIRA projectHISO.ardana >monasca notification-create MyJiraNotification JIRA https://jira.hpcloud.net/?project=HISOThe following command example creates a JIRA notification type named

MyJiraNotification, in the JIRA projectHISO, and adds the optional component field with a value ofkeystone.ardana >monasca notification-create MyJiraNotification JIRA https://jira.hpcloud.net/?project=HISO&component=keystoneNote

There is a slash (

/) separating the URL path and the query string. The slash is required if you have a query parameter without a path parameter.Note

Notification types may also be created in the Horizon dashboard.

12.1.4 Alarm Metrics #

You can use the available metrics to create custom alarms to further monitor your cloud infrastructure and facilitate autoscaling features.

For details on how to create customer alarms using the Operations Console, see Book “Operations Console”, Chapter 1 “Alarm Definition”.

12.1.4.1 Apache Metrics #

A list of metrics associated with the Apache service.

| Metric Name | Dimensions | Description |

|---|---|---|

| apache.net.hits |

hostname service=apache component=apache | Total accesses |

| apache.net.kbytes_sec |

hostname service=apache component=apache | Total Kbytes per second |

| apache.net.requests_sec |

hostname service=apache component=apache | Total accesses per second |

| apache.net.total_kbytes |

hostname service=apache component=apache | Total Kbytes |

| apache.performance.busy_worker_count |

hostname service=apache component=apache | The number of workers serving requests |

| apache.performance.cpu_load_perc |

hostname service=apache component=apache |

The current percentage of CPU used by each worker and in total by all workers combined |

| apache.performance.idle_worker_count |

hostname service=apache component=apache | The number of idle workers |

| apache.status |

apache_port hostname service=apache component=apache | Status of Apache port |

12.1.4.2 Ceilometer Metrics #

A list of metrics associated with the Ceilometer service.

| Metric Name | Dimensions | Description |

|---|---|---|

| disk.total_space_mb_agg |

aggregation_period=hourly, host=all, project_id=all | Total space of disk |

| disk.total_used_space_mb_agg |

aggregation_period=hourly, host=all, project_id=all | Total used space of disk |

| swiftlm.diskusage.rate_agg |

aggregation_period=hourly, host=all, project_id=all | |

| swiftlm.diskusage.val.avail_agg |

aggregation_period=hourly, host, project_id=all | |

| swiftlm.diskusage.val.size_agg |

aggregation_period=hourly, host, project_id=all | |

| image |

user_id, region, resource_id, datasource=ceilometer, project_id, type=gauge, unit=image, source=openstack | Existence of the image |

| image.delete |

user_id, region, resource_id, datasource=ceilometer, project_id, type=gauge, unit=image, source=openstack | Delete operation on this image |

| image.size |

user_id, region, resource_id, datasource=ceilometer, project_id, type=gauge, unit=B, source=openstack | Size of the uploaded image |

| image.update |

user_id, region, resource_id, datasource=ceilometer, project_id, type=gauge, unit=image, source=openstack | Update operation on this image |

| image.upload |

user_id, region, resource_id, datasource=ceilometer, project_id, type=gauge, unit=image, source=openstack | Upload operation on this image |

| instance |

user_id, region, resource_id, datasource=ceilometer, project_id, type=gauge, unit=instance, source=openstack | Existence of instance |

| disk.ephemeral.size |

user_id, region, resource_id, datasource=ceilometer, project_id, type=gauge, unit=GB, source=openstack | Size of ephemeral disk on this instance |

| disk.root.size |

user_id, region, resource_id, datasource=ceilometer, project_id, type=gauge, unit=GB, source=openstack | Size of root disk on this instance |

| memory |

user_id, region, resource_id, datasource=ceilometer, project_id, type=gauge, unit=MB, source=openstack | Size of memory on this instance |

| ip.floating |

user_id, region, resource_id, datasource=ceilometer, project_id, type=gauge, unit=ip, source=openstack | Existence of IP |

| ip.floating.create |

user_id, region, resource_id, datasource=ceilometer, project_id, type=delta, unit=ip, source=openstack | Create operation on this fip |

| ip.floating.update |

user_id, region, resource_id, datasource=ceilometer, project_id, type=delta, unit=ip, source=openstack | Update operation on this fip |

| mem.total_mb_agg |

aggregation_period=hourly, host=all, project_id=all | Total space of memory |

| mem.usable_mb_agg |

aggregation_period=hourly, host=all, project_id=all | Available space of memory |

| network |

user_id, region, resource_id, datasource=ceilometer, project_id, type=gauge, unit=network, source=openstack | Existence of network |

| network.create |

user_id, region, resource_id, datasource=ceilometer, project_id, type=delta, unit=network, source=openstack | Create operation on this network |

| network.update |

user_id, region, resource_id, datasource=ceilometer, project_id, type=delta, unit=network, source=openstack | Update operation on this network |

| network.delete |

user_id, region, resource_id, datasource=ceilometer, project_id, type=delta, unit=network, source=openstack | Delete operation on this network |

| port |

user_id, region, resource_id, datasource=ceilometer, project_id, type=gauge, unit=port, source=openstack | Existence of port |

| port.create |

user_id, region, resource_id, datasource=ceilometer, project_id, type=delta, unit=port, source=openstack | Create operation on this port |

| port.delete |

user_id, region, resource_id, datasource=ceilometer, project_id, type=delta, unit=port, source=openstack | Delete operation on this port |

| port.update |

user_id, region, resource_id, datasource=ceilometer, project_id, type=delta, unit=port, source=openstack | Update operation on this port |

| router |

user_id, region, resource_id, datasource=ceilometer, project_id, type=gauge, unit=router, source=openstack | Existence of router |

| router.create |

user_id, region, resource_id, datasource=ceilometer, project_id, type=delta, unit=router, source=openstack | Create operation on this router |

| router.delete |

user_id, region, resource_id, datasource=ceilometer, project_id, type=delta, unit=router, source=openstack | Delete operation on this router |

| router.update |

user_id, region, resource_id, datasource=ceilometer, project_id, type=delta, unit=router, source=openstack | Update operation on this router |

| snapshot |

user_id, region, resource_id, datasource=ceilometer, project_id, type=gauge, unit=snapshot, source=openstack | Existence of the snapshot |

| snapshot.create.end |

user_id, region, resource_id, datasource=ceilometer, project_id, type=delta, unit=snapshot, source=openstack | Create operation on this snapshot |

| snapshot.delete.end |

user_id, region, resource_id, datasource=ceilometer, project_id, type=delta, unit=snapshot, source=openstack | Delete operation on this snapshot |

| snapshot.size |

user_id, region, resource_id, datasource=ceilometer, project_id, type=gauge, unit=GB, source=openstack | Size of this snapshot |

| subnet |

user_id, region, resource_id, datasource=ceilometer, project_id, type=gauge, unit=subnet, source=openstack | Existence of the subnet |

| subnet.create |

user_id, region, resource_id, datasource=ceilometer, project_id, type=delta, unit=subnet, source=openstack | Create operation on this subnet |

| subnet.delete |

user_id, region, resource_id, datasource=ceilometer, project_id, type=delta, unit=subnet, source=openstack | Delete operation on this subnet |

| subnet.update |

user_id, region, resource_id, datasource=ceilometer, project_id, type=delta, unit=subnet, source=openstack | Update operation on this subnet |

| vcpus |

user_id, region, resource_id, datasource=ceilometer, project_id, type=gauge, unit=vcpus, source=openstack | Number of virtual CPUs allocated to the instance |

| vcpus_agg |

aggregation_period=hourly, host=all, project_id | Number of vcpus used by a project |

| volume |

user_id, region, resource_id, datasource=ceilometer, project_id, type=gauge, unit=volume, source=openstack | Existence of the volume |

| volume.create.end |

user_id, region, resource_id, datasource=ceilometer, project_id, type=delta, unit=volume, source=openstack | Create operation on this volume |

| volume.delete.end |

user_id, region, resource_id, datasource=ceilometer, project_id, type=delta, unit=volume, source=openstack | Delete operation on this volume |

| volume.resize.end |

user_id, region, resource_id, datasource=ceilometer, project_id, type=delta, unit=volume, source=openstack | Resize operation on this volume |

| volume.size |

user_id, region, resource_id, datasource=ceilometer, project_id, type=gauge, unit=GB, source=openstack | Size of this volume |

| volume.update.end |

user_id, region, resource_id, datasource=ceilometer, project_id, type=delta, unit=volume, source=openstack | Update operation on this volume |

| storage.objects |

user_id, region, resource_id, datasource=ceilometer, project_id, type=gauge, unit=object, source=openstack | Number of objects |

| storage.objects.size |

user_id, region, resource_id, datasource=ceilometer, project_id, type=gauge, unit=B, source=openstack | Total size of stored objects |

| storage.objects.containers |

user_id, region, resource_id, datasource=ceilometer, project_id, type=gauge, unit=container, source=openstack | Number of containers |

12.1.4.3 Cinder Metrics #

A list of metrics associated with the Cinder service.

| Metric Name | Dimensions | Description |

|---|---|---|

| cinderlm.cinder.backend.physical.list |

service=block-storage, hostname, cluster, cloud_name, control_plane, component, backends | List of physical backends |

| cinderlm.cinder.backend.total.avail |

service=block-storage, hostname, cluster, cloud_name, control_plane, component, backendname | Total available capacity metric per backend |

| cinderlm.cinder.backend.total.size |

service=block-storage, hostname, cluster, cloud_name, control_plane, component, backendname | Total capacity metric per backend |

| cinderlm.cinder.cinder_services |

service=block-storage, hostname, cluster, cloud_name, control_plane, component | Status of a cinder-volume service |

| cinderlm.hp_hardware.hpssacli.logical_drive |

service=block-storage, hostname, cluster, cloud_name, control_plane, component, sub_component, logical_drive, controller_slot, array The HPE Smart Storage Administrator (HPE SSA) CLI component will have to be installed for SSACLI status to be reported. To download and install the SSACLI utility to enable management of disk controllers, please refer to: https://support.hpe.com/hpsc/swd/public/detail?swItemId=MTX_3d16386b418a443388c18da82f | Status of a logical drive |

| cinderlm.hp_hardware.hpssacli.physical_drive |

service=block-storage, hostname, cluster, cloud_name, control_plane, component, box, bay, controller_slot | Status of a logical drive |

| cinderlm.hp_hardware.hpssacli.smart_array |

service=block-storage, hostname, cluster, cloud_name, control_plane, component, sub_component, model | Status of smart array |

| cinderlm.hp_hardware.hpssacli.smart_array.firmware |

service=block-storage, hostname, cluster, cloud_name, control_plane, component, model | Checks firmware version |

12.1.4.4 Compute Metrics #

A list of metrics associated with the Compute service.

| Metric Name | Dimensions | Description |

|---|---|---|

| nova.heartbeat |

service=compute cloud_name hostname component control_plane cluster |

Checks that all services are running heartbeats (uses nova user and to list services then sets up checks for each. For example, nova-scheduler, nova-conductor, nova-consoleauth, nova-compute) |

| nova.vm.cpu.total_allocated |

service=compute hostname component control_plane cluster | Total CPUs allocated across all VMs |

| nova.vm.disk.total_allocated_gb |

service=compute hostname component control_plane cluster | Total Gbytes of disk space allocated to all VMs |

| nova.vm.mem.total_allocated_mb |

service=compute hostname component control_plane cluster | Total Mbytes of memory allocated to all VMs |

12.1.4.5 Crash Metrics #

A list of metrics associated with the Crash service.

| Metric Name | Dimensions | Description |

|---|---|---|

| crash.dump_count |

service=system hostname cluster | Number of crash dumps found |

12.1.4.6 Directory Metrics #

A list of metrics associated with the Directory service.

| Metric Name | Dimensions | Description |

|---|---|---|

| directory.files_count |

service hostname path | Total number of files under a specific directory path |

| directory.size_bytes |

service hostname path | Total size of a specific directory path |

12.1.4.7 Elasticsearch Metrics #

A list of metrics associated with the Elasticsearch service.

| Metric Name | Dimensions | Description |

|---|---|---|

| elasticsearch.active_primary_shards |

service=logging url hostname |

Indicates the number of primary shards in your cluster. This is an aggregate total across all indices. |

| elasticsearch.active_shards |

service=logging url hostname |

Aggregate total of all shards across all indices, which includes replica shards. |

| elasticsearch.cluster_status |

service=logging url hostname |

Cluster health status. |

| elasticsearch.initializing_shards |

service=logging url hostname |

The count of shards that are being freshly created. |

| elasticsearch.number_of_data_nodes |

service=logging url hostname |

Number of data nodes. |

| elasticsearch.number_of_nodes |

service=logging url hostname |

Number of nodes. |

| elasticsearch.relocating_shards |

service=logging url hostname |

Shows the number of shards that are currently moving from one node to another node. |

| elasticsearch.unassigned_shards |

service=logging url hostname |

The number of unassigned shards from the master node. |

12.1.4.8 HAProxy Metrics #

A list of metrics associated with the HAProxy service.

| Metric Name | Dimensions | Description |

|---|---|---|

| haproxy.backend.bytes.in_rate | ||

| haproxy.backend.bytes.out_rate | ||

| haproxy.backend.denied.req_rate | ||

| haproxy.backend.denied.resp_rate | ||

| haproxy.backend.errors.con_rate | ||

| haproxy.backend.errors.resp_rate | ||

| haproxy.backend.queue.current | ||

| haproxy.backend.response.1xx | ||

| haproxy.backend.response.2xx | ||

| haproxy.backend.response.3xx | ||

| haproxy.backend.response.4xx | ||

| haproxy.backend.response.5xx | ||

| haproxy.backend.response.other | ||

| haproxy.backend.session.current | ||

| haproxy.backend.session.limit | ||

| haproxy.backend.session.pct | ||

| haproxy.backend.session.rate | ||

| haproxy.backend.warnings.redis_rate | ||

| haproxy.backend.warnings.retr_rate | ||

| haproxy.frontend.bytes.in_rate | ||

| haproxy.frontend.bytes.out_rate | ||

| haproxy.frontend.denied.req_rate | ||

| haproxy.frontend.denied.resp_rate | ||

| haproxy.frontend.errors.req_rate | ||

| haproxy.frontend.requests.rate | ||

| haproxy.frontend.response.1xx | ||

| haproxy.frontend.response.2xx | ||

| haproxy.frontend.response.3xx | ||

| haproxy.frontend.response.4xx | ||

| haproxy.frontend.response.5xx | ||

| haproxy.frontend.response.other | ||

| haproxy.frontend.session.current | ||

| haproxy.frontend.session.limit | ||

| haproxy.frontend.session.pct | ||

| haproxy.frontend.session.rate |

12.1.4.9 HTTP Check Metrics #

A list of metrics associated with the HTTP Check service:

Table 12.2: HTTP Check Metrics #

| Metric Name | Dimensions | Description |

|---|---|---|

| http_response_time |

url hostname service component | The response time in seconds of the http endpoint call. |

| http_status |

url hostname service | The status of the http endpoint call (0 = success, 1 = failure). |

For each component and HTTP metric name there are two separate metrics reported, one for the local URL and another for the virtual IP (VIP) URL:

Table 12.3: HTTP Metric Components #

| Component | Dimensions | Description |

|---|---|---|

| account-server |

service=object-storage component=account-server url | swift account-server http endpoint status and response time |

| barbican-api |

service=key-manager component=barbican-api url | barbican-api http endpoint status and response time |

| ceilometer-api |

service=telemetry component=ceilometer-api url | ceilometer-api http endpoint status and response time |

| cinder-api |

service=block-storage component=cinder-api url | cinder-api http endpoint status and response time |

| container-server |

service=object-storage component=container-server url | swift container-server http endpoint status and response time |

| designate-api |

service=dns component=designate-api url | designate-api http endpoint status and response time |

| freezer-api |

service=backup component=freezer-api url | freezer-api http endpoint status and response time |

| glance-api |

service=image-service component=glance-api url | glance-api http endpoint status and response time |

| glance-registry |

service=image-service component=glance-registry url | glance-registry http endpoint status and response time |

| heat-api |

service=orchestration component=heat-api url | heat-api http endpoint status and response time |

| heat-api-cfn |

service=orchestration component=heat-api-cfn url | heat-api-cfn http endpoint status and response time |

| heat-api-cloudwatch |

service=orchestration component=heat-api-cloudwatch url | heat-api-cloudwatch http endpoint status and response time |

| ardana-ux-services |

service=ardana-ux-services component=ardana-ux-services url | ardana-ux-services http endpoint status and response time |

| horizon |

service=web-ui component=horizon url | horizon http endpoint status and response time |

| keystone-api |

service=identity-service component=keystone-api url | keystone-api http endpoint status and response time |

| monasca-api |

service=monitoring component=monasca-api url | monasca-api http endpoint status |

| monasca-persister |

service=monitoring component=monasca-persister url | monasca-persister http endpoint status |

| neutron-server |

service=networking component=neutron-server url | neutron-server http endpoint status and response time |

| neutron-server-vip |

service=networking component=neutron-server-vip url | neutron-server-vip http endpoint status and response time |

| nova-api |

service=compute component=nova-api url | nova-api http endpoint status and response time |