5 Managing Identity

The Identity service provides the structure for user authentication to your cloud.

5.1 The Identity Service

This topic explains the purpose and mechanisms of the identity service.

The SUSE OpenStack Cloud Identity service, based on the OpenStack keystone API, is responsible for providing UserID authentication and access authorization to enable organizations to achieve their access security and compliance objectives and successfully deploy OpenStack. In short, the Identity service is the gateway to the rest of the OpenStack services.

5.1.1 Which version of the Identity service should you use?

Use Identity API version 3.0, as previous versions no longer exist as endpoints for Identity API queries.

Similarly, when performing queries, you must use the OpenStack CLI (the openstack command) and not the keystone CLI (keystone), as the latter is only compatible with API versions prior to 3.0.
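To make the v3 requirement concrete, the sketch below builds the JSON body of an Identity API v3 password authentication request (POST /v3/auth/tokens). Unlike v2.0, v3 requires both the user and the requested project scope to be qualified by a domain. The user, password, project, and domain values are hypothetical placeholders, and the request is only assembled, not sent.

```python
import json


def build_v3_password_auth(username, password, user_domain, project, project_domain):
    """Build the body of an Identity API v3 password authentication request.

    The body is POSTed to /v3/auth/tokens; keystone returns the new token in
    the X-Subject-Token response header. All values passed in below are
    hypothetical placeholders.
    """
    return {
        "auth": {
            "identity": {
                "methods": ["password"],
                "password": {
                    "user": {
                        "name": username,
                        # v3 requires the user's domain; v2.0 had no domains.
                        "domain": {"name": user_domain},
                        "password": password,
                    }
                },
            },
            # Ask for a token scoped to one project in one domain.
            "scope": {
                "project": {
                    "name": project,
                    "domain": {"name": project_domain},
                }
            },
        }
    }


body = build_v3_password_auth("alice", "secret", "Default", "demo", "Default")
print(json.dumps(body, indent=2))
```

For comparison, the OpenStack CLI assembles an equivalent request from environment variables such as OS_USERNAME, OS_USER_DOMAIN_NAME, OS_PROJECT_NAME, and OS_PROJECT_DOMAIN_NAME when OS_IDENTITY_API_VERSION is set to 3.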

5.1.2 Authentication

The authentication function provides the initial login function to OpenStack. keystone supports multiple sources of authentication, including a native or built-in authentication system. The keystone native system can be used for all user management functions for proof-of-concept deployments or small deployments not requiring integration with a corporate authentication system, but it lacks some of the advanced functions usually found in user management systems, such as forcing password changes. The focus of the keystone native authentication system is to be the source of authentication for OpenStack-specific users required to operate the various OpenStack services. These users are stored by keystone in a default domain; the addition of these IDs to an external authentication system is not required.

keystone is more commonly integrated with external authentication systems such as OpenLDAP or Microsoft Active Directory. These systems are usually centrally deployed by organizations to serve as the single source of user management and authentication for all in-house deployed applications and systems requiring user authentication. In addition to LDAP and Microsoft Active Directory, support for integration with Security Assertion Markup Language (SAML)-based identity providers from companies such as Ping, CA, IBM, Oracle, and others is also nearly "production-ready".

keystone also provides architectural support via the underlying Apache deployment for other types of authentication systems such as Multi-Factor Authentication. These types of systems typically require driver support and integration from the respective provider vendors.

While support for identity providers and multi-factor authentication is available in keystone, these features have not yet been certified by the SUSE OpenStack Cloud engineering team and are experimental in SUSE OpenStack Cloud.

LDAP-compatible directories such as OpenLDAP and Microsoft Active Directory are recommended alternatives to keystone local authentication. Both directories are widely used by organizations and are integrated with a variety of other enterprise applications. These directories act as the single source of user information within an organization. keystone can be configured to authenticate against an LDAP-compatible directory on a per-domain basis.

Domains, as explained in Section 5.3, “Understanding Domains, Projects, Users, Groups, and Roles”, can be configured so that, based on the user ID, an incoming user is automatically mapped to a specific domain. This domain can then be configured to authenticate against a specific LDAP directory. The credentials provided by the user to keystone are passed along to the designated LDAP source for authentication. This communication can optionally be secured with SSL encryption. No special LDAP administrative access is required; only read-only access is needed for this configuration. keystone does not add any information to the LDAP directory. All user additions, deletions, and modifications are performed by the application's front end in the LDAP directories. After a user has been successfully authenticated, the user is assigned to the groups, roles, and projects defined by the keystone domain or project administrators. This information is stored in the keystone service database.
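The flow in the preceding paragraph can be modeled as follows: keystone resolves the authentication source from the user's domain, passes the credentials through to that source read-only, and only then reads role assignments from its own database. This is a minimal, self-contained model, not keystone's implementation; the FakeLdapDirectory class, the domain and user names, and the assignment table are hypothetical stand-ins for a real LDAP server and the keystone database.

```python
from dataclasses import dataclass, field


@dataclass
class FakeLdapDirectory:
    """Hypothetical stand-in for an LDAP server; keystone only reads from it."""
    passwords: dict  # user ID -> password, managed outside keystone

    def bind(self, user_id, password):
        # A simple bind succeeds only if the directory accepts the credentials.
        return self.passwords.get(user_id) == password


@dataclass
class IdentityModel:
    # Domain name -> authentication source (hypothetical configuration).
    domain_sources: dict
    # (user ID, project) -> roles; this data lives in the keystone database.
    assignments: dict = field(default_factory=dict)

    def authenticate(self, domain, user_id, password, project):
        source = self.domain_sources[domain]
        # Credentials are passed through to the domain's LDAP source.
        # Nothing is ever written back to the directory.
        if not source.bind(user_id, password):
            raise PermissionError("authentication failed")
        # Authorization data comes from keystone, not from LDAP.
        return self.assignments.get((user_id, project), [])


acme_ldap = FakeLdapDirectory({"alice": "s3cret"})
model = IdentityModel({"acme": acme_ldap}, {("alice", "marketing"): ["member"]})
print(model.authenticate("acme", "alice", "s3cret", "marketing"))  # ['member']
```

Keeping the directory read-only and the role assignments in keystone is what allows the LDAP server to stay the single source of user information while keystone remains the single source of OpenStack authorization.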

Another form of external authentication provided by the keystone service is integration with SAML-based identity providers (IdP) such as Ping Identity, IBM Tivoli, and Microsoft Active Directory Federation Services. A SAML-based identity provider provides authentication that is often called "single sign-on". The IdP server is configured to authenticate against identity sources such as Active Directory and provides a single authentication API in front of multiple types of downstream identity sources. This means that an organization could have multiple identity storage sources but a single authentication source. In addition, if a user has logged into one such source during a defined session time frame, the user does not need to re-authenticate within that session. Instead, the IdP automatically validates the user to requesting applications and services.

A SAML-based IdP authentication source is configured with keystone on a per-domain basis similar to the manner in which native LDAP directories are configured. Extra mapping rules are required in the configuration that define which keystone group an incoming UID is automatically assigned to. This means that groups need to be defined in keystone first, but it also removes the requirement that a domain or project admin assign user roles and project membership on a per-user basis. Instead, groups are used to define project membership and roles and incoming users are automatically mapped to keystone groups based on their upstream group membership. This provides a very consistent role-based access control (RBAC) model based on the upstream identity source. The configuration of this option is fairly straightforward. IdP vendors such as Ping and IBM are contributing to the maintenance of this function and have also produced their own integration documentation. Microsoft Active Directory Federation Services (ADFS) is used for functional testing and future documentation.
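To illustrate how mapping rules turn upstream group membership into keystone group membership, the sketch below evaluates a rule written in keystone's JSON mapping format (a "remote" list matched against the IdP assertion and a "local" list describing the resulting user and group). It is a simplified model, not keystone's rule engine: only plain remote attributes and the any_one_of condition are handled, whereas keystone also supports conditions such as not_any_of, regex, whitelist, and blacklist. The attribute names REMOTE_USER and ADFS_GROUP, the group acme-admins, and the domain acme are hypothetical.

```python
import json

# A mapping in keystone's JSON mapping format. The keystone group it names
# must already exist before users can be mapped into it.
MAPPING = json.loads("""
{
  "rules": [
    {
      "local": [
        {"user": {"name": "{0}"}},
        {"group": {"name": "acme-admins", "domain": {"name": "acme"}}}
      ],
      "remote": [
        {"type": "REMOTE_USER"},
        {"type": "ADFS_GROUP", "any_one_of": ["Cloud Admins"]}
      ]
    }
  ]
}
""")


def apply_mapping(mapping, assertion):
    """Return (user name, keystone groups) for a federated assertion.

    assertion maps attribute names to lists of values, as an IdP might send.
    (None, []) means that no rule matched.
    """
    user, groups = None, []
    for rule in mapping["rules"]:
        direct = []  # values substituted into "{0}", "{1}", ...
        matched = True
        for req in rule["remote"]:
            values = assertion.get(req["type"], [])
            if "any_one_of" in req:
                # Condition: at least one asserted value must be listed.
                if not set(values) & set(req["any_one_of"]):
                    matched = False
                    break
            elif values:
                direct.append(values[0])
            else:
                matched = False
                break
        if not matched:
            continue
        for local in rule["local"]:
            if "user" in local:
                user = local["user"]["name"].format(*direct)
            if "group" in local:
                groups.append(local["group"])
    return user, groups


assertion = {"REMOTE_USER": ["alice@acme.com"], "ADFS_GROUP": ["Cloud Admins", "Staff"]}
print(apply_mapping(MAPPING, assertion))
```

Because roles are granted to the keystone group rather than to each user, adding someone to the upstream "Cloud Admins" group is enough to give that person the group's project roles on the next login.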

In addition to SAML-based IdPs, keystone also supports external authentication with a third-party IdP using the OpenID Connect protocol, by leveraging the capabilities provided by the Apache2 mod_auth_openidc module. OpenID Connect is configured in a similar way to SAML.

The third keystone-supported authentication source is known as Multi-Factor Authentication (MFA). MFA typically requires an external source of authentication beyond a login name and password, and can include options such as SMS text, a temporal token generator, a fingerprint scanner, etc. Each of these types of MFA is usually specific to a particular MFA vendor. The keystone architecture supports an MFA-based authentication system, but this has not yet been certified or documented for SUSE OpenStack Cloud.

5.1.3 Authorization

The second major function provided by the keystone service is access authorization that determines what resources and actions are available based on the UserID, the role of the user, and the projects that a user is provided access to. All of this information is created, managed, and stored by keystone. These functions are applied via the horizon web interface, the OpenStack command-line interface, or the direct keystone API.

keystone organizes users with three entities:

- Domains

Domains provide the highest level of organization. Domains are intended to be used as high-level containers for multiple projects. In a public cloud deployment, a domain can represent different tenants, companies, or organizations; in a private cloud deployment, it can represent major business units, functions, or any other type of top-level organizational unit. Each domain has at least one Domain Admin assigned to it. This Domain Admin can then create multiple projects within the domain and assign the project admin role to specific project owners. Each domain created in an OpenStack deployment is unique, and the projects assigned to a domain cannot exist in another domain.

- Projects

Projects are entities within a domain that represent groups of users, each user role within that project, and how many underlying infrastructure resources can be consumed by members of the project.

- Groups

Groups are an optional function and provide the means of assigning project roles to multiple users at once.

keystone also provides the means to create roles and assign them to individual users or to groups of users. Role names are created and user assignments are made within keystone, but the actual function of a role is currently defined separately by each OpenStack service in its policy files. When a user requests access to an OpenStack service, the user's access token contains information about the user's project membership and role for that project. The service matches this role against its service-specific policy, and the user is allowed to perform the functions within that service that the policy grants to the role.
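The division of labor described above, where keystone puts the project and roles into the token and each service decides what those roles allow, can be sketched as follows. The token contents, the action names, and the policy table are hypothetical simplifications; real services express their rules in policy files with a richer rule language.

```python
# Hypothetical token contents after authentication: the project the token is
# scoped to and the roles the user holds on that project.
token = {"user": "alice", "project": "marketing", "roles": ["member"]}

# Hypothetical, simplified service policy: action -> roles allowed to perform it.
COMPUTE_POLICY = {
    "servers:list": {"member", "admin"},
    "servers:create": {"member", "admin"},
    "quotas:update": {"admin"},
}


def is_allowed(policy, action, token, project):
    # The token must be scoped to the project being acted on ...
    if token["project"] != project:
        return False
    # ... and carry at least one role the service's policy accepts for the action.
    return bool(policy.get(action, set()) & set(token["roles"]))


print(is_allowed(COMPUTE_POLICY, "servers:create", token, "marketing"))  # True
print(is_allowed(COMPUTE_POLICY, "quotas:update", token, "marketing"))   # False
```

Note that keystone never consults COMPUTE_POLICY in this model; changing what "member" may do is purely a change to the service's policy, not to the identity data.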

5.2 Supported Upstream Keystone Features

5.2.1 OpenStack upstream features that are enabled by default in SUSE OpenStack Cloud 9

The following supported keystone features are enabled by default in the SUSE OpenStack Cloud 9 release.

| Name | User/Admin | Reference (API support only; no CLI/UI support) |
|---|---|---|
| Implied Roles | Admin | https://blueprints.launchpad.net/keystone/+spec/implied-roles |
| Domain-Specific Roles | Admin | https://blueprints.launchpad.net/keystone/+spec/domain-specific-roles |
| Fernet Token Provider | User and Admin | https://docs.openstack.org/keystone/rocky/admin/identity-fernet-token-faq.html |

Implied roles

To allow for the practice of hierarchical permissions in user roles, this feature enables roles to be linked in such a way that they function as a hierarchy with role inheritance.

When a user is assigned a superior role, the user is also granted every role that it implies, including any roles implied in turn by those subordinate roles. The role hierarchy is expanded when the user is issued a token.

Domain-specific roles

This feature extends the principle of implied roles to include a set of roles that are specific to a domain. When a token is issued, the domain-specific roles are not included in the token; however, the roles that they map to are.

Fernet token provider

Provides tokens in the Fernet format. This feature is automatically configured and is enabled by default. Fernet tokens are preferred and used by default instead of the older UUID token format.
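The implied-role and domain-specific-role behavior described above can be modeled with a short expansion routine that runs when a token is issued. This is a sketch under stated assumptions, not keystone's code: the implication table and the role names admin, member, reader, and acme_dev are hypothetical.

```python
def expand_roles(assigned, implications, domain_specific=frozenset()):
    """Expand assigned roles through implied-role rules, as done at token issue.

    implications maps a prior role to the roles it implies. Roles listed in
    domain_specific are followed for expansion but left out of the result,
    mirroring how domain-specific roles are not placed in the token.
    """
    result, stack, seen = set(), list(assigned), set()
    while stack:
        role = stack.pop()
        if role in seen:
            continue  # guard against cycles and repeated work
        seen.add(role)
        if role not in domain_specific:
            result.add(role)
        stack.extend(implications.get(role, ()))
    return result


# Hypothetical hierarchy: admin implies member, member implies reader, and the
# domain-specific role acme_dev maps to member.
implications = {"admin": ["member"], "member": ["reader"], "acme_dev": ["member"]}

print(sorted(expand_roles({"admin"}, implications)))
# ['admin', 'member', 'reader']
print(sorted(expand_roles({"acme_dev"}, implications, domain_specific={"acme_dev"})))
# ['member', 'reader']
```

Expanding at token-issue time means services only ever see concrete global roles, so their policy files do not need to know about role hierarchies or domain-specific role names.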

5.2.2 OpenStack upstream features that are disabled by default in SUSE OpenStack Cloud 9

The following is a list of features which are fully supported in the SUSE OpenStack Cloud 9 release, but are disabled by default. Customers can run a playbook to enable the features.

| Name | User/Admin | Reason Disabled |
|---|---|---|
| Support multiple LDAP backends via per-domain configuration | Admin | Needs explicit configuration. |
| WebSSO | User and Admin | Needs explicit configuration. |
| keystone-to-keystone (K2K) federation | User and Admin | Needs explicit configuration. |
| Domain-specific config in SQL | Admin | Domain-specific configuration options can be stored in SQL instead of configuration files, using the new REST APIs. |

Multiple LDAP backends for each domain

This feature allows identity backends to be configured on a domain-by-domain basis. Each domain can have its own exclusive LDAP service (or multiple services). A single LDAP service can also serve multiple domains, with each domain in a separate subtree.

To implement this feature, individual domains require domain-specific configuration files. Domains that do not implement this feature continue to share a common backend driver.

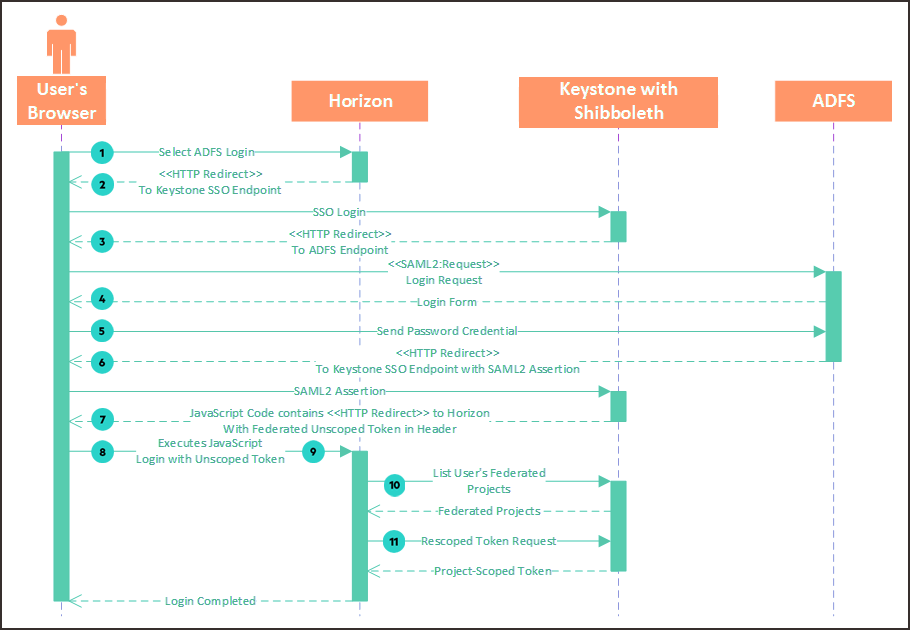

WebSSO

This feature enables the keystone service to provide federated identity services through a token-based single sign-on page. This feature is disabled by default, as it requires explicit configuration.

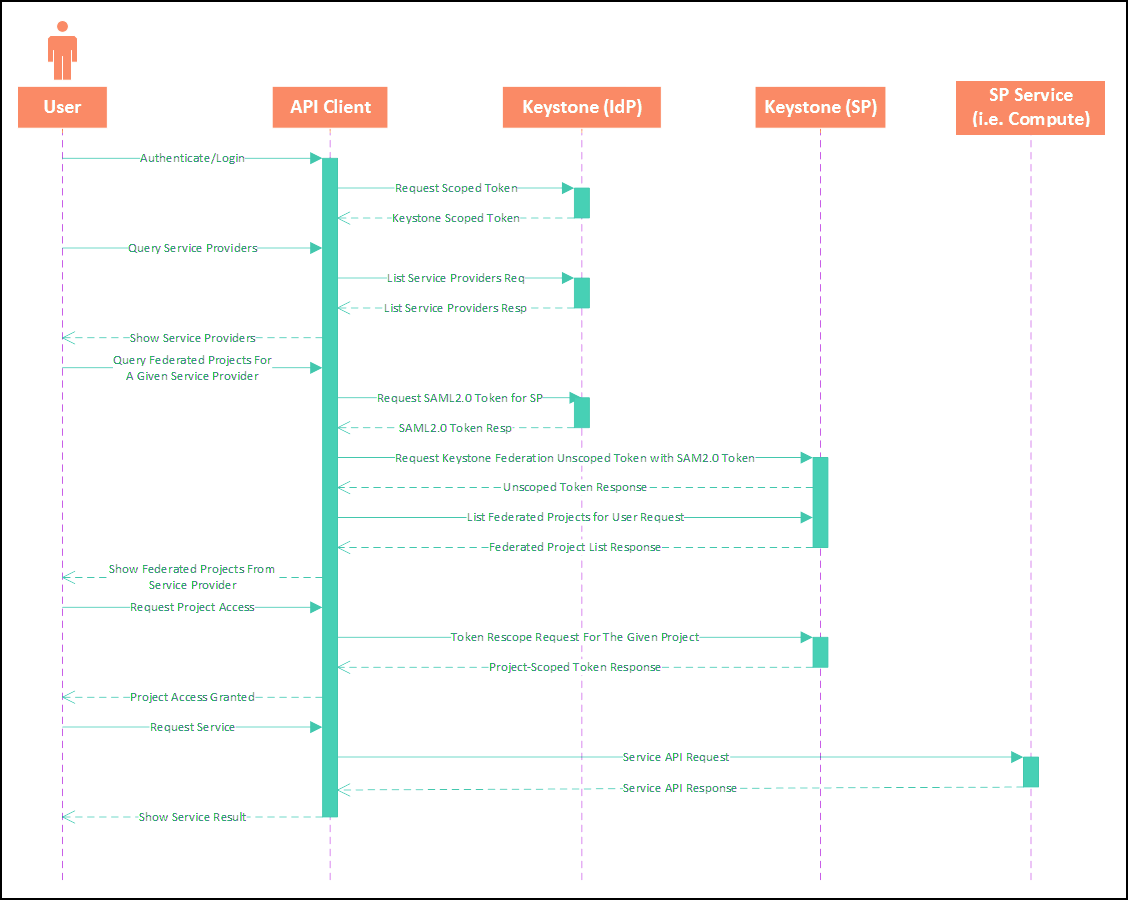

keystone-to-keystone (K2K) federation

This feature enables separate keystone instances to federate identities among the instances, offering inter-cloud authorization. This feature is disabled by default, as it requires explicit configuration.

Domain-specific config in SQL

Using the new REST APIs, domain-specific configuration options can be stored in a SQL database instead of in configuration files.
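The two storage options for domain-specific configuration can be compared side by side in the sketch below. It follows upstream keystone conventions as an assumption: per-domain files are named keystone.DOMAIN_NAME.conf inside the directory set by the [identity] domain_config_dir option (upstream default /etc/keystone/domains), and the SQL-backed equivalent is sent as a {"config": {...}} body to PUT /v3/domains/{domain_id}/config. The domain name acme and all LDAP values are hypothetical, and the SUSE OpenStack Cloud playbooks may place or generate these files differently.

```python
import configparser
import json
from pathlib import PurePosixPath

# Upstream default for [identity] domain_config_dir (assumption, see lead-in).
DOMAIN_CONFIG_DIR = PurePosixPath("/etc/keystone/domains")

# Hypothetical per-domain file contents for a domain named "acme".
ACME_CONF = """
[identity]
driver = ldap

[ldap]
url = ldap://ad.acme.com
suffix = dc=acme,dc=com
user_tree_dn = ou=Users,dc=acme,dc=com
"""


def domain_config_path(domain_name):
    # File-based option: one keystone.<domain_name>.conf file per domain.
    return DOMAIN_CONFIG_DIR / f"keystone.{domain_name}.conf"


def to_rest_body(conf_text):
    """Convert a domain config file into a body for PUT /v3/domains/{id}/config."""
    parser = configparser.ConfigParser()
    parser.read_string(conf_text)
    # Each config section becomes a group of options in the request body.
    return {"config": {section: dict(parser[section]) for section in parser.sections()}}


print(domain_config_path("acme"))  # /etc/keystone/domains/keystone.acme.conf
print(json.dumps(to_rest_body(ACME_CONF), indent=2))
```

Storing the same options in SQL means a new domain's LDAP backend can be added through the API without placing a file on every controller node.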

5.2.3 OpenStack upstream features that have been specifically disabled in SUSE OpenStack Cloud 9

The following is a list of extensions which are disabled by default in SUSE OpenStack Cloud 9, according to keystone policy.

| Target Release | Name | User/Admin | Reason Disabled |
|---|---|---|---|
| TBD | Endpoint Filtering | Admin | This extension was implemented to facilitate service activation. However, due to lack of enforcement on the service side, this feature is currently only partially effective. |
| TBD | Endpoint Policy | Admin | This extension was intended to facilitate policy (policy.json) management and enforcement. It currently has no effect, because the middleware needed to use the policy files stored in keystone is lacking. |
| TBD | OAuth 1.0a | User and Admin | Complex workflow and lack of adoption. Its alternative, keystone trusts, is enabled by default; heat uses keystone trusts. |
| TBD | Revocation Events | Admin | For PKI tokens only; PKI tokens are disabled by default due to usability concerns. |
| TBD | OS CERT | Admin | For PKI tokens only; PKI tokens are disabled by default due to usability concerns. |
| TBD | PKI Token | Admin | PKI tokens are disabled by default due to usability concerns. |
| TBD | Driver-level caching | Admin | Driver-level caching is disabled by default due to complexity in setup. |
| TBD | Tokenless Authz | Admin | Tokenless authorization with X.509 SSL client certificate. |
| TBD | TOTP Authentication | User | Not yet mature; has not been battle-tested. |
| TBD | is_admin_project | Admin | No integration with the services. |

5.3 Understanding Domains, Projects, Users, Groups, and Roles

The SUSE OpenStack Cloud 9 Identity service uses OpenStack keystone and the concepts of domains, projects, users, groups, and roles to manage authentication within your cloud. This section describes each concept and how they work together.

5.3.1 Domains, Projects, Users, Groups, and Roles

Most large business organizations use an identity system such as Microsoft Active Directory to store and manage their internal user information. A variety of applications such as HR systems are, in turn, used to manage the data inside of Active Directory. These same organizations often deploy a separate user management system for external users such as contractors, partners, and customers. Multiple authentication systems are then deployed to support multiple types of users.

An LDAP-compatible directory such as Active Directory provides a top-level organization or domain component. In this example, the organization is called Acme. The domain component (DC) is defined as acme.com. Underneath the top-level domain component are entities referred to as organizational units (OU). Organizational units are typically designed to reflect the entity structure of the organization. For example, this particular schema has three different organizational units for the Marketing, IT, and Contractors units or departments of the Acme organization. Users (and other types of entities like printers) are then defined appropriately underneath each organizational entity. The keystone domain entity can be used to match the LDAP OU entity; each LDAP OU can have a corresponding keystone domain created. In this example, both the Marketing and IT domains represent internal employees of Acme and use the same authentication source. The Contractors domain contains all external people associated with Acme. UserIDs associated with the Contractors domain are maintained in a separate user directory and thus have a different authentication source assigned to the corresponding keystone-defined Contractors domain.

A public cloud deployment usually supports multiple, separate organizations. keystone domains can be created to provide a domain per organization, with each domain configured to use the underlying organization's authentication source. For example, the ABC company would have a keystone domain created called "abc". All users authenticating to the "abc" domain would be authenticated against the authentication system provided by the ABC organization; in this case, ldap://ad.abc.com.

5.3.2 Domains

A domain is a top-level container targeted at defining major organizational entities.

Domains can be used in a multi-tenant OpenStack deployment to segregate projects and users from different companies in a public cloud deployment or different organizational units in a private cloud setting.

Domains provide the means to identify multiple authentication sources.

Each domain is unique within an OpenStack implementation.

Multiple projects can be assigned to a domain but each project can only belong to a single domain.

Each domain has an assigned "admin".

Each project has an assigned "admin".

Domains are created by the "admin" service account and domain admins are assigned by the "admin" user.

The "admin" UserID (UID) is created during the keystone installation, has the "admin" role assigned to it, and is defined as the "Cloud Admin". After the keystone service has been installed, this UID is created using the "magic" or "secret" admin token found in the default 'keystone.conf' file that the SUSE OpenStack Cloud keystone installation provides. This secret token should be removed after installation, and the "admin" password should be changed.

The "default" domain is created automatically during the SUSE OpenStack Cloud keystone installation.

The "default" domain contains all OpenStack service accounts that are installed during the SUSE OpenStack Cloud keystone installation process.

Only the OpenStack service accounts should be assigned to the "default" domain.

Domain admins can be any UserID inside or outside of the domain.
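A few of the structural rules above (unique domains, one owning domain per project, and the "default" domain reserved for service accounts) can be expressed as a small in-memory model. This is a hypothetical sketch for illustration, not keystone's data model; the Cloud and Project classes and the requirement that project names be unique within a domain are assumptions of the model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Project:
    name: str
    domain: str  # exactly one owning domain; frozen, so it cannot be reassigned


class Cloud:
    """Hypothetical in-memory model of the domain rules listed above."""

    def __init__(self):
        self.domains = {"default"}  # created automatically at installation
        self.projects = []
        self.users = {}  # (domain, user name) -> is a service account

    def create_domain(self, name):
        # Each domain is unique within the deployment.
        if name in self.domains:
            raise ValueError(f"domain {name!r} already exists")
        self.domains.add(name)

    def create_project(self, domain, name):
        if domain not in self.domains:
            raise KeyError(domain)
        # In this model, project names only need to be unique within a domain.
        if any(p.domain == domain and p.name == name for p in self.projects):
            raise ValueError(f"project {name!r} already exists in {domain!r}")
        project = Project(name, domain)
        self.projects.append(project)
        return project

    def create_user(self, domain, name, service_account=False):
        # Only OpenStack service accounts belong in the "default" domain.
        if domain == "default" and not service_account:
            raise ValueError("only service accounts belong in the 'default' domain")
        self.users[(domain, name)] = service_account


cloud = Cloud()
cloud.create_domain("acme")
marketing = cloud.create_project("acme", "marketing")
cloud.create_user("default", "nova", service_account=True)
print(marketing)
```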

5.3.3 Domain Administrator

A UID is a domain administrator for a given domain if that UID has a token scoped to the given domain. This means that the UID has the "admin" role assigned to it for the selected domain.

The Cloud Admin UID assigns the domain administrator role for a domain to a selected UID.

A domain administrator can create and delete local users who have authenticated against keystone. These users will be assigned to the domain belonging to the domain administrator who creates the UserID.

A domain administrator can only create users and projects within their assigned domains.

A domain administrator can assign the "admin" role of their domains to another UID or revoke it; each UID with the "admin" role for a specified domain will be a co-administrator for that domain.

A UID can be assigned to be the domain admin of multiple domains.

A domain administrator can assign non-admin roles to any users and groups within their assigned domain, including projects owned by their assigned domain.

A domain admin UID can belong to projects within their administered domains.

Each domain can have a different authentication source.

The domain field is used during the initial login to define the source of authentication.

The "List Users" function can only be executed by a UID with the domain admin role.

A domain administrator can assign a UID from outside of their domain the "domain admin" role, but it is assumed that the domain admin would know the specific UID and would not need to list users from an external domain.

A domain administrator can assign a UID from outside of their domain the "project admin" role for a specific project within their domain, but it is assumed that the domain admin would know the specific UID and would not need to list users from an external domain.

Any user that needs the ability to create a user in a project should be granted the "admin" role for the domain where the user and the project reside.

In order for the horizon › panel to properly fill the "Owner" column, any user that is granted the admin role on a project must also be granted the "member" or "admin" role in the domain.
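The core domain administrator rules above (the Cloud Admin appoints domain admins, any domain admin can add or revoke co-admins including UIDs from other domains, one UID can administer several domains, and "List Users" requires the domain admin role) can be sketched as follows. The DomainAdmins class and all user and domain names are hypothetical; this models the rules, not keystone's internals.

```python
class DomainAdmins:
    """Hypothetical model of the domain administrator rules; not keystone code."""

    def __init__(self, cloud_admin):
        self.cloud_admin = cloud_admin
        self.grants = set()  # (uid, domain) pairs holding the domain "admin" role

    def is_domain_admin(self, uid, domain):
        return (uid, domain) in self.grants

    def _require_admin(self, actor, domain):
        # The Cloud Admin, or any existing admin of the domain, may manage it.
        if actor != self.cloud_admin and not self.is_domain_admin(actor, domain):
            raise PermissionError(f"{actor} is not an admin of {domain}")

    def grant(self, actor, uid, domain):
        # uid may belong to any domain; every grantee becomes a co-administrator.
        self._require_admin(actor, domain)
        self.grants.add((uid, domain))

    def revoke(self, actor, uid, domain):
        self._require_admin(actor, domain)
        self.grants.discard((uid, domain))

    def can_list_users(self, uid, domain):
        # "List Users" is limited to holders of the domain admin role.
        return self.is_domain_admin(uid, domain)


admins = DomainAdmins(cloud_admin="admin")
admins.grant("admin", "alice", "acme")    # Cloud Admin appoints alice
admins.grant("alice", "bob", "acme")      # alice appoints a co-admin
admins.grant("admin", "alice", "globex")  # one UID can administer several domains
print(admins.can_list_users("bob", "acme"), admins.can_list_users("bob", "globex"))
# True False
```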

5.3.4 Projects

The domain administrator creates projects within their assigned domain and assigns the project admin role for each project to a selected UID. A UID is a project administrator for a given project if that UID has a token scoped to the given project. There can be multiple projects per domain. The project admin sets the project quota settings, adds and removes users and groups to and from the project, and defines the user and group roles for the assigned project. Users can belong to multiple projects and have different roles on each project. Users are assigned to a specific domain and a default project. Roles are assigned per project.

5.3.5 Users and Groups

Each user belongs to one domain only. Domain assignments are defined either by the domain configuration files or by a domain administrator when creating a new, local (user authenticated against keystone) user. There is no current method for "moving" a user from one domain to another. A user can belong to multiple projects within a domain with a different role assignment per project. A group is a collection of users. Users can be assigned to groups either by the project admin or automatically via mappings if an external authentication source is defined for the assigned domain. Groups can be assigned to multiple projects within a domain and have different roles assigned to the group per project. A group can be assigned the "admin" role for a domain or project. All members of the group will be an "admin" for the selected domain or project.
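The way direct and group-based assignments combine per project can be sketched as a small function. The assignment tables, the user, group, and project names, and the effective_roles helper are hypothetical; the point is that a user's roles on a project are the union of the roles granted to the user directly and the roles granted to every group the user belongs to.

```python
# Hypothetical assignment data for one domain.
user_roles = {("alice", "web"): {"member"}, ("alice", "db"): {"reader"}}
group_members = {"ops": {"alice", "bob"}}
group_roles = {("ops", "db"): {"admin"}}  # every member of ops is admin on db


def effective_roles(user, project):
    """Roles a user holds on a project: direct grants plus grants via groups."""
    roles = set(user_roles.get((user, project), set()))
    for group, members in group_members.items():
        if user in members:
            roles |= group_roles.get((group, project), set())
    return roles


print(sorted(effective_roles("alice", "db")))  # ['admin', 'reader']
print(sorted(effective_roles("bob", "web")))   # []
```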

5.3.6 Roles

Service roles represent the functionality used to implement the OpenStack role-based access control (RBAC) model, which is used to manage access to each OpenStack service. Roles are named and assigned per user or group for each project by the identity service. Role definition and policy enforcement are defined outside of the identity service independently by each OpenStack service. The token generated by the identity service for each user authentication contains the role assigned to that user for a particular project. When a user attempts to access a specific OpenStack service, the role is parsed by the service, compared to the service-specific policy file, and then granted the resource access defined for that role by the service policy file.

Each service has its own service policy file, named in the format /etc/[SERVICE_CODENAME]/policy.json, where [SERVICE_CODENAME] represents a specific OpenStack service name. For example, the OpenStack nova service has a policy file called /etc/nova/policy.json. Service policy files can be modified and deployed to control nodes from the Cloud Lifecycle Manager. Administrators are advised to validate policy changes before checking them in to the site branch of the local git repository and rolling them into production. Do not make changes to policy files without having a way to validate them.
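As one example of a basic validation step, the sketch below checks a policy.json document before it is committed: it must parse as JSON, its top level must be an object, every rule must be a string, and every "rule:NAME" reference must name a rule defined in the same file. This is a hypothetical helper, not a tool shipped with SUSE OpenStack Cloud; it assumes the string rule syntax, applies to the rendered output of .j2 templates rather than the templates themselves, and does not check whether the rules grant the intended access.

```python
import json
import re

# Matches references such as "rule:admin_required" inside a rule string.
RULE_REF = re.compile(r"\brule:([^\s()]+)")


def check_policy(text):
    """Return a list of problems found in a policy.json document."""
    try:
        policy = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc}"]
    if not isinstance(policy, dict):
        return ["top level must be a JSON object"]
    problems = []
    for name, rule in policy.items():
        if not isinstance(rule, str):
            problems.append(f"{name}: rule must be a string")
            continue
        for ref in RULE_REF.findall(rule):
            if ref not in policy:
                problems.append(f"{name}: references undefined rule {ref!r}")
    return problems


good = '{"admin_required": "role:admin", "identity:list_projects": "rule:admin_required"}'
bad = '{"identity:list_projects": "rule:admin_requried or role:project_observer"}'
print(check_policy(good))  # []
print(check_policy(bad))   # ["identity:list_projects: references undefined rule 'admin_requried'"]
```

A misspelled rule reference like the one in bad is easy to miss in review, which is why a mechanical check is worth running before a reconfiguration playbook pushes the file to production.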

The policy files are located at the following site branch locations on the Cloud Lifecycle Manager:

- ~/openstack/ardana/ansible/roles/GLA-API/templates/policy.json.j2
- ~/openstack/ardana/ansible/roles/ironic-common/files/policy.json
- ~/openstack/ardana/ansible/roles/KEYMGR-API/templates/policy.json
- ~/openstack/ardana/ansible/roles/heat-common/files/policy.json
- ~/openstack/ardana/ansible/roles/CND-API/templates/policy.json
- ~/openstack/ardana/ansible/roles/nova-common/files/policy.json
- ~/openstack/ardana/ansible/roles/CEI-API/templates/policy.json.j2
- ~/openstack/ardana/ansible/roles/neutron-common/templates/policy.json.j2

For testing and validation, policy files can be modified in a non-production environment from the ~/scratch/ directory. To find a specific policy file, search for policy.json. To deploy policy changes for a service, run the service-specific reconfiguration playbook (for example, nova-reconfigure.yml). For a complete list of reconfiguration playbooks, change to the ~/scratch/ansible/next/ardana/ansible directory and run this command:

ardana > ls | grep reconfigure

A read-only role named project_observer is explicitly created in SUSE OpenStack Cloud 9. Any user who is granted this role can use list_project.

5.4 Identity Service Token Validation Example

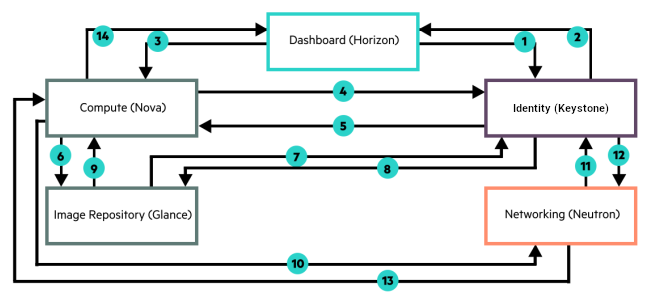

The following diagram illustrates the flow of typical Identity service (keystone) requests/responses between SUSE OpenStack Cloud services and the Identity service. It shows how keystone issues and validates tokens to ensure the identity of the caller of each service.

1. horizon sends an HTTP authentication request containing the user credentials to keystone.
2. keystone validates the credentials and replies with a token.
3. horizon sends a POST request, with the token, to nova to start provisioning a virtual machine.
4. nova sends the token to keystone for validation.
5. keystone validates the token.
6. nova sends a request for an image, with the token attached, to glance.
7. glance sends the token to keystone for validation.
8. keystone validates the token.
9. glance provides image-related information to nova.
10. nova sends a request for networks, with the token, to neutron.
11. neutron sends the token to keystone for validation.
12. keystone validates the token.
13. neutron provides network-related information to nova.
14. nova reports the status of the virtual machine provisioning request.
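The sequence above can be condensed into a toy simulation in which one token, issued once by keystone, is checked independently by every service it reaches. The Keystone and Service classes, the credentials, and the log messages are hypothetical; in a real deployment, services perform this check through keystone's auth_token middleware, which can also cache validation results.

```python
import secrets


class Keystone:
    """Hypothetical stand-in for the Identity service: issues and validates tokens."""

    def __init__(self, users):
        self.users = users  # user name -> password
        self.tokens = {}    # token -> user name
        self.log = []

    def authenticate(self, user, password):
        if self.users.get(user) != password:
            raise PermissionError("bad credentials")
        token = secrets.token_hex(16)
        self.tokens[token] = user
        return token

    def validate(self, caller, token):
        self.log.append(f"{caller} validated token")
        if token not in self.tokens:
            raise PermissionError("invalid token")
        return self.tokens[token]


class Service:
    """A service that validates every incoming token with keystone before acting."""

    def __init__(self, name, keystone):
        self.name, self.keystone = name, keystone

    def handle(self, token, what):
        self.keystone.validate(self.name, token)
        return f"{self.name}: {what}"


keystone = Keystone({"alice": "s3cret"})
nova, glance, neutron = (Service(n, keystone) for n in ("nova", "glance", "neutron"))

token = keystone.authenticate("alice", "s3cret")  # steps 1-2: horizon logs in
nova.handle(token, "boot request accepted")       # steps 3-5: horizon -> nova
image = glance.handle(token, "image info")        # steps 6-9: nova -> glance
network = neutron.handle(token, "network info")   # steps 10-13: nova -> neutron
print(keystone.log)
# ['nova validated token', 'glance validated token', 'neutron validated token']
```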

5.5 Configuring the Identity Service

5.5.1 What is the Identity service?

The SUSE OpenStack Cloud Identity service, based on the OpenStack keystone API, provides UserID authentication and access authorization to help organizations achieve their access security and compliance objectives and successfully deploy OpenStack. In short, the Identity service is the gateway to the rest of the OpenStack services.

The identity service is installed automatically by the Cloud Lifecycle Manager (just after MySQL and RabbitMQ). When your cloud is up and running, you can customize keystone in a number of ways, including integrating with LDAP servers. This topic describes the default configuration. See Section 5.8, “Reconfiguring the Identity service” for changes you can implement. Also see Section 5.9, “Integrating LDAP with the Identity Service” for information on integrating with an LDAP provider.

5.5.2 Which version of the Identity service should you use?

Use Identity API version 3.0. Identity API v2.0 has been deprecated, and many features, such as LDAP integration and fine-grained access control, do not work with v2.0. The following are answers to a few questions you may have regarding versions.

Why does the keystone identity catalog still show version 2.0?

Tempest tests still use the v2.0 API. They are in the process of migrating to v3.0. We will remove the v2.0 version once tempest has migrated the tests. The Identity catalog has version 2.0 just to support tempest migration.

Will the keystone identity v3.0 API work if the identity catalog has only the v2.0 endpoint?

Identity v3.0 does not rely on the content of the catalog. It will continue to work regardless of the version of the API in the catalog.

Which CLI client should you use?

Use the OpenStack CLI rather than the keystone CLI, which is deprecated. The keystone CLI does not support the v3.0 API; only the OpenStack CLI supports it.

5.5.3 Authentication

The authentication function provides the initial login function to OpenStack. keystone supports multiple sources of authentication, including a native or built-in authentication system. You can use the keystone native system for all user management functions for proof-of-concept deployments or small deployments not requiring integration with a corporate authentication system, but it lacks some of the advanced functions usually found in user management systems such as forcing password changes. The focus of the keystone native authentication system is to be the source of authentication for OpenStack-specific users required to operate various OpenStack services. These users are stored by keystone in a default domain; the addition of these IDs to an external authentication system is not required.

keystone is more commonly integrated with external authentication systems such as OpenLDAP or Microsoft Active Directory. These systems are usually centrally deployed by organizations to serve as the single source of user management and authentication for all in-house deployed applications and systems requiring user authentication. In addition to LDAP and Microsoft Active Directory, support for integration with Security Assertion Markup Language (SAML)-based identity providers from companies such as Ping, CA, IBM, Oracle, and others is also nearly "production-ready."

keystone also provides architectural support through the underlying Apache deployment for other types of authentication systems, such as multi-factor authentication. These types of systems typically require driver support and integration from the respective providers.

While support for identity providers and multi-factor authentication is available in keystone, these features have not yet been certified by the SUSE OpenStack Cloud engineering team and are experimental in SUSE OpenStack Cloud.

LDAP-compatible directories such as OpenLDAP and Microsoft Active Directory are recommended alternatives to using keystone local authentication. Both directories are widely used by organizations and are integrated with a variety of other enterprise applications. These directories act as the single source of user information within an organization. You can configure keystone to authenticate against an LDAP-compatible directory on a per-domain basis.

Domains, as explained in Section 5.3, “Understanding Domains, Projects, Users, Groups, and Roles”, can be configured so that, based on the user ID, an incoming user is automatically mapped to a specific domain. You can then configure this domain to authenticate against a specific LDAP directory. User credentials provided by the user to keystone are passed along to the designated LDAP source for authentication. You can optionally configure this communication to be secure through SSL encryption. No special LDAP administrative access is required, and only read-only access is needed for this configuration. keystone will not add any LDAP information. All user additions, deletions, and modifications are performed by the application's front end in the LDAP directories. After a user has been successfully authenticated, that user is then assigned to the groups, roles, and projects defined by the keystone domain or project administrators. This information is stored in the keystone service database.

Another form of external authentication provided by the keystone service is through integration with SAML-based identity providers (IdP) such as Ping Identity, IBM Tivoli, and Microsoft Active Directory Federation Services. A SAML-based identity provider provides authentication that is often called "single sign-on." The IdP server is configured to authenticate against identity sources such as Active Directory and provides a single authentication API in front of multiple types of downstream identity sources. This means that an organization could have multiple identity storage sources but a single authentication source. In addition, if a user has logged into one such source during a defined session time frame, that user does not need to reauthenticate within the defined session. Instead, the IdP automatically validates the user to requesting applications and services.

A SAML-based IdP authentication source is configured with keystone on a per-domain basis, similar to the way LDAP directories are configured. The configuration also requires mapping rules that define which keystone group an incoming UID is automatically assigned to. This means that groups need to be defined in keystone first, but it also removes the requirement that a domain or project administrator assign user roles and project membership on a per-user basis. Instead, groups are used to define project membership and roles, and incoming users are automatically mapped to keystone groups based on their upstream group membership. This strategy provides a consistent role-based access control (RBAC) model based on the upstream identity source. The configuration of this option is fairly straightforward. IdP vendors such as Ping and IBM contribute to the maintenance of this function and have also produced their own integration documentation. HPE is using Microsoft Active Directory Federation Services (AD FS) for functional testing and future documentation.
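keystone expresses this group mapping as rules made of "remote" conditions (attributes from the IdP assertion) and "local" results (keystone users and groups). The following Python sketch is a simplified, illustrative evaluator of that rule shape. It is not keystone's own mapping engine.

- It supports only two kinds of remote entry: plain attribute capture (referenced as {0}, {1}, and so on) and the any_one_of condition.
- The attribute names REMOTE_USER and ADFS_GROUP are hypothetical.
- The keystone group ID is hypothetical.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical mapping: users whose upstream ADFS_GROUP includes "Cloud-Admins"
# are placed into a keystone group (the group ID is made up for illustration).
RULES = [
    {
        "local": [
            {"user": {"name": "{0}"}},
            {"group": {"id": "0cd5e9a1-hypothetical-admins"}},
        ],
        "remote": [
            {"type": "REMOTE_USER"},
            {"type": "ADFS_GROUP", "any_one_of": ["Cloud-Admins"]},
        ],
    }
]


def apply_rules(
    rules: List[dict], assertion: Dict[str, List[str]]
) -> Tuple[Optional[str], List[str]]:
    """Return (user name, keystone group IDs) for an incoming assertion.

    Simplified: every matching rule contributes its groups; the first
    matching rule that names a user decides the user name.
    """
    user_name: Optional[str] = None
    group_ids: List[str] = []
    for rule in rules:
        captured: List[str] = []  # values substituted for {0}, {1}, ...
        matched = True
        for remote in rule["remote"]:
            values = assertion.get(remote["type"])
            if not values:
                matched = False
                break
            if "any_one_of" in remote:
                # Condition: at least one upstream value must be in the list.
                if not set(values) & set(remote["any_one_of"]):
                    matched = False
                    break
            else:
                # Plain attribute: capture its first value for substitution.
                captured.append(values[0])
        if not matched:
            continue
        for local in rule["local"]:
            if "user" in local and user_name is None:
                user_name = local["user"]["name"].format(*captured)
            if "group" in local:
                group_ids.append(local["group"]["id"])
    return user_name, group_ids


print(apply_rules(RULES, {"REMOTE_USER": ["jdoe"], "ADFS_GROUP": ["Cloud-Admins"]}))
```

keystone's real mapping language supports further conditions that this sketch ignores. In a deployment, a rules file of this shape is registered with keystone (for example with openstack mapping create --rules), not evaluated by hand.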

The third keystone-supported authentication source is known as multi-factor authentication (MFA). MFA typically requires an external source of authentication beyond a login name and password, and can include options such as SMS text, a temporal token generator, or a fingerprint scanner. Each of these types of MFA is usually specific to a particular MFA vendor. The keystone architecture supports an MFA-based authentication system, but this has not yet been certified or documented for SUSE OpenStack Cloud.

5.5.4 Authorization #

Another major function provided by the keystone service is access authorization that determines which resources and actions are available based on the UserID, the role of the user, and the projects that a user is provided access to. All of this information is created, managed, and stored by keystone. These functions are applied through the horizon web interface, the OpenStack command-line interface, or the direct keystone API.

keystone provides support for organizing users by using three entities:

- Domains

Domains provide the highest level of organization. Domains are intended to be used as high-level containers for multiple projects. In a public OpenStack cloud, a domain can represent different tenants, companies, or organizations. In a private OpenStack cloud, it can represent major business units, functions, or any other type of top-level organizational unit. Each domain has at least one Domain Admin assigned to it. This Domain Admin can then create multiple projects within the domain and assign the project administrator role to specific project owners. Each domain created in an OpenStack deployment is unique, and the projects assigned to a domain cannot exist in another domain.

- Projects

Projects are entities within a domain that represent groups of users, each user role within that project, and how many underlying infrastructure resources can be consumed by members of the project.

- Groups

Groups are an optional function and provide the means of assigning project roles to multiple users at once.

keystone also makes it possible to create roles and assign them to groups of users or to individual users. Role names are created and user assignments are made within keystone. The actual function of a role is currently defined for each OpenStack service in that service's policy file. When users request access to an OpenStack service, their access tokens contain information about their assigned project membership and their role in that project. This role is then matched against the service-specific policy. Users can perform only the functions within that service that the policy grants to their role.
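Roles can be assigned directly to a user or to a group the user belongs to, so a user's effective roles on a project are the union of both. The following Python sketch shows this under stated assumptions:

- The in-memory assignment tables and the user, group, and project names are all hypothetical.
- keystone itself stores assignments in its database and resolves them when it issues a token.

```python
from typing import Dict, Set, Tuple


def effective_roles(
    user: str,
    project: str,
    user_roles: Dict[Tuple[str, str], Set[str]],
    group_roles: Dict[Tuple[str, str], Set[str]],
    memberships: Dict[str, Set[str]],
) -> Set[str]:
    """Union of roles granted directly and roles granted through any group."""
    roles = set(user_roles.get((user, project), set()))
    for group in memberships.get(user, set()):
        roles |= group_roles.get((group, project), set())
    return roles


# Hypothetical assignments: (user or group, project) -> roles.
user_roles = {("alice", "web-prod"): {"member"}}
group_roles = {("ops-team", "db-prod"): {"member"}}
memberships = {"alice": {"ops-team"}, "bob": {"ops-team"}}

print(sorted(effective_roles("bob", "db-prod", user_roles, group_roles, memberships)))
```

Assigning the role once to ops-team gives both alice and bob access to db-prod. This is the per-group administration described above.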

5.5.5 Default settings #

Identity service configuration settings

The identity service configuration options are described in keystone Configuration Options in the OpenStack documentation.

Default domain and service accounts

The "default" domain is automatically created during the installation to contain the various required OpenStack service accounts, including the following:

| Service accounts | | |
|---|---|---|
| admin | heat | monasca-agent |
| barbican | logging | neutron |
| barbican_service | logging_api | nova |
| ceilometer | logging_beaver | nova_monasca |
| cinder | logging_monitor | octavia |
| cinderinternal | magnum | placement |
| demo | manila | swift |
| designate | manilainternal | swift-demo |
| glance | monasca | swift-dispersion |
| glance-check | monasca_read_only | swift-monitor |
| glance-swift | | |

These are required accounts and are used by the underlying OpenStack services. These accounts should not be removed or reassigned to a different domain. The "default" domain should be used only for these service accounts.
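The following hedged Python sketch audits a domain against this rule:

- Input: the user names found in the default domain, for example as gathered with openstack user list --domain default.
- Output: accounts that are not in the list above, and listed service accounts that are absent.

The input list is hypothetical, and the sketch does not call the OpenStack API.

```python
from typing import Iterable, List, Tuple

# Service accounts listed above for the "default" domain.
SERVICE_ACCOUNTS = {
    "admin", "barbican", "barbican_service", "ceilometer", "cinder",
    "cinderinternal", "demo", "designate", "glance", "glance-check",
    "glance-swift", "heat", "logging", "logging_api", "logging_beaver",
    "logging_monitor", "magnum", "manila", "manilainternal", "monasca",
    "monasca_read_only", "monasca-agent", "neutron", "nova", "nova_monasca",
    "octavia", "placement", "swift", "swift-demo", "swift-dispersion",
    "swift-monitor",
}


def audit_default_domain(users: Iterable[str]) -> Tuple[List[str], List[str]]:
    """Return (unexpected accounts, missing service accounts), both sorted."""
    present = set(users)
    unexpected = sorted(present - SERVICE_ACCOUNTS)
    missing = sorted(SERVICE_ACCOUNTS - present)
    return unexpected, missing
```

An unexpected account is a candidate for moving to a customer-defined domain. A missing entry may simply correspond to a service you did not deploy.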

5.5.6 Preinstalled roles #

The following are the preinstalled roles. Users with the "admin" role can create additional roles. Roles are defined on a per-service basis (more information is available at Manage projects, users, and roles on the OpenStack website).

| Role | Description |
|---|---|
| admin | The "superuser" role. Provides full access to all SUSE OpenStack Cloud services across all domains and projects. This role should be given only to a cloud administrator. |
| member | A general role that enables a user to access resources within an assigned project, including creating, modifying, and deleting compute, storage, and network resources. |

You can find additional information on these roles in each service's policy file, stored in /etc/PROJECT/policy.json, where PROJECT is a placeholder for an OpenStack service. For example, the roles for the Compute (nova) service are stored in the /etc/nova/policy.json file. Each service policy file defines the specific API functions available to a role label.

5.6 Retrieving the Admin Password #

The admin password will be used to access the dashboard and Operations Console as well as allow you to authenticate to use the command-line tools and API.

In a default SUSE OpenStack Cloud 9 installation, a randomly generated password is created for the Admin user. The following steps show you how to retrieve this password.

5.6.1 Retrieving the Admin Password #

You can retrieve the randomly generated Admin password by using this command on the Cloud Lifecycle Manager:

ardana > cat ~/service.osrc

In this example output, the value for OS_PASSWORD is the Admin password:

ardana > cat ~/service.osrc
unset OS_DOMAIN_NAME
export OS_IDENTITY_API_VERSION=3
export OS_AUTH_VERSION=3
export OS_PROJECT_NAME=admin
export OS_PROJECT_DOMAIN_NAME=Default
export OS_USERNAME=admin
export OS_USER_DOMAIN_NAME=Default
export OS_PASSWORD=SlWSfwxuJY0
export OS_AUTH_URL=https://10.13.111.145:5000/v3
export OS_ENDPOINT_TYPE=internalURL
# OpenstackClient uses OS_INTERFACE instead of OS_ENDPOINT
export OS_INTERFACE=internal
export OS_CACERT=/etc/ssl/certs/ca-certificates.crt
export OS_COMPUTE_API_VERSION=2

5.7 Changing Service Passwords #

SUSE OpenStack Cloud provides a process for changing the default service passwords, including your admin user password, which you may want to do for security or other purposes.

You can easily change the inter-service passwords used for authenticating communications between services in your SUSE OpenStack Cloud deployment, promoting better compliance with your organization’s security policies. The inter-service passwords that can be changed include (but are not limited to) keystone, MariaDB, RabbitMQ, Cloud Lifecycle Manager cluster, monasca and barbican.

The general process for changing the passwords is to:

Indicate to the configuration processor which password(s) you want to change, and optionally include the value of that password

Run the configuration processor to generate the new passwords (you do not need to run git add before this)

Run the ready-deployment playbook

Check your password name(s) against the tables included below to see which high-level credentials-change playbook(s) you need to run

Run the appropriate high-level credentials-change playbook(s)

5.7.1 Password Strength #

Encryption passwords supplied to the configuration processor for use with Ansible Vault and for encrypting the configuration processor’s persistent state must have a minimum length of 12 characters and a maximum of 128 characters. Passwords must contain characters from each of the following three categories:

Uppercase characters (A-Z)

Lowercase characters (a-z)

Base 10 digits (0-9)

Service passwords that the configuration processor generates automatically are drawn from 62 characters: 26 uppercase letters, 26 lowercase letters, and 10 digits. No character or set of characters is preferred. The minimum and maximum lengths are determined by the requirements of each individual service.

Currently, you cannot use any special characters with Ansible Vault, Service Passwords, or vCenter configuration.
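The rules above can be expressed as a short Python sketch with two functions:

- The first checks a candidate encryption password: 12 to 128 characters, at least one uppercase letter, lowercase letter, and digit, and no special characters.
- The second draws a service password uniformly from the 62-character alphabet, using the standard secrets module.

The function names are illustrative; the configuration processor's own implementation is not shown in this guide. The length passed to the generator is an assumption, because each service sets its own limits.

```python
import secrets
import string

# The 62-character alphabet: 26 uppercase + 26 lowercase + 10 digits.
ALPHABET = string.ascii_uppercase + string.ascii_lowercase + string.digits


def is_valid_encryption_password(pw: str) -> bool:
    """Check the documented rules for Ansible Vault / persistent-state keys."""
    if not 12 <= len(pw) <= 128:
        return False
    # Special characters are not supported, so only the 62 characters are allowed.
    if any(c not in ALPHABET for c in pw):
        return False
    return (
        any(c.isupper() for c in pw)
        and any(c.islower() for c in pw)
        and any(c.isdigit() for c in pw)
    )


def generate_service_password(length: int) -> str:
    """Draw each character uniformly from the 62-character alphabet."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))
```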

5.7.2 Telling the configuration processor which password(s) you want to change #

In SUSE OpenStack Cloud 9, the configuration processor produces metadata about each of the passwords (and other variables) that it generates in the file ~/openstack/my_cloud/info/private_data_metadata_ccp.yml. A snippet of this file follows:

5.7.3 private_data_metadata_ccp.yml #

metadata_proxy_shared_secret:
  metadata:
  - clusters:
    - cluster1
    component: nova-metadata
    consuming-cp: ccp
    cp: ccp
  version: '2.0'
mysql_admin_password:
  metadata:
  - clusters:
    - cluster1
    component: ceilometer
    consumes: mysql
    consuming-cp: ccp
    cp: ccp
  - clusters:
    - cluster1
    component: heat
    consumes: mysql
    consuming-cp: ccp
    cp: ccp
  - clusters:
    - cluster1
    component: keystone
    consumes: mysql
    consuming-cp: ccp
    cp: ccp
  - clusters:
    - cluster1
    - compute
    component: nova
    consumes: mysql
    consuming-cp: ccp
    cp: ccp
  - clusters:
    - cluster1
    component: cinder
    consumes: mysql
    consuming-cp: ccp
    cp: ccp
  - clusters:
    - cluster1
    component: glance
    consumes: mysql
    consuming-cp: ccp
    cp: ccp
  - clusters:
    - cluster1
    - compute
    component: neutron
    consumes: mysql
    consuming-cp: ccp
    cp: ccp
  - clusters:
    - cluster1
    component: horizon
    consumes: mysql
    consuming-cp: ccp
    cp: ccp
  version: '2.0'
mysql_barbican_password:
  metadata:
  - clusters:
    - cluster1
    component: barbican
    consumes: mysql
    consuming-cp: ccp
    cp: ccp
  version: '2.0'

For each variable, there is a metadata entry for each pair of services that use the variable. Each entry includes a list of the clusters on which the consuming service component (defined as "component:" in private_data_metadata_ccp.yml above) runs.

Note that the variable mysql_admin_password is used by a number of service components. The service consumed in each case is mysql, which in this context refers to the MariaDB instance that is part of the product.

5.7.4 Steps to change a password #

First, make sure that you have a copy of private_data_metadata_ccp.yml. If you do not, generate one by running the configuration processor:

ardana > cd ~/openstack/ardana/ansible
ardana > ansible-playbook -i hosts/localhost config-processor-run.yml

Make a copy of the private_data_metadata_ccp.yml file and place it into the ~/openstack/change_credentials directory:

ardana > cp ~/openstack/my_cloud/info/private_data_metadata_ccp.yml \
  ~/openstack/change_credentials/

Edit the copied file in ~/openstack/change_credentials and delete every entry except the passwords you intend to change.

If you leave passwords in that file that you do not want to change, they will be regenerated and will no longer match the values in use, which could disrupt operations.
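Deleting entries by hand is error-prone, so the edit can also be scripted. The Python sketch below makes these assumptions:

- The metadata file has already been parsed into a dictionary, for example with a YAML library.
- trim_change_file is a hypothetical helper name.
- Writing the result back to ~/openstack/change_credentials is left out.

```python
import copy
from typing import Dict, Iterable


def trim_change_file(metadata: Dict[str, dict], keep: Iterable[str]) -> Dict[str, dict]:
    """Return only the entries for the passwords you intend to change.

    Unknown names raise KeyError. This is stricter than the configuration
    processor, which only warns that a password name is not valid.
    """
    wanted = set(keep)
    unknown = wanted - set(metadata)
    if unknown:
        raise KeyError(f"unknown password name(s): {sorted(unknown)}")
    return {name: copy.deepcopy(entry) for name, entry in metadata.items() if name in wanted}
```

Failing on unknown names catches typos before the configuration processor runs. Keeping only the requested names avoids regenerating passwords by accident.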

You must change passwords in batches, one batch for each category listed below.

For example, the snippet below would result in the configuration processor generating new random values for keystone_backup_password, keystone_ceilometer_password, and keystone_cinder_password:

keystone_backup_password:
  metadata:
  - clusters:
    - cluster0
    - cluster1
    - compute
    consumes: keystone-api
    consuming-cp: ccp
    cp: ccp
  version: '2.0'
keystone_ceilometer_password:
  metadata:
  - clusters:
    - cluster1
    component: ceilometer-common
    consumes: keystone-api
    consuming-cp: ccp
    cp: ccp
  version: '2.0'
keystone_cinder_password:
  metadata:
  - clusters:
    - cluster1
    component: cinder-api
    consumes: keystone-api
    consuming-cp: ccp
    cp: ccp
  version: '2.0'

5.7.5 Specifying password value #

Optionally, you can specify a value for the password by including a "value:" key and value at the same level as metadata:

keystone_backup_password:
  value: 'new_password'
  metadata:
  - clusters:
    - cluster0
    - cluster1
    - compute
    consumes: keystone-api
    consuming-cp: ccp
    cp: ccp
  version: '2.0'

Note that you can have multiple files in openstack/change_credentials. The configuration processor will only read files that end in .yml or .yaml.
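The two rules just described can be sketched in Python:

- A supplied value sits beside metadata in the same entry.
- Only .yml and .yaml files are read.

The sketch also rejects values with special characters, following Section 5.7.1, but it does not check any per-service length limits. The helper names are hypothetical.

```python
import string
from typing import Dict, List

ALLOWED = set(string.ascii_letters + string.digits)


def with_value(entry: Dict[str, object], value: str) -> Dict[str, object]:
    """Return a new entry dict with a 'value' key next to 'metadata'."""
    if not value or not set(value) <= ALLOWED:
        raise ValueError("special characters are not supported in service passwords")
    return {"value": value, **{k: v for k, v in entry.items() if k != "value"}}


def files_read(filenames: List[str]) -> List[str]:
    """Files in change_credentials that the configuration processor reads."""
    return sorted(f for f in filenames if f.endswith((".yml", ".yaml")))
```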

If you have specified a password value in your credential change file, you may want to encrypt it using ansible-vault. If you decide to encrypt with ansible-vault, make sure that you use the encryption key you have already used when running the configuration processor.

To encrypt a file using ansible-vault, execute:

ardana > cd ~/openstack/change_credentials
ardana > ansible-vault encrypt CREDENTIAL_CHANGE_FILE

where CREDENTIAL_CHANGE_FILE is your credential change file ending in .yml or .yaml.

Be sure to provide the encryption key when prompted. Note that if you specify the wrong ansible-vault password, the configuration processor will fail with a message like the following:

##################################################
Reading Persistent State
##################################################

################################################################################
# The configuration processor failed.
# PersistentStateCreds: User-supplied creds file test1.yml was not parsed properly
################################################################################

5.7.6 Running the configuration processor to change passwords #

Because the directory openstack/change_credentials is not managed by git, you do not need to commit the files in it. To rerun the configuration processor to generate new passwords and prepare for the next deployment, enter the following commands:

ardana > cd ~/openstack/ardana/ansible
ardana > ansible-playbook -i hosts/localhost config-processor-run.yml
ardana > ansible-playbook -i hosts/localhost ready-deployment.yml

The files that you placed in ~/openstack/change_credentials should be removed once you have run the configuration processor, because the old and new password values will be stored in the configuration processor's persistent state.

Note that if you see output like the following after running the configuration processor:

################################################################################
# The configuration processor completed with warnings.
# PersistentStateCreds: User-supplied password name 'blah' is not valid
################################################################################

this tells you that the password name you have supplied, 'blah,' does not exist. A failure to correctly parse the credentials change file will result in the configuration processor erroring out with a message like the following:

##################################################
Reading Persistent State
##################################################

################################################################################
# The configuration processor failed.
# PersistentStateCreds: User-supplied creds file test1.yml was not parsed properly
################################################################################

Once you have run the configuration processor to change passwords, it writes an information file, ~/openstack/my_cloud/info/password_change.yml, similar to private_data_metadata_ccp.yml. This file tells you which passwords have been changed, including their metadata but not their values.

5.7.7 Password change playbooks and tables #

After you have completed the steps above to change the password values and to prepare the deployment that will switch over to the new passwords, you need to run some high-level playbooks. The passwords that can be changed are grouped into six categories. The tables below list the password names that belong in each category. The categories are:

- keystone

Playbook: ardana-keystone-credentials-change.yml

- RabbitMQ

Playbook: ardana-rabbitmq-credentials-change.yml

- MariaDB

Playbook: ardana-reconfigure.yml

- Cluster

Playbook: ardana-cluster-credentials-change.yml

- monasca

Playbook: monasca-reconfigure-credentials-change.yml

- Other

Playbook: ardana-other-credentials-change.yml

It is recommended that you change passwords in batches; in other words, run through a complete password change process for each batch of passwords, preferably in the above order. Once you have followed the process indicated above to change password(s), check the names against the tables below to see which password change playbook(s) you should run.

Changing identity service credentials

The following table lists identity service credentials you can change.

| keystone credentials (password name) |
|---|
| barbican_admin_password |
| barbican_service_password |
| keystone_admin_pwd |
| keystone_ceilometer_password |
| keystone_cinder_password |
| keystone_cinderinternal_password |
| keystone_demo_pwd |
| keystone_designate_password |
| keystone_glance_password |
| keystone_glance_swift_password |
| keystone_heat_password |
| keystone_magnum_password |
| keystone_monasca_agent_password |
| keystone_monasca_password |
| keystone_neutron_password |
| keystone_nova_password |
| keystone_octavia_password |
| keystone_swift_dispersion_password |
| keystone_swift_monitor_password |
| keystone_swift_password |
| nova_monasca_password |

The playbook to run to change keystone credentials is ardana-keystone-credentials-change.yml. Execute the following commands to make the changes:

ardana > cd ~/scratch/ansible/next/ardana/ansible/
ardana > ansible-playbook -i hosts/verb_hosts ardana-keystone-credentials-change.yml

Changing RabbitMQ credentials

The following table lists the RabbitMQ credentials you can change.

| RabbitMQ credentials (password name) |
|---|
| rmq_barbican_password |
| rmq_ceilometer_password |
| rmq_cinder_password |
| rmq_designate_password |
| rmq_keystone_password |
| rmq_magnum_password |
| rmq_monasca_monitor_password |
| rmq_nova_password |
| rmq_octavia_password |
| rmq_service_password |

The playbook to run to change RabbitMQ credentials is ardana-rabbitmq-credentials-change.yml. Execute the following commands to make the changes:

ardana > cd ~/scratch/ansible/next/ardana/ansible/
ardana > ansible-playbook -i hosts/verb_hosts ardana-rabbitmq-credentials-change.yml

Changing MariaDB credentials

The following table lists the MariaDB credentials you can change.

| MariaDB credentials (password name) |
|---|
| mysql_admin_password |
| mysql_barbican_password |
| mysql_clustercheck_pwd |
| mysql_designate_password |
| mysql_magnum_password |
| mysql_monasca_api_password |
| mysql_monasca_notifier_password |
| mysql_monasca_thresh_password |
| mysql_octavia_password |
| mysql_powerdns_password |
| mysql_root_pwd |
| mysql_sst_password |
| ops_mon_mdb_password |
| mysql_monasca_transform_password |
| mysql_nova_api_password |
| password |

The playbook to run to change MariaDB credentials is ardana-reconfigure.yml. To make the changes, execute the following commands:

ardana > cd ~/scratch/ansible/next/ardana/ansible/
ardana > ansible-playbook -i hosts/verb_hosts ardana-reconfigure.yml

Changing cluster credentials

The following table lists the cluster credentials you can change.

| cluster credentials (password name) |
|---|
| haproxy_stats_password |
| keepalive_vrrp_password |

The playbook to run to change cluster credentials is ardana-cluster-credentials-change.yml. To make the changes, execute the following commands:

ardana > cd ~/scratch/ansible/next/ardana/ansible/
ardana > ansible-playbook -i hosts/verb_hosts ardana-cluster-credentials-change.yml

Changing monasca credentials

The following table lists the monasca credentials you can change.

| monasca credentials (password name) |
|---|
| cassandra_monasca_api_password |
| cassandra_monasca_persister_password |

The playbook to run to change monasca credentials is monasca-reconfigure-credentials-change.yml. To make the changes, execute the following commands:

ardana > cd ~/scratch/ansible/next/ardana/ansible/
ardana > ansible-playbook -i hosts/verb_hosts monasca-reconfigure-credentials-change.yml

Changing other credentials

The following table lists the other credentials you can change.

| Other credentials (password name) |
|---|
| logging_beaver_password |
| logging_api_password |
| logging_monitor_password |
| logging_kibana_password |

The playbook to run to change these credentials is ardana-other-credentials-change.yml. To make the changes, execute the following commands:

ardana > cd ~/scratch/ansible/next/ardana/ansible/
ardana > ansible-playbook -i hosts/verb_hosts ardana-other-credentials-change.yml
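The tables above amount to a lookup from password name to playbook. The Python sketch below transcribes them and returns the playbooks for a batch of names, in the order recommended above. Notes on its scope:

- It is a hedged convenience, not a tool shipped with the product.
- keystone_rgw_password (Section 5.7.8) does not appear in any table, so it is not covered and would be reported as unknown.

```python
from typing import Iterable, List

# (category, playbook, password names), transcribed from the tables above
# and listed in the recommended order.
CATEGORIES = [
    ("keystone", "ardana-keystone-credentials-change.yml", frozenset({
        "barbican_admin_password", "barbican_service_password",
        "keystone_admin_pwd", "keystone_ceilometer_password",
        "keystone_cinder_password", "keystone_cinderinternal_password",
        "keystone_demo_pwd", "keystone_designate_password",
        "keystone_glance_password", "keystone_glance_swift_password",
        "keystone_heat_password", "keystone_magnum_password",
        "keystone_monasca_agent_password", "keystone_monasca_password",
        "keystone_neutron_password", "keystone_nova_password",
        "keystone_octavia_password", "keystone_swift_dispersion_password",
        "keystone_swift_monitor_password", "keystone_swift_password",
        "nova_monasca_password",
    })),
    ("RabbitMQ", "ardana-rabbitmq-credentials-change.yml", frozenset({
        "rmq_barbican_password", "rmq_ceilometer_password", "rmq_cinder_password",
        "rmq_designate_password", "rmq_keystone_password", "rmq_magnum_password",
        "rmq_monasca_monitor_password", "rmq_nova_password",
        "rmq_octavia_password", "rmq_service_password",
    })),
    ("MariaDB", "ardana-reconfigure.yml", frozenset({
        "mysql_admin_password", "mysql_barbican_password", "mysql_clustercheck_pwd",
        "mysql_designate_password", "mysql_magnum_password",
        "mysql_monasca_api_password", "mysql_monasca_notifier_password",
        "mysql_monasca_thresh_password", "mysql_octavia_password",
        "mysql_powerdns_password", "mysql_root_pwd", "mysql_sst_password",
        "ops_mon_mdb_password", "mysql_monasca_transform_password",
        "mysql_nova_api_password", "password",
    })),
    ("Cluster", "ardana-cluster-credentials-change.yml", frozenset({
        "haproxy_stats_password", "keepalive_vrrp_password",
    })),
    ("monasca", "monasca-reconfigure-credentials-change.yml", frozenset({
        "cassandra_monasca_api_password", "cassandra_monasca_persister_password",
    })),
    ("Other", "ardana-other-credentials-change.yml", frozenset({
        "logging_beaver_password", "logging_api_password",
        "logging_monitor_password", "logging_kibana_password",
    })),
]


def playbooks_for(names: Iterable[str]) -> List[str]:
    """Playbooks to run for a batch of changed passwords, in recommended order."""
    remaining = set(names)
    playbooks: List[str] = []
    for _category, playbook, members in CATEGORIES:
        if remaining & members:
            playbooks.append(playbook)
            remaining -= members
    if remaining:
        raise ValueError(f"not listed in any table: {sorted(remaining)}")
    return playbooks
```

If the result contains more than one playbook, the batch spans several categories. The text above recommends changing passwords one category at a time.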

5.7.8 Changing RADOS Gateway Credential #

To change the keystone credentials of RADOS Gateway, follow the steps documented in Section 5.7, “Changing Service Passwords”. Modify the keystone_rgw_password section in the private_data_metadata_ccp.yml file as described in Section 5.7.4, “Steps to change a password” or Section 5.7.5, “Specifying password value”.

5.7.9 Immutable variables #

The values of certain variables are immutable, which means that once they have been generated by the configuration processor they cannot be changed. These variables are:

barbican_master_kek_db_plugin

swift_hash_path_suffix

swift_hash_path_prefix

mysql_cluster_name

heartbeat_key

erlang_cookie

The configuration processor will not regenerate the values of the above variables, nor will it allow you to specify values for them. In addition to the above variables, the following are immutable in SUSE OpenStack Cloud 9:

All ssh keys generated by the configuration processor

All UUIDs generated by the configuration processor

metadata_proxy_shared_secret

horizon_secret_key

ceilometer_metering_secret
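A credential change file that names an immutable variable will not have that variable changed. The following Python sketch flags such names before you run the configuration processor. Its limits:

- It checks only the variables listed above by name.
- Generated ssh keys and UUIDs have no fixed names here, so they are not covered.
- The helper name is hypothetical.

```python
from typing import Iterable, List

# Variables listed above as immutable once the configuration processor generates them.
IMMUTABLE = frozenset({
    "barbican_master_kek_db_plugin",
    "swift_hash_path_suffix",
    "swift_hash_path_prefix",
    "mysql_cluster_name",
    "heartbeat_key",
    "erlang_cookie",
    "metadata_proxy_shared_secret",
    "horizon_secret_key",
    "ceilometer_metering_secret",
})


def immutable_entries(names: Iterable[str]) -> List[str]:
    """Names in a change file that the configuration processor will not change."""
    return sorted(set(names) & IMMUTABLE)
```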

5.8 Reconfiguring the Identity service #

5.8.1 Updating the keystone Identity Service #

This topic explains configuration options for the Identity service.

SUSE OpenStack Cloud lets you perform updates on the following parts of the Identity service configuration:

Any content in the main keystone configuration file, /etc/keystone/keystone.conf. This lets you manipulate keystone configuration parameters. Next, continue with Section 5.8.2, “Updating the Main Identity service Configuration File”.

Updating certain configuration options and enabling features, such as:

Verbosity of logs being written to keystone log files.

Process counts for the Apache2 WSGI module, separately for admin and public keystone interfaces.

Enabling/disabling auditing.

Enabling/disabling Fernet tokens.

For more information, see Section 5.8.3, “Enabling Identity Service Features”.

Creating and updating domain-specific configuration files: /etc/keystone/domains/keystone.<domain_name>.conf. This lets you integrate keystone with one or more external authentication sources, such as an LDAP server. See Section 5.9, “Integrating LDAP with the Identity Service”.

5.8.2 Updating the Main Identity service Configuration File #

The main keystone Identity service configuration file (/etc/keystone/keystone.conf), located on each control plane server, is generated from the following template file located on a Cloud Lifecycle Manager:

~/openstack/my_cloud/config/keystone/keystone.conf.j2

Modify this template file as appropriate. See the keystone Liberty documentation for full descriptions of all settings. This is a Jinja2 template, which expects certain template variables to be set. Do not change values inside double curly braces: {{ }}.

Note: SUSE OpenStack Cloud 9 has the following token expiration setting, which differs from the upstream value of 3600:

[token]
expiration = 14400

After you modify the template, commit the change to the local git repository, and rerun the configuration processor / deployment area preparation playbooks (as suggested in Chapter 22, Using Git for Configuration Management):

ardana > cd ~/openstack
ardana > git checkout site
ardana > git add my_cloud/config/keystone/keystone.conf.j2
ardana > git commit -m "Adjusting some parameters in keystone.conf"
ardana > cd ~/openstack/ardana/ansible
ardana > ansible-playbook -i hosts/localhost config-processor-run.yml
ardana > ansible-playbook -i hosts/localhost ready-deployment.yml

Run the reconfiguration playbook in the deployment area:

ardana > cd ~/scratch/ansible/next/ardana/ansible
ardana > ansible-playbook -i hosts/verb_hosts keystone-reconfigure.yml

5.8.3 Enabling Identity Service Features #

To enable or disable keystone features, do the following:

Adjust respective parameters in ~/openstack/my_cloud/config/keystone/keystone_deploy_config.yml

Commit the change into local git repository, and rerun the configuration processor/deployment area preparation playbooks (as suggested in Chapter 22, Using Git for Configuration Management):

ardana > cd ~/openstack
ardana > git checkout site
ardana > git add my_cloud/config/keystone/keystone_deploy_config.yml
ardana > git commit -m "Adjusting some WSGI or logging parameters for keystone"
ardana > cd ~/openstack/ardana/ansible
ardana > ansible-playbook -i hosts/localhost config-processor-run.yml
ardana > ansible-playbook -i hosts/localhost ready-deployment.yml

Run the reconfiguration playbook in the deployment area:

ardana > cd ~/scratch/ansible/next/ardana/ansible
ardana > ansible-playbook -i hosts/verb_hosts keystone-reconfigure.yml

5.8.4 Fernet Tokens #

SUSE OpenStack Cloud 9 supports Fernet tokens by default. The benefit of using Fernet tokens is that tokens are not persisted in a database, which is helpful if you want to deploy the keystone Identity service as one master and multiple slaves; only roles, projects, and other details are replicated from master to slaves. The token table is not replicated.

Tempest does not work with Fernet tokens in SUSE OpenStack Cloud 9. If Fernet tokens are enabled, do not run token tests in Tempest.

During reconfiguration when switching to a Fernet token provider, or during Fernet key rotation, you may see a warning in keystone.log stating [fernet_tokens] key_repository is world readable: /etc/keystone/fernet-keys/. This is expected, and you can safely ignore this message. For other keystone operations, this warning is not displayed. Directory permissions are set to 600 (read/write by owner only), not world readable.

Fernet token-signing key rotation is handled by a cron job configured on one of the controllers. The controller with the Fernet token-signing key rotation cron job is also known as the Fernet Master node. By default, the Fernet token-signing key is rotated once every 24 hours. At each rotation, the Fernet token-signing keys are distributed from the Fernet Master node to the rest of the controllers, so the keys are consistent across all controllers at all times.
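Upstream keystone numbers the files in its Fernet key repository as follows:

- Key 0 is the staged key, which is created ahead of time.
- The highest-numbered key is the primary key, which encrypts new tokens.
- All remaining keys are still accepted for decryption.
- A rotation promotes 0 to the next number, creates a new 0, and purges the oldest keys beyond max_active_keys.

The Python sketch below models only this file numbering. It does not model key material or the distribution from the Fernet Master to the other controllers. The max_active_keys default of 3 is keystone's upstream default, assumed here because this guide does not state the SUSE OpenStack Cloud value.

```python
from typing import Set


def primary_key(keys: Set[int]) -> int:
    """The highest-numbered key encrypts new tokens."""
    return max(keys)


def rotate(keys: Set[int], max_active_keys: int = 3) -> Set[int]:
    """Simulate one rotation of a Fernet key repository (file numbers only).

    Key 0 (staged) is promoted to max + 1, a new staged key 0 is created,
    and the oldest keys are purged so that at most max_active_keys remain,
    counting the new staged key.
    """
    if 0 not in keys:
        raise ValueError("repository must contain a staged key 0")
    new_primary = max(keys) + 1
    # Existing non-staged keys plus the newly promoted key, newest first.
    active = sorted((keys - {0}) | {new_primary}, reverse=True)
    # Keep the newest max_active_keys - 1 of them; the new staged key 0 fills the last slot.
    return set(active[: max_active_keys - 1]) | {0}


keys = {0, 1}  # repository right after initial setup
for _ in range(3):
    keys = rotate(keys)
    print(sorted(keys), "primary:", primary_key(keys))
```

With three keys, a key remains usable for decryption for two rotation periods after it becomes primary. A token issued just before a rotation therefore stays valid for at least one more 24-hour period, which is longer than the 14400-second token expiration configured in SUSE OpenStack Cloud 9.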

When you enable the Fernet token provider for the first time, specific steps are needed to set up the mechanisms for distributing Fernet token-signing keys.

Set keystone_configure_fernet to True in ~/openstack/my_cloud/config/keystone/keystone_deploy_config.yml.

Run the following commands to commit your change in Git and enable Fernet:

ardana > cd ~/openstack
ardana > git add my_cloud/config/keystone/keystone_deploy_config.yml
ardana > git commit -m "enable Fernet token provider"
ardana > cd ~/openstack/ardana/ansible
ardana > ansible-playbook -i hosts/localhost config-processor-run.yml
ardana > ansible-playbook -i hosts/localhost ready-deployment.yml
ardana > cd ~/scratch/ansible/next/ardana/ansible
ardana > ansible-playbook -i hosts/verb_hosts keystone-deploy.yml

When the Fernet token provider is enabled, a Fernet Master alarm definition is also created in monasca to monitor the Fernet Master node. If the Fernet Master node is offline or unreachable, a CRITICAL alarm is raised so that the Cloud Admin can take corrective action. If the Fernet Master node is offline for a prolonged period of time, Fernet token-signing key rotation is not performed, which may introduce security risks to the cloud. The Cloud Admin must take immediate action to restore the Fernet Master node.

5.9 Integrating LDAP with the Identity Service #

5.9.1 Integrating with an external LDAP server #

The keystone identity service provides two primary functions: user authentication and access authorization. The user authentication function validates a user's identity. keystone has a very basic user management system that can be used to create and manage user login and password credentials, but this system is intended only for proof of concept deployments because its password control functions are very limited. The internal identity service user management system is also commonly used to store and authenticate OpenStack-specific service account information.

The recommended source of authentication is an external user management system such as an LDAP directory service. The identity service can be configured to connect to and use external systems as the source of user authentication. The identity service domain construct is used to define different authentication sources based on domain membership. For example, a cloud deployment could consist of as few as two domains:

The default domain that is pre-configured for the service account users that are authenticated directly against the identity service internal user management system

A customer-defined domain that contains all user projects and membership definitions. This domain can then be configured to use an external LDAP directory such as Microsoft Active Directory as the authentication source.

SUSE OpenStack Cloud can support multiple domains for deployments that support multiple tenants. Multiple domains can be created with each domain configured to either the same or different external authentication sources. This deployment model is known as a "per-domain" model.

There are currently two ways to configure "per-domain" authentication sources:

File store – each domain configuration is created and stored in separate text files. This is the older and current default method for defining domain configurations.

Database store – each domain configuration can be created using either the identity service manager utility (recommended) or a Domain Admin API (from OpenStack.org), and the results are stored in the identity service MariaDB database. This database store is a new method introduced in the OpenStack Kilo release and now available in SUSE OpenStack Cloud.

Instructions for initially creating per-domain configuration files and then migrating to the Database store method via the identity service manager utility are provided as follows.

5.9.2 Set up domain-specific driver configuration - file store #

To update configuration to a specific LDAP domain:

Ensure that the following configuration options are in the main configuration file template: ~/openstack/my_cloud/config/keystone/keystone.conf.j2

[identity]
domain_specific_drivers_enabled = True
domain_configurations_from_database = False

Create a YAML file that contains the definition of the LDAP server connection. The sample file below is already provided on the Cloud Lifecycle Manager as part of the configuration Git repository described in Chapter 22, Using Git for Configuration Management. It is available on the Cloud Lifecycle Manager in the following file:

~/openstack/my_cloud/config/keystone/keystone_configure_ldap_sample.yml

Save a copy of this file with a new name, for example:

~/openstack/my_cloud/config/keystone/keystone_configure_ldap_my.yml

Note: Refer to the LDAP section of the keystone configuration example for OpenStack for the full list and description of options.

Below are sample YAML configurations for identity service LDAP settings, including certificate settings, optimized for Microsoft Active Directory servers.

Sample YAML configuration keystone_configure_ldap_my.yml

---
keystone_domainldap_conf:

  # CA certificates file content.
  # Certificates are stored in Base64 PEM format. This may be entire LDAP server
  # certificate (in case of self-signed certificates), certificate of authority
  # which issued LDAP server certificate, or a full certificate chain (Root CA
  # certificate, intermediate CA certificate(s), issuer certificate).
  #
  cert_settings:
    cacert: |
      -----BEGIN CERTIFICATE-----

      certificate appears here

      -----END CERTIFICATE-----

  # A domain will be created in MariaDB with this name, and associated with ldap back end.
  # Installer will also generate a config file named /etc/keystone/domains/keystone.<domain_name>.conf
  #
  domain_settings:
    name: ad
    description: Dedicated domain for ad users

  conf_settings:
    identity:
      driver: ldap

    # For a full list and description of ldap configuration options, please refer to
    # https://github.com/openstack/keystone/blob/master/etc/keystone.conf.sample or
    # http://docs.openstack.org/liberty/config-reference/content/keystone-configuration-file.html.
    #
    # Please note:
    #  1. LDAP configuration is read-only. Configuration which performs write operations (i.e. creates users, groups, etc)
    #     is not supported at the moment.
    #  2. LDAP is only supported for identity operations (reading users and groups from LDAP). Assignment
    #     operations with LDAP (i.e. managing roles, projects) are not supported.
    #  3. LDAP is configured as non-default domain. Configuring LDAP as a default domain is not supported.
    #
    ldap:
      url: ldap://ad.hpe.net
      suffix: DC=hpe,DC=net
      query_scope: sub
      user_tree_dn: CN=Users,DC=hpe,DC=net
      user: CN=admin,CN=Users,DC=hpe,DC=net
      password: REDACTED
      user_objectclass: user
      user_id_attribute: cn
      user_name_attribute: cn
      group_tree_dn: CN=Users,DC=hpe,DC=net
      group_objectclass: group
      group_id_attribute: cn
      group_name_attribute: cn
      use_pool: True
      user_enabled_attribute: userAccountControl
      user_enabled_mask: 2
      user_enabled_default: 512
      use_tls: True
      tls_req_cert: demand
      # if you are configuring multiple LDAP domains, and LDAP server certificates are issued
      # by different authorities, make sure that you place certs for all the LDAP backend domains in the
      # cacert parameter as seen in this sample yml file so that all the certs are combined in a single CA file
      # and every LDAP domain configuration points to the combined CA file.
      # Note:
      # 1. Please be advised that every time a new ldap domain is configured, the single CA file gets overwritten
      # and hence ensure that you place certs for all the LDAP backend domains in the cacert parameter.
      # 2. There is a known issue on one cert per CA file per domain when the system processes
      # concurrent requests to multiple LDAP domains. Using the single CA file with all certs combined
      # shall get the system working properly*.
      tls_cacertfile: /etc/keystone/ssl/certs/all_ldapdomains_ca.pem

      # The issue is in the underlying SSL library. Upstream is not investing in python-ldap package anymore.
      # It is also not python3 compliant.

keystone_domain_MSAD_conf:

  # CA certificates file content.
  # Certificates are stored in Base64 PEM format. This may be entire LDAP server
  # certificate (in case of self-signed certificates), certificate of authority
  # which issued LDAP server certificate, or a full certificate chain (Root CA
  # certificate, intermediate CA certificate(s), issuer certificate).
  #
  cert_settings:
    cacert: |
      -----BEGIN CERTIFICATE-----

      certificate appears here

      -----END CERTIFICATE-----

  # A domain will be created in MariaDB with this name, and associated with ldap back end.
  # Installer will also generate a config file named /etc/keystone/domains/keystone.<domain_name>.conf
  #
  domain_settings:
    name: msad
    description: Dedicated domain for msad users

  conf_settings:
    identity:
      driver: ldap

    # For a full list and description of ldap configuration options, please refer to
    # https://github.com/openstack/keystone/blob/master/etc/keystone.conf.sample or
    # http://docs.openstack.org/liberty/config-reference/content/keystone-configuration-file.html.
    #
    # Please note:
    #  1. LDAP configuration is read-only. Configuration which performs write operations (i.e. creates users, groups, etc)
    #     is not supported at the moment.
    #  2. LDAP is only supported for identity operations (reading users and groups from LDAP). Assignment
    #     operations with LDAP (i.e. managing roles, projects) are not supported.
    #  3. LDAP is configured as non-default domain. Configuring LDAP as a default domain is not supported.
    #
    ldap:
      # If the url parameter is set to ldap then typically use_tls should be set to True. If
      # url is set to ldaps, then use_tls should be set to False.
      url: ldaps://10.16.22.5
      use_tls: False
      query_scope: sub
      user_tree_dn: DC=l3,DC=local
      # this is the user and password for the account that has access to the AD server
      user: administrator@l3.local
      password: OpenStack123
      user_objectclass: user
      # For a default Active Directory schema this is where to find the user name, openldap uses a different value
      user_id_attribute: userPrincipalName
      user_name_attribute: sAMAccountName
      group_tree_dn: DC=l3,DC=local
      group_objectclass: group
      group_id_attribute: cn
      group_name_attribute: cn
      # An upstream defect requires use_pool to be set false
      use_pool: False
      user_enabled_attribute: userAccountControl
      user_enabled_mask: 2
      user_enabled_default: 512
      tls_req_cert: allow
      # Referrals may contain urls that can't be resolved and will cause timeouts, ignore them
      chase_referrals: False
      # if you are configuring multiple LDAP domains, and LDAP server certificates are issued
      # by different authorities, make sure that you place certs for all the LDAP backend domains in the
      # cacert parameter as seen in this sample yml file so that all the certs are combined in a single CA file
      # and every LDAP domain configuration points to the combined CA file.
      # Note:
      # 1. Please be advised that every time a new ldap domain is configured, the single CA file gets overwritten
      # and hence ensure that you place certs for all the LDAP backend domains in the cacert parameter.
      # 2. There is a known issue on one cert per CA file per domain when the system processes
      # concurrent requests to multiple LDAP domains. Using the single CA file with all certs combined
      # shall get the system working properly.
      tls_cacertfile: /etc/keystone/ssl/certs/all_ldapdomains_ca.pem

As suggested in Chapter 22, Using Git for Configuration Management, commit the new file to the local git repository, and rerun the configuration processor and ready deployment playbooks:

ardana > cd ~/openstack
ardana > git checkout site
ardana > git add my_cloud/config/keystone/keystone_configure_ldap_my.yml
ardana > git commit -m "Adding LDAP server integration config"
ardana > cd ~/openstack/ardana/ansible
ardana > ansible-playbook -i hosts/localhost config-processor-run.yml
ardana > ansible-playbook -i hosts/localhost ready-deployment.yml

Run the reconfiguration playbook in a deployment area, passing the YAML file created in the previous step as a command-line option:

ardana > cd ~/scratch/ansible/next/ardana/ansible
ardana > ansible-playbook -i hosts/verb_hosts keystone-reconfigure.yml -e@~/openstack/my_cloud/config/keystone/keystone_configure_ldap_my.yml

Follow these same steps for each LDAP domain with which you are integrating the identity service, creating a YAML file for each and running the reconfigure playbook once for each additional domain.
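The comments in the samples ask you to place the certificates for all LDAP back-end domains in every cacert parameter, because each reconfiguration overwrites the single all_ldapdomains_ca.pem file. A hypothetical Python helper such as the following can merge the PEM text from several domains (single certificates or full chains) into one block, dropping exact duplicates, so that you can paste the same content into each domain's YAML file.

```python
import re

# Matches one PEM certificate, including its BEGIN/END marker lines.
PEM_BLOCK = re.compile(
    r"-----BEGIN CERTIFICATE-----.*?-----END CERTIFICATE-----", re.DOTALL
)


def combine_pem_certs(pem_texts):
    """Merge PEM certificates from several inputs into one bundle string.

    Hypothetical helper: keeps the first occurrence of each certificate
    in input order and raises ValueError for input with no certificate.
    """
    seen = set()
    blocks = []
    for text in pem_texts:
        found = PEM_BLOCK.findall(text)
        if not found:
            raise ValueError("no PEM certificate found in input")
        for block in found:
            if block not in seen:
                seen.add(block)
                blocks.append(block)
    return "\n".join(blocks) + "\n"
```

For example, read the CA files for the ad and msad domains (file names are up to you), pass their contents to combine_pem_certs, and use the result as the cacert value in both YAML files.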

To verify that a new domain was created for LDAP (Microsoft AD in this example), first set environment variables for admin-level access:

ardana > source keystone.osrc

Get a list of domains:

ardana > openstack domain list

The output is similar to the following:

+----------------------------------+---------+---------+----------------------------------------------------------------------+
| ID                               | Name    | Enabled | Description                                                          |
+----------------------------------+---------+---------+----------------------------------------------------------------------+
| 6740dbf7465a4108a36d6476fc967dbd | heat    | True    | Owns users and projects created by heat                              |
| default                          | Default | True    | Owns users and tenants (i.e. projects) available on Identity API v2. |
| b2aac984a52e49259a2bbf74b7c4108b | ad      | True    | Dedicated domain for users managed by Microsoft AD server            |
+----------------------------------+---------+---------+----------------------------------------------------------------------+
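If you script this check, the OpenStack CLI can emit machine-readable output with -f json. The following Python sketch runs openstack domain list -f json and looks up a domain by name. It assumes the admin environment from keystone.osrc is already loaded in the calling shell and that the JSON rows use the same column names as the table above (ID, Name, Enabled, Description); the function names are hypothetical.

```python
import json
import subprocess


def list_domains_json():
    """Run `openstack domain list -f json` (source keystone.osrc first)."""
    result = subprocess.run(
        ["openstack", "domain", "list", "-f", "json"],
        check=True, capture_output=True, text=True,
    )
    return result.stdout


def find_domain(domain_list_json, name):
    """Return the row whose Name matches, or None if the domain is missing."""
    # Assumes the JSON rows use the table's column names as keys.
    for row in json.loads(domain_list_json):
        if row.get("Name") == name:
            return row
    return None
```

For example, find_domain(list_domains_json(), "ad") returns the row for the ad domain, or None if the reconfigure playbook did not create it.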

Note: The LDAP domain is read-only. This means that you cannot create new user or group records in it.

Once the LDAP user is granted the appropriate role, you can authenticate within the specified domain. Set environment variables for admin-level access:

ardana > source keystone.osrc

Get the user record within the ad (Active Directory) domain:

ardana > openstack user show testuser1 --domain ad

Note the output:

+-----------+------------------------------------------------------------------+
| Field     | Value                                                            |
+-----------+------------------------------------------------------------------+
| domain_id | 143af847018c4dc7bd35390402395886                                 |
| id        | e6d8c90abdc4510621271b73cc4dda8bc6009f263e421d8735d5f850f002f607 |
| name      | testuser1                                                        |
+-----------+------------------------------------------------------------------+
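The user ID shown above is a 64-character hexadecimal string rather than the LDAP user name. This is consistent with keystone mapping each LDAP entity to a generated public ID. The Python sketch below assumes keystone's default SHA-256 ID generator, which hashes the values of domain_id, entity_type and local_id in sorted key order, where local_id is the value of user_id_attribute (cn in the ad sample). It illustrates the idea only and is not guaranteed to reproduce the ID from your deployment.

```python
import hashlib


def ldap_public_id(domain_id, local_id, entity_type="user"):
    """Sketch of a SHA-256 public ID for an LDAP-backed user or group.

    Assumption: keystone's default sha256 generator hashes the mapping
    values in sorted key order (domain_id, entity_type, local_id).
    """
    mapping = {
        "domain_id": domain_id,
        "entity_type": entity_type,
        "local_id": local_id,
    }
    digest = hashlib.sha256()
    for key in sorted(mapping):
        digest.update(mapping[key].encode("utf-8"))
    return digest.hexdigest()
```

Because the ID depends on the domain as well as the LDAP attribute value, the same user name in two LDAP domains yields two different keystone user IDs.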

Next, get a list of LDAP groups:

ardana > openstack group list --domain ad

The output lists testgroup1 and testgroup2:

+------------------------------------------------------------------+------------+
| ID                                                               | Name       |
+------------------------------------------------------------------+------------+
| 03976b0ea6f54a8e4c0032e8f756ad581f26915c7e77500c8d4aaf0e83afcdc6 | testgroup1 |
| 7ba52ee1c5829d9837d740c08dffa07ad118ea1db2d70e0dc7fa7853e0b79fcf | testgroup2 |
+------------------------------------------------------------------+------------+

Create a new role. Note that the role is not bound to the domain.

ardana > openstack role create testrole1

The role testrole1 has been created:

+-------+----------------------------------+
| Field | Value                            |
+-------+----------------------------------+
| id    | 02251585319d459ab847409dea527dee |
| name  | testrole1                        |
+-------+----------------------------------+
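Because the role grant in a non-default domain must use the user ID rather than the user name, you may want to look up the ID and build the command in one step. The Python sketch below parses openstack user show ... -f json output, assumed to use the lowercase field names from the user record shown earlier (domain_id, id, name), and then runs openstack role add. The function names are hypothetical.

```python
import json
import subprocess


def role_add_command(user_show_json, role, domain):
    """Build an `openstack role add` argv that uses the user ID, not the name."""
    # Assumes `openstack user show -f json` returns an "id" key.
    user_id = json.loads(user_show_json)["id"]
    return ["openstack", "role", "add", role, "--user", user_id, "--domain", domain]


def grant_domain_role(user_name, role, domain):
    """Look up the user's ID in the domain, then grant the role.

    Hypothetical helper; requires the admin environment from keystone.osrc.
    """
    shown = subprocess.run(
        ["openstack", "user", "show", user_name, "--domain", domain, "-f", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    subprocess.run(role_add_command(shown, role, domain), check=True)
```

For example, grant_domain_role("testuser1", "testrole1", "ad") performs the lookup and the grant described in the next step.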

Grant the user a role within the domain by executing the code below. Note that due to a current OpenStack CLI limitation, you must use the user ID rather than the user name when working with a non-default domain.

ardana > openstack role add testrole1 --user e6d8c90abdc4510621271b73cc4dda8bc6009f263e421d8735d5f850f002f607 --domain ad

Verify that the role was successfully granted, as shown here:

ardana > openstack role assignment list --user e6d8c90abdc4510621271b73cc4dda8bc6009f263e421d8735d5f850f002f607 --domain ad

+----------------------------------+------------------------------------------------------------------+-------+---------+----------------------------------+
| Role                             | User                                                             | Group | Project | Domain                           |
+----------------------------------+------------------------------------------------------------------+-------+---------+----------------------------------+
| 02251585319d459ab847409dea527dee | e6d8c90abdc4510621271b73cc4dda8bc6009f263e421d8735d5f850f002f607 |       |         | 143af847018c4dc7bd35390402395886 |
+----------------------------------+------------------------------------------------------------------+-------+---------+----------------------------------+

Authenticate (get a domain-scoped token) as the new user with the new role. The --os-* command-line parameters specified below override the corresponding OS_* environment variables that the keystone.osrc script sets to provide admin access. To ensure that the command below runs in a clean environment, you may want to log out of the node and log in again.

ardana > openstack --os-identity-api-version 3 \
  --os-username testuser1 \
  --os-password testuser1_password \
  --os-auth-url http://10.0.0.6:35357/v3 \
  --os-domain-name ad \
  --os-user-domain-name ad \
  token issue

Here is the result:

+-----------+------------------------------------------------------------------+
| Field     | Value                                                            |
+-----------+------------------------------------------------------------------+
| domain_id | 143af847018c4dc7bd35390402395886                                 |
| expires   | 2015-09-09T21:36:15.306561Z                                      |
| id        | 6f8f9f1a932a4d01b7ad9ab061eb0917                                 |
| user_id   | e6d8c90abdc4510621271b73cc4dda8bc6009f263e421d8735d5f850f002f607 |
+-----------+------------------------------------------------------------------+
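The CLI call above requests a domain-scoped token through Identity API v3. As a sketch of the underlying request, the following Python code posts a password-authentication body with a domain scope to <auth_url>/auth/tokens using only the standard library; keystone returns the token ID in the X-Subject-Token response header. The function names are hypothetical, the auth URL and credentials would be the example values from this section, and error handling is omitted.

```python
import json
import urllib.request


def domain_scoped_auth_body(username, password, domain):
    """Identity API v3 password-auth request body scoped to a domain."""
    return {
        "auth": {
            "identity": {
                "methods": ["password"],
                "password": {
                    "user": {
                        "name": username,
                        "domain": {"name": domain},  # the user's own domain
                        "password": password,
                    }
                },
            },
            "scope": {"domain": {"name": domain}},  # request a domain-scoped token
        }
    }


def issue_domain_token(auth_url, username, password, domain):
    """POST to <auth_url>/auth/tokens and return the X-Subject-Token header."""
    body = domain_scoped_auth_body(username, password, domain)
    request = urllib.request.Request(
        auth_url.rstrip("/") + "/auth/tokens",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return response.headers["X-Subject-Token"]
```

For example, issue_domain_token("http://10.0.0.6:35357/v3", "testuser1", "testuser1_password", "ad") would return the token ID. For a project-scoped token, the scope object names a project (with its domain) instead of a domain.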

Users can also have a project within the domain and get a project-scoped token. To accomplish this, set environment variables for admin-level access:

ardana > source keystone.osrc

Then create a new project within the domain: