10 Managing Networking

Information about managing and configuring the Networking service.

10.1 SUSE OpenStack Cloud Firewall

Firewall as a Service (FWaaS) provides network-level port security for all traffic entering an existing tenant network. More information on this service is available in the public OpenStack documentation at http://specs.openstack.org/openstack/neutron-specs/specs/api/firewall_as_a_service__fwaas_.html. The following sections give command-line examples for configuring and testing a SUSE OpenStack Cloud firewall. FWaaS can also be configured and managed through the horizon web interface.

With SUSE OpenStack Cloud, FWaaS is implemented directly in the L3 agent (neutron-l3-agent). However, if VPNaaS is enabled, FWaaS is implemented in the VPNaaS agent (neutron-vpn-agent). Because FWaaS does not use a separate agent process or start a specific service, there are currently no monasca alarms for it.

If DVR is enabled, the firewall service currently does not filter traffic between OpenStack private networks (east-west traffic). It only filters traffic from external networks (north-south traffic).

The L3 agent must be restarted on each compute node hosting a DVR router whenever a firewall is added or removed. This condition applies only when updating existing instances connected to DVR routers. For more information, see the upstream bug.

10.1.1 Overview of the SUSE OpenStack Cloud Firewall configuration

The following instructions provide information about how to identify and modify the overall SUSE OpenStack Cloud firewall that is configured in front of the control services. This firewall is administered only by a cloud admin and is not available for tenant use for private network firewall services.

During the installation process, the configuration processor will automatically generate "allow" firewall rules for each server based on the services deployed and block all other ports. These rules are populated in ~/openstack/my_cloud/info/firewall_info.yml, which includes a list of all the ports by network, including the addresses on which the ports will be opened. This is described in more detail in Section 5.2.10.5, “Firewall Configuration”.

The firewall_rules.yml file in the input model allows you to define additional rules for each network group. You can read more about this in Section 6.15, “Firewall Rules”.

The purpose of this document is to show you how to make post-installation changes to the firewall rules if the need arises.

This process is not to be confused with Firewall-as-a-Service, a separate service that enables SUSE OpenStack Cloud tenants to create north-south, network-level firewalls that provide stateful protection to all instances in a private tenant network. This service is optional and is tenant-configured.

10.1.2 SUSE OpenStack Cloud 9 FWaaS Configuration

Check for an enabled firewall.

Check whether the firewall is enabled. The output of openstack extension list should contain a firewall entry.

openstack extension list
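This check can also be scripted. The following is a minimal sketch. It assumes the FWaaS extension alias starts with fwaas (for example fwaas or fwaas_v2, depending on the FWaaS version deployed). has_firewall_extension is a hypothetical helper name, not part of SUSE OpenStack Cloud.

```bash
#!/usr/bin/env bash
# Sketch: succeed if the Networking API advertises a firewall extension.
# Assumption: the FWaaS alias begins with "fwaas"; check the alias your
# cloud actually reports. has_firewall_extension is a hypothetical name.
has_firewall_extension() {
    # "-f value -c Alias" prints one extension alias per line.
    openstack extension list --network -f value -c Alias | grep -q '^fwaas'
}
```

For example, run has_firewall_extension && echo "FWaaS is enabled" after sourcing your credentials.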

Assuming the admin has already created the external network, the following command shows it:

openstack network list

Create required assets.

Before creating firewalls, you will need to create a network, subnet, router, security group rules, start an instance and assign it a floating IP address.

Create the network, subnet and router.

openstack network create private
openstack subnet create --network private --subnet-range 10.0.0.0/24 --gateway 10.0.0.1 sub
openstack router create router
openstack router add subnet router sub
openstack router set --external-gateway ext-net router

Create security group rules. Security group rules filter traffic at the VM level.

openstack security group rule create --protocol icmp default
openstack security group rule create --protocol tcp --dst-port 22 default
openstack security group rule create --protocol tcp --dst-port 80 default

Boot a VM.

NET=$(openstack network show -f value -c id private)
openstack server create --flavor 1 --image <image> --nic net-id=$NET --wait vm1

Verify that the instance is ACTIVE and has been assigned an IP address.

openstack server list
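If you boot instances from a script, you may prefer to poll for the ACTIVE state explicitly. This is only a sketch. wait_for_server_status is a hypothetical helper, and its 120-second default timeout and 5-second interval are assumptions, not SUSE OpenStack Cloud values.

```bash
#!/usr/bin/env bash
# Sketch: poll a server until it reaches the wanted status or a timeout
# expires. wait_for_server_status and its defaults are hypothetical.
wait_for_server_status() {
    local server=$1 wanted=${2:-ACTIVE} timeout=${3:-120} interval=${4:-5}
    local elapsed=0 status
    while (( elapsed < timeout )); do
        status=$(openstack server show -f value -c status "$server")
        if [[ $status == "$wanted" ]]; then
            return 0
        fi
        # Fail fast: a server in ERROR will not recover by waiting.
        if [[ $status == ERROR ]]; then
            echo "server $server is in ERROR state" >&2
            return 1
        fi
        sleep "$interval"
        elapsed=$(( elapsed + interval ))
    done
    echo "timed out waiting for $server to become $wanted" >&2
    return 1
}
```

For example, wait_for_server_status vm1 ACTIVE 300 waits up to five minutes for vm1.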

Get the port id of the vm1 instance.

vmportuuid=$(openstack port list --server vm1 -f value -c ID)

Create a floating IP address and associate it with the vm1 instance.

openstack floating ip create --port $vmportuuid ext-net

Verify that the floating IP is assigned to the instance. The following command should show an assigned floating IP address from the external network range.

openstack server show vm1

Verify that the instance is reachable from the external network. SSH into the instance from a node that is in, or has a route to, the external network.

ssh cirros@<FIP-VM1>
password: <password>

Create and attach the firewall.

By default, an internal "drop all" rule in iptables drops any packet that does not match one of the defined rules.

Create new firewall rules using the firewall-rule-create command, providing the protocol, action (allow, deny, reject) and name for each new rule.

Firewall actions determine how matching traffic is handled. An allow rule lets traffic pass through the firewall. Deny drops the traffic and prevents it from passing through. Reject also blocks the traffic but returns a destination-unreachable response. Using reject dramatically shortens failure detection for legitimate users, since they do not have to wait for retransmission timeouts or submit retries. Where hostile attackers may attempt port scanning or similar techniques, deny is the better choice: because it silently drops all packets, it gives attackers less information. Deny is the default firewall action.

The example below shows how to allow ICMP and SSH while denying access to HTTP. See the OpenStackClient command-line reference at https://docs.openstack.org/python-openstackclient/rocky/ for additional options such as source IP, destination IP, source port and destination port.

Note: You can create firewall rules with identical names, and each rule receives its own unique ID. For clarity, however, this is not recommended.

neutron firewall-rule-create --protocol icmp --action allow --name allow-icmp
neutron firewall-rule-create --protocol tcp --destination-port 80 --action deny --name deny-http
neutron firewall-rule-create --protocol tcp --destination-port 22 --action allow --name allow-ssh

Once the rules are created, create the firewall policy with the firewall-policy-create command. Pass the rules to include, in quotes, to the --firewall-rules option, followed by the name of the new policy. The order of the rules is important, because rules are evaluated in order and the first matching rule determines the action.

neutron firewall-policy-create --firewall-rules "allow-icmp deny-http allow-ssh" policy-fw
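To show why the order matters, the sketch below evaluates a packet against the example policy-fw rules in order. It returns the first matching action and falls back to the implicit drop-all rule. This is a simplified model, not how FWaaS is implemented. The "name:protocol:port:action" encoding, POLICY_FW and fw_first_match are hypothetical, and source and destination addresses are ignored.

```bash
#!/usr/bin/env bash
# Sketch: first-match evaluation of an ordered firewall policy.
# Hypothetical encoding "name:protocol:port:action", mirroring policy-fw.
POLICY_FW=(
    "allow-icmp:icmp:any:allow"
    "deny-http:tcp:80:deny"
    "allow-ssh:tcp:22:allow"
)

fw_first_match() {
    local proto=$1 port=${2:-any} rule name r_proto r_port action
    for rule in "${POLICY_FW[@]}"; do
        IFS=: read -r name r_proto r_port action <<< "$rule"
        # A rule matches when the protocol matches and its port is either
        # unrestricted ("any") or equal to the packet's destination port.
        if [[ $r_proto == "$proto" && ( $r_port == any || $r_port == "$port" ) ]]; then
            echo "$action ($name)"
            return 0
        fi
    done
    # No rule matched: the implicit drop-all rule applies.
    echo "deny (implicit drop-all)"
}
```

In this model, fw_first_match tcp 80 returns "deny (deny-http)". If a rule allowing all TCP traffic were inserted before deny-http, the same call would return that allow rule instead.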

Finish creating the firewall with the firewall-create command, passing the policy name and the name for your new firewall.

neutron firewall-create policy-fw --name user-fw

You can view the details of your new firewall with the firewall-show command and the name of your firewall. Use it to verify that the status of the firewall is ACTIVE.

neutron firewall-show user-fw

Verify the FWaaS is functional.

Because the allow-icmp firewall rule is set, you can ping the floating IP address of the instance from the external network.

ping <FIP-VM1>

Similarly, you can connect via ssh to the instance due to the allow-ssh firewall rule.

ssh cirros@<FIP-VM1>
password: <password>

Run a web server on the vm1 instance that listens on port 80, accepts requests and sends a Welcome response.

$ vi webserv.sh

#!/bin/sh
# cirros is busybox-based, so use /bin/sh rather than bash.
# Find the instance's IP address on eth0 (cirros ifconfig output format).
MYIP=$(/sbin/ifconfig eth0 | grep 'inet addr' | awk -F: '{print $2}' | awk '{print $1}')
while true; do
    # An empty line separates the HTTP status line from the body.
    printf 'HTTP/1.0 200 OK\r\n\r\nWelcome to %s\n' "$MYIP" | sudo nc -l -p 80
done

# Give it exec rights
$ chmod 755 webserv.sh
# Execute the script
$ ./webserv.sh

You should expect curl to fail on port 80 because of the deny-http firewall rule. If curl succeeds, the firewall is not blocking incoming HTTP requests.

curl -vvv <FIP-VM1>
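To turn this check into a pass/fail test, a small wrapper can treat a failed request as success. This is a sketch. expect_http_blocked is a hypothetical helper, and the 5-second limit is an arbitrary choice.

```bash
#!/usr/bin/env bash
# Sketch: succeed only if an HTTP request to the floating IP fails, as the
# deny-http rule should cause. expect_http_blocked is hypothetical.
expect_http_blocked() {
    local fip=$1
    # --max-time bounds the wait: deny silently drops packets, so without
    # it curl would hang until its own connect timeout.
    if curl --silent --output /dev/null --max-time 5 "http://$fip/"; then
        echo "FAIL: HTTP to $fip succeeded; port 80 is not blocked" >&2
        return 1
    fi
    echo "OK: HTTP to $fip is blocked"
}
```

For example, expect_http_blocked <FIP-VM1> should print an OK line while the deny-http rule is in the policy.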

When using the reference implementation, new networks, floating IPs and routers created after the firewall is created are not automatically updated with firewall rules. Therefore, run the firewall-update command and pass both the current and the new router IDs so that the rules are reconfigured across all routers.

For example, if router-1 was created before the firewall and router-2 after it:

$ neutron firewall-update --router <router-1-id> --router <router-2-id> <firewall-name>
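When many routers exist, the --router arguments can be collected automatically. This sketch assumes, as the text above implies, that --router sets the firewall's complete router list, so every router visible to the project is passed. fw_update_all_routers is a hypothetical helper. Review the router list before using it, because routers you did not intend to protect would be included.

```bash
#!/usr/bin/env bash
# Sketch: re-attach a firewall to every router visible to the project, so
# routers created after the firewall are covered too. Hypothetical helper.
fw_update_all_routers() {
    local fw=$1 id
    local -a args=()
    # "-f value -c ID" prints one router ID per line.
    while read -r id; do
        if [[ -n $id ]]; then
            args+=(--router "$id")
        fi
    done < <(openstack router list -f value -c ID)
    if (( ${#args[@]} == 0 )); then
        echo "no routers found" >&2
        return 1
    fi
    neutron firewall-update "${args[@]}" "$fw"
}
```

For example, fw_update_all_routers user-fw.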

10.1.3 Making Changes to the Firewall Rules

Log in to your Cloud Lifecycle Manager.

Edit your ~/openstack/my_cloud/definition/data/firewall_rules.yml file and add the lines needed to allow the required port(s) through the firewall.

In this example, we open the port range 5900-5905 to allow VNC traffic through the firewall:

- name: VNC
  network-groups:
  - MANAGEMENT
  rules:
  - type: allow
    remote-ip-prefix: 0.0.0.0/0
    port-range-min: 5900
    port-range-max: 5905
    protocol: tcp

Note: The example above uses a remote-ip-prefix of 0.0.0.0/0, which opens the ports to all IP ranges. To be more secure, specify the CIDR of the local network from which you will run the VNC connection.

Commit those changes to your local git:

ardana > cd ~/openstack/ardana/ansible
ardana > git add -A
ardana > git commit -m "firewall rule update"

Run the configuration processor:

ardana > cd ~/openstack/ardana/ansible
ardana > ansible-playbook -i hosts/localhost config-processor-run.yml

Create the deployment directory structure:

ardana > cd ~/openstack/ardana/ansible
ardana > ansible-playbook -i hosts/localhost ready-deployment.yml

Change to the deployment directory and run the osconfig-iptables-deploy.yml playbook to update your iptables rules to allow VNC:

ardana > cd ~/scratch/ansible/next/ardana/ansible
ardana > ansible-playbook -i hosts/verb_hosts osconfig-iptables-deploy.yml

You can repeat these steps as needed to add, remove, or edit any of these firewall rules.
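Because these steps are repeated for every rule change, they can be wrapped in one function that stops at the first failure. This is a sketch. apply_firewall_rule_change and the ARDANA_HOME override are hypothetical, while the paths and playbook names are the ones used in the steps above. Note that git commit fails, and so stops the sequence, when there is nothing to commit.

```bash
#!/usr/bin/env bash
# Sketch: commit the input model, then run the config processor,
# ready-deployment and iptables playbooks in order, stopping on failure.
# apply_firewall_rule_change and ARDANA_HOME are hypothetical.
apply_firewall_rule_change() {
    local msg=${1:-"firewall rule update"}
    local home=${ARDANA_HOME:-$HOME}
    # The subshell keeps the caller's working directory unchanged; the &&
    # chain stops at the first command that fails.
    (
        cd "$home/openstack/ardana/ansible" &&
        git add -A &&
        git commit -m "$msg" &&
        ansible-playbook -i hosts/localhost config-processor-run.yml &&
        ansible-playbook -i hosts/localhost ready-deployment.yml &&
        cd "$home/scratch/ansible/next/ardana/ansible" &&
        ansible-playbook -i hosts/verb_hosts osconfig-iptables-deploy.yml
    )
}
```

For example, after editing firewall_rules.yml, run apply_firewall_rule_change "open VNC ports" on the Cloud Lifecycle Manager.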

10.1.4 More Information

Firewalls are based on iptables settings.

Each firewall that is created is known as an instance.

A firewall instance can be deployed on selected project routers. If no specific project router is selected, a firewall instance is automatically applied to all project routers.

Only 1 firewall instance can be applied to a project router.

Only 1 firewall policy can be applied to a firewall instance.

Multiple firewall rules can be added and applied to a firewall policy.

Firewall rules can be shared across different projects via the Share API flag.

Firewall rules supersede the Security Group rules that are applied at the Instance level for all traffic entering or leaving a private, project network.

For more information on the command-line interface (CLI) and firewalls, see the OpenStack networking command-line client reference: https://docs.openstack.org/python-openstackclient/rocky/

10.2 Using VPN as a Service (VPNaaS)

SUSE OpenStack Cloud 9 VPNaaS Configuration

This document describes the configuration process and requirements for the SUSE OpenStack Cloud 9 Virtual Private Network (VPN) as a Service (VPNaaS) module.

10.2.1 Prerequisites

SUSE OpenStack Cloud must be installed.

Before setting up VPNaaS, you will need to have created an external network and a subnet with access to the internet. Information on how to create the external network and subnet can be found in Section 10.2.4, “More Information”.

This document assumes 172.16.0.0/16 as the ext-net CIDR.

10.2.2 Considerations

Using the neutron plugin-based VPNaaS causes additional processes to run on the Network Service Nodes. One of these processes, the ipsec charon process from StrongSwan, runs as root and listens on an external network. A vulnerability in that process can lead to remote root compromise of the Network Service Nodes. If this is a concern, customers should consider using a VPN solution other than the neutron plugin-based VPNaaS and/or deploying additional protection mechanisms.

10.2.3 Configuration

Set up networks.

You can set up VPN as a Service (VPNaaS) by first creating networks, subnets and routers using the OpenStack command line. The VPNaaS module extends access between private networks across two different SUSE OpenStack Cloud clouds, or between a SUSE OpenStack Cloud cloud and a non-cloud network. VPNaaS is based on the open source software application StrongSwan. StrongSwan (more information available at http://www.strongswan.org/) is an IPsec implementation and provides basic VPN gateway functionality.

You can execute the included commands from any shell with access to the service APIs. In the examples, the commands are executed from the Cloud Lifecycle Manager. However, you could also execute them from the controller node or any other shell with access to the service APIs.

Floating IPs cannot be used with the current version of VPNaaS when DVR is enabled. When using a DVR router, ensure that no floating IP is associated with instances that will use VPNaaS. Floating IPs associated with instances are acceptable when using a centralized (CVR) router.

From the Cloud Lifecycle Manager, create the first private network, subnet and router. This assumes that the admin has already created ext-net.

openstack network create privateA
openstack subnet create --network privateA --subnet-range 10.1.0.0/24 --gateway 10.1.0.1 subA
openstack router create router1
openstack router add subnet router1 subA
openstack router set --external-gateway ext-net router1

Create the second private network, subnet and router.

openstack network create privateB
openstack subnet create --network privateB --subnet-range 10.2.0.0/24 --gateway 10.2.0.1 subB
openstack router create router2
openstack router add subnet router2 subB
openstack router set --external-gateway ext-net router2
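Each side of the VPN routes the peer's CIDR through the tunnel, so the subnets used at the two sites (here 10.1.0.0/24 and 10.2.0.0/24) must not overlap. The following pure-bash check is a sketch. ip_to_int and cidrs_overlap are hypothetical helpers and handle IPv4 only.

```bash
#!/usr/bin/env bash
# Sketch: detect overlapping IPv4 CIDRs. Hypothetical helpers, IPv4 only.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

cidrs_overlap() {
    local net1=${1%/*} len1=${1#*/} net2=${2%/*} len2=${2#*/}
    # Two blocks overlap exactly when they agree on the shorter prefix.
    local len=$(( len1 < len2 ? len1 : len2 ))
    local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    (( ($(ip_to_int "$net1") & mask) == ($(ip_to_int "$net2") & mask) ))
}
```

For example, cidrs_overlap 10.1.0.0/24 10.2.0.0/24 returns a non-zero status, confirming that subA and subB can be used as each other's --peer-cidr.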

From the Cloud Lifecycle Manager, run the following to start the virtual machines. Begin by adding security group rules for SSH and ICMP.

openstack security group rule create --protocol icmp default
openstack security group rule create --protocol tcp --dst-port 22 default

Start the virtual machine in the privateA subnet. Run openstack image list and boot with the image ID instead of the image name. After this step, wait approximately 10 seconds to allow the virtual machine to become active.

NETA=$(openstack network list | awk '/privateA/ {print $2}')
openstack server create --flavor 1 --image <id> --nic net-id=$NETA vm1

Start the virtual machine in the privateB subnet.

NETB=$(openstack network list | awk '/privateB/ {print $2}')
openstack server create --flavor 1 --image <id> --nic net-id=$NETB vm2

Verify that private IPs are allocated to the respective VMs. Take note of the IPs for later use.

openstack server show vm1
openstack server show vm2
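To capture the private IPs in variables instead of copying them by hand, the addresses field can be parsed. This is a sketch under assumptions. The output of openstack server show -f value -c addresses differs between client versions (for example privateA=10.1.0.5 or a dict-style {'privateA': ['10.1.0.5']}), so the hypothetical server_fixed_ip helper simply takes the first dotted quad after the network name and assumes an IPv4-only network.

```bash
#!/usr/bin/env bash
# Sketch: print a server's first IPv4 address on the given network.
# server_fixed_ip is hypothetical; the addresses format varies by version.
server_fixed_ip() {
    local server=$1 network=$2
    openstack server show -f value -c addresses "$server" |
        grep -oE "${network}[^0-9]*([0-9]{1,3}\.){3}[0-9]{1,3}" |
        grep -oE '([0-9]{1,3}\.){3}[0-9]{1,3}' |
        head -n 1
}
```

For example, VM1_IP=$(server_fixed_ip vm1 privateA) and VM2_IP=$(server_fixed_ip vm2 privateB).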

You can set up the VPN by executing the commands below from the Cloud Lifecycle Manager or any shell with access to the service APIs. Begin by creating the policies with vpn-ikepolicy-create and vpn-ipsecpolicy-create.

neutron vpn-ikepolicy-create ikepolicy
neutron vpn-ipsecpolicy-create ipsecpolicy

Create the VPN service at router1.

neutron vpn-service-create --name myvpnA --description "My vpn service" router1 subA

Wait at least 5 seconds and then run ipsec-site-connection-create to create an IPsec site connection. Note that --peer-address is the ext-net IP assigned to router2 and --peer-cidr is the subB CIDR.

neutron ipsec-site-connection-create --name vpnconnection1 --vpnservice-id myvpnA \
  --ikepolicy-id ikepolicy --ipsecpolicy-id ipsecpolicy --peer-address 172.16.0.3 \
  --peer-id 172.16.0.3 --peer-cidr 10.2.0.0/24 --psk secret

Create the VPN service at router2.

neutron vpn-service-create --name myvpnB --description "My vpn serviceB" router2 subB

Wait at least 5 seconds and then run ipsec-site-connection-create to create an IPsec site connection. Note that --peer-address is the ext-net IP assigned to router1 and --peer-cidr is the subA CIDR.

neutron ipsec-site-connection-create --name vpnconnection2 --vpnservice-id myvpnB \
  --ikepolicy-id ikepolicy --ipsecpolicy-id ipsecpolicy --peer-address 172.16.0.2 \
  --peer-id 172.16.0.2 --peer-cidr 10.1.0.0/24 --psk secret

On the Cloud Lifecycle Manager, run the ipsec-site-connection-list command to see the active connections. First make sure that the VPN services are ACTIVE by running vpn-service-list, and then check the status of the IPsec site connections. Both the VPN services and the IPsec site connections can take 1 to 3 minutes to become ACTIVE.

neutron ipsec-site-connection-list
+--------------------------------------+----------------+--------------+---------------+------------+-----------+--------+
| id                                   | name           | peer_address | peer_cidrs    | route_mode | auth_mode | status |
+--------------------------------------+----------------+--------------+---------------+------------+-----------+--------+
| 1e8763e3-fc6a-444c-a00e-426a4e5b737c | vpnconnection2 | 172.16.0.2   | "10.1.0.0/24" | static     | psk       | ACTIVE |
| 4a97118e-6d1d-4d8c-b449-b63b41e1eb23 | vpnconnection1 | 172.16.0.3   | "10.2.0.0/24" | static     | psk       | ACTIVE |
+--------------------------------------+----------------+--------------+---------------+------------+-----------+--------+
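Rather than re-running the list by hand during those 1 to 3 minutes, you can poll until every connection reports ACTIVE. This is a sketch. wait_for_ipsec_active is hypothetical, and its 180-second default timeout and 10-second interval are assumptions.

```bash
#!/usr/bin/env bash
# Sketch: poll until every IPsec site connection reports ACTIVE.
# wait_for_ipsec_active and its defaults are hypothetical.
wait_for_ipsec_active() {
    local timeout=${1:-180} interval=${2:-10} elapsed=0 statuses
    while :; do
        statuses=$(neutron ipsec-site-connection-list -f value -c status)
        # Done when at least one connection exists and none is non-ACTIVE.
        if [[ -n $statuses ]] && ! grep -qv '^ACTIVE$' <<< "$statuses"; then
            echo "all IPsec site connections are ACTIVE"
            return 0
        fi
        if (( elapsed >= timeout )); then
            echo "IPsec site connections not ACTIVE after ${timeout}s" >&2
            return 1
        fi
        sleep "$interval"
        elapsed=$(( elapsed + interval ))
    done
}
```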

Verify the VPN.

As a non-admin user, you can verify the VPN connection by pinging the virtual machines.

Check the VPN connections.

Note: vm1-ip and vm2-ip denote the private IPs of vm1 and vm2, respectively, obtained as described in Step 4. If you cannot SSH to the private network because you lack direct access, you can access the VM console through horizon.

ssh cirros@vm1-ip
password: <password>
# ping the private IP address of vm2
ping ###.###.###.###

In another terminal.

ssh cirros@vm2-ip
password: <password>
# ping the private IP address of vm1
ping ###.###.###.###

You should see ping responses from both virtual machines.

As the admin user, check that a route exists between the router gateways. Once the gateways have been checked, you can verify packet encryption by running a traffic analyzer (tcpdump) in the respective namespace (qrouter-* for non-DVR and snat-* for DVR) on the right interface (qg-***).

When using DVR namespaces, all the occurrences of qrouter-xxxxxx in the following commands should be replaced with respective snat-xxxxxx.

Check that a route exists between the two router gateways. You can get the right qrouter namespace ID by executing sudo ip netns. Once you have the qrouter namespace ID, you can find the interface in the output of sudo ip netns exec qrouter-xxxxxxxx ip addr.

sudo ip netns
sudo ip netns exec qrouter-<router1 UUID> ping <router2 gateway>
sudo ip netns exec qrouter-<router2 UUID> ping <router1 gateway>
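Finding the namespace and the qg- interface can be scripted as well. This is a sketch. router_namespace and gateway_iface are hypothetical helpers, and they follow the naming described above: qrouter-<router UUID> for centralized routers and snat-<router UUID> for DVR.

```bash
#!/usr/bin/env bash
# Sketch: locate a router's namespace and its qg- gateway interface.
# router_namespace and gateway_iface are hypothetical helpers.
router_namespace() {
    local router=$1 prefix=${2:-qrouter}   # pass "snat" for DVR routers
    echo "${prefix}-$(openstack router show -f value -c id "$router")"
}

gateway_iface() {
    local ns=$1
    # "ip -o link show" prints one interface per line as "N: name: <...>";
    # an "@peer" suffix on the name, if present, is stripped.
    sudo ip netns exec "$ns" ip -o link show |
        awk -F': ' '{ sub(/@.*/, "", $2); print $2 }' |
        grep '^qg-' |
        head -n 1
}
```

For example, after ns=$(router_namespace router1), the command sudo ip netns exec "$ns" tcpdump -n -i "$(gateway_iface "$ns")" ip proto 50 shows only ESP packets (IP protocol 50).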

Initiate a tcpdump on the interface.

sudo ip netns exec qrouter-xxxxxxxx tcpdump -i qg-xxxxxx

Check the VPN connection.

ssh cirros@vm1-ip
password: <password>
# ping the private IP address of vm2
ping ###.###.###.###

Repeat for the other namespace and its gateway interface.

sudo ip netns exec qrouter-xxxxxxxx tcpdump -i qg-xxxxxx

In another terminal.

ssh cirros@vm2-ip
password: <password>
# ping the private IP address of vm1
ping ###.###.###.###

You will find encrypted packets containing ‘ESP’ in the tcpdump trace.

10.2.4 More Information

VPNaaS currently only supports Pre-shared Keys (PSK) security between VPN gateways. A different VPN gateway solution should be considered if stronger, certificate-based security is required.

For more information on the neutron command-line interface (CLI) and VPN as a Service (VPNaaS), see the OpenStack networking command-line client reference: https://docs.openstack.org/python-openstackclient/rocky/

For information on how to create an external network and subnet, see the OpenStack manual: http://docs.openstack.org/user-guide/dashboard_create_networks.html

10.3 DNS Service Overview

SUSE OpenStack Cloud DNS service provides a multi-tenant Domain Name Service with REST API management for domains and records.

The DNS Service is not intended to be used as an internal or private DNS service. The name records in DNSaaS should be treated as public information that anyone could query. There are controls to prevent tenants from creating records for domains they do not own. TSIG (Transaction Signature) ensures integrity during zone transfers to other DNS servers.

10.3.1 For More Information

For more information about designate REST APIs, see the OpenStack REST API Documentation at http://docs.openstack.org/developer/designate/rest.html.

For a glossary of terms for designate, see the OpenStack glossary at http://docs.openstack.org/developer/designate/glossary.html.

10.3.2 designate Initial Configuration

After the SUSE OpenStack Cloud installation has been completed, designate requires initial configuration to operate.

10.3.2.1 Identifying Name Server Public IPs

Depending on the back-end, the method used to identify the name servers' public IPs will differ.

10.3.2.1.1 InfoBlox

InfoBlox acts as your public name servers. Consult the InfoBlox management UI to identify the IPs.

10.3.2.1.2 BIND Back-end

You can find the name server IPs in /etc/hosts by looking for the ext-api addresses, which are the addresses of the controllers. For example:

192.168.10.1 example-cp1-c1-m1-extapi
192.168.10.2 example-cp1-c1-m2-extapi
192.168.10.3 example-cp1-c1-m3-extapi
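These addresses can be extracted with a short awk filter. This is a sketch. extapi_addresses is hypothetical, and it assumes the ext-api host names end in -extapi, as in the example above.

```bash
#!/usr/bin/env bash
# Sketch: list ext-api addresses (BIND name server IPs) from a hosts file.
# extapi_addresses is hypothetical; host names are assumed to end "-extapi".
extapi_addresses() {
    local hosts_file=${1:-/etc/hosts}
    # Skip comment lines; print the address of any line with an -extapi name.
    awk '/^[ \t]*#/ { next }
         { for (i = 2; i <= NF; i++) if ($i ~ /-extapi$/) { print $1; break } }' \
        "$hosts_file"
}
```

On a controller, extapi_addresses prints one name server IP per line.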

10.3.2.1.3 Creating Name Server A Records

Each name server requires a public name, for example ns1.example.com., to which designate-managed domains will be delegated. There are two common places where these names may be registered: within a zone hosted on designate itself, or within a zone hosted on an external DNS service.

If you are using an externally managed zone for these names:

For each name server public IP, create the necessary A records in the external system.

If you are using a designate-managed zone for these names:

Create the zone in designate which will contain the records:

ardana > openstack zone create --email hostmaster@example.com example.com.
+----------------+--------------------------------------+
| Field          | Value                                |
+----------------+--------------------------------------+
| action         | CREATE                               |
| created_at     | 2016-03-09T13:16:41.000000           |
| description    | None                                 |
| email          | hostmaster@example.com               |
| id             | 23501581-7e34-4b88-94f4-ad8cec1f4387 |
| masters        |                                      |
| name           | example.com.                         |
| pool_id        | 794ccc2c-d751-44fe-b57f-8894c9f5c842 |
| project_id     | a194d740818942a8bea6f3674e0a3d71     |
| serial         | 1457529400                           |
| status         | PENDING                              |
| transferred_at | None                                 |
| ttl            | 3600                                 |
| type           | PRIMARY                              |
| updated_at     | None                                 |
| version        | 1                                    |
+----------------+--------------------------------------+

For each name server public IP, create an A record. For example:

ardana > openstack recordset create --records 192.168.10.1 --type A example.com. ns1.example.com.
+-------------+--------------------------------------+
| Field       | Value                                |
+-------------+--------------------------------------+
| action      | CREATE                               |
| created_at  | 2016-03-09T13:18:36.000000           |
| description | None                                 |
| id          | 09e962ed-6915-441a-a5a1-e8d93c3239b6 |
| name        | ns1.example.com.                     |
| records     | 192.168.10.1                         |
| status      | PENDING                              |
| ttl         | None                                 |
| type        | A                                    |
| updated_at  | None                                 |
| version     | 1                                    |
| zone_id     | 23501581-7e34-4b88-94f4-ad8cec1f4387 |
+-------------+--------------------------------------+

When records have been added, list the record sets in the zone to validate:

ardana > openstack recordset list example.com.
+--------------+------------------+------+---------------------------------------------------+
| id           | name             | type | records                                           |
+--------------+------------------+------+---------------------------------------------------+
| 2d6cf...655b | example.com.     | SOA  | ns1.example.com. hostmaster.example.com 145...600 |
| 33466...bd9c | example.com.     | NS   | ns1.example.com.                                  |
| da98c...bc2f | example.com.     | NS   | ns2.example.com.                                  |
| 672ee...74dd | example.com.     | NS   | ns3.example.com.                                  |
| 09e96...39b6 | ns1.example.com. | A    | 192.168.10.1                                      |
| bca4f...a752 | ns2.example.com. | A    | 192.168.10.2                                      |
| 0f123...2117 | ns3.example.com. | A    | 192.168.10.3                                      |
+--------------+------------------+------+---------------------------------------------------+

Contact your domain registrar and request that Glue Records be registered in the com. zone for the name server and public IP address pairs above. If you are using a sub-zone of an existing company zone (for example, ns1.cloud.mycompany.com.), the Glue must be placed in the mycompany.com. zone.
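With three or more name servers, the A-record step above can be looped. This is a sketch. create_ns_records is hypothetical, and it names the records ns1, ns2 and so on in the order the IPs are given, following the example.

```bash
#!/usr/bin/env bash
# Sketch: create one nsN A record per name server IP in a designate zone.
# create_ns_records is hypothetical; naming follows ns1, ns2, ... as above.
create_ns_records() {
    local zone=$1; shift
    local n=1 ip
    for ip in "$@"; do
        # The zone ends with a dot, so "ns1.${zone}" is fully qualified.
        openstack recordset create --records "$ip" --type A \
            "$zone" "ns${n}.${zone}"
        n=$(( n + 1 ))
    done
}
```

For example, create_ns_records example.com. 192.168.10.1 192.168.10.2 192.168.10.3 creates the three A records listed above.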

10.3.2.1.4 For More Information

For additional DNS integration and configuration information, see the OpenStack designate documentation at https://docs.openstack.org/designate/rocky/.

For more information on creating servers, domains and examples, see the OpenStack REST API documentation at https://developer.openstack.org/api-ref/dns/.

10.3.3 DNS Service Monitoring Support

10.3.3.1 DNS Service Monitoring Support

Additional monitoring support for the DNS Service (designate) has been added to SUSE OpenStack Cloud.

In the Networking section of the Operations Console, you can see alarms for all of the DNS Services (designate), such as designate-zone-manager, designate-api, designate-pool-manager, designate-mdns, and designate-central, after running designate-stop.yml.

You can run designate-start.yml to start the DNS Services back up. The alarms will then change from a red status to green and be removed from the New Alarms panel of the Operations Console.

The following example shows the alarms generated in the Operations Console after running designate-stop.yml:

| ALARM | ALARM ID | LAST CHECK | DIMENSION |
|---|---|---|---|
| Process Check | 0f221056-1b0e-4507-9a28-2e42561fac3e | 2016-10-03T10:06:32.106Z | hostname=ardana-cp1-c1-m1-mgmt, service=dns, cluster=cluster1, process_name=designate-zone-manager, component=designate-zone-manager, control_plane=control-plane-1, cloud_name=entry-scale-kvm |
| Process Check | 50dc4c7b-6fae-416c-9388-6194d2cfc837 | 2016-10-03T10:04:32.086Z | hostname=ardana-cp1-c1-m1-mgmt, service=dns, cluster=cluster1, process_name=designate-api, component=designate-api, control_plane=control-plane-1, cloud_name=entry-scale-kvm |
| Process Check | 55cf49cd-1189-4d07-aaf4-09ed08463044 | 2016-10-03T10:05:32.109Z | hostname=ardana-cp1-c1-m1-mgmt, service=dns, cluster=cluster1, process_name=designate-pool-manager, component=designate-pool-manager, control_plane=control-plane-1, cloud_name=entry-scale-kvm |
| Process Check | c4ab7a2e-19d7-4eb2-a9e9-26d3b14465ea | 2016-10-03T10:06:32.105Z | hostname=ardana-cp1-c1-m1-mgmt, service=dns, cluster=cluster1, process_name=designate-mdns, component=designate-mdns, control_plane=control-plane-1, cloud_name=entry-scale-kvm |
| HTTP Status | c6349bbf-4fd1-461a-9932-434169b86ce5 | 2016-10-03T10:05:01.731Z | service=dns, cluster=cluster1, url=http://100.60.90.3:9001/, hostname=ardana-cp1-c1-m3-mgmt, component=designate-api, control_plane=control-plane-1, api_endpoint=internal, cloud_name=entry-scale-kvm, monitored_host_type=instance |
| Process Check | ec2c32c8-3b91-4656-be70-27ff0c271c89 | 2016-10-03T10:04:32.082Z | hostname=ardana-cp1-c1-m1-mgmt, service=dns, cluster=cluster1, process_name=designate-central, component=designate-central, control_plane=control-plane-1, cloud_name=entry-scale-kvm |

10.4 Networking Service Overview

SUSE OpenStack Cloud Networking is a virtual Networking service that leverages the OpenStack neutron service to provide network connectivity and addressing to SUSE OpenStack Cloud Compute service devices.

The Networking service also provides an API to configure and manage a variety of network services.

You can use the Networking service to connect guest servers or you can define and configure your own virtual network topology.

10.4.1 Installing the Networking Service

SUSE OpenStack Cloud Network Administrators are responsible for planning the neutron Networking service and, once it is installed, for configuring it to meet the needs of their cloud network users.

10.4.2 Working with the Networking service

To perform tasks using the Networking service, you can use the dashboard, API or CLI.

10.4.3 Reconfiguring the Networking service

If you change any of the network configuration after installation, it is recommended that you reconfigure the Networking service by running the neutron-reconfigure playbook.

On the Cloud Lifecycle Manager:

ardana > cd ~/scratch/ansible/next/ardana/ansible
ardana > ansible-playbook -i hosts/verb_hosts neutron-reconfigure.yml

10.4.4 For more information

For information on how to operate your cloud, we suggest you read the OpenStack Operations Guide. The Architecture section contains useful information about how an OpenStack Cloud is put together, although SUSE OpenStack Cloud takes care of these details for you. The Operations section contains information on how to manage the system.

10.4.5 Neutron External Networks

10.4.5.1 External networks overview

This topic explains how to create a neutron external network.

External networks provide access to the internet.

The typical use is to provide an IP address that can be used to reach a VM from an external network which can be a public network like the internet or a network that is private to an organization.

10.4.5.2 Using the Ansible Playbook

This playbook queries the Networking service for an existing external network and creates a new one if you do not already have one. The resulting external network will have the name ext-net, with a subnet matching the CIDR you specify in the command below.

If you need more granularity, for example to specify an allocation pool for the subnet, use the method described in Section 10.4.5.3, “Using the python-neutronclient CLI”.

ardana > cd ~/scratch/ansible/next/ardana/ansible
ardana > ansible-playbook -i hosts/verb_hosts neutron-cloud-configure.yml -e EXT_NET_CIDR=<CIDR>

The table below shows the optional switch that you can use as part of this playbook to specify environment-specific information:

| Switch | Description |
|---|---|
| -e EXT_NET_CIDR=<CIDR> | Optional. You can use this switch to specify the external network CIDR. If you choose not to use this switch, or use a wrong value, the VMs will not be accessible over the network. This CIDR will be from the EXTERNAL VM network. |
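Because a wrong EXT_NET_CIDR value leaves VMs unreachable, a basic syntax check before running the playbook can catch typos. This is a sketch. valid_ipv4_cidr is hypothetical, and it only validates the format. It cannot tell whether the range actually belongs to your EXTERNAL VM network.

```bash
#!/usr/bin/env bash
# Sketch: syntax-check an IPv4 CIDR such as 172.16.0.0/16.
# valid_ipv4_cidr is hypothetical and checks format only.
valid_ipv4_cidr() {
    local cidr=$1 ip len octet
    local -a octets
    [[ $cidr =~ ^([0-9]{1,3}\.){3}[0-9]{1,3}/[0-9]{1,2}$ ]] || return 1
    ip=${cidr%/*} len=${cidr#*/}
    # 10# forces base 10 so values like "08" are not read as octal.
    (( 10#$len <= 32 )) || return 1
    IFS=. read -r -a octets <<< "$ip"
    for octet in "${octets[@]}"; do
        (( 10#$octet <= 255 )) || return 1
    done
}
```

For example: valid_ipv4_cidr "$CIDR" && ansible-playbook -i hosts/verb_hosts neutron-cloud-configure.yml -e EXT_NET_CIDR="$CIDR".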

10.4.5.3 Using the python-neutronclient CLI

For more granularity you can utilize the OpenStackClient tool to create your external network.

Log in to the Cloud Lifecycle Manager.

Source the admin credentials:

ardana > source ~/service.osrc

Create the external network and then the subnet using the commands below.

Creating the network:

ardana > openstack network create --external <external-network-name>

Creating the subnet:

ardana > openstack subnet create --network EXTERNAL-NETWORK-NAME --subnet-range CIDR --gateway GATEWAY --allocation-pool start=IP_START,end=IP_END [--no-dhcp] EXTERNAL-SUBNET-NAME

Where:

| Value | Description |
|---|---|
| external-network-name | The name given to your external network. This is a unique value that you choose. The value ext-net is usually used. |
| --subnet-range CIDR | Use this switch to specify the external network CIDR. If you do not use this switch, or use a wrong value, the VMs will not be accessible over the network. This CIDR will be from the EXTERNAL VM network. |
| --gateway | Optional switch to specify the gateway IP for your subnet. If it is not included, the first available IP is chosen. |
| --allocation-pool start=IP_START,end=IP_END | Optional switch to specify the start and end IP addresses to use as the allocation pool for this subnet. |
| --no-dhcp | Optional switch to disable DHCP on this subnet. If it is not specified, DHCP is enabled. |
| EXTERNAL-SUBNET-NAME | The name for the new subnet (a placeholder; choose your own). |

10.4.5.4 Multiple External Networks #

SUSE OpenStack Cloud provides the ability to have multiple external networks by using Network Service (neutron) provider networks for external networks. You can configure SUSE OpenStack Cloud to allow the use of provider VLANs as external networks by following these steps.

Do NOT include the neutron.l3_agent.external_network_bridge tag in the network_groups definition for your cloud. This results in the l3_agent.ini external_network_bridge being set to an empty value (rather than the traditional br-ex).

Configure your cloud to use provider VLANs by specifying the provider_physical_network tag on one of the network_groups defined for your cloud. For example, to run provider VLANs over the EXAMPLE network group (some attributes omitted for brevity):

network-groups:
  - name: EXAMPLE
    tags:
      - neutron.networks.vlan:
          provider-physical-network: physnet1

After the cloud has been deployed, you can create external networks using provider VLANs.

For example, using the OpenStackClient:

Create external network 1 on vlan101

ardana > openstack network create --provider-network-type vlan --provider-physical-network physnet1 --provider-segment 101 --external ext-net1

Create external network 2 on vlan102

ardana > openstack network create --provider-network-type vlan --provider-physical-network physnet1 --provider-segment 102 --external ext-net2
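If you need more than a couple of provider-VLAN external networks, the commands above can be generated rather than typed. This is a sketch only: external_vlan_network_cmds is a hypothetical helper that builds argument lists using the same flags as the example, and it assumes the usual 802.1Q range of 1 to 4094 for VLAN IDs.

```python
import shlex


def external_vlan_network_cmds(physnet, vlans):
    """Build `openstack network create` argument lists, one per VLAN.

    `vlans` maps an external network name to its VLAN ID.
    Hypothetical helper; it only builds the commands, it does not run them.
    """
    cmds = []
    for name, vlan_id in sorted(vlans.items()):
        if not 1 <= vlan_id <= 4094:  # assumed valid 802.1Q VLAN ID range
            raise ValueError(f"VLAN ID {vlan_id} for {name} is out of range")
        cmds.append([
            "openstack", "network", "create",
            "--provider-network-type", "vlan",
            "--provider-physical-network", physnet,
            "--provider-segment", str(vlan_id),
            "--external", name,
        ])
    return cmds


if __name__ == "__main__":
    for argv in external_vlan_network_cmds("physnet1", {"ext-net1": 101, "ext-net2": 102}):
        print(shlex.join(argv))  # review first; subprocess.run(argv, check=True) would run it
```

Printing the commands first makes them easy to review. Running them with subprocess.run requires the admin credentials to have been sourced in the shell that starts Python.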

10.4.6 Neutron Provider Networks #

This topic explains how to create a neutron provider network.

A provider network is a virtual network created in SUSE OpenStack Cloud that is consumed by SUSE OpenStack Cloud services. The distinctive element of a provider network is that it does not create a virtual router; rather, it depends on L3 routing that is provided by the infrastructure.

A provider network is created by adding the specification to the SUSE OpenStack Cloud input model. It consists of at least one network and one or more subnets.

10.4.6.1 SUSE OpenStack Cloud input model #

The input model is the primary mechanism a cloud admin uses in defining a SUSE OpenStack Cloud installation. It exists as a directory with a data subdirectory that contains YAML files. By convention, any service that creates a neutron provider network will create a subdirectory under the data directory and the name of the subdirectory shall be the project name. For example, the Octavia project will use neutron provider networks so it will have a subdirectory named 'octavia' and the config file that specifies the neutron network will exist in that subdirectory.

├── cloudConfig.yml
├── data
│   ├── control_plane.yml
│   ├── disks_compute.yml
│   ├── disks_controller_1TB.yml
│   ├── disks_controller.yml
│   ├── firewall_rules.yml
│   ├── net_interfaces.yml
│   ├── network_groups.yml
│   ├── networks.yml
│   ├── neutron
│   │   └── neutron_config.yml
│   ├── nic_mappings.yml
│   ├── server_groups.yml
│   ├── server_roles.yml
│   ├── servers.yml
│   ├── swift
│   │   └── swift_config.yml
│   └── octavia
│       └── octavia_config.yml
├── README.html
└── README.md

10.4.6.2 Network/Subnet specification #

The elements required in the input model for you to define a network are:

name

network_type

physical_network

Elements that are optional when defining a network are:

segmentation_id

shared

Required elements for the subnet definition are:

cidr

Optional elements for the subnet definition are:

allocation_pools which will require start and end addresses

host_routes which will require a destination and nexthop

gateway_ip

no_gateway

enable_dhcp

NOTE: Only IPv4 is supported at the present time.
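The required and optional elements above can be checked before running the configuration processor. The sketch below is a hypothetical checker, not the configuration processor's own validation. It assumes the entry has already been loaded from YAML into a Python dict, and that network elements sit in a provider list while subnet elements sit beside name, as in the neutron_config.yml example in Section 10.4.6.7.

```python
import ipaddress

ALLOWED_NETWORK_TYPES = {"flat", "vlan", "vxlan"}


def validate_provider_network(entry):
    """Return a list of problems in one neutron_provider_networks entry.

    Hypothetical checker; the nesting follows the neutron_config.yml example.
    """
    problems = []
    if not entry.get("name"):
        problems.append("missing required element: name")
    providers = entry.get("provider") or []
    if not providers:
        problems.append("missing required element: provider")
    for p in providers:
        for key in ("network_type", "physical_network"):
            if key not in p:
                problems.append(f"provider entry missing required element: {key}")
        nt = p.get("network_type")
        if nt is not None and nt not in ALLOWED_NETWORK_TYPES:
            problems.append(f"unsupported network_type: {nt}")
    cidr = entry.get("cidr")
    if cidr is None:
        problems.append("missing required element: cidr")
    else:
        try:
            net = ipaddress.ip_network(cidr)
        except ValueError:
            problems.append(f"invalid cidr: {cidr}")
        else:
            if net.version != 4:  # only IPv4 is supported
                problems.append("only IPv4 is supported")
    return problems
```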

10.4.6.3 Network details #

The following table outlines the network values to be set, and what they represent.

| Attribute | Required/optional | Allowed Values | Usage |

|---|---|---|---|

| name | Required | ||

| network_type | Required | flat, vlan, vxlan | The type of desired network |

| physical_network | Required | Valid | Name of physical network that is overlaid with the virtual network |

| segmentation_id | Optional | vlan or vxlan ranges | VLAN id for vlan or tunnel id for vxlan |

| shared | Optional | True | Shared by all projects or private to a single project |

10.4.6.4 Subnet details #

The following table outlines the subnet values to be set, and what they represent.

| Attribute | Req/Opt | Allowed Values | Usage |

|---|---|---|---|

| cidr | Required | Valid CIDR range | for example, 172.30.0.0/24 |

| allocation_pools | Optional | See allocation_pools table below | |

| host_routes | Optional | See host_routes table below | |

| gateway_ip | Optional | Valid IP addr | Subnet gateway to other nets |

| no_gateway | Optional | True | No distribution of gateway |

| enable_dhcp | Optional | True | Enable DHCP for this subnet |

10.4.6.5 ALLOCATION_POOLS details #

The following table explains allocation pool settings.

| Attribute | Req/Opt | Allowed Values | Usage |

|---|---|---|---|

| start | Required | Valid IP addr | First IP address in pool |

| end | Required | Valid IP addr | Last IP address in pool |

10.4.6.6 HOST_ROUTES details #

The following table explains host route settings.

| Attribute | Req/Opt | Allowed Values | Usage |

|---|---|---|---|

| destination | Required | Valid CIDR | Destination subnet |

| nexthop | Required | Valid IP addr | Hop to take to destination subnet |

Multiple destination/nexthop values can be used.
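The allocation pool and host route rules in these tables can also be checked against the subnet CIDR. In this minimal sketch, check_subnet_ranges is hypothetical. Treating a nexthop outside the subnet as an error is an assumption (a next hop is normally expected to be directly reachable on the subnet), not a documented configuration-processor rule.

```python
import ipaddress


def check_subnet_ranges(cidr, allocation_pools=(), host_routes=()):
    """Check allocation_pools and host_routes entries against the subnet CIDR.

    Hypothetical helper; entries are dicts shaped like the YAML example.
    """
    net = ipaddress.ip_network(cidr)
    problems = []
    for pool in allocation_pools:
        start = ipaddress.ip_address(pool["start"])
        end = ipaddress.ip_address(pool["end"])
        if start not in net or end not in net:
            problems.append(f"pool {start}-{end} is outside {net}")
        elif start > end:
            problems.append(f"pool start {start} is after end {end}")
    for route in host_routes:
        ipaddress.ip_network(route["destination"])  # raises ValueError if not a valid CIDR
        hop = ipaddress.ip_address(route["nexthop"])
        if hop not in net:  # assumption: the next hop must be on this subnet
            problems.append(f"nexthop {hop} is outside {net}")
    return problems
```

With the values from the OCTAVIA-MGMT-NET example in this section, the function returns an empty list.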

10.4.6.7 Examples #

The following examples show the configuration file settings for neutron and Octavia.

Octavia configuration

This file defines the mapping. It does not need to be edited unless you want to change the name of your VLAN.

Path:

~/openstack/my_cloud/definition/data/octavia/octavia_config.yml

---
  product:
    version: 2

  configuration-data:
    - name: OCTAVIA-CONFIG-CP1
      services:
        - octavia
      data:
        amp_network_name: OCTAVIA-MGMT-NET

neutron configuration

Input your network configuration information for your provider VLANs in neutron_config.yml, found here: ~/openstack/my_cloud/definition/data/neutron/.

---
  product:
    version: 2

  configuration-data:
    - name: NEUTRON-CONFIG-CP1
      services:
        - neutron
      data:
        neutron_provider_networks:
          - name: OCTAVIA-MGMT-NET
            provider:
              - network_type: vlan
                physical_network: physnet1
                segmentation_id: 2754
            cidr: 10.13.189.0/24
            no_gateway: True
            enable_dhcp: True
            allocation_pools:
              - start: 10.13.189.4
                end: 10.13.189.252
            host_routes:
              # route to MANAGEMENT-NET
              - destination: 10.13.111.128/26
                nexthop: 10.13.189.5

10.4.6.8 Implementing your changes #

Commit the changes to git:

ardana > git add -A
ardana > git commit -a -m "configuring provider network"

Run the configuration processor:

ardana > cd ~/openstack/ardana/ansible
ardana > ansible-playbook -i hosts/localhost config-processor-run.yml

Update your deployment directory:

ardana > cd ~/openstack/ardana/ansible
ardana > ansible-playbook -i hosts/localhost ready-deployment.yml

Then continue with your clean cloud installation.

If you are only adding a neutron Provider network to an existing model, then run the neutron-deploy.yml playbook:

ardana > cd ~/scratch/ansible/next/ardana/ansible
ardana > ansible-playbook -i hosts/verb_hosts neutron-deploy.yml

10.4.6.9 Multiple Provider Networks #

The physical network infrastructure must be configured to convey the provider VLAN traffic as tagged VLANs to the cloud compute nodes and network service network nodes. Configuration of the physical network infrastructure is outside the scope of the SUSE OpenStack Cloud 9 software.

SUSE OpenStack Cloud 9 automates the server networking configuration and the Network Service configuration based on information in the cloud definition. To configure the system for provider VLANs, specify the neutron.networks.vlan tag with a provider-physical-network attribute on one or more network groups. For example (some attributes omitted for brevity):

network-groups:
  - name: NET_GROUP_A
    tags:
      - neutron.networks.vlan:
          provider-physical-network: physnet1

  - name: NET_GROUP_B
    tags:
      - neutron.networks.vlan:
          provider-physical-network: physnet2

A network group is associated with a server network interface via an interface model. For example (some attributes omitted for brevity):

interface-models:
  - name: INTERFACE_SET_X
    network-interfaces:
      - device:
          name: bond0
        network-groups:
          - NET_GROUP_A
      - device:
          name: eth3
        network-groups:
          - NET_GROUP_B

A network group used for provider VLANs may contain only a single SUSE OpenStack Cloud network, because that VLAN must span all compute nodes and any Network Service network nodes/controllers (that is, it is a single L2 segment). The SUSE OpenStack Cloud network must be defined with tagged-vlan false; otherwise, a Linux VLAN network interface will be created. For example:

networks:
  - name: NET_A
    tagged-vlan: false
    network-group: NET_GROUP_A

  - name: NET_B
    tagged-vlan: false
    network-group: NET_GROUP_B

When the cloud is deployed, SUSE OpenStack Cloud 9 will create the appropriate bridges on the servers and set the appropriate attributes in the neutron configuration files (for example, bridge_mappings).
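The single-network and tagged-vlan rules above can be expressed as a small check over the input model. This is a sketch under stated assumptions: the network group and network definitions are assumed to be already loaded into Python lists of dicts (for example with a YAML parser), check_provider_vlan_groups is hypothetical, and a network without a tagged-vlan attribute is treated as tagged because we assume true is the default.

```python
def check_provider_vlan_groups(network_groups, networks):
    """Check that each provider-VLAN network group has exactly one untagged network.

    Hypothetical helper operating on already-parsed input-model data.
    """
    problems = []
    for group in network_groups:
        physnets = []
        for tag in group.get("tags", []):
            # Tags may be plain strings or single-key dicts with attributes.
            if isinstance(tag, dict) and "neutron.networks.vlan" in tag:
                attrs = tag["neutron.networks.vlan"] or {}
                physnets.append(attrs.get("provider-physical-network"))
        if not any(physnets):
            continue  # this group is not used for provider VLANs
        members = [n for n in networks if n.get("network-group") == group["name"]]
        if len(members) != 1:
            problems.append(f"{group['name']}: expected exactly one network, found {len(members)}")
        for net in members:
            # Assumption: tagged-vlan defaults to true when omitted.
            if net.get("tagged-vlan", True) is not False:
                problems.append(f"{net['name']}: tagged-vlan must be false")
    return problems
```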

After the cloud has been deployed, create Network Service network objects for each provider VLAN. For example, using the Network Service CLI:

ardana > openstack network create --provider-network-type vlan --provider-physical-network physnet1 --provider-segment 101 mynet101
ardana > openstack network create --provider-network-type vlan --provider-physical-network physnet2 --provider-segment 234 mynet234

10.4.6.10 More Information #

For more information on the Network Service command-line interface (CLI), see the OpenStack networking command-line client reference: http://docs.openstack.org/cli-reference/content/neutronclient_commands.html

10.4.7 Using IPAM Drivers in the Networking Service #

This topic describes how to choose and implement an IPAM driver.

10.4.7.1 Selecting and implementing an IPAM driver #

Beginning with the Liberty release, OpenStack networking includes a pluggable interface for the IP Address Management (IPAM) function. This interface creates a driver framework for the allocation and de-allocation of subnets and IP addresses, enabling the integration of alternate IPAM implementations or third-party IP Address Management systems.

There are three possible IPAM driver options:

Non-pluggable driver. This option is the default when the ipam_driver parameter is not specified in neutron.conf.

Pluggable reference IPAM driver. The pluggable IPAM driver interface was introduced in OpenStack Liberty. The reference driver is a refactoring of the Kilo non-pluggable driver to use the new pluggable interface. The setting in neutron.conf to specify this driver is ipam_driver = internal.

Pluggable Infoblox IPAM driver. The pluggable Infoblox IPAM driver is a third-party implementation of the pluggable IPAM interface. The corresponding setting in neutron.conf to specify this driver is ipam_driver = networking_infoblox.ipam.driver.InfobloxPool.

Note: You can use either the non-pluggable IPAM driver or a pluggable one, but you cannot use both.
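To see which of the three options a node is using, you could read ipam_driver from the rendered neutron.conf. The sketch below uses Python's configparser and simply mirrors the mapping described above. ipam_driver_kind is a hypothetical helper. It is meant for the rendered file rather than the neutron.conf.j2 template, which may contain Jinja2 markup.

```python
import configparser


def ipam_driver_kind(neutron_conf_text):
    """Classify the ipam_driver setting of a rendered neutron.conf.

    Hypothetical helper mirroring the three options listed above.
    """
    # strict=False tolerates repeated keys; interpolation=None leaves '%' alone.
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(neutron_conf_text)
    driver = parser.get("DEFAULT", "ipam_driver", fallback="").strip()
    if not driver:
        return "non-pluggable (default)"
    if driver == "internal":
        return "pluggable reference driver"
    if driver == "networking_infoblox.ipam.driver.InfobloxPool":
        return "pluggable Infoblox driver"
    return f"other pluggable driver: {driver}"
```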

10.4.7.2 Using the Pluggable reference IPAM driver #

To indicate that you want to use the Pluggable reference IPAM driver, the only parameter needed is ipam_driver. You can set it by finding the following commented-out line in the neutron.conf.j2 template (ipam_driver = internal), uncommenting it, and committing the file. After following the standard steps to deploy neutron, neutron will be configured to run using the Pluggable reference IPAM driver.

As stated, the file you must edit is neutron.conf.j2 on the Cloud Lifecycle Manager in the directory ~/openstack/my_cloud/config/neutron. Here is the relevant section, where you can see the ipam_driver parameter commented out:

[DEFAULT]
...
l3_ha_net_cidr = 169.254.192.0/18

# Uncomment the line below if the Reference Pluggable IPAM driver is to be used
# ipam_driver = internal
...

After uncommenting the line ipam_driver = internal, commit the file using git commit from the openstack/my_cloud directory:

ardana > git commit -a -m 'My config for enabling the internal IPAM Driver'

Then follow the steps in Chapter 13, Overview, to deploy SUSE OpenStack Cloud as appropriate to your cloud configuration.
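The uncomment step can also be scripted. This is a minimal sketch, assuming the template contains exactly one commented-out ipam_driver = internal line as shown above. enable_internal_ipam and enable_in_file are hypothetical helpers that use plain text substitution, so the rest of the Jinja2 template is left untouched.

```python
import re
from pathlib import Path

# Matches "# ipam_driver = internal" on its own line, keeping its indentation.
# [ \t] is used instead of \s so the match never spans line breaks.
_COMMENTED_IPAM = re.compile(
    r"^([ \t]*)#[ \t]*(ipam_driver[ \t]*=[ \t]*internal)[ \t]*$", re.MULTILINE
)


def enable_internal_ipam(template_text):
    """Return the template text with the ipam_driver line uncommented."""
    new_text, count = _COMMENTED_IPAM.subn(r"\1\2", template_text)
    if count != 1:
        raise ValueError(f"expected one commented ipam_driver line, found {count}")
    return new_text


def enable_in_file(path):
    """Rewrite a neutron.conf.j2 file in place (hypothetical convenience wrapper)."""
    p = Path(path).expanduser()
    p.write_text(enable_internal_ipam(p.read_text()))
```

After calling enable_in_file on ~/openstack/my_cloud/config/neutron/neutron.conf.j2, review the diff and commit it with git as described above.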

Currently there is no migration path from the non-pluggable driver to a pluggable IPAM driver because changes are needed to database tables and neutron currently cannot make those changes.

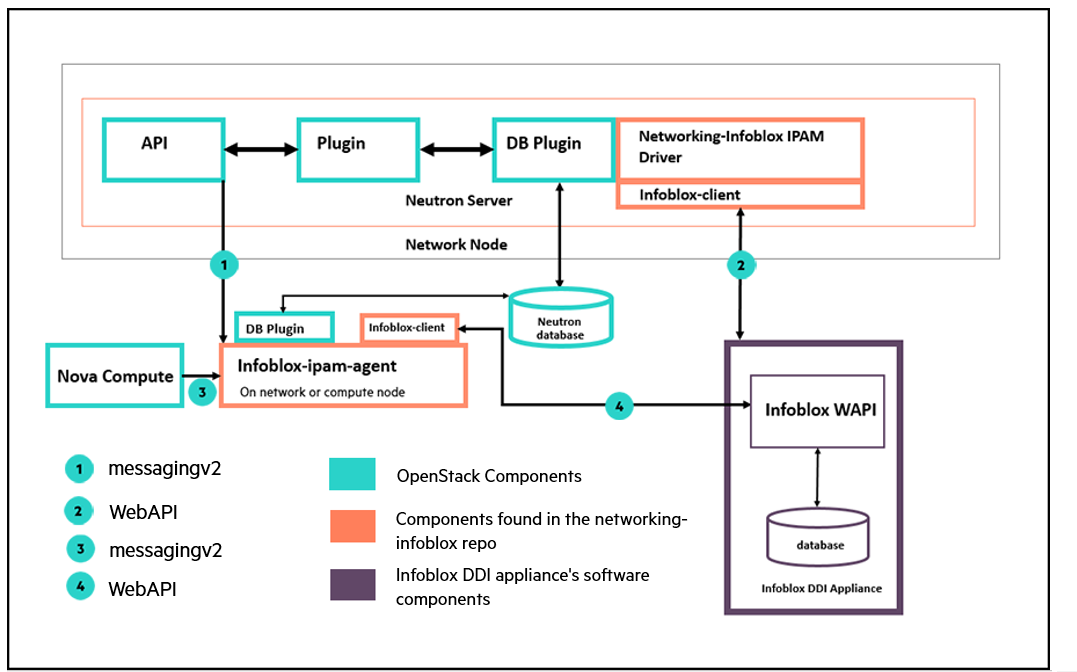

10.4.7.3 Using the Infoblox IPAM driver #

As suggested above, using the Infoblox IPAM driver requires changes to existing parameters in nova.conf and neutron.conf. If you want to use the Infoblox appliance, you will need to add the infoblox-ipam-agent service component to the service role containing the neutron API server. To use the Infoblox appliance for IPAM, both the agent and the Infoblox IPAM driver are required. The infoblox-ipam-agent should be deployed on the same node where the neutron-server component is running, which is usually a Controller node.

Have the Infoblox appliance running on the management network (the Infoblox appliance admin or the datacenter administrator should know how to perform this step).

Change the control plane definition to add infoblox-ipam-agent as a service in the controller node cluster (see the infoblox-ipam-agent entry under cluster1 below). Make the changes in control_plane.yml, found here: ~/openstack/my_cloud/definition/data/control_plane.yml

---
  product:
    version: 2

  control-planes:
    - name: ccp
      control-plane-prefix: ccp
      ...
      clusters:
        - name: cluster0
          cluster-prefix: c0
          server-role: ARDANA-ROLE
          member-count: 1
          allocation-policy: strict
          service-components:
            - lifecycle-manager
        - name: cluster1
          cluster-prefix: c1
          server-role: CONTROLLER-ROLE
          member-count: 3
          allocation-policy: strict
          service-components:
            - ntp-server
            ...
            - neutron-server
            - infoblox-ipam-agent
            ...
            - designate-client
            - bind
      resources:
        - name: compute
          resource-prefix: comp
          server-role: COMPUTE-ROLE
          allocation-policy: any

Modify the ~/openstack/my_cloud/config/neutron/neutron.conf.j2 file on the controller node to comment and uncomment the lines noted below to enable use with the Infoblox appliance:

[DEFAULT]
...
l3_ha_net_cidr = 169.254.192.0/18

# Uncomment the line below if the Reference Pluggable IPAM driver is to be used
# ipam_driver = internal

# Comment out the line below if the Infoblox IPAM Driver is to be used
# notification_driver = messaging

# Uncomment the lines below if the Infoblox IPAM driver is to be used
ipam_driver = networking_infoblox.ipam.driver.InfobloxPool
notification_driver = messagingv2

# Modify the infoblox sections below to suit your cloud environment

[infoblox]
cloud_data_center_id = 1

# The name of this section is formed by "infoblox-dc:<infoblox.cloud_data_center_id>"
# If cloud_data_center_id is 1, then the section name is "infoblox-dc:1"

[infoblox-dc:1]
http_request_timeout = 120
http_pool_maxsize = 100
http_pool_connections = 100
ssl_verify = False
wapi_version = 2.2
admin_user_name = admin
admin_password = infoblox
grid_master_name = infoblox.localdomain
grid_master_host = 1.2.3.4

[QUOTAS]
...

Change nova.conf.j2 to replace the notification driver "messaging" with "messagingv2":

...
# Oslo messaging
notification_driver = log

#  Note:
#  If the infoblox-ipam-agent is to be deployed in the cloud, change the
#  notification_driver setting from "messaging" to "messagingv2".
notification_driver = messagingv2
notification_topics = notifications

# Policy
...

Commit the changes:

ardana > cd ~/openstack/my_cloud
ardana > git commit -a -m 'My config for enabling the Infoblox IPAM driver'

Deploy the cloud with the changes. Because control_plane.yml has changed, you will need to rerun the config-processor-run.yml playbook if you have already run it during the install process.

ardana > cd ~/openstack/ardana/ansible
ardana > ansible-playbook -i hosts/localhost config-processor-run.yml
ardana > ansible-playbook -i hosts/localhost ready-deployment.yml
ardana > cd ~/scratch/ansible/next/ardana/ansible
ardana > ansible-playbook -i hosts/verb_hosts site.yml

10.4.7.4 Configuration parameters for using the Infoblox IPAM driver #

Changes required in the notification parameters in nova.conf:

| Parameter Name | Section in nova.conf | Default Value | Current Value | Description |
|---|---|---|---|---|
| notify_on_state_change | DEFAULT | None | vm_and_task_state | Send compute.instance.update notifications on instance state changes. vm_and_task_state means notify on VM and task state changes. Infoblox requires the value to be vm_state (notify on VM state change); because vm_and_task_state also covers VM state changes, NO CHANGE is needed for Infoblox. |
| notification_topics | DEFAULT | empty list | notifications | NO CHANGE is needed for Infoblox. The Infoblox installation guide requires the notification topic to be "notifications". |
| notification_driver | DEFAULT | None | messaging | Change needed. The Infoblox installation guide requires the notification driver to be "messagingv2". |

Changes to existing parameters in neutron.conf

| Parameter Name | Section in neutron.conf | Default Value | Current Value | Description |
|---|---|---|---|---|
| ipam_driver | DEFAULT | None | None (parameter is undeclared in neutron.conf) | Pluggable IPAM driver to be used by the neutron API server. For Infoblox, the value is "networking_infoblox.ipam.driver.InfobloxPool". |
| notification_driver | DEFAULT | empty list | messaging | The driver used to send notifications from the neutron API server to the neutron agents. The installation guide for networking-infoblox calls for the notification_driver to be "messagingv2". |
| notification_topics | DEFAULT | None | notifications | No change needed. This row is here to show the changes in the neutron parameters described in the installation guide for networking-infoblox. |
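Because the nova.conf.j2 excerpt above sets notification_driver more than once, a quick check has to look at every occurrence. This sketch assumes notification_driver is read as a multi-valued option, as oslo.config does for repeated lines of a multi-string option. That is why it does not use configparser, which would reject or collapse the repeated key. option_values and ready_for_infoblox are hypothetical helpers.

```python
def option_values(conf_text, section, option):
    """Collect every value of `option` in `section`, in file order."""
    values, current = [], None
    for raw in conf_text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue  # skip blank lines and comments
        if line.startswith("[") and line.endswith("]"):
            current = line[1:-1].strip()
            continue
        if current == section and "=" in line:
            key, _, value = line.partition("=")
            if key.strip() == option:
                values.append(value.strip())
    return values


def ready_for_infoblox(conf_text):
    """True if messagingv2 is among the [DEFAULT] notification drivers."""
    return "messagingv2" in option_values(conf_text, "DEFAULT", "notification_driver")
```

The same check can be run against the rendered nova.conf and neutron.conf on the controller.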

Parameters specific to the Networking Infoblox Driver. All the parameters for the Infoblox IPAM driver must be defined in neutron.conf.

| Parameter Name | Section in neutron.conf | Default Value | Description |
|---|---|---|---|
| cloud_data_center_id | infoblox | 0 | ID for selecting a particular grid from one or more grids to serve networks in the Infoblox back end |
| ipam_agent_workers | infoblox | 1 | Number of Infoblox IPAM agent workers to run |
| grid_master_host | infoblox-dc:<cloud_data_center_id> | empty string | IP address of the grid master. WAPI requests are sent to the grid_master_host |
| ssl_verify | infoblox-dc:<cloud_data_center_id> | False | Whether WAPI requests sent over HTTPS require SSL verification |
| wapi_version | infoblox-dc:<cloud_data_center_id> | 1.4 | The WAPI version. Value should be 2.2. |
| admin_user_name | infoblox-dc:<cloud_data_center_id> | empty string | Admin user name to access the grid master or cloud platform appliance |
| admin_password | infoblox-dc:<cloud_data_center_id> | empty string | Admin user password |
| http_pool_connections | infoblox-dc:<cloud_data_center_id> | 100 | |
| http_pool_maxsize | infoblox-dc:<cloud_data_center_id> | 100 | |
| http_request_timeout | infoblox-dc:<cloud_data_center_id> | 120 | |
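The section naming rule is easy to get wrong, because the [infoblox-dc:<id>] header must match cloud_data_center_id. A minimal sketch with configparser follows. check_infoblox_dc is hypothetical, and treating grid_master_host, admin_user_name, admin_password and wapi_version as required is an assumption based on their empty or outdated defaults in the table above.

```python
import configparser

# Assumption: these keys must be set explicitly (empty or outdated defaults).
REQUIRED_DC_KEYS = ("grid_master_host", "admin_user_name", "admin_password", "wapi_version")


def check_infoblox_dc(conf_text):
    """Return (section_name, missing_keys) for the grid selected by cloud_data_center_id.

    Raises KeyError if the matching [infoblox-dc:<id>] section does not exist.
    """
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(conf_text)
    dc_id = parser.getint("infoblox", "cloud_data_center_id", fallback=0)
    section = f"infoblox-dc:{dc_id}"
    if not parser.has_section(section):
        raise KeyError(f"cloud_data_center_id is {dc_id} but [{section}] is missing")
    missing = [k for k in REQUIRED_DC_KEYS if not parser.get(section, k, fallback="").strip()]
    return section, missing
```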

The diagram below shows nova compute sending notification to the infoblox-ipam-agent

10.4.7.5 Limitations #

There is no IPAM migration path from the non-pluggable to a pluggable IPAM driver (https://bugs.launchpad.net/neutron/+bug/1516156). This means there is no way to reconfigure the neutron database if you want to change neutron to use a pluggable IPAM driver. Unless you select a pluggable driver instead of the default non-pluggable IPAM configuration at install time, you will have no other opportunity to make that change, because reconfiguring SUSE OpenStack Cloud 9 from the default non-pluggable IPAM configuration to a pluggable IPAM driver is not supported.

Upgrading from a previous version of SUSE OpenStack Cloud to SUSE OpenStack Cloud 9 in order to use a pluggable IPAM driver is not supported.

The Infoblox appliance does not allow for overlapping IPs. For example, only one tenant can have a CIDR of 10.0.0.0/24.

The Infoblox IPAM driver fails to create a subnet when no gateway IP is supplied. For example, the command openstack subnet create ... --gateway none ... will fail.
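Because the Infoblox appliance does not allow overlapping IPs, it may be worth checking existing tenant subnets for overlaps before enabling it. The sketch below uses ipaddress network objects and their overlaps() method. overlapping_cidrs is a hypothetical helper. Collecting the CIDRs, for example from openstack subnet list -f value -c Subnet run as an administrator, is left to the caller.

```python
import ipaddress
from itertools import combinations


def overlapping_cidrs(cidrs):
    """Return every pair of CIDRs (as strings) that overlap each other."""
    nets = [ipaddress.ip_network(c) for c in cidrs]
    return [(str(a), str(b)) for a, b in combinations(nets, 2) if a.overlaps(b)]
```

Any pair returned here, such as two projects that both use 10.0.0.0/24, would conflict once the Infoblox driver manages the addresses.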

10.4.8 Configuring Load Balancing as a Service (LBaaS) #

SUSE OpenStack Cloud 9 LBaaS Configuration

Load Balancing as a Service (LBaaS) is an advanced networking service that allows load balancing of multi-node environments. It provides the ability to spread requests across multiple servers thereby reducing the load on any single server. This document describes the installation steps and the configuration for LBaaS v2.

The LBaaS architecture is based on a driver model to support different load balancers. LBaaS-compatible drivers are provided by load balancer vendors including F5 and Citrix. A software load balancer driver called "Octavia" was introduced in the OpenStack Liberty release. The Octavia driver deploys a software load balancer called HAProxy. Octavia is the default load balancing provider in SUSE OpenStack Cloud 9 for LBaaS v2. Until Octavia is configured, the creation of load balancers will fail with an error. Refer to Chapter 43, Configuring Load Balancer as a Service, for information on installing Octavia.

Before upgrading to SUSE OpenStack Cloud 9, contact F5 and SUSE to determine which F5 drivers have been certified for use with SUSE OpenStack Cloud. Loading drivers not certified by SUSE may result in failure of your cloud deployment.

With Octavia (see Chapter 43, Configuring Load Balancer as a Service), LBaaS v2 offers a software load balancing solution that supports both a highly available control plane and a highly available data plane. However, if an external hardware load balancer is selected, cloud operations can achieve additional performance and availability.

LBaaS v2

Reasons to select this version.

Your vendor already has a driver that supports LBaaS v2. Many hardware load balancer vendors already support LBaaS v2, and this list is growing all the time.

You intend to script your load balancer creation and management so a UI is not important right now (horizon support will be added in a future release).

You intend to support TLS termination at the load balancer.

You intend to use the Octavia software load balancer (adding HA and scalability).

You do not want to take your load balancers offline to perform subsequent LBaaS upgrades.

You expect to need L7 load balancing in future releases.

Reasons not to select this version.

Your LBaaS vendor does not have a v2 driver.

You must be able to manage your load balancers from horizon.

You have legacy software which utilizes the LBaaS v1 API.

LBaaS v2 is installed by default with SUSE OpenStack Cloud and requires minimal configuration to start the service.

With Octavia, the LBaaS v2 API includes automatic failover of a deployed load balancer. More information about this driver can be found in Chapter 43, Configuring Load Balancer as a Service.

10.4.8.1 Prerequisites #

SUSE OpenStack Cloud LBaaS v2

SUSE OpenStack Cloud must be installed for LBaaS v2.

Follow the instructions in Chapter 43, Configuring Load Balancer as a Service, to install Octavia.

10.4.9 Load Balancer: Octavia Driver Administration #

This document provides the instructions on how to enable and manage various components of the Load Balancer Octavia driver if that driver is enabled.

Section 10.4.9.2, “Tuning Octavia Installation”

Homogeneous Compute Configuration

Octavia and Floating IPs

Configuration Files

Spare Pools

Section 10.4.9.3, “Managing Amphora”

Updating the Cryptographic Certificates

Accessing VM information in nova

Initiating Failover of an Amphora VM

10.4.9.1 Monasca Alerts #

The monasca-agent has the following Octavia-related plugins:

Process checks – checks if octavia processes are running. When it starts, it detects which processes are running and then monitors them.

http_connect check – checks if it can connect to octavia api servers.

Alerts are displayed in the Operations Console.

10.4.9.2 Tuning Octavia Installation #

Homogeneous Compute Configuration

Octavia works only with homogeneous compute node configurations. Currently, Octavia does not support multiple nova flavors. If Octavia needs to be supported on multiple compute nodes, then all the compute nodes should carry the same set of physnets (which will be used for Octavia).

Octavia and Floating IPs

Due to a neutron limitation, Octavia will only work with CVR (centralized) routers. Another option is to use VLAN provider networks, which do not require a router.

You cannot currently assign a floating IP address as the VIP (user facing) address for a load balancer created by the Octavia driver if the underlying neutron network is configured to support Distributed Virtual Router (DVR). The Octavia driver uses a neutron function known as allowed address pairs to support load balancer failover.

There is currently a neutron bug that prevents this function from working in a DVR configuration.

Octavia Configuration Files

The system comes pre-tuned and should not need any adjustments for most customers. If in rare instances manual tuning is needed, follow these steps:

Changes might be lost during SUSE OpenStack Cloud upgrades.

Edit the Octavia configuration files in my_cloud/config/octavia. It is recommended that any changes be made in all of the Octavia configuration files:

octavia-api.conf.j2

octavia-health-manager.conf.j2

octavia-housekeeping.conf.j2

octavia-worker.conf.j2

After the changes are made to the configuration files, redeploy the service.

Commit changes to git.

ardana > cd ~/openstack
ardana > git add -A
ardana > git commit -m "My Octavia Config"

Run the configuration processor and ready deployment.

ardana > cd ~/openstack/ardana/ansible/
ardana > ansible-playbook -i hosts/localhost config-processor-run.yml
ardana > ansible-playbook -i hosts/localhost ready-deployment.yml

Run the Octavia reconfigure.

ardana > cd ~/scratch/ansible/next/ardana/ansible
ardana > ansible-playbook -i hosts/verb_hosts octavia-reconfigure.yml

Spare Pools

The Octavia driver provides support for creating spare pools of the HAProxy software installed in VMs. This means that, instead of a new load balancer being built when load increases, requests to create a load balancer pull one from the spare pool. Because the spare pool feature consumes resources, the number of load balancers in the spare pool is set to 0 by default, which disables the feature.

Reasons to enable a load balancing spare pool in SUSE OpenStack Cloud

You expect a large number of load balancers to be provisioned all at once (puppet scripts, or ansible scripts) and you want them to come up quickly.

You want to reduce the wait time a customer has while requesting a new load balancer.

To increase the number of load balancers in your spare pool, edit the Octavia configuration files by uncommenting spare_amphora_pool_size and setting it to the number of load balancers you would like to include in your spare pool.

# Pool size for the spare pool
# spare_amphora_pool_size = 0
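Since the recommendation is to make the same change in all four Octavia templates, the edit can be applied in one pass. This is a sketch, not a supported tool: set_spare_pool_size and update_templates are hypothetical helpers that rewrite the spare_amphora_pool_size line (commented or not) by plain text substitution. In upstream Octavia this option belongs to the [house_keeping] section. The sketch does not check which section the line is in.

```python
import re
from pathlib import Path

OCTAVIA_TEMPLATES = (
    "octavia-api.conf.j2",
    "octavia-health-manager.conf.j2",
    "octavia-housekeeping.conf.j2",
    "octavia-worker.conf.j2",
)

# Matches "spare_amphora_pool_size = N", optionally commented out, on one line.
_SPARE_RE = re.compile(
    r"^([ \t]*)#?[ \t]*spare_amphora_pool_size[ \t]*=[ \t]*\d+[ \t]*$", re.MULTILINE
)


def set_spare_pool_size(text, size):
    """Return (new_text, number_of_lines_changed)."""
    if size < 0:
        raise ValueError("pool size must be >= 0")
    return _SPARE_RE.subn(lambda m: f"{m.group(1)}spare_amphora_pool_size = {size}", text)


def update_templates(config_dir, size):
    """Apply set_spare_pool_size to each template that exists; return changed file names."""
    changed = []
    for name in OCTAVIA_TEMPLATES:
        path = Path(config_dir).expanduser() / name
        if not path.exists():
            continue
        text = path.read_text()
        new_text, count = set_spare_pool_size(text, size)
        if count and new_text != text:
            path.write_text(new_text)
            changed.append(name)
    return changed
```

After running update_templates on ~/openstack/my_cloud/config/octavia, commit the changes and redeploy as described in the Octavia Configuration Files steps.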

10.4.9.3 Managing Amphora #

Octavia starts a separate VM for each load balancing function. These VMs are called amphora.

Updating the Cryptographic Certificates

Octavia uses two-way SSL encryption for communication between amphora and the control plane. Octavia keeps track of the certificates on the amphora and will automatically recycle them. The certificates on the control plane are valid for one year after installation of SUSE OpenStack Cloud.

You can check the status of the certificate by logging in to the controller node as root and running:

ardana > cd /opt/stack/service/octavia-SOME UUID/etc/certs/
ardana > openssl x509 -in client.pem -text -noout

This prints the certificate so that you can check its expiration dates.
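Instead of reading the full certificate dump, you can extract the Not After date and turn it into a number of days remaining. This is a minimal sketch, assuming the text comes from openssl x509 -in client.pem -text -noout and that dates are printed in openssl's usual "Mon DD HH:MM:SS YYYY GMT" form. not_after and days_until_expiry are hypothetical helpers.

```python
import re
from datetime import datetime, timezone

_NOT_AFTER = re.compile(r"Not After\s*:\s*(.+?)\s+GMT[ \t]*$", re.MULTILINE)


def not_after(cert_text):
    """Return the certificate's Not After time as an aware UTC datetime."""
    match = _NOT_AFTER.search(cert_text)
    if match is None:
        raise ValueError("no 'Not After' line found")
    # openssl pads single-digit days with a space ("Jan  1"); strptime treats
    # a space in the format as one or more whitespace characters.
    parsed = datetime.strptime(match.group(1), "%b %d %H:%M:%S %Y")
    return parsed.replace(tzinfo=timezone.utc)


def days_until_expiry(cert_text, now=None):
    """Whole days left before the certificate expires (negative if expired)."""
    now = now or datetime.now(timezone.utc)
    return (not_after(cert_text) - now).days
```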

To renew the certificates, reconfigure Octavia. Reconfiguring causes Octavia to automatically generate new certificates and deploy them to the controller hosts.

On the Cloud Lifecycle Manager execute octavia-reconfigure:

ardana > cd ~/scratch/ansible/next/ardana/ansible
ardana > ansible-playbook -i hosts/verb_hosts octavia-reconfigure.yml

Accessing VM information in nova

You can use openstack project list as an administrative user to obtain the tenant or project ID of the Octavia project. In the example below, the Octavia project has a project ID of 37fd6e4feac14741b6e75aba14aea833.

ardana > openstack project list
+----------------------------------+------------------+
| ID                               | Name             |
+----------------------------------+------------------+
| 055071d8f25d450ea0b981ca67f7ccee | glance-swift     |
| 37fd6e4feac14741b6e75aba14aea833 | octavia          |
| 4b431ae087ef4bd285bc887da6405b12 | swift-monitor    |
| 8ecf2bb5754646ae97989ba6cba08607 | swift-dispersion |
| b6bd581f8d9a48e18c86008301d40b26 | services         |
| bfcada17189e4bc7b22a9072d663b52d | cinderinternal   |
| c410223059354dd19964063ef7d63eca | monitor          |
| d43bc229f513494189422d88709b7b73 | admin            |
| d5a80541ba324c54aeae58ac3de95f77 | demo             |
| ea6e039d973e4a58bbe42ee08eaf6a7a | backup           |
+----------------------------------+------------------+
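When scripting these steps, you can take the project ID from JSON output instead of the table. This is a minimal sketch, assuming the output of openstack project list -f json, which is a list of objects with ID and Name keys. project_id is a hypothetical helper. The command openstack project show octavia -f value -c id is a shorter alternative when only one ID is needed.

```python
import json


def project_id(project_list_json, name="octavia"):
    """Return the ID of the project called `name` from `openstack project list -f json` output."""
    matches = [p["ID"] for p in json.loads(project_list_json) if p.get("Name") == name]
    if len(matches) != 1:
        raise LookupError(f"expected one project named {name!r}, found {len(matches)}")
    return matches[0]
```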

You can then use openstack server list --tenant <project-id> to list the VMs for the Octavia tenant. Take particular note of the IP address on the OCTAVIA-MGMT-NET; in the example below it is 172.30.1.11. For additional nova command-line options, see Section 10.4.9.5, “For More Information”.

ardana > openstack server list --tenant 37fd6e4feac14741b6e75aba14aea833
+--------------------------------------+----------------------------------------------+----------------------------------+--------+------------+-------------+------------------------------------------------+
| ID                                   | Name                                         | Tenant ID                        | Status | Task State | Power State | Networks                                       |
+--------------------------------------+----------------------------------------------+----------------------------------+--------+------------+-------------+------------------------------------------------+
| 1ed8f651-de31-4208-81c5-817363818596 | amphora-1c3a4598-5489-48ea-8b9c-60c821269e4c | 37fd6e4feac14741b6e75aba14aea833 | ACTIVE | -          | Running     | private=10.0.0.4; OCTAVIA-MGMT-NET=172.30.1.11 |
+--------------------------------------+----------------------------------------------+----------------------------------+--------+------------+-------------+------------------------------------------------+

The Amphora VMs do not have SSH or any other access. In the rare case that there is a problem with the underlying load balancer, the whole amphora will need to be replaced.
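You can likewise pull the management IP out of the Networks column. This sketch assumes the "network=ip[, ip]; network=ip" format shown in the output above. The format can differ between client versions. network_ips and mgmt_ip are hypothetical helpers.

```python
def network_ips(networks_field):
    """Split a Networks value such as
    'private=10.0.0.4; OCTAVIA-MGMT-NET=172.30.1.11' into {network: [ips]}."""
    result = {}
    for part in networks_field.split(";"):
        part = part.strip()
        if not part:
            continue
        net, _, ips = part.partition("=")
        result[net.strip()] = [ip.strip() for ip in ips.split(",") if ip.strip()]
    return result


def mgmt_ip(networks_field, mgmt_net="OCTAVIA-MGMT-NET"):
    """Return the first address on the management network, or None."""
    ips = network_ips(networks_field).get(mgmt_net, [])
    return ips[0] if ips else None
```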

Initiating Failover of an Amphora VM

Under normal operations, Octavia constantly monitors the health of the amphora and automatically fails them over if there are any issues. This helps to minimize any potential downtime for load balancer users. There are, however, a few cases in which a failover needs to be initiated manually:

The load balancer has become unresponsive and Octavia has not detected an error.

A new image has become available and existing load balancers need to start using the new image.

The cryptographic certificates used for control, or the HMAC password used to verify health information from the amphora, have been compromised.

To minimize the impact on end users, the existing load balancer keeps working until shortly before the new one has been provisioned. There will still be a short interruption of the load balancing service, so keep that in mind when scheduling failovers. To initiate a failover, follow these steps (assuming the management IP from the previous step):

Assign the IP to a SHELL variable for better readability.

ardana > export MGM_IP=172.30.1.11

Identify the port of the VM on the management network.

ardana > openstack port list | grep $MGM_IP
| 0b0301b9-4ee8-4fb6-a47c-2690594173f4 | | fa:16:3e:d7:50:92 | {"subnet_id": "3e0de487-e255-4fc3-84b8-60e08564c5b7", "ip_address": "172.30.1.11"} |

Disable the port to initiate a failover. Note that the load balancer will still function but can no longer be controlled by Octavia.

Note: Changes made after disabling the port will result in errors.

ardana > openstack port set --disable 0b0301b9-4ee8-4fb6-a47c-2690594173f4

You can check whether the amphora has failed over with openstack server list --tenant <project-id>. The failover may take some time, and in some cases you may need to repeat the check several times. A changed IP address on the management network shows that the failover was successful.

ardana > openstack server list --tenant 37fd6e4feac14741b6e75aba14aea833
+--------------------------------------+----------------------------------------------+----------------------------------+--------+------------+-------------+------------------------------------------------+
| ID                                   | Name                                         | Tenant ID                        | Status | Task State | Power State | Networks                                       |
+--------------------------------------+----------------------------------------------+----------------------------------+--------+------------+-------------+------------------------------------------------+
| 1ed8f651-de31-4208-81c5-817363818596 | amphora-1c3a4598-5489-48ea-8b9c-60c821269e4c | 37fd6e4feac14741b6e75aba14aea833 | ACTIVE | -          | Running     | private=10.0.0.4; OCTAVIA-MGMT-NET=172.30.1.12 |
+--------------------------------------+----------------------------------------------+----------------------------------+--------+------------+-------------+------------------------------------------------+
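The lookup and disable steps can be wrapped in a small bash function. This is a minimal sketch, not a tool shipped with SUSE OpenStack Cloud: the function name disable_amphora_port is hypothetical, and instead of grep it uses the --fixed-ip ip-address=... filter of openstack port list, so that only the port owning exactly that management IP is matched.

```shell
#!/bin/bash
# Hypothetical helper (not shipped with SUSE OpenStack Cloud): disable the
# management port of one amphora so that Octavia fails it over.
# Usage: disable_amphora_port <management-ip>
disable_amphora_port() {
    local mgm_ip=$1
    local port

    # Match the fixed IP exactly and print only the port ID(s).
    port=$(openstack port list --fixed-ip ip-address="${mgm_ip}" \
        --format value --column ID) || return 1

    # Refuse to continue unless exactly one port owns the IP.
    if [[ -z "${port}" || "${port}" == *$'\n'* ]]; then
        echo "expected exactly one port with IP ${mgm_ip}, got: ${port:-none}" >&2
        return 1
    fi

    echo "Disabling port ${port} (${mgm_ip})"
    # Setting the port administratively down triggers the failover.
    openstack port set --disable "${port}"
}
```

For example, after sourcing the file, disable_amphora_port "$MGM_IP" performs the port lookup and the disable step of the procedure. The function does nothing if the IP matches no port or more than one port.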

Do not issue too many failovers at once. In a large installation, you might be tempted to initiate several failovers in parallel, for instance to speed up an update of amphora images. This puts a strain on the nova service, and depending on the size of your installation, you might need to throttle the failover rate.
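If you need to fail over many amphorae, one way to throttle the rate is to trigger the failovers one after another with a fixed pause in between. The sketch below assumes you have already collected the management IPs of the amphorae, for example from the lb_network_ip column of openstack loadbalancer amphora list. The function name failover_throttled is hypothetical, and the pause length is yours to choose based on what your nova service can handle.

```shell
#!/bin/bash
# Hypothetical sketch: trigger amphora failovers one at a time, pausing
# between them to limit the load that simultaneous failovers put on nova.
# Usage: failover_throttled <pause-seconds> <management-ip>...
failover_throttled() {
    local pause=$1
    shift
    local ip port first=1

    for ip in "$@"; do
        # Pause before every failover except the first one.
        if (( ! first )); then
            sleep "${pause}"
        fi
        first=0

        # Find the single port that owns this management IP.
        port=$(openstack port list --fixed-ip ip-address="${ip}" \
            --format value --column ID)
        if [[ -z "${port}" || "${port}" == *$'\n'* ]]; then
            echo "skipping ${ip}: no unique port found" >&2
            continue
        fi

        echo "Failing over amphora ${ip} (port ${port})"
        openstack port set --disable "${port}"
    done
}
```

For example: failover_throttled 300 172.30.1.11 172.30.1.6. A fixed pause does not confirm that the previous failover has finished; checking for the changed management IP, as described above, is the more reliable signal before moving on.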

10.4.9.4 Load Balancer: Octavia Administration #

10.4.9.4.1 Removing load balancers #

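The following procedures demonstrate how to delete a load balancer that is in the ERROR, PENDING_CREATE, or PENDING_DELETE state. The first procedure removes the load balancer records from the neutron database; the second removes them from the Octavia database.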

Query the Neutron service for the loadbalancer ID:

tux > neutron lbaas-loadbalancer-list
neutron CLI is deprecated and will be removed in the future. Use openstack CLI instead.
+--------------------------------------+---------+----------------------------------+--------------+---------------------+----------+
| id                                   | name    | tenant_id                        | vip_address  | provisioning_status | provider |
+--------------------------------------+---------+----------------------------------+--------------+---------------------+----------+
| 7be4e4ab-e9c6-4a57-b767-da9af5ba7405 | test-lb | d62a1510b0f54b5693566fb8afeb5e33 | 192.168.1.10 | ERROR               | haproxy  |
+--------------------------------------+---------+----------------------------------+--------------+---------------------+----------+

Connect to the neutron database:

Important: The default database name depends on the lifecycle manager. Ardana uses ovs_neutron, while Crowbar uses neutron.

Ardana:

mysql> use ovs_neutron

Crowbar:

mysql> use neutron

Get the pools and healthmonitors associated with the loadbalancer:

mysql> select id, healthmonitor_id, loadbalancer_id from lbaas_pools where loadbalancer_id = '7be4e4ab-e9c6-4a57-b767-da9af5ba7405';
+--------------------------------------+--------------------------------------+--------------------------------------+
| id                                   | healthmonitor_id                     | loadbalancer_id                      |
+--------------------------------------+--------------------------------------+--------------------------------------+
| 26c0384b-fc76-4943-83e5-9de40dd1c78c | 323a3c4b-8083-41e1-b1d9-04e1fef1a331 | 7be4e4ab-e9c6-4a57-b767-da9af5ba7405 |
+--------------------------------------+--------------------------------------+--------------------------------------+

Get the members associated with the pool:

mysql> select id, pool_id from lbaas_members where pool_id = '26c0384b-fc76-4943-83e5-9de40dd1c78c';
+--------------------------------------+--------------------------------------+
| id                                   | pool_id                              |
+--------------------------------------+--------------------------------------+
| 6730f6c1-634c-4371-9df5-1a880662acc9 | 26c0384b-fc76-4943-83e5-9de40dd1c78c |
| 06f0cfc9-379a-4e3d-ab31-cdba1580afc2 | 26c0384b-fc76-4943-83e5-9de40dd1c78c |
+--------------------------------------+--------------------------------------+

Delete the pool members:

mysql> delete from lbaas_members where id = '6730f6c1-634c-4371-9df5-1a880662acc9';
mysql> delete from lbaas_members where id = '06f0cfc9-379a-4e3d-ab31-cdba1580afc2';

Find and delete the listener associated with the loadbalancer:

mysql> select id, loadbalancer_id, default_pool_id from lbaas_listeners where loadbalancer_id = '7be4e4ab-e9c6-4a57-b767-da9af5ba7405';
+--------------------------------------+--------------------------------------+--------------------------------------+
| id                                   | loadbalancer_id                      | default_pool_id                      |
+--------------------------------------+--------------------------------------+--------------------------------------+
| 3283f589-8464-43b3-96e0-399377642e0a | 7be4e4ab-e9c6-4a57-b767-da9af5ba7405 | 26c0384b-fc76-4943-83e5-9de40dd1c78c |
+--------------------------------------+--------------------------------------+--------------------------------------+
mysql> delete from lbaas_listeners where id = '3283f589-8464-43b3-96e0-399377642e0a';

Delete the pool associated with the loadbalancer:

mysql> delete from lbaas_pools where id = '26c0384b-fc76-4943-83e5-9de40dd1c78c';

Delete the healthmonitor associated with the pool:

mysql> delete from lbaas_healthmonitors where id = '323a3c4b-8083-41e1-b1d9-04e1fef1a331';

Delete the loadbalancer:

mysql> delete from lbaas_loadbalancer_statistics where loadbalancer_id = '7be4e4ab-e9c6-4a57-b767-da9af5ba7405';
mysql> delete from lbaas_loadbalancers where id = '7be4e4ab-e9c6-4a57-b767-da9af5ba7405';
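The neutron steps above can also be scripted. The following bash function is a minimal sketch, not a supported tool: the names neutron_lb_cleanup and _nlb_sql are hypothetical, and it assumes that mysql can connect to the database server without further credentials on the node where you run it. It uses the same tables and the same deletion order as the manual procedure, and if no database name is given it tries ovs_neutron (Ardana) and then neutron (Crowbar).

```shell
#!/bin/bash
# Hypothetical sketch of the manual neutron LBaaS cleanup steps.
# Usage: neutron_lb_cleanup <loadbalancer-id> [database]

# Run one SQL statement against database $1. -N/-B print bare,
# tab-separated rows; stdin is redirected so that mysql cannot consume
# the here-strings that feed the loops below.
_nlb_sql() {
    mysql -N -B "$1" --execute "$2" < /dev/null
}

neutron_lb_cleanup() {
    if (( $# < 1 || $# > 2 )); then
        echo "usage: neutron_lb_cleanup <loadbalancer-id> [database]" >&2
        return 1
    fi
    local lb_id=$1 db=${2:-} name pools pool hm

    # Without an explicit name, try ovs_neutron (Ardana), then neutron (Crowbar).
    if [[ -z "${db}" ]]; then
        for name in ovs_neutron neutron; do
            if [[ -n "$(mysql -N -B --execute "SHOW DATABASES LIKE '${name}';" < /dev/null)" ]]; then
                db=${name}
                break
            fi
        done
        if [[ -z "${db}" ]]; then
            echo "no neutron database found" >&2
            return 1
        fi
    fi

    # Read pool and health monitor IDs before anything is deleted.
    pools=$(_nlb_sql "${db}" "select id, healthmonitor_id from lbaas_pools where loadbalancer_id = '${lb_id}';")

    # Members reference their pool, so they go first.
    while read -r pool hm; do
        [[ -n "${pool}" ]] || continue
        _nlb_sql "${db}" "delete from lbaas_members where pool_id = '${pool}';"
    done <<< "${pools}"

    # Listeners reference the load balancer and their default pool.
    _nlb_sql "${db}" "delete from lbaas_listeners where loadbalancer_id = '${lb_id}';"

    # Pools, then the health monitors they pointed to (mysql prints NULL if none).
    while read -r pool hm; do
        [[ -n "${pool}" ]] || continue
        _nlb_sql "${db}" "delete from lbaas_pools where id = '${pool}';"
        if [[ -n "${hm}" && "${hm}" != NULL ]]; then
            _nlb_sql "${db}" "delete from lbaas_healthmonitors where id = '${hm}';"
        fi
    done <<< "${pools}"

    # Statistics, then the load balancer itself.
    _nlb_sql "${db}" "delete from lbaas_loadbalancer_statistics where loadbalancer_id = '${lb_id}';"
    _nlb_sql "${db}" "delete from lbaas_loadbalancers where id = '${lb_id}';"
}
```

For example: neutron_lb_cleanup 7be4e4ab-e9c6-4a57-b767-da9af5ba7405 ovs_neutron. The function does not stop when a statement fails, so check the mysql output after running it.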

Query the Octavia service for the loadbalancer ID:

tux > openstack loadbalancer list --column id --column name --column provisioning_status
+--------------------------------------+---------+---------------------+
| id                                   | name    | provisioning_status |
+--------------------------------------+---------+---------------------+
| d8ac085d-e077-4af2-b47a-bdec0c162928 | test-lb | ERROR               |
+--------------------------------------+---------+---------------------+

Query the Octavia service for the amphora IDs (in this example, the load balancer uses the ACTIVE/STANDBY topology with 1 spare amphora):

tux > openstack loadbalancer amphora list
+--------------------------------------+--------------------------------------+-----------+--------+---------------+-------------+
| id                                   | loadbalancer_id                      | status    | role   | lb_network_ip | ha_ip       |
+--------------------------------------+--------------------------------------+-----------+--------+---------------+-------------+
| 6dc66d41-e4b6-4c33-945d-563f8b26e675 | d8ac085d-e077-4af2-b47a-bdec0c162928 | ALLOCATED | BACKUP | 172.30.1.7    | 192.168.1.8 |
| 1b195602-3b14-4352-b355-5c4a70e200cf | d8ac085d-e077-4af2-b47a-bdec0c162928 | ALLOCATED | MASTER | 172.30.1.6    | 192.168.1.8 |
| b2ee14df-8ac6-4bb0-a8d3-3f378dbc2509 | None                                 | READY     | None   | 172.30.1.20   | None        |
+--------------------------------------+--------------------------------------+-----------+--------+---------------+-------------+

Query the Octavia service for the loadbalancer pools:

tux > openstack loadbalancer pool list
+--------------------------------------+-----------+----------------------------------+---------------------+----------+--------------+----------------+
| id                                   | name      | project_id                       | provisioning_status | protocol | lb_algorithm | admin_state_up |
+--------------------------------------+-----------+----------------------------------+---------------------+----------+--------------+----------------+
| 39c4c791-6e66-4dd5-9b80-14ea11152bb5 | test-pool | 86fba765e67f430b83437f2f25225b65 | ACTIVE              | TCP      | ROUND_ROBIN  | True           |
+--------------------------------------+-----------+----------------------------------+---------------------+----------+--------------+----------------+

Connect to the octavia database:

mysql> use octavia

Delete any listeners, pools, health monitors, and members from the load balancer:

mysql> delete from listener where load_balancer_id = 'd8ac085d-e077-4af2-b47a-bdec0c162928';
mysql> delete from health_monitor where pool_id = '39c4c791-6e66-4dd5-9b80-14ea11152bb5';
mysql> delete from member where pool_id = '39c4c791-6e66-4dd5-9b80-14ea11152bb5';
mysql> delete from pool where load_balancer_id = 'd8ac085d-e077-4af2-b47a-bdec0c162928';

Delete the amphora entries in the database:

mysql> delete from amphora_health where amphora_id = '6dc66d41-e4b6-4c33-945d-563f8b26e675';
mysql> update amphora set status = 'DELETED' where id = '6dc66d41-e4b6-4c33-945d-563f8b26e675';
mysql> delete from amphora_health where amphora_id = '1b195602-3b14-4352-b355-5c4a70e200cf';
mysql> update amphora set status = 'DELETED' where id = '1b195602-3b14-4352-b355-5c4a70e200cf';

Delete the load balancer instance:

mysql> update load_balancer set provisioning_status = 'DELETED' where id = 'd8ac085d-e077-4af2-b47a-bdec0c162928';

The following script automates the above steps:

#!/bin/bash
# Delete the listeners, pools, health monitors and members of a load balancer
# from the octavia database and mark its amphorae and the load balancer
# itself as DELETED.

if (( $# != 1 )); then
    echo "Please specify a loadbalancer ID"
    exit 1
fi

LB_ID=$1

set -u -e -x

# IDs of all amphorae that belong to the load balancer
readarray -t AMPHORAE < <(openstack loadbalancer amphora list \
    --format value \
    --column id \
    --column loadbalancer_id \
    | grep "${LB_ID}" \
    | cut -d ' ' -f 1)

# IDs of all pools that belong to the load balancer
readarray -t POOLS < <(openstack loadbalancer show "${LB_ID}" \
    --format value \
    --column pools)

# Listeners first, then the health monitors and members of each pool
mysql octavia --execute "delete from listener where load_balancer_id = '${LB_ID}';"
for p in "${POOLS[@]}"; do
    mysql octavia --execute "delete from health_monitor where pool_id = '${p}';"
    mysql octavia --execute "delete from member where pool_id = '${p}';"
done
mysql octavia --execute "delete from pool where load_balancer_id = '${LB_ID}';"

# Remove the health records and mark the amphorae as deleted
for a in "${AMPHORAE[@]}"; do
    mysql octavia --execute "delete from amphora_health where amphora_id = '${a}';"
    mysql octavia --execute "update amphora set status = 'DELETED' where id = '${a}';"
done

# Finally, mark the load balancer itself as deleted
mysql octavia --execute "update load_balancer set provisioning_status = 'DELETED' where id = '${LB_ID}';"

10.4.9.5 For More Information #

For more information on the OpenStackClient and Octavia terminology, see the OpenStackClient guide.

10.4.10 Role-based Access Control in neutron #

This topic explains how to achieve more granular access control for your neutron networks.

Previously in SUSE OpenStack Cloud, a network object was either private to a project or could be used by all projects. If the network's shared attribute was True, the network could be used by every project in the cloud. If it was False, only the members of the owning project could use it. There was no way to share a network with only a subset of the projects.

neutron Role-Based Access Control (RBAC) solves this problem for networks. The network owner can now create RBAC policies that give target projects access to the network. Members of a targeted project can use the network named in the RBAC policy as if the network were owned by their project. Constraints are described in Section 10.4.10.10, “Limitations”.

With RBAC, you can let another tenant use a network that you created, but as the owner of the network, you need to create the subnet and the router for it.
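As an illustration, the network owner could create the subnet and the router with a few openstack commands before sharing the network. This is a sketch under stated assumptions: the function name setup_owned_network, the derived names <network>-subnet and <network>-router, the example subnet range, and the optional external network name are all hypothetical.

```shell
#!/bin/bash
# Hypothetical sketch: as the owner of a network that will be shared through
# RBAC, create its subnet and router.
# Usage: setup_owned_network <network> <subnet-range> [external-network]
setup_owned_network() {
    local net=$1 range=$2 ext_net=${3:-}
    local subnet="${net}-subnet" router="${net}-router"

    openstack subnet create --network "${net}" --subnet-range "${range}" "${subnet}" || return 1
    openstack router create "${router}" || return 1
    # Attach the subnet to the router.
    openstack router add subnet "${router}" "${subnet}" || return 1

    # Optionally route north-south traffic through an external network.
    if [[ -n "${ext_net}" ]]; then
        openstack router set --external-gateway "${ext_net}" "${router}"
    fi
}
```

For example: setup_owned_network demo-net 10.0.1.0/24 ext-net, where ext-net stands for the name of an existing external network in your cloud.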

10.4.10.1 Creating a Network #

ardana > openstack network create demo-net

+---------------------------+--------------------------------------+
| Field                     | Value                                |
+---------------------------+--------------------------------------+
| admin_state_up            | UP                                   |
| availability_zone_hints   |                                      |
| availability_zones        |                                      |
| created_at                | 2018-07-25T17:43:59Z                 |
| description               |                                      |
| dns_domain                |                                      |
| id                        | 9c801954-ec7f-4a65-82f8-e313120aabc4 |
| ipv4_address_scope        | None                                 |
| ipv6_address_scope        | None                                 |
| is_default                | False                                |
| is_vlan_transparent       | None                                 |
| mtu                       | 1450                                 |
| name                      | demo-net                             |
| port_security_enabled     | False                                |
| project_id                | cb67c79e25a84e328326d186bf703e1b     |
| provider:network_type     | vxlan                                |
| provider:physical_network | None                                 |
| provider:segmentation_id  | 1009                                 |
| qos_policy_id             | None                                 |
| revision_number           | 2                                    |
| router:external           | Internal                             |
| segments                  | None                                 |
| shared                    | False                                |
| status                    | ACTIVE                               |
| subnets                   |                                      |
| tags                      |                                      |
| updated_at                | 2018-07-25T17:43:59Z                 |
+---------------------------+--------------------------------------+

10.4.10.2 Creating an RBAC Policy #