5 Input Model #

5.1 Introduction to the Input Model #

This document describes how SUSE OpenStack Cloud input models can be used to define and configure the cloud.

SUSE OpenStack Cloud ships with a set of example input models that can be used as starting points for defining a custom cloud. An input model allows you, the cloud administrator, to describe the cloud configuration in terms of:

Which OpenStack services run on which server nodes

How individual servers are configured in terms of disk and network adapters

The overall network configuration of the cloud

Network traffic separation

CIDR and VLAN assignments

The input model is consumed by the configuration processor which parses and validates the input model and outputs the effective configuration that will be deployed to each server that makes up your cloud.

The document is structured as follows:

- This explains the ideas behind the declarative model approach used in SUSE OpenStack Cloud 9 and the core concepts used in describing that model

- This section provides a description of each of the configuration entities in the input model

- In this section we provide samples and definitions of some of the more important configuration entities

5.2 Concepts #

An SUSE OpenStack Cloud 9 cloud is defined by a declarative model that is described in a series of configuration objects. These configuration objects are represented in YAML files which together constitute the various example configurations provided as templates with this release. These examples can be used nearly unchanged, with the exception of necessary changes to IP addresses and other site and hardware-specific identifiers. Alternatively, the examples may be customized to meet site requirements.

The following diagram shows the set of configuration objects and their relationships. All objects have a name that you may set to be something meaningful for your context. In the examples these names are provided in capital letters as a convention. These names have no significance to SUSE OpenStack Cloud, rather it is the relationships between them that define the configuration.

The configuration processor reads and validates the input model described in the YAML files discussed above, combines it with the service definitions provided by SUSE OpenStack Cloud and any persisted state information about the current deployment to produce a set of Ansible variables that can be used to deploy the cloud. It also produces a set of information files that provide details about the configuration.

The relationship between the file systems on the SUSE OpenStack Cloud deployment server and the configuration processor is shown in the following diagram. Below the line are the directories that you, the cloud administrator, edit to declare the cloud configuration. Above the line are the directories that are internal to the Cloud Lifecycle Manager such as Ansible playbooks and variables.

The input model is read from the

~/openstack/my_cloud/definition directory. Although the

supplied examples use separate files for each type of object in the model,

the names and layout of the files have no significance to the configuration

processor, it simply reads all of the .yml files in this directory. Cloud

administrators are therefore free to use whatever structure is best for their

context. For example, you may decide to maintain separate files or

sub-directories for each physical rack of servers.

As mentioned, the examples use the conventional upper casing for object names, but these strings are used only to define the relationship between objects. They have no specific significance to the configuration processor.

5.2.1 Cloud #

The Cloud definition includes a few top-level configuration values such as the name of the cloud, the host prefix, details of external services (NTP, DNS, SMTP) and the firewall settings.

The location of the cloud configuration file also tells the configuration processor where to look for the files that define all of the other objects in the input model.

5.2.2 Control Planes #

A control-plane runs one or more distributed across and .

A control-plane uses servers with a particular .

A provides the operating environment for a set of ; normally consisting of a set of shared services (MariaDB, RabbitMQ, HA Proxy, Apache, etc.), OpenStack control services (API, schedulers, etc.) and the they are managing (compute, storage, etc.).

A simple cloud may have a single which runs all of the . A more complex cloud may have multiple to allow for more than one instance of some services. Services that need to consume (use) another service (such as neutron consuming MariaDB, nova consuming neutron) always use the service within the same . In addition a control-plane can describe which services can be consumed from other control-planes. It is one of the functions of the configuration processor to resolve these relationships and make sure that each consumer/service is provided with the configuration details to connect to the appropriate provider/service.

Each is structured as and . The are typically used to host the OpenStack services that manage the cloud such as API servers, database servers, neutron agents, and swift proxies, while the are used to host the scale-out OpenStack services such as nova-Compute or swift-Object services. This is a representation convenience rather than a strict rule, for example it is possible to run the swift-Object service in the management cluster in a smaller-scale cloud that is not designed for scale-out object serving.

A cluster can contain one or more and you can have one or more depending on the capacity and scalability needs of the cloud that you are building. Spreading services across multiple provides greater scalability, but it requires a greater number of physical servers. A common pattern for a large cloud is to run high data volume services such as monitoring and logging in a separate cluster. A cloud with a high object storage requirement will typically also run the swift service in its own cluster.

Clusters in this context are a mechanism for grouping service components in physical servers, but all instances of a component in a work collectively. For example, if HA Proxy is configured to run on multiple clusters within the same then all of those instances will work as a single instance of the ha-proxy service.

Both and define the type (via a list of ) and number of servers (min and max or count) they require.

The can also define a list of failure-zones () from which to allocate servers.

5.2.2.1 Control Planes and Regions #

A region in OpenStack terms is a collection of URLs that together provide a

consistent set of services (nova, neutron, swift, etc). Regions are

represented in the keystone identity service catalog. In SUSE OpenStack Cloud,

multiple regions are not supported. Only Region0 is valid.

In a simple single control-plane cloud, there is no need for a separate region definition and the control-plane itself can define the region name.

5.2.3 Services #

A runs one or more .

A service is the collection of that provide a particular feature; for example, nova provides the compute service and consists of the following service-components: nova-api, nova-scheduler, nova-conductor, nova-novncproxy, and nova-compute. Some services, like the authentication/identity service keystone, only consist of a single service-component.

To define your cloud, all you need to know about a service are the names of the . The details of the services themselves and how they interact with each other is captured in service definition files provided by SUSE OpenStack Cloud.

When specifying your SUSE OpenStack Cloud cloud you have to decide where components will run and how they connect to the networks. For example, should they all run in one sharing common services or be distributed across multiple to provide separate instances of some services? The SUSE OpenStack Cloud supplied examples provide solutions for some typical configurations.

Where services run is defined in the . How they connect to networks is defined in the .

5.2.4 Server Roles #

and use with a particular set of s.

You are going to be running the services on physical , and you are going to need a way to specify which type of servers you want to use where. This is defined via the . Each describes how to configure the physical aspects of a server to fulfill the needs of a particular role. You will generally use a different role whenever the servers are physically different (have different disks or network interfaces) or if you want to use some specific servers in a particular role (for example to choose which of a set of identical servers are to be used in the control plane).

Each has a relationship to four other entities:

The specifies how to configure and use a server's local storage and it specifies disk sizing information for virtual machine servers. The disk model is described in the next section.

The describes how a server's network interfaces are to be configured and used. This is covered in more details in the networking section.

An optional specifies how to configure and use huge pages. The memory-model specifies memory sizing information for virtual machine servers.

An optional specifies how the CPUs will be used by nova and by DPDK. The cpu-model specifies CPU sizing information for virtual machine servers.

5.2.5 Disk Model #

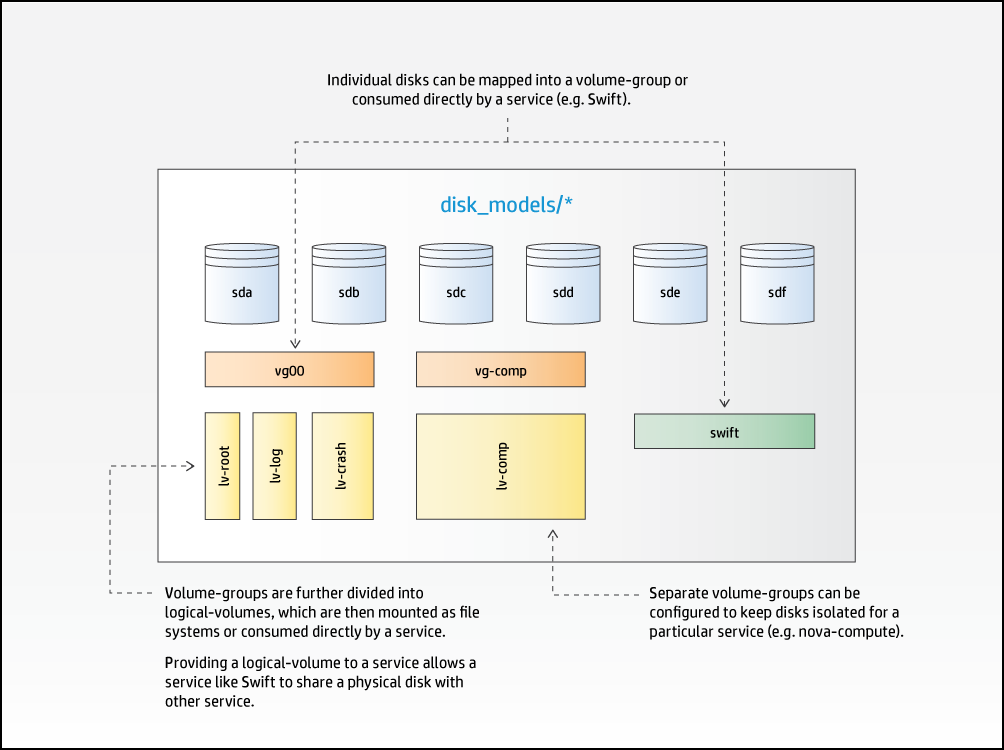

Each physical disk device is associated with a or a .

are consumed by .

are divided into .

are mounted as file systems or consumed by services.

Disk-models define how local storage is to be configured and presented to . Disk-models are identified by a name, which you will specify. The SUSE OpenStack Cloud examples provide some typical configurations. As this is an area that varies with respect to the services that are hosted on a server and the number of disks available, it is impossible to cover all possible permutations you may need to express via modifications to the examples.

Within a , disk devices are assigned to either a or a .

A is a set of one or more disks that are to be consumed directly by a service. For example, a set of disks to be used by swift. The device-group identifies the list of disk devices, the service, and a few service-specific attributes that tell the service about the intended use (for example, in the case of swift this is the ring names). When a device is assigned to a device-group, the associated service is responsible for the management of the disks. This management includes the creation and mounting of file systems. (swift can provide additional data integrity when it has full control over the file systems and mount points.)

A is used to present disk devices in a LVM volume group. It also contains details of the logical volumes to be created including the file system type and mount point. Logical volume sizes are expressed as a percentage of the total capacity of the volume group. A can also be consumed by a service in the same way as a . This allows services to manage their own devices on configurations that have limited numbers of disk drives.

Disk models also provide disk sizing information for virtual machine servers.

5.2.6 Memory Model #

Memory models define how the memory of a server should be configured to meet the needs of a particular role. It allows a number of HugePages to be defined at both the server and numa-node level.

Memory models also provide memory sizing information for virtual machine servers.

Memory models are optional - it is valid to have a server role without a memory model.

5.2.7 CPU Model #

CPU models define how CPUs of a server will be used. The model allows CPUs to be assigned for use by components such as nova (for VMs) and Open vSwitch (for DPDK). It also allows those CPUs to be isolated from the general kernel SMP balancing and scheduling algorithms.

CPU models also provide CPU sizing information for virtual machine servers.

CPU models are optional - it is valid to have a server role without a cpu model.

5.2.8 Servers #

have a which determines how they will be used in the cloud.

(in the input model) enumerate the resources available for your cloud. In addition, in this definition file you can either provide SUSE OpenStack Cloud with all of the details it needs to PXE boot and install an operating system onto the server, or, if you prefer to use your own operating system installation tooling you can simply provide the details needed to be able to SSH into the servers and start the deployment.

The address specified for the server will be the one used by SUSE OpenStack Cloud for lifecycle management and must be part of a network which is in the input model. If you are using SUSE OpenStack Cloud to install the operating system this network must be an untagged VLAN. The first server must be installed manually from the SUSE OpenStack Cloud ISO and this server must be included in the input model as well.

In addition to the network details used to install or connect to the server, each server defines what its is and to which it belongs.

5.2.9 Server Groups #

A is associated with a .

A can use as failure zones for server allocation.

A may be associated with a list of .

A can contain other .

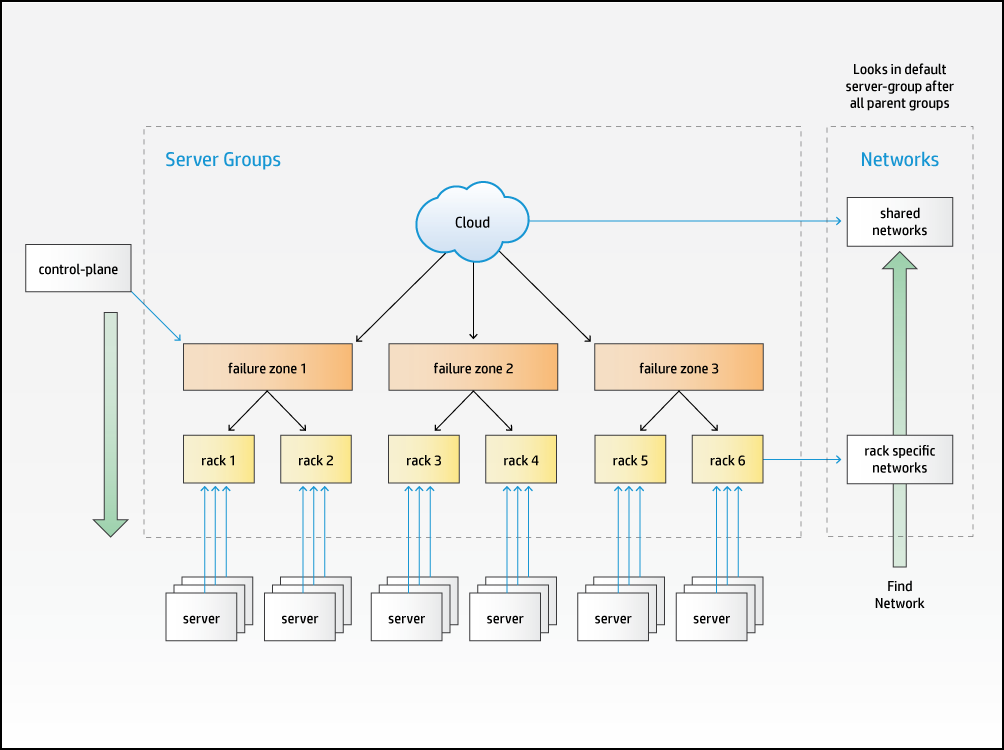

The practice of locating physical servers in a number of racks or enclosures in a data center is common. Such racks generally provide a degree of physical isolation that allows for separate power and/or network connectivity.

In the SUSE OpenStack Cloud model we support this configuration by allowing you to define a hierarchy of . Each is associated with one , normally at the bottom of the hierarchy.

are an optional part of the input model - if you do not define any, then all and will be allocated as if they are part of the same .

5.2.9.1 Server Groups and Failure Zones #

A defines a list of as the failure zones from which it wants to use servers. All servers in a listed as a failure zone in the and any they contain are considered part of that failure zone for allocation purposes. The following example shows how three levels of can be used to model a failure zone consisting of multiple racks, each of which in turn contains a number of .

When allocating , the configuration processor will traverse down the hierarchy of listed as failure zones until it can find an available server with the required . If the allocation policy is defined to be strict, it will allocate equally across each of the failure zones. A or can also independently specify the failure zones it wants to use if needed.

5.2.9.2 Server Groups and Networks #

Each L3 in a cloud must be associated with all or some of the , typically following a physical pattern (such as having separate networks for each rack or set of racks). This is also represented in the SUSE OpenStack Cloud model via , each group lists zero or more networks to which associated with at or below this point in the hierarchy are connected.

When the configuration processor needs to resolve the specific a should be configured to use, it traverses up the hierarchy of , starting with the group the server is directly associated with, until it finds a server-group that lists a network in the required network group.

The level in the hierarchy at which a is associated will depend on the span of connectivity it must provide. In the above example there might be networks in some which are per rack (that is Rack 1 and Rack 2 list different networks from the same ) and in a different that span failure zones (the network used to provide floating IP addresses to virtual machines for example).

5.2.10 Networking #

In addition to the mapping of to specific and we must also be able to define how the connect to one or more .

In a simple cloud there may be a single L3 network but more typically there are functional and physical layers of network separation that need to be expressed.

Functional network separation provides different networks for different types of traffic; for example, it is common practice in even small clouds to separate the External APIs that users will use to access the cloud and the external IP addresses that users will use to access their virtual machines. In more complex clouds it is common to also separate out virtual networking between virtual machines, block storage traffic, and volume traffic onto their own sets of networks. In the input model, this level of separation is represented by .

Physical separation is required when there are separate L3 network segments providing the same type of traffic; for example, where each rack uses a different subnet. This level of separation is represented in the input model by the within each .

5.2.10.1 Network Groups #

Service endpoints attach to in a specific .

can define routes to other .

encapsulate the configuration for via

A defines the traffic separation model and all of the properties that are common to the set of L3 networks that carry each type of traffic. They define where services are attached to the network model and the routing within that model.

In terms of connectivity, all that has to be captured in the definition are the same service-component names that are used when defining . SUSE OpenStack Cloud also allows a default attachment to be used to specify "all service-components" that are not explicitly connected to another . So, for example, to isolate swift traffic, the swift-account, swift-container, and swift-object service components are attached to an "Object" and all other services are connected to "MANAGEMENT" via the default relationship.

The name of the "MANAGEMENT" cannot be changed. It must be upper case. Every SUSE OpenStack Cloud requires this network group in order to be valid.

The details of how each service connects, such as what port it uses, if it should be behind a load balancer, if and how it should be registered in keystone, and so forth, are defined in the service definition files provided by SUSE OpenStack Cloud.

In any configuration with multiple networks, controlling the routing is a major consideration. In SUSE OpenStack Cloud, routing is controlled at the level. First, all are configured to provide the route to any other in the same . In addition, a may be configured to provide the route any other in the same ; for example, if the internal APIs are in a dedicated (a common configuration in a complex network because a network group with load balancers cannot be segmented) then other may need to include a route to the internal API so that services can access the internal API endpoints. Routes may also be required to define how to access an external storage network or to define a general default route.

As part of the SUSE OpenStack Cloud deployment, networks are configured to act as the default route for all traffic that was received via that network (so that response packets always return via the network the request came from).

Note that SUSE OpenStack Cloud will configure the routing rules on the servers it deploys and will validate that the routes between services exist in the model, but ensuring that gateways can provide the required routes is the responsibility of your network configuration. The configuration processor provides information about the routes it is expecting to be configured.

For a detailed description of how the configuration processor validates routes, refer to Section 7.6, “Network Route Validation”.

5.2.10.1.1 Load Balancers #

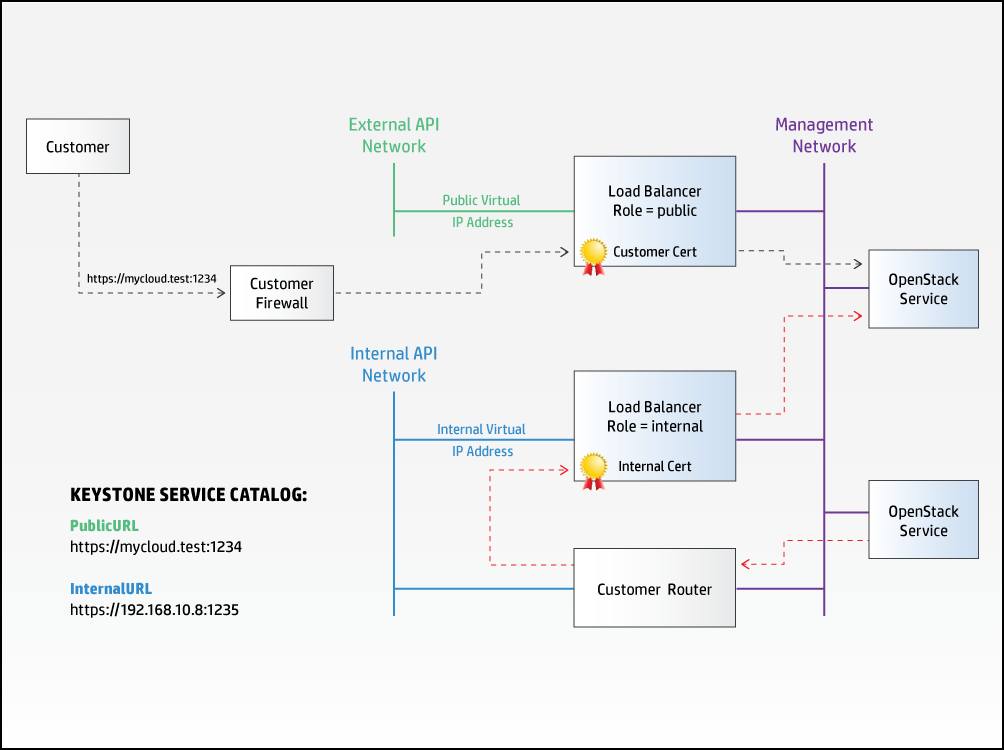

provide a specific type of routing and are defined as a relationship between the virtual IP address (VIP) on a network in one and a set of service endpoints (which may be on in the same or a different ).

As each is defined providing a virtual IP on a , it follows that those s can each only have one associated to them.

The definition includes a list of and endpoint roles it will provide a virtual IP for. This model allows service-specific to be defined on different . A "default" value is used to express "all service-components" which require a virtual IP address and are not explicitly configured in another configuration. The details of how the should be configured for each service, such as which ports to use, how to check for service liveness, etc., are provided in the SUSE OpenStack Cloud supplied service definition files.

Where there are multiple instances of a service (for example, in a cloud with multiple control-planes), each control-plane needs its own set of virtual IP address and different values for some properties such as the external name and security certificate. To accommodate this in SUSE OpenStack Cloud 9, load-balancers are defined as part of the control-plane, with the network groups defining just which load-balancers are attached to them.

Load balancers are always implemented by an ha-proxy service in the same control-plane as the services.

5.2.10.1.2 Separation of Public, Admin, and Internal Endpoints #

The list of endpoint roles for a make it possible to configure separate for public and internal access to services, and the configuration processor uses this information to both ensure the correct registrations in keystone and to make sure the internal traffic is routed to the correct endpoint. SUSE OpenStack Cloud services are configured to only connect to other services via internal virtual IP addresses and endpoints, allowing the name and security certificate of public endpoints to be controlled by the customer and set to values that may not be resolvable/accessible from the servers making up the cloud.

Note that each defined in the input model will be allocated a separate virtual IP address even when the load-balancers are part of the same . Because of the need to be able to separate both public and internal access, SUSE OpenStack Cloud will not allow a single to provide both public and internal access. in this context are logical entities (sets of rules to transfer traffic from a virtual IP address to one or more endpoints).

The following diagram shows a possible configuration in which the hostname associated with the public URL has been configured to resolve to a firewall controlling external access to the cloud. Within the cloud, SUSE OpenStack Cloud services are configured to use the internal URL to access a separate virtual IP address.

5.2.10.1.3 Network Tags #

Network tags are defined by some SUSE OpenStack Cloud and are used to convey information between the network model and the service, allowing the dependent aspects of the service to be automatically configured.

Network tags also convey requirements a service may have for aspects of the server network configuration, for example, that a bridge is required on the corresponding network device on a server where that service-component is installed.

See Section 6.13.2, “Network Tags” for more information on specific tags and their usage.

5.2.10.2 Networks #

A is part of a .

are fairly simple definitions. Each defines the details of its VLAN, optional address details (CIDR, start and end address, gateway address), and which it is a member of.

5.2.10.3 Interface Model #

A identifies an that describes how its network interfaces are to be configured and used.

Network groups are mapped onto specific network interfaces via an , which describes the network devices that need to be created (bonds, ovs-bridges, etc.) and their properties.

An acts like a template; it can define how some or all of the are to be mapped for a particular combination of physical NICs. However, it is the on each server that determine which are required and hence which interfaces and will be configured. This means that can be shared between different . For example, an API role and a database role may share an interface model even though they may have different disk models and they will require a different subset of the .

Within an , physical ports are identified by a device name, which in turn is resolved to a physical port on a server basis via a . To allow different physical servers to share an , the is defined as a property of each .

The interface-model can also used to describe how network

devices are to be configured for use with DPDK, SR-IOV, and PCI Passthrough.

5.2.10.4 NIC Mapping #

When a has more than a single physical network

port, a is required to unambiguously identify

each port. Standard Linux mapping of ports to interface names at the time of

initial discovery (for example, eth0,

eth1, eth2, ...) is not uniformly

consistent

from server to server, so a mapping of PCI bus address to interface name is

instead.

NIC mappings are also used to specify the device type for interfaces that are to be used for SR-IOV or PCI Passthrough. Each SUSE OpenStack Cloud release includes the data for the supported device types.

5.2.10.5 Firewall Configuration #

The configuration processor uses the details it has about which networks and ports use to create a set of firewall rules for each server. The model allows additional user-defined rules on a per basis.

5.2.11 Configuration Data #

Configuration Data is used to provide settings which have to be applied in a specific context, or where the data needs to be verified against or merged with other values in the input model.

For example, when defining a neutron provider network to be used by Octavia, the network needs to be included in the routing configuration generated by the Configuration Processor.