4 High Availability

This chapter gives an overview of High Availability concepts and describes the highly available cloud infrastructure.

4.1 High Availability Concepts Overview

A highly available (HA) cloud ensures that a minimum level of cloud resources is always available on request, which results in uninterrupted operations for users.

To achieve this high availability of infrastructure and workloads, we limit the scope of HA to protecting them against single points of failure (SPOF) only. Single points of failure include:

Hardware SPOFs: Hardware failures can take the form of server failures, memory going bad, power failures, hypervisors crashing, hard disks dying, NICs breaking, switch ports failing, network cables loosening, and so forth.

Software SPOFs: Server processes can crash due to software defects, out-of-memory conditions, operating system kernel panics, and so forth.

By design, SUSE OpenStack Cloud strives to create a system architecture resilient to SPOFs. It does not attempt to automatically protect the system against multiple cascading levels of failures; such cascading failures will result in an unpredictable state. The cloud operator is encouraged to recover and restore any failed component as soon as the first level of failure occurs.

4.2 Highly Available Cloud Infrastructure

The highly available cloud infrastructure consists of the following:

High Availability of Controllers

Availability Zones

Compute with KVM

nova Availability Zones

Compute with ESX

Object Storage with swift

4.3 High Availability of Controllers

The SUSE OpenStack Cloud installer deploys highly available configurations of OpenStack cloud services, resilient against single points of failure.

The high availability of the controller components comes in two main forms.

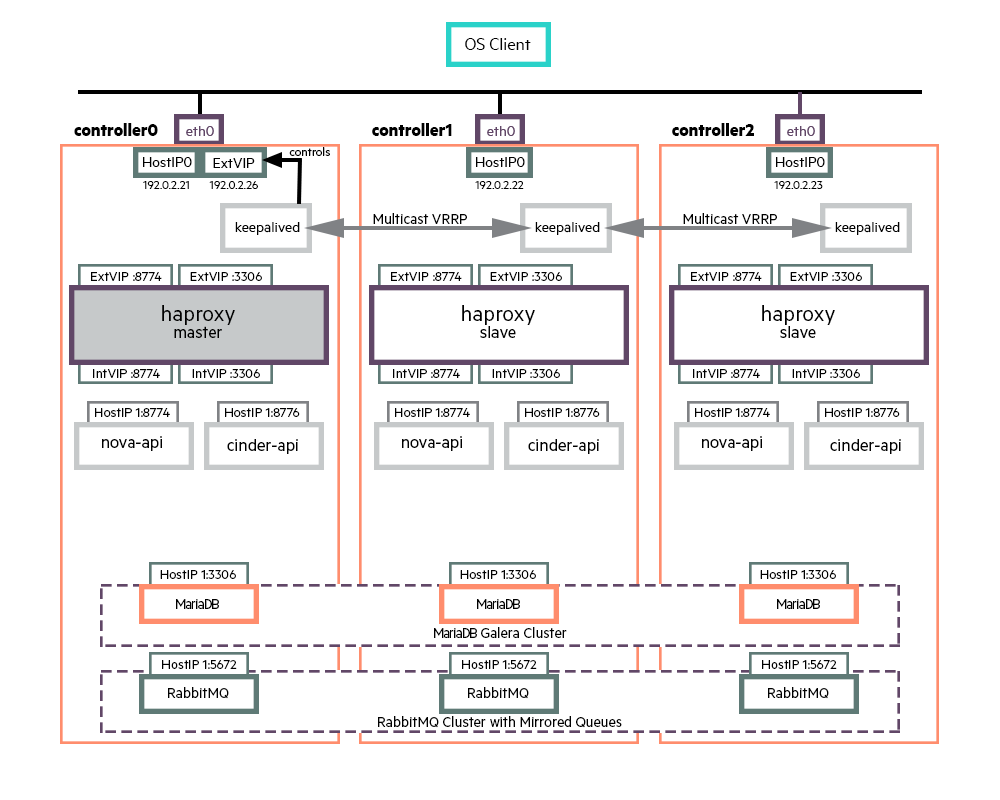

Many services are stateless, and multiple instances are run across the control plane in active-active mode. The API services (nova-api, cinder-api, etc.) are accessed through the HAProxy load balancer, whereas the internal services (nova-scheduler, cinder-scheduler, etc.) are accessed through the message broker. These services use the database cluster to persist any data.

Note: The HAProxy load balancer is also run in active-active mode and keepalived (used for Virtual IP (VIP) Management) is run in active-active mode, with only one keepalived instance holding the VIP at any one point in time.

The high availability of the message queue service and the database service is achieved by running these in a clustered mode across the three nodes of the control plane: RabbitMQ cluster with Mirrored Queues and MariaDB Galera cluster.

The above diagram illustrates the HA architecture with the focus on VIP management and load balancing. It only shows a subset of active-active API instances and does not show examples of other services such as nova-scheduler, cinder-scheduler, etc.

In the above diagram, requests from an OpenStack client to the API services are sent to a VIP and port combination; for example, 192.0.2.26:8774 for a nova request. The load balancer listens for requests on that VIP and port. When it receives a request, it selects one of the controller nodes configured for handling requests of that type (nova requests in this case) and forwards the request to the IP of the selected controller node on the same port.

The nova-api service, which is listening for requests on the IP of its host machine, then receives the request and deals with it accordingly. The database service is also accessed through the load balancer. RabbitMQ, on the other hand, is not currently accessed through the VIP/HAProxy, because the clients are configured with the set of nodes in the RabbitMQ cluster and failover between cluster nodes is handled automatically by the clients.
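The client-side failover described for RabbitMQ can be pictured with a minimal sketch. It assumes a hypothetical `connect` callable that raises `ConnectionError` when a node is down; the node names and the `fake_connect` stand-in are illustrative only, and real messaging clients implement this logic internally.

```python
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def connect_with_failover(nodes: Sequence[str], connect: Callable[[str], T]) -> T:
    """Try each configured node in turn and return the first successful connection.

    `connect` is a hypothetical stand-in for a real client's connection routine;
    it is expected to raise ConnectionError when a node cannot be reached.
    """
    last_error = None
    for node in nodes:
        try:
            return connect(node)
        except ConnectionError as exc:
            last_error = exc  # remember the failure and move on to the next node
    raise ConnectionError(f"all nodes unreachable: {list(nodes)}") from last_error


def fake_connect(node: str) -> str:
    """Illustrative connection routine that pretends the first node is down."""
    if node == "controller1:5672":
        raise ConnectionError(f"{node} is down")
    return f"connected to {node}"


# Hypothetical three-node cluster, mirroring the three control plane nodes.
CLUSTER = ["controller1:5672", "controller2:5672", "controller3:5672"]
result = connect_with_failover(CLUSTER, fake_connect)
print(result)
```

Because the client already knows every cluster member, no load balancer is needed on this path: a failed node only costs one extra connection attempt.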

4.4 High Availability Routing - Centralized

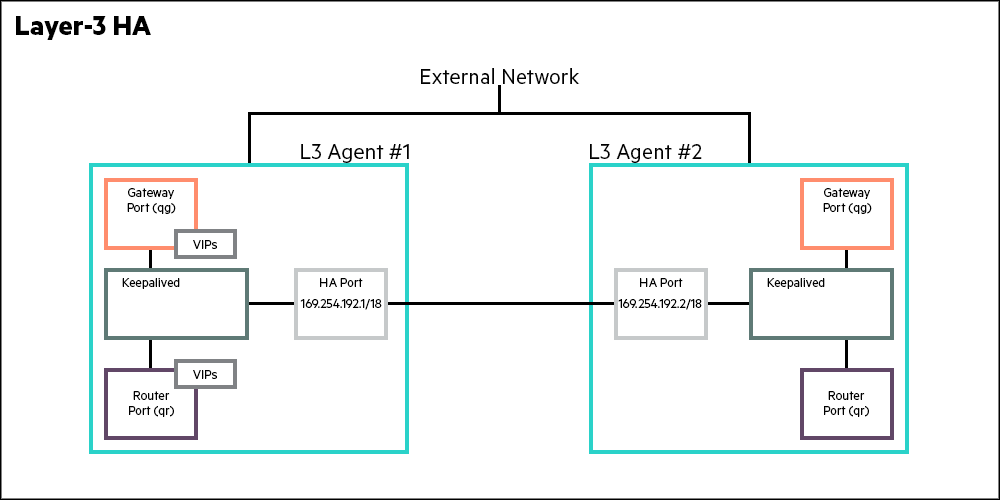

Incorporating High Availability into a system involves implementing redundancies in the component that is being made highly available. In Centralized Virtual Router (CVR), that component is the Layer 3 agent (L3 agent). When the L3 agent is made highly available, all HA routers are migrated from the primary L3 agent to a secondary L3 agent upon failure. The efficiency of an HA subsystem is measured by the number of packets that are lost while the secondary L3 agent is made the master.

In SUSE OpenStack Cloud, the primary and secondary L3 agents run continuously, and failover involves a rapid switchover of mastership to the secondary agent (IETF RFC 5798). The failover is essentially a switchover from an already running master to an already running slave, which substantially reduces failover latency. The failover mechanism used by the master and the slave is implemented using Linux’s pacemaker HA resource manager. This cluster resource manager (CRM) uses VRRP (Virtual Router Redundancy Protocol) to implement the HA mechanism. VRRP is an industry-standard protocol defined in RFC 5798.

The use of VRRP for L3 HA comes with several benefits.

The primary benefit is that the failover mechanism does not involve interprocess communication overhead, which would be on the order of tens of seconds. Because no RPC mechanism is needed to tell the secondary agent to assume the primary agent's role, VRRP can achieve failover within 1-2 seconds.

In VRRP, the primary and secondary routers are all active. Because the routers are already running, failover is only a matter of making the secondary router aware of its new primary/master status. This switchover takes less than 2 seconds, instead of the 60+ seconds it would take to start a backup router and fail over to it.

The failover depends upon a heartbeat link between the primary and secondary. In SUSE OpenStack Cloud, that link uses the keepalived package of the pacemaker resource manager. The heartbeats are sent at 2-second intervals between the primary and secondary. As per the VRRP protocol, if the secondary does not hear from the master after 3 intervals, it assumes the function of the primary.

Further, all the routable IP addresses, that is, the VIPs (virtual IPs), are assigned to the primary agent.
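The heartbeat rule above can be illustrated with a small timing model. This is a simplified sketch, not keepalived's implementation: the `BackupRouter` class and its field names are hypothetical, the 2-second interval and 3-interval limit are taken from the text, and the additional timing details of a real VRRP implementation are ignored.

```python
from dataclasses import dataclass


@dataclass
class BackupRouter:
    """Simplified model of a secondary router deciding when to take over."""

    advert_interval: float = 2.0  # seconds between heartbeats (value from the text)
    missed_limit: int = 3         # silent intervals tolerated before takeover
    last_heartbeat: float = 0.0   # time the last heartbeat was received
    is_master: bool = False

    def heartbeat(self, now: float) -> None:
        """Record a heartbeat received from the current master."""
        self.last_heartbeat = now

    def tick(self, now: float) -> bool:
        """Promote this router if the master has been silent for too long."""
        silence = now - self.last_heartbeat
        if not self.is_master and silence >= self.advert_interval * self.missed_limit:
            self.is_master = True
        return self.is_master


backup = BackupRouter()
backup.heartbeat(now=10.0)
still_backup = backup.tick(now=14.0)  # 4 s of silence: fewer than 3 intervals
promoted = backup.tick(now=16.0)      # 6 s of silence: 3 intervals missed
print(still_backup, promoted)
```

In this model the secondary never has to be started or contacted over RPC; it only flips its own state once the heartbeats stop.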

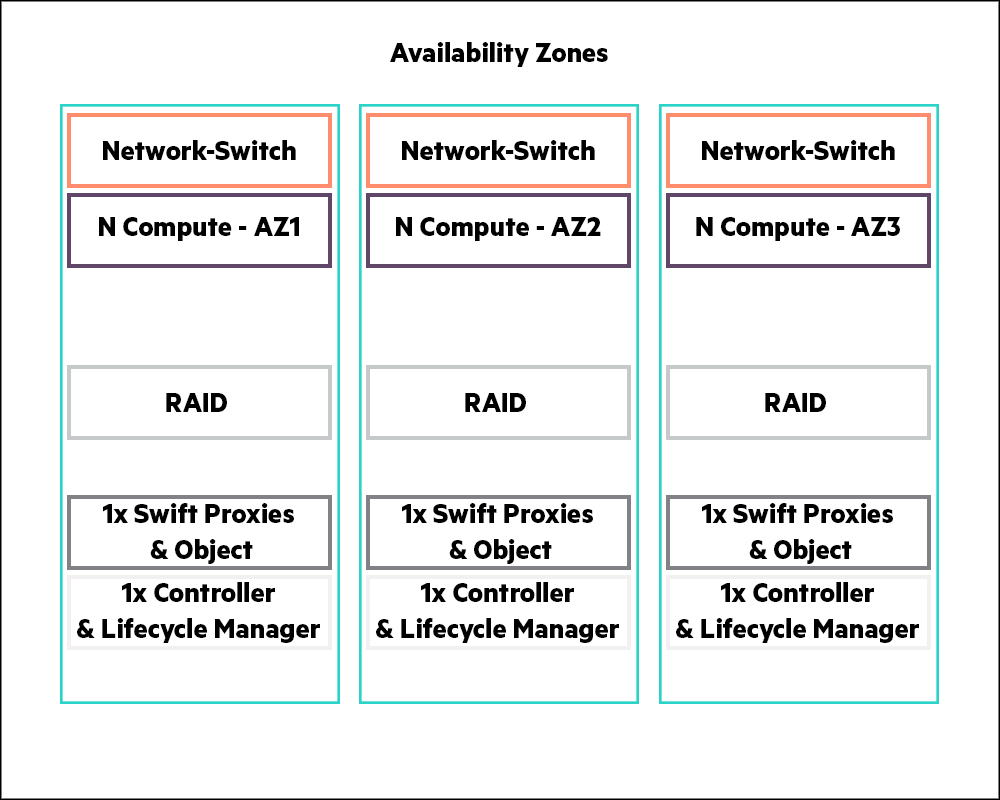

4.5 Availability Zones

While planning your OpenStack deployment, you should decide on how to zone various types of nodes - such as compute, block storage, and object storage. For example, you may decide to place all servers in the same rack in the same zone. For larger deployments, you may plan more elaborate redundancy schemes for redundant power, network ISP connection, and even physical firewalling between zones (this aspect is outside the scope of this document).

SUSE OpenStack Cloud offers APIs, CLIs, and horizon UIs for the administrator to define, and the user to consume, availability zones for the nova, cinder, and swift services. This section outlines the process to deploy specific types of nodes to specific physical servers, and states the support available for these types of availability zones in the current release.

By default, SUSE OpenStack Cloud is deployed in a single availability zone upon installation. If required, an administrator can configure multiple availability zones after installation. Refer to the OpenStack documentation.

4.6 Compute with KVM

You can deploy your KVM nova-compute nodes either during the initial installation or by adding compute nodes afterward.

When adding compute nodes after the initial installation, you can specify the target physical servers for deploying the compute nodes.

Learn more about adding compute nodes in Section 15.1.3.4, “Adding Compute Node”.

4.7 Nova Availability Zones

nova host aggregates and nova availability zones can be used to segregate nova compute nodes across different failure zones.

4.8 Compute with ESX Hypervisor

Compute nodes deployed on ESX Hypervisor can be made highly available using the HA feature of VMware ESX Clusters. For more information on VMware HA, please refer to your VMware ESX documentation.

4.9 cinder Availability Zones

cinder availability zones are not supported for general consumption in the current release.

4.10 Object Storage with Swift

High availability in swift is achieved at two levels.

Control Plane

The swift API is served by multiple swift proxy nodes. Client requests are distributed across all swift proxy nodes by the HAProxy load balancer in round-robin fashion. The HAProxy load balancer regularly checks that each node is responding, so that if a node fails, traffic is directed to the remaining nodes. The swift service will continue to operate and respond to client requests as long as at least one swift proxy server is running.

If a swift proxy node fails in the middle of a transaction, the transaction fails. However, it is standard practice for swift clients to retry operations. This is transparent to applications that use the python-swiftclient library.

The entry-scale example cloud models contain three swift proxy nodes. However, it is possible to add more clusters with additional swift proxy nodes to handle a larger workload or to provide additional resiliency.
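The round-robin distribution with health checks described above can be sketched as follows. This is an illustrative model, not HAProxy's implementation or configuration: the `RoundRobinBalancer` class, its methods, and the proxy names are all hypothetical.

```python
from typing import Iterable, List, Set


class RoundRobinBalancer:
    """Toy round-robin balancer that skips nodes marked unhealthy."""

    def __init__(self, nodes: Iterable[str]) -> None:
        self.nodes: List[str] = list(nodes)
        self.healthy: Set[str] = set(self.nodes)
        self._next = 0  # index of the node to try next

    def mark(self, node: str, healthy: bool) -> None:
        """Record the result of a health check for one node."""
        if healthy:
            self.healthy.add(node)
        else:
            self.healthy.discard(node)

    def pick(self) -> str:
        """Return the next healthy node, or fail if none are left."""
        for _ in range(len(self.nodes)):
            node = self.nodes[self._next]
            self._next = (self._next + 1) % len(self.nodes)
            if node in self.healthy:
                return node
        raise RuntimeError("no healthy proxy nodes")


lb = RoundRobinBalancer(["proxy1", "proxy2", "proxy3"])
first_round = [lb.pick() for _ in range(3)]
lb.mark("proxy2", healthy=False)  # simulate a failed health check
after_failure = [lb.pick() for _ in range(4)]
print(first_round, after_failure)
```

As long as one node remains in the healthy set, `pick` keeps returning a target, which mirrors the statement that the service continues while at least one swift proxy server is running.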

Data

Multiple replicas of all data are stored. This applies to account, container, and object data. The example cloud models recommend a replica count of three. However, you may change this to a higher value if needed.

When swift stores different replicas of the same item on disk, it ensures that, as far as possible, each replica is stored in a different zone, server, or drive. This means that if a single server or disk drive fails, there should be two copies of the item on other servers or disk drives.

If a disk drive fails, swift will continue to store three replicas. The replicas that would normally be stored on the failed drive are “handed off” to another drive on the system. When the failed drive is replaced, the data on that drive is reconstructed by the replication process. The replication process re-creates the “missing” replicas by copying them to the drive from one of the remaining replicas. While this is happening, swift can continue to store and retrieve data.
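The placement and handoff behavior can be pictured with a deliberately simplified sketch. It is not swift's ring algorithm: the `place_replicas` function, the drive names, and the zone layout are hypothetical, and the only rule modeled is "prefer a zone that is not yet used, and fall back to another working drive otherwise".

```python
from typing import AbstractSet, Dict, List, Set


def place_replicas(drives: Dict[str, str], replicas: int = 3,
                   failed: AbstractSet[str] = frozenset()) -> List[str]:
    """Choose working drives for the replicas, preferring distinct zones.

    `drives` maps a drive name to the zone it belongs to.
    """
    available = [d for d in sorted(drives) if d not in failed]
    if len(available) < replicas:
        raise ValueError("not enough working drives")
    chosen: List[str] = []
    used_zones: Set[str] = set()
    # First pass: take at most one drive from each zone.
    for drive in available:
        if len(chosen) == replicas:
            break
        if drives[drive] not in used_zones:
            chosen.append(drive)
            used_zones.add(drives[drive])
    # Second pass: hand off to drives in already-used zones if needed.
    for drive in available:
        if len(chosen) == replicas:
            break
        if drive not in chosen:
            chosen.append(drive)
    return chosen


# Hypothetical layout: five drives spread over three zones.
DRIVES = {"d1": "zone1", "d2": "zone1", "d3": "zone2", "d4": "zone2", "d5": "zone3"}
normal = place_replicas(DRIVES)
degraded = place_replicas(DRIVES, failed={"d5"})
print(normal, degraded)
```

With all drives working, each replica lands in its own zone. When `d5` is marked failed, three replicas are still placed, with the third handed off to a drive in a zone that is already in use.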

4.11 Highly Available Cloud Applications and Workloads

Projects writing applications to be deployed in the cloud must be aware of the cloud architecture and its potential points of failure, and must architect their applications for high availability accordingly.

Some guidelines for consideration:

Assume intermittent failures and plan for retries

OpenStack Service APIs: invocations can fail - you should carefully evaluate the response of each invocation, and retry in case of failures.

Compute: VMs can die - monitor and restart them

Network: Network calls can fail - retry should be successful

Storage: Storage connection can hiccup - retry should be successful

Build redundancy into your application tiers

Replicate VMs containing stateless services, such as the Web application tier or Web service API tier, and put them behind load balancers. You must implement your own HAProxy-type load balancer in your application VMs.

Boot the replicated VMs into different nova availability zones.

If your VM stores state information on its local disk (Ephemeral Storage), and you cannot afford to lose it, then boot the VM off a cinder volume.

Take periodic snapshots of the VM, which backs it up to swift through glance.

Your data on ephemeral storage may become corrupted (but not your backup data in swift and not your data on cinder volumes).

Take regular snapshots of cinder volumes and also back up cinder volumes or your data exports into swift.

Instead of rolling your own highly available stateful services, use readily available SUSE OpenStack Cloud platform services such as designate, the DNS service.
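The guideline to assume intermittent failures and plan for retries can be sketched as a small helper. This is a minimal sketch under stated assumptions: `call_with_retries` and `flaky_api_call` are hypothetical names, the exponential backoff schedule is one possible choice rather than something this guide prescribes, and `ConnectionError` stands in for whatever transient error your client library raises.

```python
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")


def call_with_retries(operation: Callable[[], T], attempts: int = 3,
                      base_delay: float = 0.5,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Run `operation`, retrying on ConnectionError with exponential backoff."""
    for attempt in range(attempts):
        try:
            return operation()
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last failure
            sleep(base_delay * 2 ** attempt)  # wait 0.5 s, 1 s, 2 s, ...
    raise ValueError("attempts must be at least 1")


calls = {"count": 0}


def flaky_api_call() -> str:
    """Illustrative operation that fails twice before succeeding."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary failure")
    return "ok"


delays: List[float] = []
# Record the delays instead of sleeping so the example runs instantly.
outcome = call_with_retries(flaky_api_call, sleep=delays.append)
print(outcome, calls["count"], delays)
```

Passing the `sleep` function as a parameter keeps the helper easy to test; in an application you would leave the default so that real pauses separate the attempts.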

4.12 What is not Highly Available?

- Cloud Lifecycle Manager

The Cloud Lifecycle Manager in SUSE OpenStack Cloud is not highly available.

- Control Plane

High availability (HA) is supported for the Network Service FWaaS. HA is not supported for VPNaaS.

- cinder Volume and Backup Services

cinder Volume and Backup Services are not highly available and are started on only one controller node at a time. More information on cinder Volume and Backup Services can be found in Section 8.1.3, “Managing cinder Volume and Backup Services”.

- keystone Cron Jobs

The keystone cron job is a singleton service, which can run on only a single node at a time. If that node fails, the job must be set up manually on another node. More information on enabling the cron job for keystone on the other nodes can be found in Section 5.12.4, “System cron jobs need setup”.