27 Installing ESX Computes and OVSvApp #

This chapter describes the installation requirements and steps for ESX Computes (nova-proxy) and OVSvApp.

27.1 Prepare for Cloud Installation #

Review Chapter 14, Pre-Installation Checklist, for recommended pre-installation tasks.

Prepare the Cloud Lifecycle Manager node. The Cloud Lifecycle Manager must be accessible either directly or via ssh, and have SUSE Linux Enterprise Server 12 SP4 installed. All nodes must be accessible to the Cloud Lifecycle Manager. If the nodes do not have direct access to online Cloud subscription channels, the Cloud Lifecycle Manager node will need to host the Cloud repositories.

If you followed the installation instructions for the Cloud Lifecycle Manager server (see Chapter 15, Installing the Cloud Lifecycle Manager server), SUSE OpenStack Cloud software should already be installed. Use YaST to double-check whether SUSE Linux Enterprise and SUSE OpenStack Cloud are properly registered at the SUSE Customer Center.

If you have not yet installed SUSE OpenStack Cloud, install it as an add-on product with YaST and follow the on-screen instructions. Make sure to register SUSE OpenStack Cloud during the installation process and to install the software pattern patterns-cloud-ardana:

tux > sudo zypper -n in patterns-cloud-ardana

Ensure the SUSE OpenStack Cloud media repositories and updates repositories are made available to all nodes in your deployment. You can do this in either of two ways:

- Configure the Cloud Lifecycle Manager server as an SMT mirror, as described in Chapter 16, Installing and Setting Up an SMT Server on the Cloud Lifecycle Manager server (Optional).
- Sync or mount the Cloud and updates repositories to the Cloud Lifecycle Manager server, as described in Chapter 17, Software Repository Setup.

Configure passwordless sudo for the user created when setting up the node (as described in Section 15.4, “Creating a User”). Note that this is not the user ardana that will be used later in this procedure. In the following we assume you named the user cloud. Run the command visudo as user root and add the following line to the end of the file:

CLOUD ALL = (root) NOPASSWD:ALL

Make sure to replace CLOUD with your user name choice.

Set the password for the user ardana:

tux > sudo passwd ardana

Become the user ardana:

tux > su - ardana

Place a copy of the SUSE Linux Enterprise Server 12 SP4 .iso in the ardana home directory, /var/lib/ardana, and rename it to sles12sp4.iso.

Install the templates, examples, and working model directories:

ardana > /usr/bin/ardana-init

27.2 Setting Up the Cloud Lifecycle Manager #

27.2.1 Installing the Cloud Lifecycle Manager #

Setting ARDANA_INIT_AUTO=1 is optional. It avoids stopping for authentication at any step. You can also run ardana-init to launch the Cloud Lifecycle Manager. You will be prompted to enter an optional SSH passphrase, which protects the key Ansible uses when connecting to its client nodes. If you do not want to use a passphrase, press Enter at the prompt.

If you have protected the SSH key with a passphrase, you can avoid having to enter the passphrase on every attempt by Ansible to connect to its client nodes with the following commands:

ardana > eval $(ssh-agent)
ardana > ssh-add ~/.ssh/id_rsa

The Cloud Lifecycle Manager will contain the installation scripts and configuration files to deploy your cloud. You can set up the Cloud Lifecycle Manager on a dedicated node or on your first controller node. The default choice is to use the first controller node as the Cloud Lifecycle Manager.

Download the product from:

Boot your Cloud Lifecycle Manager from the SLES ISO contained in the download.

Enter install (all lower-case, exactly as spelled out here) to start installation.

Select the language. Note that only the English language selection is currently supported.

Select the location.

Select the keyboard layout.

Select the primary network interface, if prompted:

Assign IP address, subnet mask, and default gateway

Create new account:

Enter a username.

Enter a password.

Enter time zone.

Once the initial installation is finished, complete the Cloud Lifecycle Manager setup with these steps:

Ensure your Cloud Lifecycle Manager has a valid DNS nameserver specified in /etc/resolv.conf.

Set the environment variable LC_ALL:

export LC_ALL=C

Note: This can be added to ~/.bashrc or /etc/bash.bashrc.

The node should now have a working SLES setup.
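As an optional check on the nameserver requirement above, the following minimal Python sketch extracts the nameserver entries from the text of /etc/resolv.conf. The function name `nameservers` is hypothetical and is not part of any SUSE tool. It only looks for lines of the form `nameserver ADDRESS` and does not test whether the server actually answers queries.

```python
from typing import List


def nameservers(resolv_conf: str) -> List[str]:
    """Return the nameserver addresses found in the text of /etc/resolv.conf."""
    servers = []
    for raw in resolv_conf.splitlines():
        # Drop '#' and ';' comments before looking at the keyword.
        line = raw.split("#", 1)[0].split(";", 1)[0].strip()
        fields = line.split()
        if len(fields) >= 2 and fields[0] == "nameserver":
            servers.append(fields[1])
    return servers
```

For example, if `nameservers(Path("/etc/resolv.conf").read_text())` (with `from pathlib import Path`) returns an empty list, the requirement is not yet met.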

27.3 Overview of ESXi and OVSvApp #

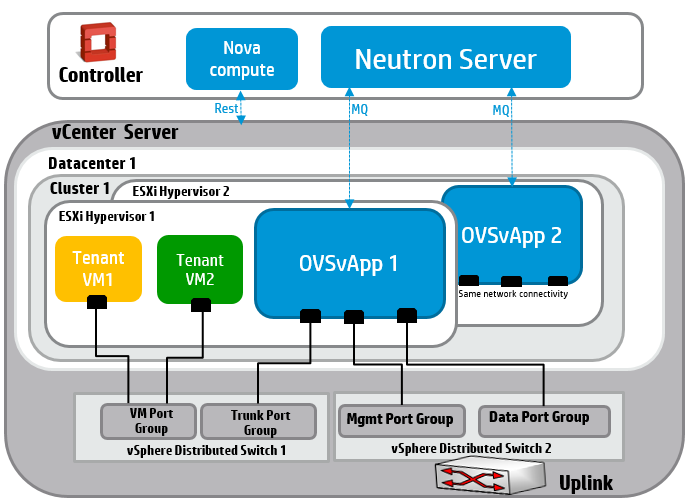

ESXi is a hypervisor developed by VMware for deploying and serving virtual computers. OVSvApp is a service VM that allows for leveraging advanced networking capabilities that OpenStack neutron provides. As a result, OpenStack features can be added quickly with minimum effort where ESXi is used. OVSvApp allows for hosting VMs on ESXi hypervisors together with the flexibility of creating port groups dynamically on Distributed Virtual Switches (DVS). Network traffic can then be steered through the OVSvApp VM which provides VLAN and VXLAN underlying infrastructure for VM communication and security features based on OpenStack. More information is available at the OpenStack wiki.

The diagram below illustrates the OVSvApp architecture.

27.4 VM Appliances Used in OVSvApp Implementation #

The default configuration deployed with the Cloud Lifecycle Manager for VMware ESX hosts uses service appliances that run as VMs on the VMware hypervisor. There is one OVSvApp VM per VMware ESX host and one nova Compute Proxy per VMware cluster or VMware vCenter Server. Instructions for creating a template for the nova Compute Proxy or the OVSvApp VM are in Section 27.9, “Create a SUSE-based Virtual Appliance Template in vCenter”.

27.4.1 OVSvApp VM #

The OVSvApp implementation consists of:

a service VM called OVSvApp VM hosted on each ESXi hypervisor within a cluster, and

two vSphere Distributed vSwitches (DVS).

OVSvApp VMs run SUSE Linux Enterprise and have Open vSwitch installed with an agent called OVSvApp agent. The OVSvApp VM routes network traffic to the various VMware tenants and cooperates with the OpenStack deployment to configure the appropriate port and network settings for VMware tenants.

27.4.2 nova Compute Proxy VM #

The nova compute proxy is the nova-compute service for VMware ESX. Only one instance of this service is required for each ESX cluster that is deployed and is communicating with a single VMware vCenter server. (This differs from KVM, where the nova-compute service must run on every KVM host.) The single instance of the nova-compute service can run in the OpenStack controller node or any other service node in your cloud. The main component of the nova-compute VM is the OVSvApp nova VCDriver, which talks to the VMware vCenter server to perform VM operations such as VM creation and deletion.

27.5 Prerequisites for Installing ESXi and Managing with vCenter #

ESX/vCenter integration is not fully automatic. vCenter administrators are responsible for taking steps to ensure secure operation.

The VMware administrator is responsible for administration of the vCenter servers and the ESX nodes using the VMware administration tools. These responsibilities include:

Installing and configuring vCenter server

Installing and configuring ESX server and ESX cluster

Installing and configuring shared datastores

Establishing network connectivity between the ESX network and the Cloud Lifecycle Manager OpenStack management network

The VMware administration staff is responsible for the review of vCenter logs. These logs are not automatically included in Cloud Lifecycle Manager OpenStack centralized logging.


Logging levels for vCenter should be set appropriately to prevent logging of the password for the Cloud Lifecycle Manager OpenStack message queue.

The vCenter cluster and ESX Compute nodes must be appropriately backed up.

Backup procedures for vCenter should ensure that the file containing the Cloud Lifecycle Manager OpenStack configuration as part of nova and cinder volume services is backed up and the backups are protected appropriately.

Since the file containing the Cloud Lifecycle Manager OpenStack message queue password could appear in the swap area of a vCenter server, appropriate controls should be applied to the vCenter cluster to prevent discovery of the password via snooping of the swap area or memory dumps.

It is recommended to have a common shared storage for all the ESXi hosts in a particular cluster.

Ensure that you have enabled HA (High Availability) and DRS (Distributed Resource Scheduler) in the cluster configuration before running the installation. DRS and HA are then disabled for the OVSvApp VMs only, so that an OVSvApp VM does not move to a different host. If you do not enable DRS and HA before installation, you will not be able to disable them for OVSvApp alone. As a result, DRS or HA could migrate an OVSvApp VM to a different host, which would create a network loop.

No two clusters should have the same name across datacenters in a given vCenter.
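You can check the cluster-naming rule above before installation. This sketch assumes you have already collected a hypothetical inventory: a dict that maps each datacenter name to the cluster names it contains (for example, copied from the vSphere client). Gathering that inventory is not shown, and the function name is illustrative only.

```python
from collections import defaultdict
from typing import Dict, List


def duplicate_cluster_names(inventory: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Return cluster names used more than once, mapped to the datacenters using them."""
    seen: Dict[str, List[str]] = defaultdict(list)
    for datacenter, clusters in inventory.items():
        for cluster in clusters:
            seen[cluster].append(datacenter)
    # Keep only names that occur in more than one place within this vCenter.
    return {name: dcs for name, dcs in seen.items() if len(dcs) > 1}
```

An empty result means no cluster name is reused within the vCenter described by the inventory.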

27.6 ESXi/vCenter System Requirements #

For information about recommended hardware minimums, consult Section 3.2, “Recommended Hardware Minimums for an Entry-scale ESX KVM Model”.

27.7 Creating an ESX Cluster #

Steps to create an ESX Cluster:

Download the ESXi Hypervisor and vCenter Appliance from the VMware website.

Install the ESXi Hypervisor.

Configure the Management Interface.

Enable the CLI and Shell access.

Set the password and login credentials.

Extract the vCenter Appliance files.

The vCenter Appliance offers two ways to install the vCenter. The directory vcsa-ui-installer contains the graphical installer, and the vcsa-cli-installer directory contains the command line installer. The remaining steps demonstrate using the vcsa-ui-installer installer.

In the vcsa-ui-installer, start installing the vCenter Appliance in the ESXi Hypervisor.

Note the MANAGEMENT IP, USER ID, and PASSWORD of the ESXi Hypervisor.

Assign an IP ADDRESS, USER ID, and PASSWORD to the vCenter server.

Complete the installation.

When the installation is finished, point your Web browser to the IP ADDRESS of the vCenter. Connect to the vCenter by clicking the link in the browser.

Enter the information for the vCenter you just created: IP ADDRESS, USER ID, and PASSWORD.

When connected, configure the following:

Datacenter

Go to Home > Inventory > Hosts and Clusters.

Select File > New > Datacenter.

Rename the datacenter

Cluster

Right-click a datacenter or directory in the vSphere Client and select .

Enter a name for the cluster.

Choose cluster features.

Add a Host to Cluster

In the vSphere Web Client, navigate to a datacenter, cluster, or directory within a datacenter.

Right-click the datacenter, cluster, or directory and select .

Type the IP address or the name of the host and click .

Enter the administrator credentials and click .

Review the host summary and click .

Assign a license key to the host.

27.8 Configuring the Required Distributed vSwitches and Port Groups #

The required Distributed vSwitches (DVS) and port groups can be created by using the vCenter graphical user interface (GUI) or by using the command line tool provided by python-networking-vsphere. The vCenter GUI is recommended.

OVSvApp virtual machines (VMs) give ESX installations the ability to leverage some of the advanced networking capabilities and other benefits OpenStack provides. In particular, OVSvApp allows for hosting VMs on ESX/ESXi hypervisors together with the flexibility of creating port groups dynamically on Distributed Virtual Switches.

A port group is a management object for aggregation of multiple ports (on a virtual switch) under a common configuration. A VMware port group is used to group together a list of ports in a virtual switch (DVS in this section) so that they can be configured all at once. The member ports of a port group inherit their configuration from the port group, allowing for configuration of a port by simply dropping it into a predefined port group.
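To make the inheritance idea concrete, here is a toy Python model. It is not VMware's API. A port group holds one shared policy, and each member port reads its settings from the group. The `PortGroup` and `Port` classes and the policy keys are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Any, Dict, Optional


@dataclass
class PortGroup:
    """Hypothetical model: a named, shared policy for all member ports."""
    name: str
    policy: Dict[str, Any]


@dataclass
class Port:
    """A switch port that carries no settings of its own in this sketch."""
    port_id: int
    group: Optional[PortGroup] = None

    def effective_policy(self) -> Dict[str, Any]:
        # Settings are read from the group at lookup time, so changing the
        # group's policy reconfigures every member port at once.
        return dict(self.group.policy) if self.group is not None else {}
```

A change to `policy` on a `PortGroup` is immediately visible through every member port's `effective_policy()`. This mirrors why dropping a port into a predefined port group is enough to configure it.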

The following sections cover configuring OVSvApp switches on ESX. More information about OVSvApp is available at https://wiki.openstack.org/wiki/Neutron/Networking-vSphere.

The diagram below illustrates a typical configuration that uses OVSvApp and Distributed vSwitches.

Detailed instructions are shown in the following sections for four example installations and two command line procedures.

27.8.1 Creating ESXi TRUNK DVS and Required Portgroup #

The process of creating an ESXi Trunk Distributed vSwitch (DVS) consists of three steps: create a switch, add host and physical adapters, and add a port group. Use the following detailed instructions to create a trunk DVS and a required portgroup. These instructions use a graphical user interface (GUI). The GUI menu options may vary slightly depending on the specific version of vSphere installed. Command line interface (CLI) instructions are below the GUI instructions.

27.8.1.1 Creating ESXi Trunk DVS with vSphere Web Client #

Create the switch.

Using the vSphere Web Client, connect to the vCenter server.

Under , right-click on the appropriate datacenter. Select > .

Name the switch TRUNK. Click .

Select version 6.0.0 or larger. Click .

Under , lower the number of uplink ports to the lowest possible number (0 or 1). Uncheck . Click .

Under , verify the settings are correct and click .

Add host and physical adapters.

Under find the DVS named TRUNK you just created. Right-click on it and select .

Under , select . Click .

Click .

Select the CURRENT ESXI HOST and select . Click .

Under , select and UNCHECK all other boxes. Click .

Under , check that the Maximum Number of Ports reads (auto). There is nothing else to do. Click .

Under , verify that one and only one host is being added and click .

Add port group.

Right-click on the TRUNK DVS that was just created (or modified) and select > .

Name the port group TRUNK-PG. Click .

Under select:

port binding > Static binding
port allocation > Elastic
vlan type > VLAN trunking with range of 1–4094.

Check Customized default policies configuration. Click .

Under use the following values:

| Setting | Value |
|---|---|
| promiscuous mode | accept |
| MAC address changes | reject |
| Forged transmits | accept |

Set to true (port count growing).

Skip and click .

Skip and click .

Skip and click .

Under there is nothing to be done. Click .

Under add a description if desired. Click .

Under verify everything is as expected and click .

27.8.2 Creating ESXi MGMT DVS and Required Portgroup #

The process of creating an ESXi MGMT Distributed vSwitch (DVS) consists of three steps: create a switch, add host and physical adapters, and add a port group. Use the following detailed instructions to create a MGMT DVS and a required port group.

Create the switch.

Using the vSphere Web Client, connect to the vCenter server.

Under , right-click on the appropriate datacenter, and select Distributed Switch > New Distributed Switch.

Name the switch MGMT. Click .

Select version 6.0.0 or higher. Click .

Under , select the appropriate number of uplinks. The MGMT DVS is what connects the ESXi host to the OpenStack management network. Uncheck Create a default port group. Click .

Under , verify the settings are correct. Click .

Add host and physical adapters to Distributed Virtual Switch.

Under Networking, find the MGMT DVS you just created. Right-click on it and select .

Under , select . Click .

Click .

Select the current ESXi host and select . Click .

Under , select and UNCHECK all other boxes. Click .

Under , click on the interface you are using to connect the ESXi to the OpenStack management network. The name is of the form vmnic# (for example, vmnic0, vmnic1, etc.). When the interface is highlighted, select , then select the uplink name to assign or auto assign. Repeat the process for each uplink physical NIC you will be using to connect to the OpenStack data network. Click .

Verify that you understand and accept the impact shown by . Click .

Verify that everything is correct and click on .

Add MGMT port group to switch.

Right-click on the MGMT DVS and select > .

Name the port group MGMT-PG. Click .

Under , select:

port binding > Static binding
port allocation > Elastic
vlan type > None

Click .

Under , verify that everything is as expected and click .

Add GUEST port group to the switch.

Right-click on the DVS (MGMT) that was just created (or modified). Select > .

Name the port group GUEST-PG. Click .

Under , select:

port binding > Static binding
port allocation > Elastic
vlan type > VLAN trunking

The VLAN range corresponds to the VLAN IDs used by the OpenStack underlay. This is the same VLAN range as configured in the neutron.conf configuration file for the neutron server.

Select . Click .

Under , use the following settings:

| Setting | Value |
|---|---|
| promiscuous mode | accept |
| MAC address changes | reject |
| Forged transmits | accept |

Skip and click .

Under , make changes appropriate for your network and deployment.

Skip and click .

Skip and click .

Under , add a description if desired. Click .

Under , verify everything is as expected. Click .
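The GUEST-PG VLAN range must match the range configured for the neutron server. The following minimal sketch performs that comparison. It assumes the neutron range is written in neutron's `<physical_network>:<vlan_min>:<vlan_max>` form (as used by the `network_vlan_ranges` option) and that you pass a single entry. Comma-separated lists and entries without a range are not handled, and the function names are hypothetical.

```python
from typing import Tuple


def parse_vlan_range(entry: str) -> Tuple[str, int, int]:
    """Parse one '<physical_network>:<vlan_min>:<vlan_max>' entry."""
    physnet, vlan_min, vlan_max = entry.strip().rsplit(":", 2)
    low, high = int(vlan_min), int(vlan_max)
    # 802.1Q VLAN IDs usable for tenant traffic are 1..4094.
    if not 1 <= low <= high <= 4094:
        raise ValueError(f"invalid VLAN range: {entry!r}")
    return physnet, low, high


def guest_pg_matches(entry: str, pg_start: int, pg_end: int) -> bool:
    """True if the GUEST-PG trunk range equals the neutron VLAN range."""
    _, low, high = parse_vlan_range(entry)
    return (low, high) == (pg_start, pg_end)
```

For example, `guest_pg_matches("physnet1:100:200", 100, 200)` is true, while a GUEST-PG trunk of 100 to 300 against the same entry would be reported as a mismatch.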

Add ESX-CONF port group.

Right-click on the DVS (MGMT) that was just created (or modified). Select > .

Name the port group

ESX-CONF-PG. Click .Under , select:

port binding>Static bindingport allocation>Elasticvlan type>None

Click .


Under , verify that everything is as expected and click .

27.8.3 Configuring OVSvApp Network Resources Using Ansible-Playbook #

The Ardana Ansible playbook neutron-create-ovsvapp-resources.yml can be used to create Distributed Virtual Switches and Port Groups on a vCenter cluster.

The playbook requires the following inputs:

vcenter_username
vcenter_encrypted_password
vcenter_ip
vcenter_port (default 443)
vc_net_resources_location: the path to a file that contains the definition of the resources to be created. The definition is in JSON format.

The neutron-create-ovsvapp-resources.yml playbook is not set up by default. To execute the playbook from the Cloud Lifecycle Manager, the python-networking-vsphere package must be installed.

Install the python-networking-vsphere package:

tux > sudo zypper install python-networking-vsphere

Run the playbook:

ardana > ansible-playbook neutron-create-ovsvapp-resources.yml \
  -i hosts/verb_hosts -vvv -e 'variable_host=localhost vcenter_username=USERNAME vcenter_encrypted_password=ENCRYPTED_PASSWORD vcenter_ip=IP_ADDRESS vcenter_port=443 vc_net_resources_location=LOCATION_TO_RESOURCE_DEFINITION_FILE'
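Because every playbook input is passed through a single `-e` string, it is easy to drop a value or break the quoting. The following Python sketch assembles the same command as an argument list from the inputs listed above. The helper names are hypothetical. Rejecting whitespace in values is a simplifying assumption of this sketch, not a documented restriction of the playbook.

```python
import shlex
from typing import List


def ovsvapp_playbook_argv(vcenter_username: str, vcenter_encrypted_password: str,
                          vcenter_ip: str, vc_net_resources_location: str,
                          vcenter_port: int = 443) -> List[str]:
    """Build the neutron-create-ovsvapp-resources.yml command as an argv list."""
    extra = {
        "variable_host": "localhost",
        "vcenter_username": vcenter_username,
        "vcenter_encrypted_password": vcenter_encrypted_password,
        "vcenter_ip": vcenter_ip,
        "vcenter_port": str(vcenter_port),
        "vc_net_resources_location": vc_net_resources_location,
    }
    for key, value in extra.items():
        # The pairs are whitespace-separated, so keep each value unambiguous.
        if not value or any(ch.isspace() for ch in value):
            raise ValueError(f"{key} must be non-empty and contain no whitespace")
    extra_vars = " ".join(f"{key}={value}" for key, value in extra.items())
    return ["ansible-playbook", "neutron-create-ovsvapp-resources.yml",
            "-i", "hosts/verb_hosts", "-vvv", "-e", extra_vars]


def as_shell_command(argv: List[str]) -> str:
    """Render the argv list as a single, correctly quoted shell command."""
    return shlex.join(argv)
```

`print(as_shell_command(argv))` prints a copy-pasteable command line. `shlex.join` requires Python 3.8 or later.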

The RESOURCE_DEFINITION_FILE is in JSON format and contains the resources to be created.
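Before running the playbook, you may want to sanity-check the definition file. The minimal Python sketch below loads the JSON and reports a few consistency problems:

- missing top-level keys;
- port groups whose `dvs_name` does not name a switch listed under `switches`;
- VLAN ranges on port groups whose `vlan_type` is not `trunk` (the `ovsvapp-manage-dvpg` help describes VLAN ranges as applying to `vlan_type='trunk'`).

It does not know the playbook's full schema. The checks and function names are assumptions, and the key names follow the sample file contents in this section.

```python
import json
from typing import Any, Dict, List


def check_resource_definition(doc: Dict[str, Any]) -> List[str]:
    """Return human-readable problems found in a resource definition dict."""
    problems: List[str] = []
    for key in ("datacenter_name", "host_names", "network_properties"):
        if key not in doc:
            problems.append(f"missing top-level key: {key}")
    props = doc.get("network_properties", {})
    switch_names = {sw.get("name") for sw in props.get("switches", [])}
    for pg in props.get("portGroups", []):
        name = pg.get("name", "<unnamed>")
        dvs = pg.get("dvs_name")
        if dvs not in switch_names:
            problems.append(f"{name}: dvs_name {dvs!r} is not a defined switch")
        has_range = "vlan_range_start" in pg or "vlan_range_end" in pg
        if has_range and pg.get("vlan_type") != "trunk":
            problems.append(f"{name}: VLAN range set but vlan_type is {pg.get('vlan_type')!r}")
        if has_range:
            # The sample uses both strings ("1") and numbers (100) for ranges.
            start = int(pg.get("vlan_range_start", 0))
            end = int(pg.get("vlan_range_end", 0))
            if not 1 <= start <= end <= 4094:
                problems.append(f"{name}: invalid VLAN range {start}-{end}")
    return problems


def check_resource_file(path: str) -> List[str]:
    """Load a definition file from disk and check it."""
    with open(path, encoding="utf-8") as handle:
        return check_resource_definition(json.load(handle))
```

An empty list from `check_resource_file(...)` means only that these particular checks passed, not that the playbook will accept the file.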

Sample file contents:

{
  "datacenter_name": "DC1",
  "host_names": [
    "192.168.100.21",
    "192.168.100.222"
  ],
  "network_properties": {
    "switches": [
      {
        "type": "dvSwitch",
        "name": "TRUNK",
        "pnic_devices": [],
        "max_mtu": "1500",
        "description": "TRUNK DVS for ovsvapp.",
        "max_ports": 30000
      },
      {
        "type": "dvSwitch",
        "name": "MGMT",
        "pnic_devices": [
          "vmnic1"
        ],
        "max_mtu": "1500",
        "description": "MGMT DVS for ovsvapp. Uses 'vmnic0' to connect to OpenStack Management network",
        "max_ports": 30000
      }
    ],
    "portGroups": [
      {
        "name": "TRUNK-PG",
        "vlan_type": "trunk",
        "vlan_range_start": "1",
        "vlan_range_end": "4094",
        "dvs_name": "TRUNK",
        "nic_teaming": null,
        "allow_promiscuous": true,
        "forged_transmits": true,
        "auto_expand": true,
        "description": "TRUNK port group. Configure as trunk for vlans 1-4094. Default nic_teaming selected."
      },
      {
        "name": "MGMT-PG",
        "dvs_name": "MGMT",
        "nic_teaming": null,
        "description": "MGMT port group. Configured as type 'access' (vlan with vlan_id = 0, default). Default nic_teaming. Promiscuous false, forged_transmits default"
      },
      {
        "name": "GUEST-PG",
        "dvs_name": "MGMT",
        "vlan_type": "trunk",
        "vlan_range_start": 100,
        "vlan_range_end": 200,
        "nic_teaming": null,
        "allow_promiscuous": true,
        "forged_transmits": true,
        "auto_expand": true,
        "description": "GUEST port group. Configure for vlans 100 through 200."
      },
      {
        "name": "ESX-CONF-PG",
        "dvs_name": "MGMT",
        "nic_teaming": null,
        "description": "ESX-CONF port group. Configured as type 'access' (vlan with vlan_id = 0, default)."
      }
    ]
  }
}

27.8.4 Configuring OVSvApp Using Python-Networking-vSphere #

The Networking-vSphere project provides scripts that automate part of configuring OVSvApp from the command line. The following are the help entries for two of these scripts:

tux > cd /opt/repos/networking-vsphere
tux > ovsvapp-manage-dvs -h
usage: ovsvapp-manage-dvs [-h] [--tcp tcp_port]
                          [--pnic_devices pnic_devices [pnic_devices ...]]
                          [--max_mtu max_mtu]
                          [--host_names host_names [host_names ...]]
                          [--description description] [--max_ports max_ports]
                          [--cluster_name cluster_name] [--create]
                          [--display_spec] [-v]
                          dvs_name vcenter_user vcenter_password vcenter_ip
                          datacenter_name

positional arguments:
  dvs_name              Name to use for creating the DVS
  vcenter_user          Username to be used for connecting to vCenter
  vcenter_password      Password to be used for connecting to vCenter
  vcenter_ip            IP address to be used for connecting to vCenter
  datacenter_name       Name of data center where the DVS will be created

optional arguments:
  -h, --help            show this help message and exit
  --tcp tcp_port        TCP port to be used for connecting to vCenter
  --pnic_devices pnic_devices [pnic_devices ...]
                        Space separated list of PNIC devices for DVS
  --max_mtu max_mtu     MTU to be used by the DVS
  --host_names host_names [host_names ...]
                        Space separated list of ESX hosts to add to DVS
  --description description
                        DVS description
  --max_ports max_ports
                        Maximum number of ports allowed on DVS
  --cluster_name cluster_name
                        Cluster name to use for DVS
  --create              Create DVS on vCenter
  --display_spec        Print create spec of DVS
  -v                    Verbose output

tux > cd /opt/repos/networking-vsphere
tux > ovsvapp-manage-dvpg -h
usage: ovsvapp-manage-dvpg [-h] [--tcp tcp_port] [--vlan_type vlan_type]
                           [--vlan_id vlan_id]
                           [--vlan_range_start vlan_range_start]
                           [--vlan_range_stop vlan_range_stop]
                           [--description description] [--allow_promiscuous]
                           [--allow_forged_transmits] [--notify_switches]
                           [--network_failover_detection]
                           [--load_balancing {loadbalance_srcid,loadbalance_ip,loadbalance_srcmac,loadbalance_loadbased,failover_explicit}]
                           [--create] [--display_spec]
                           [--active_nics ACTIVE_NICS [ACTIVE_NICS ...]] [-v]
                           dvpg_name vcenter_user vcenter_password vcenter_ip
                           dvs_name

positional arguments:
  dvpg_name             Name to use for creating the Distributed Virtual Port
                        Group (DVPG)
  vcenter_user          Username to be used for connecting to vCenter
  vcenter_password      Password to be used for connecting to vCenter
  vcenter_ip            IP address to be used for connecting to vCenter
  dvs_name              Name of the Distributed Virtual Switch (DVS) to create
                        the DVPG in

optional arguments:
  -h, --help            show this help message and exit
  --tcp tcp_port        TCP port to be used for connecting to vCenter
  --vlan_type vlan_type
                        Vlan type to use for the DVPG
  --vlan_id vlan_id     Vlan id to use for vlan_type='vlan'
  --vlan_range_start vlan_range_start
                        Start of vlan id range for vlan_type='trunk'
  --vlan_range_stop vlan_range_stop
                        End of vlan id range for vlan_type='trunk'
  --description description
                        DVPG description
  --allow_promiscuous   Sets promiscuous mode of DVPG
  --allow_forged_transmits
                        Sets forge transmit mode of DVPG
  --notify_switches     Set nic teaming 'notify switches' to True.
  --network_failover_detection
                        Set nic teaming 'network failover detection' to True
  --load_balancing {loadbalance_srcid,loadbalance_ip,loadbalance_srcmac,loadbalance_loadbased,failover_explicit}
                        Set nic teaming load balancing algorithm.
                        Default=loadbalance_srcid
  --create              Create DVPG on vCenter
  --display_spec        Send DVPG's create spec to OUTPUT
  --active_nics ACTIVE_NICS [ACTIVE_NICS ...]
                        Space separated list of active nics to use in DVPG nic
                        teaming
  -v                    Verbose output
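You can assemble these commands programmatically instead of typing the positional arguments by hand. This Python sketch builds argument lists for `ovsvapp-manage-dvs` and `ovsvapp-manage-dvpg` using only the arguments shown in the help output above. The wrapper function names and the default values (such as the 1 to 4094 trunk range, mirroring TRUNK-PG) are assumptions. Because `vcenter_password` is a positional argument, it is visible in the process list while the command runs.

```python
from typing import List, Optional, Sequence


def manage_dvs_argv(dvs_name: str, vcenter_user: str, vcenter_password: str,
                    vcenter_ip: str, datacenter_name: str,
                    pnic_devices: Sequence[str] = (),
                    host_names: Sequence[str] = (),
                    max_mtu: Optional[int] = None,
                    create: bool = True) -> List[str]:
    """Build an ovsvapp-manage-dvs command line (positionals first)."""
    argv = ["ovsvapp-manage-dvs", dvs_name, vcenter_user, vcenter_password,
            vcenter_ip, datacenter_name]
    if max_mtu is not None:
        argv += ["--max_mtu", str(max_mtu)]
    if create:
        argv.append("--create")
    # Multi-value options go last; each list ends at the next "--" option.
    if pnic_devices:
        argv += ["--pnic_devices", *pnic_devices]
    if host_names:
        argv += ["--host_names", *host_names]
    return argv


def manage_trunk_dvpg_argv(dvpg_name: str, vcenter_user: str, vcenter_password: str,
                           vcenter_ip: str, dvs_name: str, vlan_start: int = 1,
                           vlan_stop: int = 4094, create: bool = True) -> List[str]:
    """Build an ovsvapp-manage-dvpg command line for a trunk port group."""
    argv = ["ovsvapp-manage-dvpg", dvpg_name, vcenter_user, vcenter_password,
            vcenter_ip, dvs_name,
            "--vlan_type", "trunk",
            "--vlan_range_start", str(vlan_start),
            "--vlan_range_stop", str(vlan_stop),
            "--allow_promiscuous", "--allow_forged_transmits"]
    if create:
        argv.append("--create")
    return argv
```

To run one of these, you could use `subprocess.run(argv, check=True, cwd="/opt/repos/networking-vsphere")`. To preview a spec without creating anything, call with `create=False` and append `--display_spec`.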

27.9 Create a SUSE-based Virtual Appliance Template in vCenter #

Download the SLES12-SP4 ISO image (SLE-12-SP4-Server-DVD-x86_64-GM-DVD1.iso) from https://www.suse.com/products/server/download/. You need to sign in or create a SUSE customer service account before downloading.

Create a new Virtual Machine in vCenter Resource Pool.

Configure the Storage selection.

Configure the Guest Operating System.

Create a Disk.

Ready to Complete.

Before booting the VM, use Edit Settings to add memory: typically 16 GB or 32 GB, though large-scale environments may require larger memory allocations.

Before booting the VM, use Edit Settings to add network adapters. Ensure there are four network adapters, one each for TRUNK, MGMT, ESX-CONF, and GUEST.

Attach the ISO image to the DataStore.

Configure the 'disk.enableUUID=TRUE' flag in the General - Advanced Settings.

After attaching the CD/DVD drive with the ISO image and completing the initial VM configuration, power on the VM by clicking the Play button on the VM's summary page.

Click when the VM boots from the console window.

Accept the license agreement and confirm the language and keyboard selection.

Set the System Role to Xen Virtualization Host.

Select the 'Proposed Partitions' in the Suggested Partition screen.

Edit the Partitions to select the 'LVM' Mode and then select the 'ext4' filesystem type.

Increase the size of the root partition from 10GB to 60GB.

Create an additional logical volume to accommodate the LV_CRASH volume (15 GB). Do not mount the volume at this time; it will be used later.

Configure the Admin User/Password and User name.

In Installation Settings, disable the firewall and enable SSH.

The operating system successfully installs and the VM reboots.

Check that the contents of the ISO files are copied to the locations shown below on your Cloud Lifecycle Manager. This may already be completed on the Cloud Lifecycle Manager.

Mount or copy the contents of SLE-12-SP4-Server-DVD-x86_64-GM-DVD1.iso to /opt/ardana_packager/ardana/sles12/zypper/OS/ (create the directory if it is missing).

Log in to the VM with the configured user credentials.

The VM must be set up before a template can be created with it. The IP addresses configured here are temporary and will need to be reconfigured as VMs are created using this template. The temporary IP address should not overlap with the network range for the MGMT network.

The VM should now have four network interfaces. Configure them as follows:

ardana > cd /etc/sysconfig/network
tux > sudo ls

The directory will contain the files ifcfg-br0, ifcfg-br1, ifcfg-br2, ifcfg-br3, ifcfg-eth0, ifcfg-eth1, ifcfg-eth2, and ifcfg-eth3.

If you configured a default route while installing the VM, there will also be a routes file.

Note the IP addresses configured for MGMT.

Configure the temporary IP for the MGMT network. Edit the ifcfg-eth1 file and set IPADDR to the temporary IP address of the MGMT interface:

tux > sudo vi /etc/sysconfig/network/ifcfg-eth1

BOOTPROTO='static'
BROADCAST=''
ETHTOOL_OPTIONS=''
IPADDR='192.168.24.132/24'
MTU=''
NETWORK=''
REMOTE_IPADDR=''
STARTMODE='auto'

Edit the ifcfg-eth0, ifcfg-eth2, and ifcfg-eth3 files.

tux > sudo vi /etc/sysconfig/network/ifcfg-eth0

BOOTPROTO='static'
BROADCAST=''
ETHTOOL_OPTIONS=''
IPADDR=''
MTU=''
NETWORK=''
REMOTE_IPADDR=''
STARTMODE='auto'

tux > sudo vi /etc/sysconfig/network/ifcfg-eth2

BOOTPROTO=''
BROADCAST=''
ETHTOOL_OPTIONS=''
IPADDR=''
MTU=''
NAME='VMXNET3 Ethernet Controller'
NETWORK=''
REMOTE_IPADDR=''
STARTMODE='auto'

tux > sudo vi /etc/sysconfig/network/ifcfg-eth3

BOOTPROTO=''
BROADCAST=''
ETHTOOL_OPTIONS=''
IPADDR=''
MTU=''
NAME='VMXNET3 Ethernet Controller'
NETWORK=''
REMOTE_IPADDR=''
STARTMODE='auto'

If the default route is not configured, add a default route file manually.

Create a file named routes in /etc/sysconfig/network and add your default route to it:

tux > sudo vi /etc/sysconfig/network/routes

default 192.168.24.140 - -

Delete all the bridge configuration files, which are not required: ifcfg-br0, ifcfg-br1, ifcfg-br2, and ifcfg-br3.
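If you build several templates, you can generate the network files above instead of editing them by hand. The following Python sketch renders the ifcfg contents and the routes line shown in this procedure. The function names are hypothetical, and the addresses are the example values from the text. The sketch only returns strings, so you can review them before writing anything to /etc/sysconfig/network.

```python
from typing import Dict, Optional


def ifcfg_text(bootproto: str = "", ipaddr: str = "",
               name: Optional[str] = None) -> str:
    """Render an ifcfg file with the keys, in order, used in the examples above."""
    fields = {"BOOTPROTO": bootproto, "BROADCAST": "", "ETHTOOL_OPTIONS": "",
              "IPADDR": ipaddr, "MTU": ""}
    if name is not None:
        fields["NAME"] = name
    fields.update({"NETWORK": "", "REMOTE_IPADDR": "", "STARTMODE": "auto"})
    return "".join(f"{key}='{value}'\n" for key, value in fields.items())


def template_network_files(mgmt_ip_cidr: str, gateway: str) -> Dict[str, str]:
    """File name -> content for the temporary template network setup."""
    nic = "VMXNET3 Ethernet Controller"
    return {
        "ifcfg-eth0": ifcfg_text("static"),
        "ifcfg-eth1": ifcfg_text("static", mgmt_ip_cidr),  # temporary MGMT IP
        "ifcfg-eth2": ifcfg_text(name=nic),
        "ifcfg-eth3": ifcfg_text(name=nic),
        "routes": f"default {gateway} - -\n",
    }
```

For example, `template_network_files("192.168.24.132/24", "192.168.24.140")` reproduces the files shown above. Writing them to a scratch directory and comparing them with /etc/sysconfig/network is a cautious way to use the output.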

Add the ardana user and home directory if that is not your default user. The username and password should be ardana/ardana.

tux > sudo useradd -m ardana
tux > sudo passwd ardana

Create an ardana user group in the VM if it does not exist.

Check for an existing ardana group:

tux > getent group ardana

Add the ardana group if necessary:

tux > sudo groupadd ardana

Add the ardana user to the ardana group:

tux > sudo gpasswd -a ardana ardana

Allow the ardana user to use sudo without a password. Setting up sudo on SLES is covered in the SUSE documentation at https://documentation.suse.com/sles/15-SP1/single-html/SLES-admin/#sec-sudo-conf. We recommend creating user-specific sudo config files in the /etc/sudoers.d directory. Create an /etc/sudoers.d/ardana config file with the following content to allow sudo commands without a password:

ardana ALL=(ALL) NOPASSWD:ALL

Add the Zypper repositories using the ISO-based repositories created previously. Change the value of DEPLOYER_IP if necessary.

tux > DEPLOYER_IP=192.168.24.140
tux > sudo zypper addrepo --no-gpgcheck --refresh \
  http://$DEPLOYER_IP:79/ardana/sles12/zypper/OS SLES-OS
tux > sudo zypper addrepo --no-gpgcheck --refresh \
  http://$DEPLOYER_IP:79/ardana/sles12/zypper/SDK SLES-SDK

Verify that the repositories have been added:

tux > zypper repos --detail

Set up passwordless SSH access to the temporary IP address that was configured for eth1.

When you have started the installation using the Cloud Lifecycle Manager, or if you are adding a SLES node to an existing cloud, the Cloud Lifecycle Manager public key needs to be copied to the SLES node. You can do this by copying ~/.ssh/authorized_keys from another node in the cloud to the same location on the SLES node. If you are installing a new cloud, this file will be available on the nodes after running the bm-reimage.yml playbook.

Important: Ensure that there is global read access to the file ~/.ssh/authorized_keys.

Test passwordless ssh from the Cloud Lifecycle Manager and check your ability to remotely execute sudo commands:

tux >ssh ardana@IP_OF_SLES_NODE_eth1 "sudo tail -5 /var/log/messages"

Shut down the VM and create a template out of the VM appliance for future use.

The VM Template will be saved in your vCenter Datacenter and you can view it from menu. Note that menu options will vary slightly depending on the version of vSphere that is deployed.

27.10 ESX Network Model Requirements #

For this model the following networks are needed:

MANAGEMENT-NET: An untagged network used for the control plane as well as for the esx-compute proxy and OVSvApp VMware instances. It is tied to the MGMT DVS/PG in vSphere.

EXTERNAL-API-NET: A tagged network for the external/public API. It is the same as in models without ESX, and no additional setup is needed in vSphere for this network.

EXTERNAL-VM-NET: A tagged network used for Floating IP (FIP) assignment to running instances. It is the same as in models without ESX, and no additional setup is needed in vSphere for this network.

GUEST-NET: A tagged network used internally for neutron. It is tied to the GUEST PG in vSphere.

ESX-CONF-NET: A separate configuration network for ESX that must be reachable via the MANAGEMENT-NET. It is tied to the ESX-CONF PG in vSphere.

TRUNK-NET: An untagged network used internally for ESX. It is tied to the TRUNK DVS/PG in vSphere.
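You can also keep the network list above as data, for instance to check that an input model defines every required network. The sketch below assumes hyphenated names (MANAGEMENT-NET, EXTERNAL-API-NET, and so on). The names in your own input model may differ, and the helper functions are hypothetical.

```python
from typing import Dict, Iterable, List, Optional

# Required networks mapped to the vSphere object each one is tied to.
# None means no additional vSphere setup is needed for that network.
ESX_MODEL_NETWORKS: Dict[str, Optional[str]] = {
    "MANAGEMENT-NET": "MGMT DVS/PG",
    "EXTERNAL-API-NET": None,
    "EXTERNAL-VM-NET": None,
    "GUEST-NET": "GUEST PG",
    "ESX-CONF-NET": "ESX-CONF PG",
    "TRUNK-NET": "TRUNK DVS/PG",
}


def missing_networks(defined: Iterable[str]) -> List[str]:
    """Required network names absent from `defined`, in the listed order."""
    have = set(defined)
    return [name for name in ESX_MODEL_NETWORKS if name not in have]


def vsphere_objects_needed() -> List[str]:
    """vSphere switches/port groups that must exist for this model."""
    return [obj for obj in ESX_MODEL_NETWORKS.values() if obj is not None]
```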

27.11 Creating and Configuring Virtual Machines Based on Virtual Appliance Template #

Repeat the following process for creating and configuring VMs from the vApp template for every cluster in the DataCenter. Each cluster should host a nova Proxy VM, and each host in a cluster should have an OVSvApp VM running. The following method uses the vSphere Client Management Tool to deploy saved templates from the vCenter.

Identify the cluster that you want nova Proxy to manage.

Create a VM from the template on a chosen cluster.

The first VM deployed is the nova-compute-proxy VM. This VM can reside on any HOST inside a cluster. There should be only one instance of this VM in a cluster.

The nova-compute-proxy uses only two of the four interfaces configured previously (ESX_CONF and MANAGEMENT).

Note: Do not swap the interfaces. They must be in the specified order (ESX_CONF is eth0, MGMT is eth1).

After the VM has been deployed, log in to it with SSH using the MGMT IP address and the ardana/ardana credentials. Make sure that all root-level commands work with sudo. This is required for the Cloud Lifecycle Manager to configure the appliance for services and networking.

Install another VM from the template and name it OVSvApp-VM1-HOST1. (You can add a suffix with the host name to identify the host it is associated with.)

Note: The VM must have four interfaces configured in the right order. The VM must be accessible through SSH from the Cloud Lifecycle Manager over the Management Network.

/etc/sysconfig/network/ifcfg-eth0 is ESX_CONF.
/etc/sysconfig/network/ifcfg-eth1 is MGMT.
/etc/sysconfig/network/ifcfg-eth2 is TRUNK.
/etc/sysconfig/network/ifcfg-eth3 is GUEST.
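Before continuing, you may want to confirm on each OVSvApp VM that the four interface configuration files listed above are in place. The following Bash function is a minimal sketch, not part of SUSE OpenStack Cloud; the name check_ovsvapp_ifcfg and its optional directory argument are hypothetical. It only checks that ifcfg-eth0 through ifcfg-eth3 exist and prints the network each one is expected to carry. It cannot see vSphere, so it does not prove that each NIC is attached to the right port group.

```shell
# Hypothetical helper (not shipped with SUSE OpenStack Cloud).
# Usage: check_ovsvapp_ifcfg [DIR]   (DIR defaults to /etc/sysconfig/network)
check_ovsvapp_ifcfg() {
  local dir="${1:-/etc/sysconfig/network}"
  # Expected order: eth0=ESX_CONF, eth1=MGMT, eth2=TRUNK, eth3=GUEST
  local -a nets=(ESX_CONF MGMT TRUNK GUEST)
  local i rc=0
  for i in 0 1 2 3; do
    if [ -f "$dir/ifcfg-eth$i" ]; then
      echo "eth$i -> ${nets[$i]}: present"
    else
      echo "eth$i -> ${nets[$i]}: MISSING" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Run it on an OVSvApp VM; a non-zero exit status means at least one file is missing. The nova-compute-proxy VM uses only eth0 and eth1, so this check applies to the OVSvApp VMs.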

If there is more than one HOST in the cluster, deploy another VM from the Template and name it OVSvApp-VM2-HOST2.

If the OVSvApp VMs end up on the same HOST, then manually separate the VMs and follow the instructions below to add rules for High Availability (HA) and Distributed Resource Scheduler (DRS).

Note: HA seeks to minimize system downtime and data loss. See also Chapter 4, High Availability. DRS is a utility that balances computing workloads with available resources in a virtualized environment.

When a VM is installed from a template to a cluster, it is not bound to a particular host if the cluster has more than one hypervisor. The OVSvApp, however, requires exactly one OVSvApp appliance per host, and that appliance must stay bound to the same host. Do not allow DRS or vMotion to migrate the OVSvApp VMs away from their HOST; doing so would cause a major network interruption. To achieve this, configure rules in the cluster HA and DRS settings.

Note: vMotion enables the live migration of running virtual machines from one physical server to another with zero downtime, continuous service availability, and complete transaction integrity.

Configure rules for OVSvApp VMs.

Configure .

must be disabled.

VM should be Power-On.

Configure .

Configure .

Create a DRS Group for the OVSvApp VMs.

Add VMs to the DRS Group.

Add appropriate to the DRS Groups.

All three VMs are up and running. Following the preceding process, there is one nova Compute Proxy VM per cluster, and one OVSvApp VM on each HOST in the cluster (OVSvAppVM1 and OVSvAppVM2 in this example).

Record the configuration attributes of the VMs.

nova Compute Proxy VM:

Cluster Name where this VM is located
Management IP Address
VM Name: the actual name given to the VM to identify it

OVSvAppVM1:

Cluster Name where this VM is located
Management IP Address
esx_hostname that this OVSvApp is bound to
cluster_dvs_mapping: the Distributed vSwitch name created in the datacenter for this particular cluster. Example format:

DATA_CENTER/host/CLUSTERNAME:DVS-NAME

Do not substitute anything for 'host'. It is a constant.

OVSvAppVM2:

Cluster Name where this VM is located
Management IP Address
esx_hostname that this OVSvApp is bound to
cluster_dvs_mapping: the Distributed vSwitch name created in the datacenter for this particular cluster. Example format:

DATA_CENTER/host/CLUSTERNAME:DVS-NAME

Do not substitute anything for 'host'. It is a constant.
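Because the literal host segment is easy to replace by mistake, it can help to build the cluster_dvs_mapping value mechanically. The following Bash function is a minimal sketch; make_cluster_dvs_mapping is a hypothetical name, not part of SUSE OpenStack Cloud.

```shell
# Hypothetical helper (not shipped with SUSE OpenStack Cloud).
# Usage: make_cluster_dvs_mapping DATA_CENTER CLUSTER DVS
make_cluster_dvs_mapping() {
  local datacenter="${1:-}" cluster="${2:-}" dvs="${3:-}"
  if [ -z "$datacenter" ] || [ -z "$cluster" ] || [ -z "$dvs" ]; then
    echo "usage: make_cluster_dvs_mapping DATA_CENTER CLUSTER DVS" >&2
    return 1
  fi
  # 'host' is a literal constant; never replace it with an ESX host name.
  printf '%s/host/%s:%s\n' "$datacenter" "$cluster" "$dvs"
}
```

For example, make_cluster_dvs_mapping PROVO QE TRUNK-DVS-QE prints PROVO/host/QE:TRUNK-DVS-QE, the value used in the example pass_through.yml file later in this chapter.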

27.12 Collect vCenter Credentials and UUID #

Obtain the vCenter UUID from vSphere with the URL shown below:

https://VCENTER-IP/mob/?moid=ServiceInstance&doPath=content.about

Select the field instanceUuid and copy its value.

Record the following values:

UUID
vCenter Password
vCenter Management IP
DataCenter Name
Cluster Name
DVS (Distributed vSwitch) Name
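A mistyped UUID is only detected much later, when the Configuration Processor or the services fail. The following Bash functions are a minimal sketch for building the MOB URL shown above and checking that a recorded value has the canonical 8-4-4-4-12 hexadecimal UUID layout; mob_about_url and is_valid_uuid are hypothetical names. The check confirms the format only, not that the UUID belongs to your vCenter.

```shell
# Hypothetical helpers (not shipped with SUSE OpenStack Cloud).
# Print the vCenter MOB URL that shows the 'about' data, including instanceUuid.
mob_about_url() {
  local vcenter_ip="${1:-}"
  if [ -z "$vcenter_ip" ]; then
    echo "usage: mob_about_url VCENTER-IP" >&2
    return 1
  fi
  printf 'https://%s/mob/?moid=ServiceInstance&doPath=content.about\n' "$vcenter_ip"
}

# Succeed if the argument looks like a canonical 8-4-4-4-12 hex UUID.
is_valid_uuid() {
  local re='^[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}$'
  [[ "${1:-}" =~ $re ]]
}
```

For example, is_valid_uuid a0742a39-860f-4177-9f38-e8db82ad59c6 succeeds for the UUID used in the example pass_through.yml file.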

27.13 Edit Input Models to Add and Configure Virtual Appliances #

The following steps should be used to edit the Ardana input model data to add and configure the Virtual Appliances that were just created. The process assumes that the SUSE OpenStack Cloud is deployed and a valid Cloud Lifecycle Manager is in place.

Edit the following files in ~/openstack/my_cloud/definition/data/: servers.yml, disks_app_vm.yml, and pass_through.yml. Fill in the attribute values recorded in the previous step.

Follow the instructions in pass_through.yml to encrypt your vCenter password using an encryption key.

Export an environment variable for the encryption key:

ardana > export ARDANA_USER_PASSWORD_ENCRYPT_KEY=ENCRYPTION_KEY
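Two small mistakes are common at this point: running ardanaencrypt.py without the key exported, and pasting the encrypted string into pass_through.yml without the double quotes. The following Bash functions are a minimal sketch guarding against both; require_encrypt_key and yaml_password_line are hypothetical names, not part of SUSE OpenStack Cloud.

```shell
# Hypothetical helpers (not shipped with SUSE OpenStack Cloud).
# Fail early if the encryption key has not been exported.
require_encrypt_key() {
  if [ -z "${ARDANA_USER_PASSWORD_ENCRYPT_KEY:-}" ]; then
    echo "ARDANA_USER_PASSWORD_ENCRYPT_KEY is not set; export it first" >&2
    return 1
  fi
}

# Print a pass_through.yml 'password' line with the value in double quotes.
# Quoting is needed because the encrypted string starts with '@', which YAML
# does not allow at the start of an unquoted (plain) scalar.
yaml_password_line() {
  printf 'password: "%s"\n' "${1:-}"
}
```

For example, run require_encrypt_key && ~ardana/openstack/ardana/ansible/ardanaencrypt.py, then pass the returned string to yaml_password_line to get the line to paste.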

Run the ~ardana/openstack/ardana/ansible/ardanaencrypt.py script. It prompts with "unencrypted value?". Enter the unencrypted vCenter password, and the script returns an encrypted string.

Copy and paste the encrypted password string into the pass_through.yml file as the value of the password field, enclosed in double quotes.

Enter the username, ip, and id of the vCenter server in the global section of the pass_through.yml file. Use the values recorded in the previous step.

In the servers section of the pass_through.yml file, add the details about the nova Compute Proxy and OVSvApp VMs that were recorded in the previous step.

# Here the 'id' refers to the name of the node running the
# esx-compute-proxy. This is identical to the 'servers.id' in
# servers.yml.
# NOTE: There should be one esx-compute-proxy node per ESX
# resource pool or cluster.
# cluster_dvs_mapping in the format
# 'Datacenter-name/host/Cluster-Name:Trunk-DVS-Name'
# Here 'host' is a string and should not be changed or
# substituted.
# vcenter_id is same as the 'vcenter-uuid' obtained in the global
# section.
# 'id': is the name of the appliance manually installed
# 'vcenter_cluster': Name of the vcenter target cluster
# esx_hostname: Name of the esx host hosting the ovsvapp
# NOTE: For every esx host in a cluster there should be an ovsvapp
# instance running.
- id: esx-compute1
  data:
    vmware:
      vcenter_cluster: <vmware cluster1 name>
      vcenter_id: <vcenter-uuid>
- id: ovsvapp1
  data:
    vmware:
      vcenter_cluster: <vmware cluster1 name>
      cluster_dvs_mapping: <cluster dvs mapping>
      esx_hostname: <esx hostname hosting the ovsvapp>
      vcenter_id: <vcenter-uuid>
- id: ovsvapp2
  data:
    vmware:
      vcenter_cluster: <vmware cluster1 name>
      cluster_dvs_mapping: <cluster dvs mapping>
      esx_hostname: <esx hostname hosting the ovsvapp>
      vcenter_id: <vcenter-uuid>

The VM id strings must exactly match the id values in the servers.yml file.

Edit the servers.yml file, adding the nova Proxy VM and OVSvApp information recorded in the previous step.

# Below entries shall be added by the user
# for entry-scale-kvm-esx after following
# the doc instructions in creating the
# esx-compute-proxy VM Appliance and the
# esx-ovsvapp VM Appliance.
# Added just for the reference
# NOTE: There should be one esx-compute per
# Cluster and one ovsvapp per Hypervisor in
# the Cluster.
# id - is the name of the virtual appliance
# ip-addr - is the Mgmt ip address of the appliance
# The values shown below are examples and have to be
# substituted based on your setup.
# Nova Compute proxy node
- id: esx-compute1
  server-group: RACK1
  ip-addr: 192.168.24.129
  role: ESX-COMPUTE-ROLE
# OVSVAPP node
- id: ovsvapp1
  server-group: RACK1
  ip-addr: 192.168.24.130
  role: OVSVAPP-ROLE
- id: ovsvapp2
  server-group: RACK1
  ip-addr: 192.168.24.131
  role: OVSVAPP-ROLE

Examples of pass_through.yml and servers.yml files:

pass_through.yml

product:
  version: 2
pass-through:
  global:
    vmware:
      - username: administrator@vsphere.local
        ip: 10.84.79.3
        port: '443'
        cert_check: false
        password: "@hos@U2FsdGVkX19aqGOUYGgcAIMQSN2lZ1X+gyNoytAGCTI="
        id: a0742a39-860f-4177-9f38-e8db82ad59c6
  servers:
    - data:
        vmware:
          vcenter_cluster: QE
          vcenter_id: a0742a39-860f-4177-9f38-e8db82ad59c6
      id: lvm-nova-compute1-esx01-qe
    - data:
        vmware:
          vcenter_cluster: QE
          cluster_dvs_mapping: 'PROVO/host/QE:TRUNK-DVS-QE'
          esx_hostname: esx01.qe.provo
          vcenter_id: a0742a39-860f-4177-9f38-e8db82ad59c6
      id: lvm-ovsvapp1-esx01-qe
    - data:
        vmware:
          vcenter_cluster: QE
          cluster_dvs_mapping: 'PROVO/host/QE:TRUNK-DVS-QE'
          esx_hostname: esx02.qe.provo
          vcenter_id: a0742a39-860f-4177-9f38-e8db82ad59c6
      id: lvm-ovsvapp2-esx02-qe

servers.yml

product:
  version: 2
servers:
  - id: deployer
    ilo-ip: 192.168.10.129
    ilo-password: 8hAcPMne
    ilo-user: CLM004
    ip-addr: 192.168.24.125
    is-deployer: true
    mac-addr: '8c:dc:d4:b4:c5:4c'
    nic-mapping: MY-2PORT-SERVER
    role: DEPLOYER-ROLE
    server-group: RACK1
  - id: controller3
    ilo-ip: 192.168.11.52
    ilo-password: 8hAcPMne
    ilo-user: HLM004
    ip-addr: 192.168.24.128
    mac-addr: '8c:dc:d4:b5:ed:b8'
    nic-mapping: MY-2PORT-SERVER
    role: CONTROLLER-ROLE
    server-group: RACK1
  - id: controller2
    ilo-ip: 192.168.10.204
    ilo-password: 8hAcPMne
    ilo-user: HLM004
    ip-addr: 192.168.24.127
    mac-addr: '8c:dc:d4:b5:ca:c8'
    nic-mapping: MY-2PORT-SERVER
    role: CONTROLLER-ROLE
    server-group: RACK2
  - id: controller1
    ilo-ip: 192.168.11.57
    ilo-password: 8hAcPMne
    ilo-user: CLM004
    ip-addr: 192.168.24.126
    mac-addr: '5c:b9:01:89:c6:d8'
    nic-mapping: MY-2PORT-SERVER
    role: CONTROLLER-ROLE
    server-group: RACK3
  # Nova compute proxy for QE cluster added manually
  - id: lvm-nova-compute1-esx01-qe
    server-group: RACK1
    ip-addr: 192.168.24.129
    role: ESX-COMPUTE-ROLE
  # OVSvApp VM for QE cluster added manually
  # First ovsvapp vm in esx01 node
  - id: lvm-ovsvapp1-esx01-qe
    server-group: RACK1
    ip-addr: 192.168.24.132
    role: OVSVAPP-ROLE
  # Second ovsvapp vm in esx02 node
  - id: lvm-ovsvapp2-esx02-qe
    server-group: RACK1
    ip-addr: 192.168.24.131
    role: OVSVAPP-ROLE
baremetal:
  subnet: 192.168.24.0
  netmask: 255.255.255.0

Edit the disks_app_vm.yml file based on your LVM configuration. The Volume Group, Physical Volume, and Logical Volumes attributes must match the LVM configuration of the VM.

When you partitioned LVM during installation, a Volume Group, a Physical Volume, and Logical Volumes with their partition sizes were created. This information can be retrieved from any of the VMs (nova Proxy VM or OVSvApp VM):

tux > sudo pvdisplay
# --- Physical volume ---
# PV Name               /dev/sda1
# VG Name               system
# PV Size               80.00 GiB / not usable 3.00 MiB
# Allocatable           yes
# PE Size               4.00 MiB
# Total PE              20479
# Free PE               511
# Allocated PE          19968
# PV UUID               7Xn7sm-FdB4-REev-63Z3-uNdM-TF3H-S3ZrIZ

The Physical Volume Name is /dev/sda1, and the Volume Group Name is system.

To find the Logical Volumes:

tux > sudo fdisk -l
# Disk /dev/sda: 80 GiB, 85899345920 bytes, 167772160 sectors
# Units: sectors of 1 * 512 = 512 bytes
# Sector size (logical/physical): 512 bytes / 512 bytes
# I/O size (minimum/optimal): 512 bytes / 512 bytes
# Disklabel type: dos
# Disk identifier: 0x0002dc70
# Device     Boot Start       End   Sectors Size Id Type
# /dev/sda1  *     2048 167772159 167770112  80G 8e Linux LVM
# Disk /dev/mapper/system-root: 60 GiB, 64424509440 bytes,
# 125829120 sectors
# Units: sectors of 1 * 512 = 512 bytes
# Sector size (logical/physical): 512 bytes / 512 bytes
# I/O size (minimum/optimal): 512 bytes / 512 bytes
# Disk /dev/mapper/system-swap: 2 GiB, 2147483648 bytes, 4194304 sectors
# Units: sectors of 1 * 512 = 512 bytes
# Sector size (logical/physical): 512 bytes / 512 bytes
# I/O size (minimum/optimal): 512 bytes / 512 bytes
# Disk /dev/mapper/system-LV_CRASH: 16 GiB, 17179869184 bytes,
# 33554432 sectors
# Units: sectors of 1 * 512 = 512 bytes
# Sector size (logical/physical): 512 bytes / 512 bytes
# I/O size (minimum/optimal): 512 bytes / 512 bytes
# NOTE: Even though we have configured the SWAP partition, it is
# not required to be configured in here. Just configure the root
# and the LV_CRASH partition

The line with /dev/mapper/system-root: 60 GiB, 64424509440 bytes indicates that the first logical partition is root.

The line with /dev/mapper/system-LV_CRASH: 16 GiB, 17179869184 bytes indicates that the second logical partition is LV_CRASH.

The line with /dev/mapper/system-swap: 2 GiB, 2147483648 bytes, 4194304 sectors indicates that the third logical partition is swap.
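The values above can be read off mechanically and the logical volume sizes turned into the percentages that disks_app_vm.yml expects. The following Bash functions are a minimal sketch; pv_field and lv_percent are hypothetical names, not part of SUSE OpenStack Cloud, and lv_percent assumes whole-number sizes in the same unit (GiB here) and rounds down.

```shell
# Hypothetical helpers (not shipped with SUSE OpenStack Cloud).
# Read 'sudo pvdisplay' output on stdin and print one field's value,
# for example: sudo pvdisplay | pv_field 'VG Name'
pv_field() {
  awk -v f="${1:-}" '
    {
      line = $0
      sub(/^[# \t]+/, "", line)          # drop indentation and "# " prefixes
      if (f != "" && index(line, f) == 1) {
        sub("^" f "[ \t]+", "", line)    # drop the field name and padding
        print line
        exit
      }
    }
  '
}

# Print a logical volume size as a whole-number percentage of the
# physical volume size. Both sizes are integers in the same unit.
lv_percent() {
  local lv_size="${1:-0}" pv_size="${2:-0}"
  if [ "$pv_size" -le 0 ]; then
    echo "usage: lv_percent LV_SIZE PV_SIZE (integers, same unit)" >&2
    return 1
  fi
  # Integer arithmetic: the result is rounded down.
  echo $(( lv_size * 100 / pv_size ))
}
```

With the 80 GiB physical volume above, lv_percent 60 80 prints 75 (root) and lv_percent 16 80 prints 20 (LV_CRASH), the sizes used in the disks_app_vm.yml example.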

Edit the disks_app_vm.yml file. It is not necessary to configure the swap partition.

volume-groups:
  - name: system                 (Volume Group Name)
    physical-volumes:
      - /dev/sda1                (Physical Volume Name)
    logical-volumes:
      - name: root               (Logical Volume 1)
        size: 75%                (Size in percentage)
        fstype: ext4             (filesystem type)
        mount: /                 (Mount point)
      - name: LV_CRASH           (Logical Volume 2)
        size: 20%                (Size in percentage)
        mount: /var/crash        (Mount point)
        fstype: ext4             (filesystem type)
        mkfs-opts: -O large_file

An example disks_app_vm.yml file:

disks_app_vm.yml

---
product:
  version: 2

disk-models:
  - name: APP-VM-DISKS
    # Disk model to be used for application vms such as nova-proxy and ovsvapp
    # /dev/sda1 is used as a volume group for /, /var/log and /var/crash
    # Additional disks can be added to either volume group
    #
    # NOTE: This is just an example file and has to be filled in by the user
    # based on the lvm partition map for their virtual appliance
    # While installing the operating system opt for the LVM partition and
    # create three partitions as shown below
    # Here is an example partition map
    # In this example we have three logical partitions
    # root partition (75%)
    # swap (5%) and
    # LV_CRASH (20%)
    # Run this command 'sudo pvdisplay' on the virtual appliance to see the
    # output as shown below
    #
    # --- Physical volume ---
    # PV Name               /dev/sda1
    # VG Name               system
    # PV Size               80.00 GiB / not usable 3.00 MiB
    # Allocatable           yes
    # PE Size               4.00 MiB
    # Total PE              20479
    # Free PE               511
    # Allocated PE          19968
    # PV UUID               7Xn7sm-FdB4-REev-63Z3-uNdM-TF3H-S3ZrIZ
    #
    # Next run the following command on the virtual appliance
    #
    # sudo fdisk -l
    # The output will be as shown below
    #
    # Disk /dev/sda: 80 GiB, 85899345920 bytes, 167772160 sectors
    # Units: sectors of 1 * 512 = 512 bytes
    # Sector size (logical/physical): 512 bytes / 512 bytes
    # I/O size (minimum/optimal): 512 bytes / 512 bytes
    # Disklabel type: dos
    # Disk identifier: 0x0002dc70
    # Device     Boot Start       End   Sectors Size Id Type
    # /dev/sda1  *     2048 167772159 167770112  80G 8e Linux LVM
    # Disk /dev/mapper/system-root: 60 GiB, 64424509440 bytes,
    # 125829120 sectors
    # Units: sectors of 1 * 512 = 512 bytes
    # Sector size (logical/physical): 512 bytes / 512 bytes
    # I/O size (minimum/optimal): 512 bytes / 512 bytes
    # Disk /dev/mapper/system-swap: 2 GiB, 2147483648 bytes, 4194304 sectors
    # Units: sectors of 1 * 512 = 512 bytes
    # Sector size (logical/physical): 512 bytes / 512 bytes
    # I/O size (minimum/optimal): 512 bytes / 512 bytes
    # Disk /dev/mapper/system-LV_CRASH: 16 GiB, 17179869184 bytes,
    # 33554432 sectors
    # Units: sectors of 1 * 512 = 512 bytes
    # Sector size (logical/physical): 512 bytes / 512 bytes
    # I/O size (minimum/optimal): 512 bytes / 512 bytes
    # NOTE: Even though we have configured the SWAP partition, it is
    # not required to be configured in here. Just configure the root
    # and the LV_CRASH partition
    volume-groups:
      - name: system
        physical-volumes:
          - /dev/sda1
        logical-volumes:
          - name: root
            size: 75%
            fstype: ext4
            mount: /
          - name: LV_CRASH
            size: 20%
            mount: /var/crash
            fstype: ext4
            mkfs-opts: -O large_file
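Because every VM id in the servers section of pass_through.yml must exactly match an id in servers.yml, a quick consistency check before running the Configuration Processor can catch typos. The following Bash functions are a minimal, heuristic sketch; server_ids and missing_ids are hypothetical names. They are line-based rather than a YAML parser, and assume unquoted id values on their own lines, as in the examples above.

```shell
# Hypothetical, line-based check (not a YAML parser, not shipped with
# SUSE OpenStack Cloud). Assumes unquoted 'id:' values on their own lines.

# Print the sorted node ids that appear after the 'servers:' key of a file.
server_ids() {
  awk '
    /^[ \t]*servers:/ { in_servers = 1; next }
    in_servers && /^[ \t]*(- )?id:/ { print $NF }
  ' "$1" | sort -u
}

# Print ids listed in pass_through.yml ($1) that are missing from servers.yml ($2).
missing_ids() {
  comm -23 <(server_ids "$1") <(server_ids "$2")
}
```

For example, in ~/openstack/my_cloud/definition/data, missing_ids pass_through.yml servers.yml prints nothing when every id matches.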

27.14 Running the Configuration Processor With Applied Changes #

If the changes are being applied to a previously deployed cloud, then after the previous section is completed, the Configuration Processor should be run with the changes that were applied.

If nodes have been added after deployment, run the site.yml playbook with the generate_hosts_file tag:

ardana > cd ~/scratch/ansible/next/ardana/ansible
ardana > ansible-playbook -i hosts/verb_hosts site.yml --tag "generate_hosts_file"

Run the Configuration Processor:

ardana > cd ~/openstack/ardana/ansible
ardana > ansible-playbook -i hosts/localhost config-processor-run.yml \
  -e remove_deleted_servers="y" -e free_unused_addresses="y"
ardana > ansible-playbook -i hosts/localhost ready-deployment.yml

Run the site.yml playbook against only the VMs that were added. The --limit value must be a single comma-separated argument with no spaces:

ardana > cd ~/scratch/ansible/next/ardana/ansible/
ardana > ansible-playbook -i hosts/verb_hosts site.yml --limit hlm004-cp1-esx-comp0001-mgmt,hlm004-cp1-esx-ovsvapp0001-mgmt,hlm004-cp1-esx-ovsvapp0002-mgmt

Add the nodes to monitoring by running the following playbook:

ardana > ansible-playbook -i hosts/verb_hosts monasca-deploy.yml --tags "active_ping_checks"
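The --limit list for site.yml must reach ansible-playbook as one comma-separated argument. A space after a comma, for example one left over from a line continuation, splits it into two arguments. The following Bash function is a minimal sketch for building that argument from a list of host names; join_limit is a hypothetical name, not part of SUSE OpenStack Cloud.

```shell
# Hypothetical helper (not shipped with SUSE OpenStack Cloud): join host
# names into one comma-separated argument with no spaces.
join_limit() {
  local IFS=,
  # "$*" joins all arguments with the first character of IFS (a comma).
  printf '%s\n' "$*"
}
```

For example: ansible-playbook -i hosts/verb_hosts site.yml --limit "$(join_limit hlm004-cp1-esx-comp0001-mgmt hlm004-cp1-esx-ovsvapp0001-mgmt hlm004-cp1-esx-ovsvapp0002-mgmt)".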

If the changes are being applied ahead of deploying a new (greenfield) cloud, then after the previous section is completed, the following steps should be run.

Run the Configuration Processor:

ardana > cd ~/openstack/ardana/ansible
ardana > ansible-playbook -i hosts/localhost config-processor-run.yml
ardana > ansible-playbook -i hosts/localhost ready-deployment.yml

Run the site.yml playbook to deploy the cloud:

ardana > cd ~/scratch/ansible/next/ardana/ansible/
ardana > ansible-playbook -i hosts/verb_hosts site.yml

27.15 Test the ESX-OVSvApp Environment #

When all of the preceding installation steps have been completed, test the ESX-OVSvApp environment with the following steps:

SSH to the Controller.

Source the service.osrc file.

Create a Network.

Create a Subnet.

Create a VMware-based glance image if one is not already available in the glance repository. The following instructions can be used to create an image that nova can use to create a VM in vCenter.

Download a vmdk image file for the distribution that you want to run in the VM.

Create a glance image for the VMware hypervisor. Replace DISTRO with the image name (leap in the output below) and SERVER_CLOUDIMG.VMDK with the downloaded file:

ardana > openstack image create --container-format bare --disk-format vmdk \
  --property vmware_disktype="sparse" --property vmware_adaptertype="ide" \
  --property hypervisor_type=vmware --file SERVER_CLOUDIMG.VMDK DISTRO
+--------------------+--------------------------------------+
| Property           | Value                                |
+--------------------+--------------------------------------+
| checksum           | 45a4a06997e64f7120795c68beeb0e3c     |
| container_format   | bare                                 |
| created_at         | 2018-02-17T10:42:14Z                 |
| disk_format        | vmdk                                 |
| hypervisor_type    | vmware                               |
| id                 | 17e4915a-ada0-4b95-bacf-ba67133f39a7 |
| min_disk           | 0                                    |
| min_ram            | 0                                    |
| name               | leap                                 |
| owner              | 821b7bb8148f439191d108764301af64     |
| protected          | False                                |
| size               | 372047872                            |
| status             | active                               |
| tags               | []                                   |
| updated_at         | 2018-02-17T10:42:23Z                 |
| virtual_size       | None                                 |
| visibility         | shared                               |
| vmware_adaptertype | ide                                  |
| vmware_disktype    | sparse                               |
+--------------------+--------------------------------------+

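The image data must be uploaded; otherwise the image size remains 0. Passing the file when the image is created, as above, uploads the data. If you created the image without data, upload it separately, for example with the glance client:

ardana > glance image-upload --file ./SERVER_CLOUDIMG.VMDK 17e4915...133f39a7

Note that openstack image save downloads an image to a local file; it does not upload one.

After uploading, check that the image is active and has a valid size.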

ardana > openstack image list
+--------------------------------------+------------------------+--------+
| ID                                   | Name                   | Status |
+--------------------------------------+------------------------+--------+
| c48a9349-8e5c-4ca7-81ac-9ed8e2cab3aa | cirros-0.3.2-i386-disk | active |
| 17e4915a-ada0-4b95-bacf-ba67133f39a7 | leap                   | active |
+--------------------------------------+------------------------+--------+

Check the details of the image:

ardana > openstack image show 17e4915...133f39a7
+------------------+------------------------------------------------------------------------------+
| Field            | Value                                                                        |
+------------------+------------------------------------------------------------------------------+
| checksum         | 45a4a06997e64f7120795c68beeb0e3c                                             |
| container_format | bare                                                                         |
| created_at       | 2018-02-17T10:42:14Z                                                         |
| disk_format      | vmdk                                                                         |
| file             | /v2/images/40aa877c-2b7a-44d6-9b6d-f635dcbafc77/file                         |
| id               | 17e4915a-ada0-4b95-bacf-ba67133f39a7                                         |
| min_disk         | 0                                                                            |
| min_ram          | 0                                                                            |
| name             | leap                                                                         |
| owner            | 821b7bb8148f439191d108764301af64                                             |
| properties       | hypervisor_type='vmware', vmware_adaptertype='ide', vmware_disktype='sparse' |
| protected        | False                                                                        |
| schema           | /v2/schemas/image                                                            |
| size             | 372047872                                                                    |
| status           | active                                                                       |
| tags             |                                                                              |
| updated_at       | 2018-02-17T10:42:23Z                                                         |
| virtual_size     | None                                                                         |
| visibility       | shared                                                                       |
+------------------+------------------------------------------------------------------------------+
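The "active and has a valid size" check can also be scripted, which is handy when several images are prepared. The following Bash function is a minimal sketch; image_ready is a hypothetical name, not part of SUSE OpenStack Cloud. It expects the status and size values as arguments, which can be fetched with the standard -f value and -c output options of openstack image show.

```shell
# Hypothetical helper (not shipped with SUSE OpenStack Cloud).
# Succeed only if the image status is 'active' and its size is a positive integer.
image_ready() {
  local status="${1:-}" size="${2:-}"
  [ "$status" = "active" ] || return 1
  [[ "$size" =~ ^[0-9]+$ ]] || return 1   # rejects empty values and 'None'
  [ "$size" -gt 0 ]
}
```

For example, set status=$(openstack image show 17e4915a-ada0-4b95-bacf-ba67133f39a7 -f value -c status) and size=$(openstack image show 17e4915a-ada0-4b95-bacf-ba67133f39a7 -f value -c size), then run image_ready "$status" "$size" && echo "image is ready".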

Create security rules. All security rules must be created before any VMs are created; otherwise the security rules will have no impact, and rebooting or restarting neutron-ovsvapp-agent will not change this. The following example creates a security rule for ICMP:

ardana > openstack security group rule create default --protocol icmp

Create a nova instance with the VMware VMDK-based image and target it to the new cluster in the vCenter.

The new VM will appear in the vCenter.

The respective PortGroups for the OVSvApp on the Trunk-DVS will be created and connected.

Test the VM for connectivity and service.