26 Magnum Overview

The SUSE OpenStack Cloud Magnum Service makes container orchestration engines such as Docker Swarm, Kubernetes, and Apache Mesos available as first-class resources. SUSE OpenStack Cloud Magnum uses heat to orchestrate an OS image that contains Docker and Kubernetes, and runs that image on either virtual machines or bare metal in a cluster configuration.

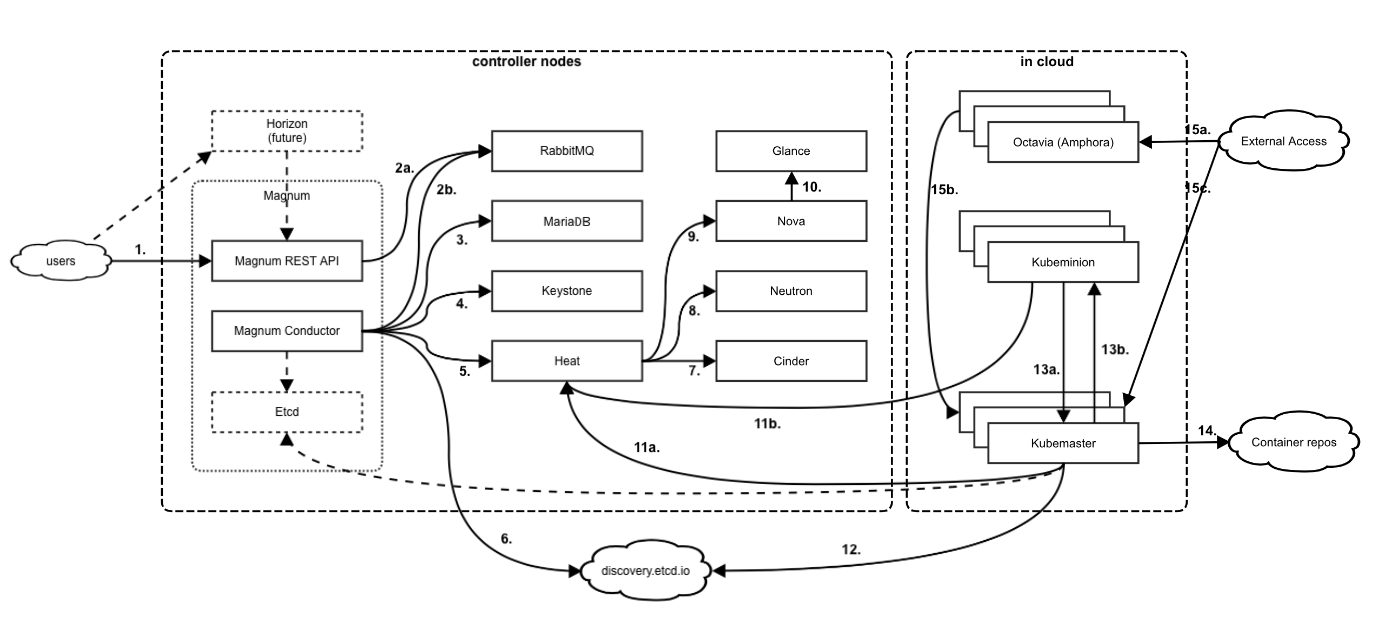

26.1 Magnum Architecture

As an OpenStack API service, Magnum provides Container as a Service (CaaS) functionality. Magnum is capable of working with container orchestration engines (COEs) such as Kubernetes, Docker Swarm, and Apache Mesos. Some operations work with a User CRUD (Create, Read, Update, Delete) filter.

Components

Magnum API: RESTful API for cluster and cluster template operations.

Magnum Conductor: Performs operations on clusters requested by Magnum API in an asynchronous manner.

Magnum CLI: Command-line interface to the Magnum API.

Etcd (planned, currently using public service): Remote key/value storage for distributed cluster bootstrap and discovery.

Kubemaster (in case of Kubernetes COE): One or more VMs or bare-metal servers that form the control plane of a Kubernetes cluster.

Kubeminion (in case of Kubernetes COE): One or more VMs or bare-metal servers that act as workload nodes of a Kubernetes cluster.
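The split between Magnum API and Magnum Conductor can be pictured with a small in-process sketch. This is only an illustration of the asynchronous pattern described above, not Magnum code: the in-memory queue stands in for the message queue, and the function names and the `CREATE_COMPLETE` status string are assumptions made for the example.

```python
import queue
import threading


def conductor(inbox: queue.Queue, clusters: dict) -> None:
    """Consume queued requests and apply them, as a conductor worker would."""
    while True:
        request = inbox.get()
        if request is None:  # sentinel value: stop the worker
            break
        action, name = request
        if action == "create":
            clusters[name] = "CREATE_COMPLETE"  # illustrative status string
        elif action == "delete":
            clusters.pop(name, None)


def api_create_cluster(inbox: queue.Queue, name: str) -> str:
    """Enqueue the request and return at once, without waiting for the result."""
    inbox.put(("create", name))
    return "accepted"


def run_demo() -> dict:
    """Start one worker, submit one request, and return the resulting state."""
    inbox = queue.Queue()
    clusters = {}
    worker = threading.Thread(target=conductor, args=(inbox, clusters))
    worker.start()
    api_create_cluster(inbox, "demo-cluster")
    inbox.put(None)  # ask the worker to stop after the queued request
    worker.join()
    return clusters
```

The API function returns before the work is done; the caller learns the outcome later by reading the cluster state, which mirrors how a client polls the cluster status after a create request.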

| Data Name | Confidentiality | Integrity | Availability | Backup? | Description |
|---|---|---|---|---|---|
| Session Tokens | Confidential | High | Medium | No | Session tokens not stored. |
| System Request | Confidential | High | Medium | No | Data in motion or in MQ not stored. |
| MariaDB Database "Magnum" | Confidential | High | High | Yes | Contains user preferences. Backed up to swift daily. |
| etcd data | Confidential | High | Low | No | Kubemaster IPs and cluster info. Only used during cluster bootstrap. |

| Interface | Network | Request | Response | Operation Description |
|---|---|---|---|---|
| 1 | Name: External-API; Protocol: HTTPS | Request: Manage clusters; Requester: User; Credentials: keystone token; Authorization: Manage objects that belong to current project; Listener: Magnum API | Operation status with or without data; Credentials: TLS certificate | CRUD operations on cluster templates and clusters |
| 2a | Name: Internal-API; Protocol: AMQP over HTTPS | Request: Enqueue messages; Requester: Magnum API; Credentials: RabbitMQ username, password; Authorization: RabbitMQ queue read/write operations; Listener: RabbitMQ | Operation status; Credentials: TLS certificate | Notifications issued when cluster CRUD operations requested |
| 2b | Name: Internal-API; Protocol: AMQP over HTTPS | Request: Read queued messages; Requester: Magnum Conductor; Credentials: RabbitMQ username, password; Authorization: RabbitMQ queue read/write operations; Listener: RabbitMQ | Operation status; Credentials: TLS certificate | Notifications issued when cluster CRUD operations requested |
| 3 | Name: Internal-API; Protocol: MariaDB over HTTPS | Request: Persist data in MariaDB; Requester: Magnum Conductor; Credentials: MariaDB username, password; Authorization: Magnum database; Listener: MariaDB | Operation status with or without data; Credentials: TLS certificate | Persist cluster/cluster template data, read persisted data |
| 4 | Name: Internal-API; Protocol: HTTPS | Request: Create per-cluster user in dedicated domain, no role assignments initially; Requester: Magnum Conductor; Credentials: Trustee domain admin username, password; Authorization: Manage users in dedicated Magnum domain; Listener: keystone | Operation status with or without data; Credentials: TLS certificate | Magnum generates user record in a dedicated keystone domain for each cluster |
| 5 | Name: Internal-API; Protocol: HTTPS | Request: Create per-cluster user stack; Requester: Magnum Conductor; Credentials: keystone token; Authorization: Limited to scope of authorized user; Listener: heat | Operation status with or without data; Credentials: TLS certificate | Magnum creates heat stack for each cluster |
| 6 | Name: External Network; Protocol: HTTPS | Request: Bootstrap a cluster in public discovery https://discovery.etcd.io/; Requester: Magnum Conductor; Credentials: Unguessable URL over HTTPS, only available to software processes needing it; Authorization: Read and update; Listener: Public discovery service | Cluster discovery URL; Credentials: TLS certificate | Create key/value registry of specified size in public storage. This is used to stand up a cluster of kubernetes master nodes (refer to interface call #12). |
| 7 | Name: Internal-API; Protocol: HTTPS | Request: Create cinder volumes; Requester: heat Engine; Credentials: keystone token; Authorization: Limited to scope of authorized user; Listener: cinder API | Operation status with or without data; Credentials: TLS certificate | heat creates cinder volumes as part of stack. |
| 8 | Name: Internal-API; Protocol: HTTPS | Request: Create networks, routers, load balancers; Requester: heat Engine; Credentials: keystone token; Authorization: Limited to scope of authorized user; Listener: neutron API | Operation status with or without data; Credentials: TLS certificate | heat creates networks, routers, load balancers as part of the stack. |
| 9 | Name: Internal-API; Protocol: HTTPS | Request: Create nova VMs, attach volumes; Requester: heat Engine; Credentials: keystone token; Authorization: Limited to scope of authorized user; Listener: nova API | Operation status with or without data; Credentials: TLS certificate | heat creates nova VMs as part of the stack. |
| 10 | Name: Internal-API; Protocol: HTTPS | Request: Read pre-configured glance image; Requester: nova; Credentials: keystone token; Authorization: Limited to scope of authorized user; Listener: glance API | Operation status with or without data; Credentials: TLS certificate | nova uses pre-configured image in glance to bootstrap VMs. |
| 11a | Name: External-API; Protocol: HTTPS | Request: heat notification; Requester: Cluster member (VM or ironic node); Credentials: keystone token; Authorization: Limited to scope of authorized user; Listener: heat API | Operation status with or without data; Credentials: TLS certificate | heat uses OS::heat::WaitCondition resource. VM is expected to call heat notification URL upon completion of certain bootstrap operation. |
| 11b | Name: External-API; Protocol: HTTPS | Request: heat notification; Requester: Cluster member (VM or ironic node); Credentials: keystone token; Authorization: Limited to scope of authorized user; Listener: heat API | Operation status with or without data; Credentials: TLS certificate | heat uses OS::heat::WaitCondition resource. VM is expected to call heat notification URL upon completion of certain bootstrap operation. |
| 12 | Name: External-API; Protocol: HTTPS | Request: Update cluster member state in a public registry at https://discovery.etcd.io; Requester: Cluster member (VM or ironic node); Credentials: Unguessable URL over HTTPS, only available to software processes needing it; Authorization: Read and update; Listener: Public discovery service | Operation status; Credentials: TLS certificate | Update key/value pair in a registry created by interface call #6. |
| 13a | Name: VxLAN encapsulated private network on the Guest network; Protocol: HTTPS | Request: Various communications inside Kubernetes cluster; Requester: Cluster member (VM or ironic node); Credentials: Tenant specific; Authorization: Tenant specific; Listener: Cluster member (VM or ironic node) | Tenant specific; Credentials: TLS certificate | Various calls performed to build Kubernetes clusters, deploy applications and put workload |
| 13b | Name: VxLAN encapsulated private network on the Guest network; Protocol: HTTPS | Request: Various communications inside Kubernetes cluster; Requester: Cluster member (VM or ironic node); Credentials: Tenant specific; Authorization: Tenant specific; Listener: Cluster member (VM or ironic node) | Tenant specific; Credentials: TLS certificate | Various calls performed to build Kubernetes clusters, deploy applications and put workload |
| 14 | Name: Guest/External; Protocol: HTTPS | Request: Download container images; Requester: Cluster member (VM or ironic node); Credentials: None; Authorization: None; Listener: External | Container image data; Credentials: TLS certificate | Kubernetes makes calls to external repositories to download pre-packed container images |
| 15a | Name: External/EXT_VM (Floating IP); Protocol: HTTPS | Request: Tenant specific; Requester: Tenant specific; Credentials: Tenant specific; Authorization: Tenant specific; Listener: Octavia load balancer | Tenant specific; Credentials: Tenant specific | External workload handled by container applications |
| 15b | Name: Guest; Protocol: HTTPS | Request: Tenant specific; Requester: Tenant specific; Credentials: Tenant specific; Authorization: Tenant specific; Listener: Cluster member (VM or ironic node) | Tenant specific; Credentials: Tenant specific | External workload handled by container applications |
| 15c | Name: External/EXT_VM (Floating IP); Protocol: HTTPS | Request: Tenant specific; Requester: Tenant specific; Credentials: Tenant specific; Authorization: Tenant specific; Listener: Cluster member (VM or ironic node) | Tenant specific; Credentials: Tenant specific | External workload handled by container applications |

Dependencies

keystone

RabbitMQ

MariaDB

heat

glance

nova

cinder

neutron

barbican

swift

Implementation

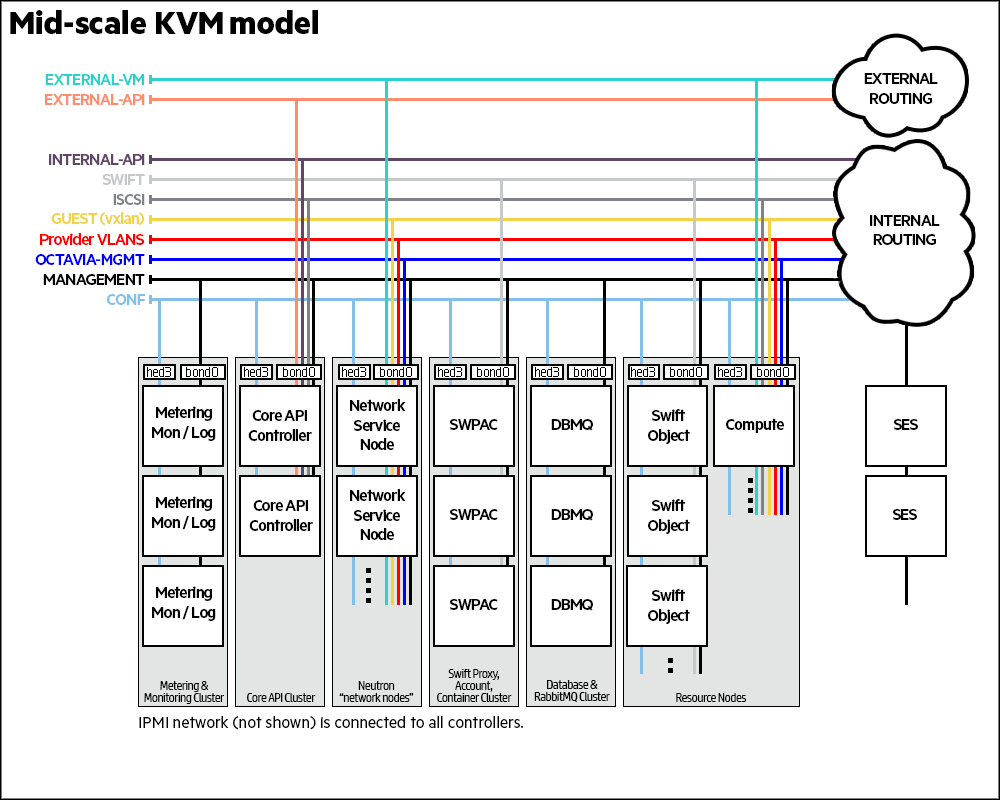

Magnum API and Magnum Conductor are run on the SUSE OpenStack Cloud controllers (or core nodes in case of mid-scale deployments).

| Source CIDR/Security Group | Port/Range | Protocol | Notes |
|---|---|---|---|
| Any IP | 22 | SSH | Tenant Admin access |
| Any IP/Kubernetes Security Group | 2379-2380 | HTTPS | Etcd Traffic |
| Any IP/Kubernetes Security Group | 6443 | HTTPS | kube-apiserver |
| Any IP/Kubernetes Security Group | 7080 | HTTPS | kube-apiserver |
| Any IP/Kubernetes Security Group | 8080 | HTTPS | kube-apiserver |
| Any IP/Kubernetes Security Group | 30000-32767 | HTTPS | kube-apiserver |
| Any IP/Kubernetes Security Group | any | tenant app specific | tenant app specific |
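When reviewing a cluster's security group, it can help to check a port against the fixed rows of the table above. The following is a minimal sketch that transcribes those rows into a list; the final "any / tenant app specific" row is left out because it depends on the tenant application, and the function name is an assumption for this example.

```python
# Ingress rules transcribed from the table above: (first port, last port, note).
# The tenant-specific "any" row is intentionally not modelled here.
RULES = [
    (22, 22, "Tenant Admin access"),
    (2379, 2380, "Etcd Traffic"),
    (6443, 6443, "kube-apiserver"),
    (7080, 7080, "kube-apiserver"),
    (8080, 8080, "kube-apiserver"),
    (30000, 32767, "kube-apiserver"),
]


def matching_rule(port: int):
    """Return the note of the first rule covering the port, or None."""
    for first, last, note in RULES:
        if first <= port <= last:
            return note
    return None
```

A port that returns `None` is not covered by the fixed rows and would need a tenant-specific rule.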

| Port/Range | Protocol | Notes |
|---|---|---|
| 22 | SSH | Admin Access |
| 9511 | HTTPS | Magnum API Access |
| 2379-2380 | HTTPS | Etcd (planned) |

Summary of controls spanning multiple components and interfaces:

Audit: Magnum performs logging. Logs are collected by the centralized logging service.

Authentication: Authentication via keystone tokens at APIs. Password authentication to MQ and DB using specific users with randomly-generated passwords.

Authorization: OpenStack provides admin and non-admin roles that are indicated in session tokens. Processes run at minimum privilege. Processes run as unique user/group definitions (magnum/magnum). Appropriate filesystem controls prevent other processes from accessing the service's files. The Magnum config file is mode 600. Logs are written using group adm, user magnum, mode 640. iptables rules ensure that no unneeded ports are open. Security Groups provide authorization controls between in-cloud components.

Availability: Redundant hosts, clustered DB, and fail-over provide high availability.

Confidentiality: Network connections over TLS. Network separation via VLANs. Data and config files protected via filesystem controls. Unencrypted local traffic is bound to localhost. Separation of customer traffic on the TUL network via OpenFlow (VxLANs).

Integrity: Network connections over TLS. Network separation via VLANs. DB API integrity protected by SQLAlchemy. Data and config files are protected by filesystem controls. Unencrypted traffic is bound to localhost.
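The file modes named in the authorization controls (600 for the config file, 640 for logs) can be verified with a short check. The sketch below is an assumption-laden illustration: it runs against a temporary file rather than any real Magnum path, since the actual file locations are not given here.

```python
import os
import stat
import tempfile


def has_mode(path: str, expected: int) -> bool:
    """Return True if the permission bits of path equal the expected mode."""
    return stat.S_IMODE(os.stat(path).st_mode) == expected


def demo() -> bool:
    """Create a throwaway file, set it to mode 600, and verify the check."""
    with tempfile.NamedTemporaryFile() as handle:
        os.chmod(handle.name, 0o600)
        return has_mode(handle.name, 0o600) and not has_mode(handle.name, 0o640)
```

To audit a real installation, call `has_mode` with the path of the file in question and the mode from the list above.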

26.2 Install the Magnum Service

The Magnum Service can be installed as part of a new SUSE OpenStack Cloud 9 environment or added to an existing SUSE OpenStack Cloud 9 environment. Both installations require that the container management services running in Magnum cluster VMs have access to specific OpenStack API endpoints. The following TCP ports need to be open in your firewall to allow access from VMs to external (public) SUSE OpenStack Cloud endpoints.

| TCP Port | Service |
|---|---|
| 5000 | Identity |
| 8004 | heat |
| 9511 | Magnum |
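A quick way to confirm that the firewall allows these ports is to attempt a TCP connection to each one from a cluster VM. The following is a minimal sketch using only the standard library; the function names and the default timeout are assumptions, and the host to test is whatever your public endpoint address is.

```python
import socket

# Ports from the table above, mapped to the service that listens on each.
REQUIRED_PORTS = {5000: "Identity", 8004: "heat", 9511: "Magnum"}


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_endpoints(host: str, ports=REQUIRED_PORTS) -> dict:
    """Map each port to True (reachable) or False (blocked or closed)."""
    return {port: port_is_open(host, port) for port in ports}
```

A successful TCP connection shows only that the port is reachable; it does not validate the TLS certificate or the service behind it.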

Magnum is dependent on the following OpenStack services.

keystone

heat

nova KVM

neutron

glance

cinder

swift

barbican

Magnum relies on the public discovery service https://discovery.etcd.io during cluster bootstrapping and update. This service does not perform authentication checks. Although a running cluster cannot be harmed by unauthorized changes in the public discovery registry, it can be compromised during a cluster update operation. To avoid this, it is recommended that you keep your cluster discovery URL (that is, https://discovery.etcd.io/SOME_RANDOM_ID) secret.
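One practical way to keep the discovery URL secret is to mask it before log excerpts or command output are shared. The sketch below is an illustration only: the pattern assumes the random ID consists of letters and digits, and the function name is made up for this example.

```python
import re

# Assumption: the random ID after the host consists of letters and digits.
DISCOVERY_URL = re.compile(r"https://discovery\.etcd\.io/[A-Za-z0-9]+")


def redact_discovery_urls(text: str) -> str:
    """Replace the random ID of every discovery URL in text with a placeholder."""
    return DISCOVERY_URL.sub("https://discovery.etcd.io/<redacted>", text)
```

For example, `redact_discovery_urls("url=https://discovery.etcd.io/abc123")` yields `url=https://discovery.etcd.io/<redacted>`.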

26.2.1 Installing Magnum as part of new SUSE OpenStack Cloud 9 environment

Magnum components are already included in example SUSE OpenStack Cloud models based on nova KVM, such as entry-scale-kvm, entry-scale-kvm-mml, and mid-scale. These models contain the Magnum dependencies (see above). You can follow the generic installation instructions for the Mid-Scale and Entry-Scale KVM models in Chapter 24, Installing Mid-scale and Entry-scale KVM.

If you modify the cloud model to utilize a dedicated Cloud Lifecycle Manager, add the magnum-client item to the list of service components for the Cloud Lifecycle Manager cluster.

Magnum needs a properly configured external endpoint. While preparing the cloud model, ensure that the external-name setting in data/network_groups.yml is set to a valid hostname, which can be resolved on the DNS server, and that a valid TLS certificate is installed for your external endpoint. For non-production test installations, you can omit external-name. In test installations, the SUSE OpenStack Cloud installer will use an IP address as a public endpoint hostname, and automatically generate a new certificate, signed by the internal CA. Please refer to Chapter 41, Configuring Transport Layer Security (TLS) for more details.
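Adding a service component to a cluster amounts to appending an entry to that cluster's component list in the cloud model. The sketch below shows the idea on an already-parsed model held as Python dictionaries. The key names (`control-planes`, `clusters`, `server-role`, `service-components`) reflect the layout assumed for this example, and `LIFECYCLE-MANAGER-ROLE` is a hypothetical role name; check both against your own model files.

```python
def add_components(model: dict, server_roles: set, components: list) -> int:
    """Append missing components to every cluster whose server-role matches.

    Returns the number of clusters that were changed.
    """
    changed = 0
    for plane in model.get("control-planes", []):
        for cluster in plane.get("clusters", []):
            if cluster.get("server-role") not in server_roles:
                continue
            existing = cluster.setdefault("service-components", [])
            missing = [name for name in components if name not in existing]
            existing.extend(missing)
            if missing:
                changed += 1
    return changed


# Hypothetical model fragment with a dedicated Cloud Lifecycle Manager cluster.
example_model = {
    "control-planes": [
        {
            "clusters": [
                {
                    "server-role": "LIFECYCLE-MANAGER-ROLE",
                    "service-components": ["lifecycle-manager"],
                },
                {"server-role": "CONTROLLER-ROLE", "service-components": []},
            ]
        }
    ]
}
```

Calling the function twice with the same arguments changes nothing the second time, so the edit is safe to repeat.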

26.2.2 Adding Magnum to an Existing SUSE OpenStack Cloud Environment

Adding Magnum to an already deployed SUSE OpenStack Cloud 9 installation or during an upgrade can be achieved by performing the following steps.

Add the items listed below to the list of service components in ~/openstack/my_cloud/definition/data/control_plane.yml. Add them to clusters which have server-role set to CONTROLLER-ROLE (entry-scale models) or CORE_ROLE (mid-scale model).

    - magnum-api
    - magnum-conductor

If your environment utilizes a dedicated Cloud Lifecycle Manager, add magnum-client to the list of service components for the Cloud Lifecycle Manager.

Commit your changes to the local git repository. Run the following playbooks as described in Chapter 22, Using Git for Configuration Management for your installation.

    config-processor-run.yml
    ready-deployment.yml
    site.yml

Ensure that your external endpoint is configured correctly. The current public endpoint configuration can be verified by running the following commands on the Cloud Lifecycle Manager.

    $ source service.osrc
    $ openstack endpoint list --interface=public --service=identity
    +-----------+---------+--------------+----------+---------+-----------+------------------------+
    | ID        | Region  | Service Name | Service  | Enabled | Interface | URL                    |
    |           |         |              | Type     |         |           |                        |
    +-----------+---------+--------------+----------+---------+-----------+------------------------+
    | d83...aa3 | region0 | keystone     | identity | True    | public    | https://10.245.41.168: |
    |           |         |              |          |         |           | 5000/v2.0              |
    +-----------+---------+--------------+----------+---------+-----------+------------------------+

Ensure that the endpoint URL is using either an IP address or a valid hostname, which can be resolved on the DNS server. If the URL is using an invalid hostname (for example, myardana.test), follow the steps in Chapter 41, Configuring Transport Layer Security (TLS) to configure a valid external endpoint. You will need to update the external-name setting in data/network_groups.yml to a valid hostname, which can be resolved on the DNS server, and provide a valid TLS certificate for the external endpoint. For non-production test installations, you can omit external-name. The SUSE OpenStack Cloud installer will use an IP address as the public endpoint hostname, and automatically generate a new certificate, signed by the internal CA. For more information, see Chapter 41, Configuring Transport Layer Security (TLS).
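The endpoint check described above (IP address, resolvable hostname, or neither) can be sketched in a few lines. This is an illustration with assumed function and label names; note that it uses the resolver of the machine it runs on, which may differ from the DNS server that the cluster VMs use.

```python
import ipaddress
import socket
from urllib.parse import urlsplit


def endpoint_host_kind(url: str) -> str:
    """Classify the host part of an endpoint URL.

    Returns "ip", "resolvable", "unresolvable", or "invalid".
    """
    host = urlsplit(url).hostname
    if host is None:
        return "invalid"
    try:
        ipaddress.ip_address(host)
        return "ip"
    except ValueError:
        pass  # not an IP literal, so treat it as a hostname
    try:
        socket.getaddrinfo(host, None)
        return "resolvable"
    except socket.gaierror:
        return "unresolvable"
```

An endpoint classified as "unresolvable" corresponds to the invalid-hostname case and calls for the TLS and external-name configuration steps referenced above.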

By default SUSE OpenStack Cloud stores the private key used by Magnum and its passphrase in barbican which provides a secure place to store such information. You can change this such that this sensitive information is stored on the file system or in the database without encryption. Making such a change exposes you to the risk of this information being exposed to others. If stored in the database then any database backups, or a database breach, could lead to the disclosure of the sensitive information. Similarly, if stored unencrypted on the file system this information is exposed more broadly than if stored in barbican.

26.3 Integrate Magnum with the DNS Service

Integration with DNSaaS may be needed if:

The external endpoint is configured to use myardana.test as host name and the SUSE OpenStack Cloud front-end certificate is issued for this host name.

Minions are registered using nova VM names as hostnames in the Kubernetes API server. Most kubectl commands will not work if the VM name (for example, cl-mu3eevqizh-1-b3vifun6qtuh-kube-minion-ff4cqjgsuzhy) is not resolved by the provided DNS server.

Follow these steps to integrate the Magnum Service with the DNS Service.

Allow connections from VMs to EXT-API

    sudo modprobe 8021q
    sudo ip link add link virbr5 name vlan108 type vlan id 108
    sudo ip link set dev vlan108 up
    sudo ip addr add 192.168.14.200/24 dev vlan108
    sudo iptables -t nat -A POSTROUTING -o vlan108 -j MASQUERADE

Run the designate reconfigure playbook.

    $ cd ~/scratch/ansible/next/ardana/ansible/
    $ ansible-playbook -i hosts/verb_hosts designate-reconfigure.yml

Set up designate to resolve myardana.test correctly.

    $ openstack zone create --email hostmaster@myardana.test myardana.test.
    # wait for status to become active
    $ EXTERNAL_VIP=$(grep HZN-WEB-extapi /etc/hosts | awk '{ print $1 }')
    $ openstack recordset create --records $EXTERNAL_VIP --type A myardana.test. myardana.test.
    # wait for status to become active
    $ LOCAL_MGMT_IP=$(grep `hostname` /etc/hosts | awk '{ print $1 }')
    $ nslookup myardana.test $LOCAL_MGMT_IP
    Server: 192.168.14.2
    Address: 192.168.14.2#53
    Name: myardana.test
    Address: 192.168.14.5

If you need to add or override a top-level domain record, use the following example, substituting proxy.example.org with your own real address:

    $ openstack tld create --name net
    $ openstack zone create --email hostmaster@proxy.example.org proxy.example.org.
    $ openstack recordset create --records 16.85.88.10 --type A proxy.example.org. proxy.example.org.
    $ nslookup proxy.example.org. 192.168.14.2
    Server: 192.168.14.2
    Address: 192.168.14.2#53
    Name: proxy.example.org
    Address: 16.85.88.10

Enable propagation of dns_assignment and dns_name attributes to neutron ports, as per https://docs.openstack.org/neutron/rocky/admin/config-dns-int.html

    # optionally add 'dns_domain = <some domain name>.' to [DEFAULT] section
    # of ardana/ansible/roles/neutron-common/templates/neutron.conf.j2
    stack@ksperf2-cp1-c1-m1-mgmt:~/openstack$ cat <<-EOF >>ardana/services/designate/api.yml
        provides-data:
        -   to:
            -   name: neutron-ml2-plugin
            data:
            -   option: extension_drivers
                values:
                -   dns
    EOF
    $ git commit -a -m "Enable DNS support for neutron ports"
    $ cd ardana/ansible
    $ ansible-playbook -i hosts/localhost config-processor-run.yml
    $ ansible-playbook -i hosts/localhost ready-deployment.yml

Enable DNSaaS registration of created VMs by editing the ~/openstack/ardana/ansible/roles/neutron-common/templates/neutron.conf.j2 file. You will need to add external_dns_driver = designate to the [DEFAULT] section and create a new [designate] section for the designate specific configurations.

    ...
    advertise_mtu = False
    dns_domain = ksperf.
    external_dns_driver = designate
    {{ neutron_api_extensions_path|trim }}
    {{ neutron_vlan_transparent|trim }}
    # Add additional options here
    [designate]
    url = https://10.240.48.45:9001
    admin_auth_url = https://10.240.48.45:35357/v3
    admin_username = designate
    admin_password = P8lZ9FdHuoW
    admin_tenant_name = services
    allow_reverse_dns_lookup = True
    ipv4_ptr_zone_prefix_size = 24
    ipv6_ptr_zone_prefix_size = 116
    ca_cert = /etc/ssl/certs/ca-certificates.crt

Commit your changes.

    $ git commit -a -m "Enable DNSaaS registration of nova VMs"
    [site f4755c0] Enable DNSaaS registration of nova VMs
     1 file changed, 11 insertions(+)
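If you prefer to generate the [designate] section rather than type it by hand, the standard library can render it from a dictionary, which also makes it easier to keep the password out of version-controlled text. The sketch below is only an illustration: the function name is an assumption, and the host name in the usage example is a placeholder, not a value from this guide.

```python
import configparser
import io


def render_designate_section(options: dict) -> str:
    """Render a [designate] INI section from a dict of string options."""
    parser = configparser.ConfigParser()
    parser.optionxform = str  # keep option names exactly as given
    parser["designate"] = options
    buffer = io.StringIO()
    parser.write(buffer)
    return buffer.getvalue()


# Placeholder values for illustration only.
example = render_designate_section(
    {
        "url": "https://designate.example.org:9001",
        "allow_reverse_dns_lookup": "True",
        "ipv4_ptr_zone_prefix_size": "24",
    }
)
```

All values must be passed as strings, and a value containing a percent sign would need to be escaped because `ConfigParser` applies interpolation by default.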