8 Configuration Processor Information Files #

In addition to producing all of the data needed to deploy and configure the cloud, the configuration processor also creates a number of information files that provide details of the resulting configuration.

These files can be found in ~/openstack/my_cloud/info after the first configuration processor run. This directory is also rebuilt each time the Configuration Processor is run.

Most of the files are in YAML format, allowing them to be used in further automation tasks if required.
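As a small illustration of using the info directory in automation, the following Python sketch separates the YAML files from the plain-text ones. The function name list_info_files is hypothetical, and the demonstration runs against a throwaway directory standing in for ~/openstack/my_cloud/info; it is a sketch under those assumptions, not part of the product tooling.

```python
import tempfile
from pathlib import Path


def list_info_files(info_dir):
    """Return (yaml_files, other_files) as sorted lists of file names."""
    info_path = Path(info_dir).expanduser()
    yaml_files, other_files = [], []
    for entry in sorted(info_path.iterdir()):
        if not entry.is_file():
            continue
        if entry.suffix in (".yml", ".yaml"):
            yaml_files.append(entry.name)
        else:
            other_files.append(entry.name)
    return yaml_files, other_files


# Demonstration against a temporary directory (a stand-in for the real info directory).
with tempfile.TemporaryDirectory() as tmp:
    for name in ("address_info.yml", "explain.txt", "CloudDiagram.txt"):
        (Path(tmp) / name).write_text("")
    yaml_files, other_files = list_info_files(tmp)

print(yaml_files)
print(other_files)
```

On a deployed system the same function could be pointed at ~/openstack/my_cloud/info, and the YAML files could then be handed to whichever YAML parser is available.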

| File | Provides details of |
|---|---|
| address_info.yml | IP address assignments on each network. See Section 8.1, “address_info.yml” |
| firewall_info.yml | All ports that are open on each network by the firewall configuration. Can be used if you want to configure an additional firewall in front of the API network, for example. See Section 8.2, “firewall_info.yml” |
| route_info.yml | Routes that need to be configured between networks. See Section 8.3, “route_info.yml” |
| server_info.yml | How servers have been allocated, including their network configuration. Allows details of a server to be found from its ID. See Section 8.4, “server_info.yml” |
| service_info.yml | Where components of each service are deployed. See Section 8.5, “service_info.yml” |
| control_plane_topology.yml | The structure of the cloud from the perspective of each control plane. See Section 8.6, “control_plane_topology.yml” |
| network_topology.yml | The structure of the cloud from the perspective of each network group. See Section 8.7, “network_topology.yml” |
| region_topology.yml | The structure of the cloud from the perspective of each region. See Section 8.8, “region_topology.yml” |
| service_topology.yml | The structure of the cloud from the perspective of each service. See Section 8.9, “service_topology.yml” |
| private_data_metadata_ccp.yml | The secrets that are generated by the configuration processor: the names of the secrets, along with the service(s) that use each secret and a list of the clusters on which the service that consumes the secret is deployed. See Section 8.10, “private_data_metadata_ccp.yml” |
| password_change.yml | The secrets that have been changed by the configuration processor. The information for each secret is the same as for private_data_metadata_ccp.yml. See Section 8.11, “password_change.yml” |
| explain.txt | An explanation of the decisions the configuration processor has made when allocating servers and networks. See Section 8.12, “explain.txt” |
| CloudDiagram.txt | A pictorial representation of the cloud. See Section 8.13, “CloudDiagram.txt” |

The examples are taken from the entry-scale-kvm example configuration.

8.1 address_info.yml #

This file provides details of all the IP addresses allocated by the Configuration Processor:

    NETWORK GROUPS
      LIST OF NETWORKS
        IP ADDRESS
          LIST OF ALIASES

Example:

    EXTERNAL-API:
      EXTERNAL-API-NET:
        10.0.1.2:
          - ardana-cp1-c1-m1-extapi
        10.0.1.3:
          - ardana-cp1-c1-m2-extapi
        10.0.1.4:
          - ardana-cp1-c1-m3-extapi
        10.0.1.5:
          - ardana-cp1-vip-public-SWF-PRX-extapi
          - ardana-cp1-vip-public-FRE-API-extapi
          - ardana-cp1-vip-public-GLA-API-extapi
          - ardana-cp1-vip-public-HEA-ACW-extapi
          - ardana-cp1-vip-public-HEA-ACF-extapi
          - ardana-cp1-vip-public-NEU-SVR-extapi
          - ardana-cp1-vip-public-KEY-API-extapi
          - ardana-cp1-vip-public-MON-API-extapi
          - ardana-cp1-vip-public-HEA-API-extapi
          - ardana-cp1-vip-public-NOV-API-extapi
          - ardana-cp1-vip-public-CND-API-extapi
          - ardana-cp1-vip-public-CEI-API-extapi
          - ardana-cp1-vip-public-SHP-API-extapi
          - ardana-cp1-vip-public-OPS-WEB-extapi
          - ardana-cp1-vip-public-HZN-WEB-extapi
          - ardana-cp1-vip-public-NOV-VNC-extapi
    EXTERNAL-VM:
      EXTERNAL-VM-NET: {}
    GUEST:
      GUEST-NET:
        10.1.1.2:
          - ardana-cp1-c1-m1-guest
        10.1.1.3:
          - ardana-cp1-c1-m2-guest
        10.1.1.4:
          - ardana-cp1-c1-m3-guest
        10.1.1.5:
          - ardana-cp1-comp0001-guest
    MANAGEMENT:
      ...

8.2 firewall_info.yml #

This file provides details of all the network ports that will be opened on the deployed cloud. Data is ordered by network. If you want to configure an external firewall in front of the External API network, then you would need to open the ports listed in that section.

    NETWORK NAME
      List of:
        PORT
        PROTOCOL
        LIST OF IP ADDRESSES
        LIST OF COMPONENTS

Example:

    EXTERNAL-API:
    - addresses:
      - 10.0.1.5
      components:
      - horizon
      port: '443'
      protocol: tcp
    - addresses:
      - 10.0.1.5
      components:
      - keystone-api
      port: '5000'
      protocol: tcp

Port 443 (tcp) is open on network EXTERNAL-API for address 10.0.1.5 because it is used by horizon

Port 5000 (tcp) is open on network EXTERNAL-API for address 10.0.1.5 because it is used by keystone API
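As a rough illustration of how one network's section could feed an external firewall configuration, the following Python sketch collects the distinct port and protocol pairs for that network. It assumes the file has already been parsed into a dictionary shaped like the example above (for instance with a YAML library) and that every port value is a single port number, as in the example. The function name ports_to_open is hypothetical.

```python
def ports_to_open(firewall_info, network):
    """Return sorted, de-duplicated (port, protocol) pairs for one network."""
    rules = firewall_info.get(network, [])
    # Assumption: each 'port' is a single port number stored as a string.
    pairs = {(int(rule["port"]), rule["protocol"]) for rule in rules}
    return sorted(pairs)


# Data copied from the example in this section, as a parser would return it.
firewall_info = {
    "EXTERNAL-API": [
        {"addresses": ["10.0.1.5"], "components": ["horizon"],
         "port": "443", "protocol": "tcp"},
        {"addresses": ["10.0.1.5"], "components": ["keystone-api"],
         "port": "5000", "protocol": "tcp"},
    ]
}

print(ports_to_open(firewall_info, "EXTERNAL-API"))  # [(443, 'tcp'), (5000, 'tcp')]
```

Using a set before sorting means a port shared by several components is listed only once.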

8.3 route_info.yml #

This file provides details of routes between networks that need to be configured. Available routes are defined in the input model as part of the data; this file shows which routes will actually be used. SUSE OpenStack Cloud will reconfigure routing rules on the servers, but you must configure the corresponding routes within your physical network. Routes must be configured to be symmetrical: only the direction in which a connection is initiated is captured in this file.

Note that simple models may not require any routes, with all servers being attached to common L3 networks. The following example is taken from the tech-preview/mid-scale-kvm example.

    SOURCE-NETWORK-NAME
      TARGET-NETWORK-NAME
        default: TRUE IF THIS IS THE RESULT OF A "DEFAULT" ROUTE RULE
        used_by:
          SOURCE-SERVICE
            TARGET-SERVICE
              LIST OF HOSTS USING THIS ROUTE

Example:

    MANAGEMENT-NET-RACK1:
      INTERNAL-API-NET:
        default: false
        used_by:
        - ardana-cp1-mtrmon-m1
        keystone-api:
        - ardana-cp1-mtrmon-m1
      MANAGEMENT-NET-RACK2:
        default: false
        used_by:
          cinder-backup:
            rabbitmq:
            - ardana-cp1-core-m1

A route is required from network MANAGEMENT-NET-RACK1 to network MANAGEMENT-NET-RACK2 so that cinder-backup can connect to rabbitmq from server ardana-cp1-core-m1.
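Because the file records only the direction in which connections are initiated, the return direction has to be derived when planning routes in the physical network. The following Python sketch shows one way to do that. It assumes the file has already been parsed into nested dictionaries keyed by source network and then target network, and it only reads those two levels. The function name required_route_pairs is hypothetical.

```python
def required_route_pairs(route_info):
    """Return every (from_network, to_network) pair that needs a route.

    Each recorded source -> target entry also yields target -> source,
    so that the resulting set of routes is symmetrical.
    """
    pairs = set()
    for source, targets in route_info.items():
        for target in targets:
            pairs.add((source, target))
            pairs.add((target, source))  # return path
    return sorted(pairs)


# One entry from the example in this section, as a parser would return it.
route_info = {
    "MANAGEMENT-NET-RACK1": {
        "MANAGEMENT-NET-RACK2": {
            "default": False,
            "used_by": {"cinder-backup": {"rabbitmq": ["ardana-cp1-core-m1"]}},
        }
    }
}

for source, target in required_route_pairs(route_info):
    print(f"{source} -> {target}")
```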

8.4 server_info.yml #

This file provides details of how servers have been allocated by the Configuration Processor. This provides the easiest way to find where a specific physical server (identified by server-id) is being used.
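A lookup by server-id could be sketched in Python as follows. This assumes the file has already been parsed into a dictionary keyed by server ID, with net_data grouped by interface and then by network as in the example in this section. The function name describe_server is hypothetical, and the sample data is a shortened copy of that example.

```python
def describe_server(server_info, server_id):
    """Summarise one server: hostname, state, and address per network."""
    entry = server_info[server_id]
    addresses = {}
    # net_data may be absent for servers that are not allocated (assumption).
    for networks in (entry.get("net_data") or {}).values():
        for network, settings in networks.items():
            addresses[network] = settings.get("addr")
    return {
        "hostname": entry.get("hostname"),
        "state": entry.get("state"),
        "addresses": addresses,
    }


server_info = {
    "controller1": {
        "failure-zone": "AZ1",
        "hostname": "ardana-cp1-c1-m1-mgmt",
        "net_data": {
            "BOND0": {
                "EXTERNAL-API-NET": {"addr": "10.0.1.2", "tagged-vlan": True, "vlan-id": 101},
                "MANAGEMENT-NET": {"addr": "192.168.10.3", "tagged-vlan": False, "vlan-id": 100},
            }
        },
        "state": "allocated",
    }
}

summary = describe_server(server_info, "controller1")
print(summary)
```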

    SERVER-ID
      failure-zone: FAILURE ZONE THAT THE SERVER WAS ALLOCATED FROM
      hostname: HOSTNAME OF THE SERVER
      net_data: NETWORK CONFIGURATION
      state: "allocated" | "available"

Example:

    controller1:
      failure-zone: AZ1
      hostname: ardana-cp1-c1-m1-mgmt
      net_data:
        BOND0:
          EXTERNAL-API-NET:
            addr: 10.0.1.2
            tagged-vlan: true
            vlan-id: 101
          EXTERNAL-VM-NET:
            addr: null
            tagged-vlan: true
            vlan-id: 102
          GUEST-NET:
            addr: 10.1.1.2
            tagged-vlan: true
            vlan-id: 103
          MANAGEMENT-NET:
            addr: 192.168.10.3
            tagged-vlan: false
            vlan-id: 100
      state: allocated

8.5 service_info.yml #

This file provides details of how services are distributed across the cloud.
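To answer the question "which hosts run a given service component?", a sketch along the following lines may help. It assumes the file has been parsed into nested dictionaries following the control plane, service, service component, list of hosts layout described in this section. The function name hosts_running is hypothetical, and the sample data is a shortened copy of the example.

```python
def hosts_running(service_info, component):
    """Return {control_plane: [hosts]} for control planes running the component."""
    result = {}
    for control_plane, services in service_info.items():
        for components in services.values():
            if component in components:
                result[control_plane] = list(components[component])
    return result


service_info = {
    "control-plane-1": {
        "neutron": {
            "neutron-dhcp-agent": [
                "ardana-cp1-c1-m1-mgmt",
                "ardana-cp1-c1-m2-mgmt",
                "ardana-cp1-c1-m3-mgmt",
            ],
            "neutron-l3-agent": ["ardana-cp1-comp0001-mgmt"],
        }
    }
}

print(hosts_running(service_info, "neutron-l3-agent"))
```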

    CONTROL-PLANE
      SERVICE
        SERVICE COMPONENT
          LIST OF HOSTS

Example:

    control-plane-1:
      neutron:
        neutron-client:
        - ardana-cp1-c1-m1-mgmt
        - ardana-cp1-c1-m2-mgmt
        - ardana-cp1-c1-m3-mgmt
        neutron-dhcp-agent:
        - ardana-cp1-c1-m1-mgmt
        - ardana-cp1-c1-m2-mgmt
        - ardana-cp1-c1-m3-mgmt
        neutron-l3-agent:
        - ardana-cp1-comp0001-mgmt
        ...

8.6 control_plane_topology.yml #

This file provides details of the topology of the cloud from the perspective of each control plane:

    control_planes:
      CONTROL-PLANE-NAME
        load-balancers:
          LOAD-BALANCER-NAME:
            address: IP ADDRESS OF VIP
            cert-file: NAME OF CERT FILE
            external-name: NAME TO BE USED FOR ENDPOINTS
            network: NAME OF THE NETWORK THIS LB IS CONNECTED TO
            network_group: NAME OF THE NETWORK GROUP THIS LB IS CONNECTED TO
            provider: SERVICE COMPONENT PROVIDING THE LB
            roles: LIST OF ROLES OF THIS LB
            services:
              SERVICE-NAME:
                COMPONENT-NAME:
                  aliases:
                    ROLE: NAME IN /etc/hosts
                  host-tls: BOOLEAN, TRUE IF CONNECTION FROM LB USES TLS
                  hosts: LIST OF HOSTS FOR THIS SERVICE
                  port: PORT USED FOR THIS COMPONENT
                  vip-tls: BOOLEAN, TRUE IF THE VIP TERMINATES TLS
        clusters:
          CLUSTER-NAME
            failure-zones:
              FAILURE-ZONE-NAME:
                LIST OF HOSTS
            services:
              SERVICE NAME:
                components:
                  LIST OF SERVICE COMPONENTS
                regions:
                  LIST OF REGION NAMES
        resources:
          RESOURCE-NAME:
            AS FOR CLUSTERS ABOVE

Example:

    control_planes:
      control-plane-1:
        clusters:
          cluster1:
            failure_zones:
              AZ1:
              - ardana-cp1-c1-m1-mgmt
              AZ2:
              - ardana-cp1-c1-m2-mgmt
              AZ3:
              - ardana-cp1-c1-m3-mgmt
            services:
              barbican:
                components:
                - barbican-api
                - barbican-worker
                regions:
                - region1
              ...
        load-balancers:
          extlb:
            address: 10.0.1.5
            cert-file: my-public-entry-scale-kvm-cert
            external-name: ''
            network: EXTERNAL-API-NET
            network-group: EXTERNAL-API
            provider: ip-cluster
            roles:
            - public
            services:
              barbican:
                barbican-api:
                  aliases:
                    public: ardana-cp1-vip-public-KEYMGR-API-extapi
                  host-tls: true
                  hosts:
                  - ardana-cp1-c1-m1-mgmt
                  - ardana-cp1-c1-m2-mgmt
                  - ardana-cp1-c1-m3-mgmt
                  port: '9311'
                  vip-tls: true

8.7 network_topology.yml #

This file provides details of the topology of the cloud from the perspective of each network_group:

    network-groups:
      NETWORK-GROUP-NAME:
        NETWORK-NAME:
          control-planes:
            CONTROL-PLANE-NAME:
              clusters:
                CLUSTER-NAME:
                  servers:
                    ARDANA-SERVER-NAME: ip address
                  vips:
                    IP ADDRESS: load balancer name
              resources:
                RESOURCE-GROUP-NAME:
                  servers:
                    ARDANA-SERVER-NAME: ip address

Example:

    network_groups:
      EXTERNAL-API:
        EXTERNAL-API-NET:
          control_planes:
            control-plane-1:
              clusters:
                cluster1:
                  servers:
                    ardana-cp1-c1-m1: 10.0.1.2
                    ardana-cp1-c1-m2: 10.0.1.3
                    ardana-cp1-c1-m3: 10.0.1.4
                  vips:
                    10.0.1.5: extlb
      EXTERNAL-VM:
        EXTERNAL-VM-NET:
          control_planes:
            control-plane-1:
              clusters:
                cluster1:
                  servers:
                    ardana-cp1-c1-m1: null
                    ardana-cp1-c1-m2: null
                    ardana-cp1-c1-m3: null
              resources:
                compute:
                  servers:
                    ardana-cp1-comp0001: null

8.8 region_topology.yml #

This file provides details of the topology of the cloud from the perspective of each region. In SUSE OpenStack Cloud, multiple regions are not supported. Only Region0 is valid.

    regions:
      REGION-NAME:
        control-planes:
          CONTROL-PLANE-NAME:
            services:
              SERVICE-NAME:
                LIST OF SERVICE COMPONENTS

Example:

    regions:
      region0:
        control-planes:
          control-plane-1:
            services:
              barbican:
              - barbican-api
              - barbican-worker
              ceilometer:
              - ceilometer-common
              - ceilometer-agent-notification
              - ceilometer-polling
              cinder:
              - cinder-api
              - cinder-volume
              - cinder-scheduler
              - cinder-backup

8.9 service_topology.yml #

This file provides details of the topology of the cloud from the perspective of each service:

    services:
      SERVICE-NAME:
        components:
          COMPONENT-NAME:
            control-planes:
              CONTROL-PLANE-NAME:
                clusters:
                  CLUSTER-NAME:
                    LIST OF SERVERS
                resources:
                  RESOURCE-GROUP-NAME:
                    LIST OF SERVERS
                regions:
                  LIST OF REGIONS

8.10 private_data_metadata_ccp.yml #

This file provides details of the secrets that are generated by the configuration processor. The details include:

- The name of each secret
- Metadata about each secret. This is a list in which each element describes one component service that uses the secret:
  - The component service that uses the secret and, if applicable, the service that this component "consumes" when using the secret
  - The list of clusters on which the component service is deployed
  - The control plane (cp) on which the services are deployed
- A version number (the model version number)
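The metadata described above lends itself to simple queries, such as finding every secret used by a given component. The following Python sketch assumes the file has been parsed into a dictionary keyed by secret name, where each value holds a metadata list whose elements carry a component key, as in the example in this section. The function name secrets_for_component is hypothetical.

```python
def secrets_for_component(private_data, component):
    """Return the sorted names of secrets whose metadata lists the component."""
    names = []
    for secret, details in private_data.items():
        users = details.get("metadata", [])
        if any(user.get("component") == component for user in users):
            names.append(secret)
    return sorted(names)


# Shortened copy of the example in this section, as a parser would return it.
private_data = {
    "barbican_admin_password": {
        "metadata": [
            {"clusters": ["cluster1"], "component": "barbican-api", "cp": "ccp"},
        ],
        "version": "2.0",
    },
    "keystone_swift_password": {
        "metadata": [
            {"clusters": ["cluster1"], "component": "swift-proxy",
             "consumes": "keystone-api", "cp": "ccp"},
        ],
        "version": "2.0",
    },
}

print(secrets_for_component(private_data, "swift-proxy"))  # ['keystone_swift_password']
```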

    SECRET
      METADATA
        LIST OF METADATA
          CLUSTERS
            LIST OF CLUSTERS
          COMPONENT
          CONSUMES
          CONTROL-PLANE
      VERSION

For example:

    barbican_admin_password:
      metadata:
      - clusters:
        - cluster1
        component: barbican-api
        cp: ccp
      version: '2.0'
    keystone_swift_password:
      metadata:
      - clusters:
        - cluster1
        component: swift-proxy
        consumes: keystone-api
        cp: ccp
      version: '2.0'
    metadata_proxy_shared_secret:
      metadata:
      - clusters:
        - cluster1
        component: nova-metadata
        cp: ccp
      - clusters:
        - cluster1
        - compute
        component: neutron-metadata-agent
        cp: ccp
      version: '2.0'
    ...

8.11 password_change.yml #

This file provides details equivalent to those in private_data_metadata_ccp.yml for passwords that have been changed from their original values using the procedure outlined in the SUSE OpenStack Cloud documentation.

8.12 explain.txt #

This file provides details of the server allocation and network configuration decisions the configuration processor has made. The sequence of information recorded is:

Any service components that are automatically added

Allocation of servers to clusters and resource groups

Resolution of the network configuration for each server

Resolution of the network configuration of each load balancer
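Since explain.txt is plain text rather than YAML, automation has to rely on its layout. The following Python sketch groups lines under their top-level headings, assuming (from the sample in this section) that a top-level heading is a line immediately followed by a row of "=" characters. The function name split_sections is hypothetical, and the exact layout of the file may vary between releases.

```python
def split_sections(text):
    """Group the lines of explain.txt under their '='-underlined headings.

    Sub-headings underlined with '-' are kept as ordinary lines.
    """
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    sections = {}
    current = None
    for index, line in enumerate(lines):
        if set(line) == {"="}:
            continue  # underline row, handled by the look-ahead below
        following = lines[index + 1] if index + 1 < len(lines) else ""
        if following and set(following) == {"="}:
            current = line
            sections[current] = []
        elif current is not None:
            sections[current].append(line)
    return sections


SAMPLE = """\
Add required services to control plane control-plane-1
======================================================
control-plane-1: Added nova-metadata required by nova-api

Allocate Servers for control plane control-plane-1
==================================================
cluster: cluster1
-----------------
Persisted allocation for server 'controller1' (AZ1)
"""

sections = split_sections(SAMPLE)
for title, body in sections.items():
    print(title, len(body))
```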

Example:

    Add required services to control plane control-plane-1
    ======================================================
    control-plane-1: Added nova-metadata required by nova-api
    control-plane-1: Added swift-common required by swift-proxy
    control-plane-1: Added swift-rsync required by swift-account

    Allocate Servers for control plane control-plane-1
    ==================================================

    cluster: cluster1
    -----------------
    Persisted allocation for server 'controller1' (AZ1)
    Persisted allocation for server 'controller2' (AZ2)
    Searching for server with role ['CONTROLLER-ROLE'] in zones: set(['AZ3'])
    Allocated server 'controller3' (AZ3)

    resource: compute
    -----------------
    Persisted allocation for server 'compute1' (AZ1)
    Searching for server with role ['COMPUTE-ROLE'] in zones: set(['AZ1', 'AZ2', 'AZ3'])

    Resolve Networks for Servers
    ============================
    server: ardana-cp1-c1-m1
    ------------------------
    add EXTERNAL-API for component ip-cluster
    add MANAGEMENT for component ip-cluster
    add MANAGEMENT for lifecycle-manager (default)
    add MANAGEMENT for ntp-server (default)
    ...
    add MANAGEMENT for swift-rsync (default)
    add GUEST for tag neutron.networks.vxlan (neutron-openvswitch-agent)
    add EXTERNAL-VM for tag neutron.l3_agent.external_network_bridge (neutron-vpn-agent)
    Using persisted address 10.0.1.2 for server ardana-cp1-c1-m1 on network EXTERNAL-API-NET
    Using address 192.168.10.3 for server ardana-cp1-c1-m1 on network MANAGEMENT-NET
    Using persisted address 10.1.1.2 for server ardana-cp1-c1-m1 on network GUEST-NET
    ...

    Define load balancers
    =====================
    Load balancer: extlb
    --------------------
    Using persisted address 10.0.1.5 for vip extlb ardana-cp1-vip-extlb-extapi on network EXTERNAL-API-NET
    Add nova-api for roles ['public'] due to 'default'
    Add glance-api for roles ['public'] due to 'default'
    ...

    Map load balancers to providers
    ===============================
    Network EXTERNAL-API-NET
    ------------------------
    10.0.1.5: ip-cluster nova-api roles: ['public'] vip-port: 8774 host-port: 8774
    10.0.1.5: ip-cluster glance-api roles: ['public'] vip-port: 9292 host-port: 9292
    10.0.1.5: ip-cluster keystone-api roles: ['public'] vip-port: 5000 host-port: 5000
    10.0.1.5: ip-cluster swift-proxy roles: ['public'] vip-port: 8080 host-port: 8080
    10.0.1.5: ip-cluster monasca-api roles: ['public'] vip-port: 8070 host-port: 8070
    10.0.1.5: ip-cluster heat-api-cfn roles: ['public'] vip-port: 8000 host-port: 8000
    10.0.1.5: ip-cluster ops-console-web roles: ['public'] vip-port: 9095 host-port: 9095
    10.0.1.5: ip-cluster heat-api roles: ['public'] vip-port: 8004 host-port: 8004
    10.0.1.5: ip-cluster nova-novncproxy roles: ['public'] vip-port: 6080 host-port: 6080
    10.0.1.5: ip-cluster neutron-server roles: ['public'] vip-port: 9696 host-port: 9696
    10.0.1.5: ip-cluster heat-api-cloudwatch roles: ['public'] vip-port: 8003 host-port: 8003
    10.0.1.5: ip-cluster horizon roles: ['public'] vip-port: 443 host-port: 80
    10.0.1.5: ip-cluster cinder-api roles: ['public'] vip-port: 8776 host-port: 8776

8.13 CloudDiagram.txt #

This file provides a pictorial representation of the cloud. Although this file is still produced, it is superseded by the HTML output described in the following section.

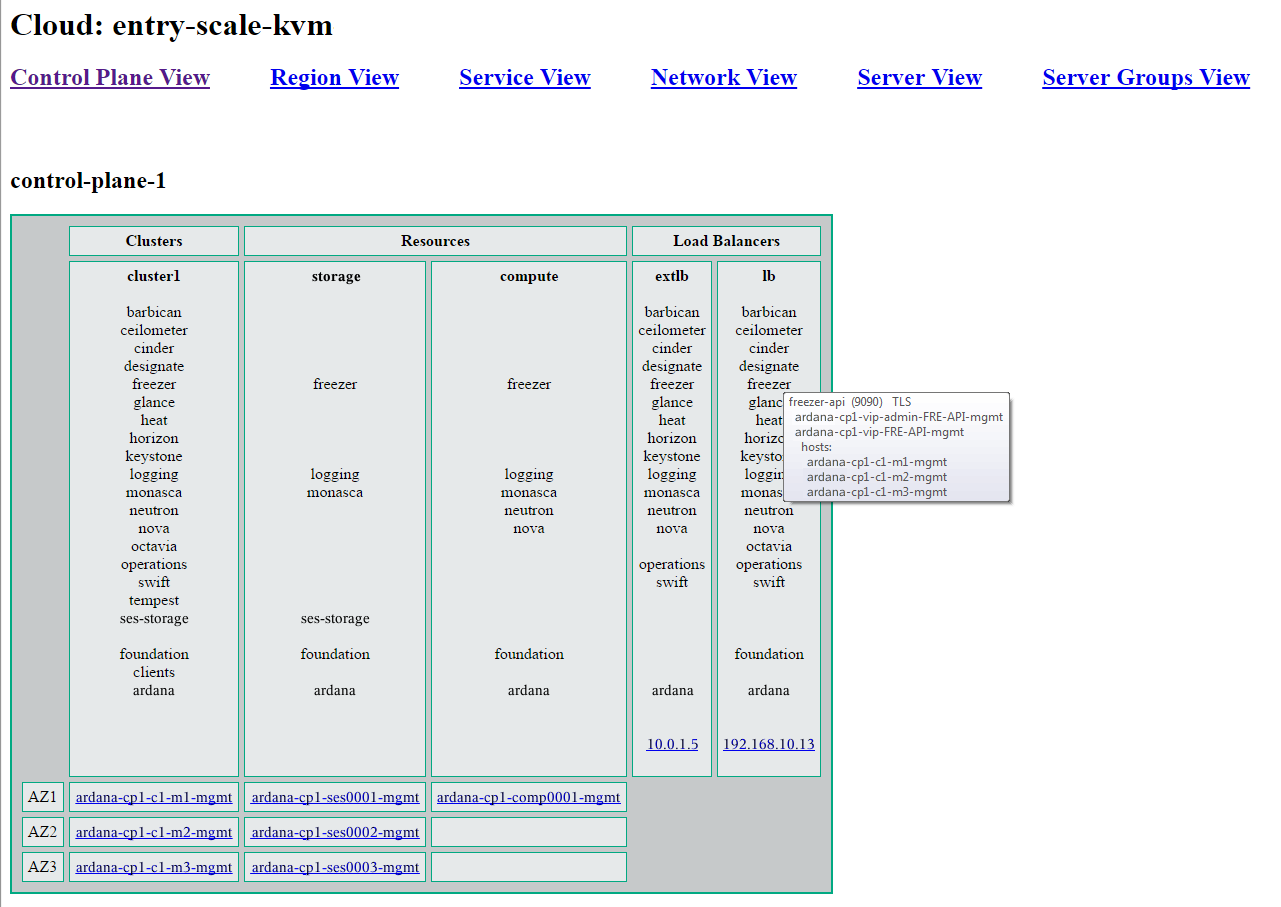

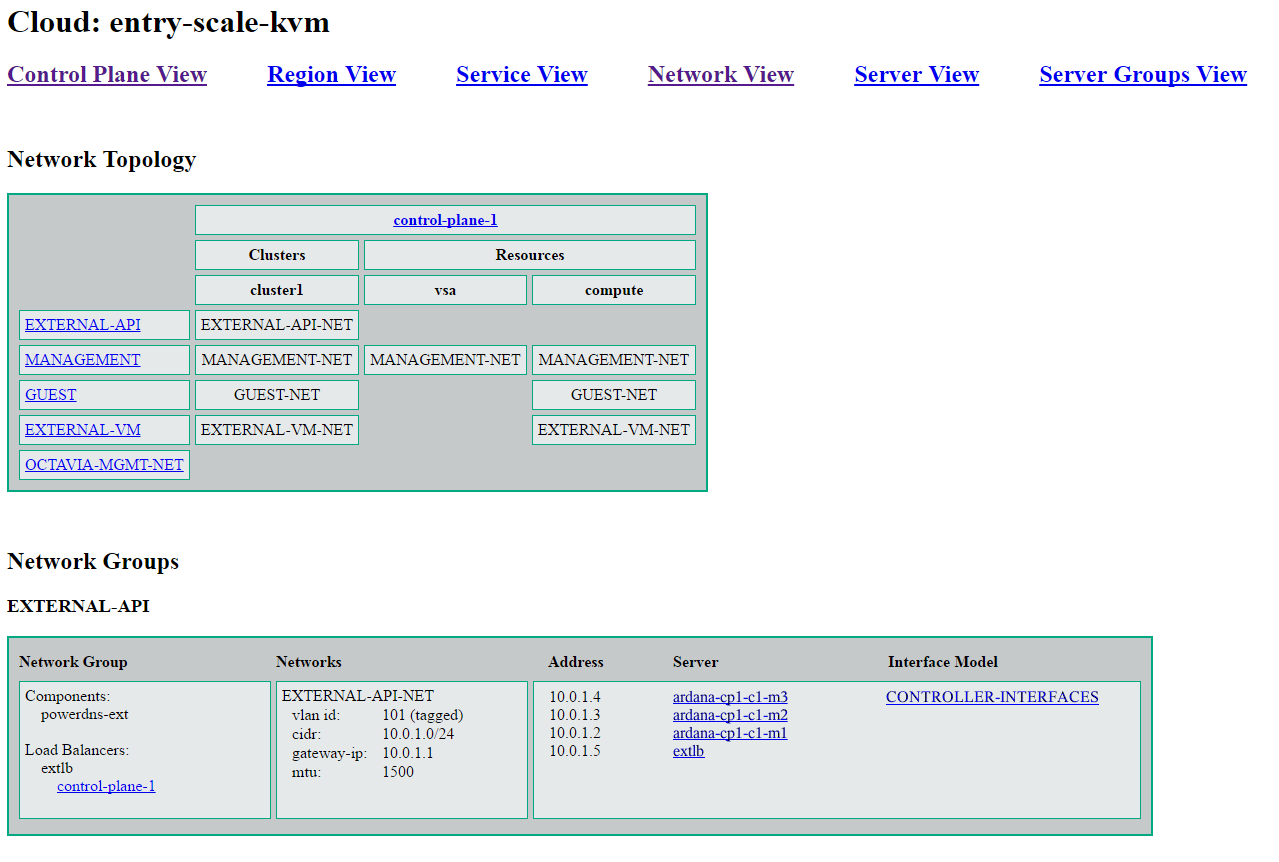

8.14 HTML Representation #

An HTML representation of the cloud can be found in ~/openstack/my_cloud/html after the first Configuration Processor run. This directory is also rebuilt each time the Configuration Processor is run. These files combine the data in the input model with allocation decisions made by the Configuration Processor to allow the configured cloud to be viewed from a number of different perspectives.

Most of the entries on the HTML pages provide either links to other parts of the HTML output or additional details via hover text.