29 Installing Baremetal (Ironic)

Bare Metal as a Service is enabled in this release for deployment of nova instances on bare metal nodes using flat networking.

29.1 Installation for SUSE OpenStack Cloud Entry-scale Cloud with Ironic Flat Network

This section describes the installation steps required for the SUSE OpenStack Cloud Entry-scale Cloud with an ironic flat network.

29.1.1 Configure Your Environment

Prior to deploying an operational environment with ironic, operators need to be aware of the nature of TLS certificate authentication. Because pre-built deployment agent ramdisk images are supplied, these images will only authenticate known third-party TLS Certificate Authorities in the interest of end-to-end security. As such, deployments that use self-signed certificates or private certificate authorities will be unable to leverage ironic without modifying the supplied ramdisk images.

Set up your configuration files, as follows:

See the sample sets of configuration files in the ~/openstack/examples/ directory. Each set will have an accompanying README.md file that explains the contents of each of the configuration files.

Copy the example configuration files into the required setup directory and edit them to contain the details of your environment:

cp -r ~/openstack/examples/entry-scale-ironic-flat-network/* \
  ~/openstack/my_cloud/definition/

(Optional) You can use the ardanaencrypt.py script to encrypt your IPMI passwords. This script uses OpenSSL.

Change to the Ansible directory:

ardana > cd ~/openstack/ardana/ansible

Put the encryption key into the following environment variable:

export ARDANA_USER_PASSWORD_ENCRYPT_KEY=<encryption key>

Run the Python script below and follow the instructions. Enter a password that you want to encrypt.

ardana > ./ardanaencrypt.py

Take the generated string and place it in the ilo-password field in your ~/openstack/my_cloud/definition/data/servers.yml file, remembering to enclose it in quotes.

Repeat the above for each server.

Note: Before you run any playbooks, remember that you need to export the encryption key in the following environment variable:

export ARDANA_USER_PASSWORD_ENCRYPT_KEY=<encryption key>
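If you drive the playbooks from your own wrapper scripts, a pre-flight check can catch a missing key before any play starts. The following is a minimal Python sketch under that assumption; require_encrypt_key is a hypothetical helper, not part of SUSE OpenStack Cloud, and it only checks that the variable is non-empty, not that the key is correct.

```python
import os

# Variable name taken from the note above.
ENCRYPT_KEY_VAR = "ARDANA_USER_PASSWORD_ENCRYPT_KEY"


def require_encrypt_key(environ=None):
    """Return the exported encryption key, or raise RuntimeError if it is unset or empty.

    Hypothetical helper: pass a dict for testing, or omit it to use os.environ.
    """
    env = os.environ if environ is None else environ
    key = env.get(ENCRYPT_KEY_VAR, "")
    if not key:
        raise RuntimeError(
            f"{ENCRYPT_KEY_VAR} is not set; export it before running any playbooks"
        )
    return key
```

Call require_encrypt_key() at the top of your wrapper and let the exception stop the run.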

Commit your configuration to the local git repo (Chapter 22, Using Git for Configuration Management), as follows:

ardana > cd ~/openstack/ardana/ansible
ardana > git add -A
ardana > git commit -m "My config or other commit message"

Important: This step needs to be repeated any time you make changes to your configuration files before you move on to the following steps. See Chapter 22, Using Git for Configuration Management for more information.

29.1.2 Provisioning Your Baremetal Nodes

To provision the baremetal nodes in your cloud deployment, you can either use the automated operating system installation process provided by SUSE OpenStack Cloud or use the third-party installation tooling of your choice. Both methods are outlined below.

29.1.2.1 Using Third Party Baremetal Installers

If you do not want to use the automated operating system installation tooling included with SUSE OpenStack Cloud, the installation tooling of your choice must meet the following requirements:

The operating system must be installed via the SLES ISO provided on the SUSE Customer Center.

Each node must have SSH keys in place that allow the user on the Cloud Lifecycle Manager node who will be doing the deployment to SSH to each node without a password.

Passwordless sudo needs to be enabled for the user.

There should be an LVM logical volume as /root on each node.

If the LVM volume group name for the volume group holding the root LVM logical volume is ardana-vg, then it will align with the disk input models in the examples.

Ensure that openssh-server, python, python-apt, and rsync are installed.

If you choose this method for installing your baremetal hardware, skip forward to Section 29.1.3, Running the Configuration Processor.

29.1.2.2 Using the Automated Operating System Installation Provided by SUSE OpenStack Cloud

If you would like to use the automated operating system installation tools provided by SUSE OpenStack Cloud, complete the steps below.

29.1.2.2.1 Deploying Cobbler

This phase of the install process takes the baremetal information that was provided in servers.yml, installs the Cobbler provisioning tool, and loads this information into Cobbler. This sets each node to netboot-enabled: true in Cobbler. Each node will be automatically marked as netboot-enabled: false when it completes its operating system install successfully. Even if the node tries to PXE boot subsequently, Cobbler will not serve it. This is deliberate so that you cannot reimage a live node by accident.

The cobbler-deploy.yml playbook prompts for a password. This is the password that will be encrypted and stored in Cobbler; it is associated with the user running the command on the Cloud Lifecycle Manager, and you will use it to log in to the nodes via their consoles after install. The username is the same as the user set up in the initial dialogue when installing the Cloud Lifecycle Manager from the ISO, and is the same user that is running the cobbler-deploy play.

When imaging servers with your own tooling, it is still necessary to have ILO/IPMI settings for all nodes. Even if you are not using Cobbler, the username and password fields in servers.yml need to be filled in with dummy settings. For example, add the following to servers.yml:

ilo-user: manual
ilo-password: deployment
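If you generate servers.yml with your own tooling, the placeholder credentials can be filled in programmatically. This Python sketch assumes the file has already been parsed into a list of server dictionaries (for example, the entries of its servers list loaded with a YAML library, not shown); fill_dummy_ilo_credentials is a hypothetical helper that only adds missing or empty fields and never overwrites real credentials.

```python
# Placeholder values from the example above. They only satisfy the input model
# and are never used to reach a real BMC when you image nodes yourself.
DUMMY_CREDENTIALS = {"ilo-user": "manual", "ilo-password": "deployment"}


def fill_dummy_ilo_credentials(servers):
    """Return a copy of servers with empty or missing iLO fields filled in.

    servers is a list of dicts, one per server entry in servers.yml.
    Existing non-empty values are never overwritten.
    """
    filled = []
    for server in servers:
        entry = dict(server)  # copy so the caller's data is left untouched
        for field, placeholder in DUMMY_CREDENTIALS.items():
            if not entry.get(field):
                entry[field] = placeholder
        filled.append(entry)
    return filled
```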

Run the following playbook, which confirms that there is IPMI connectivity for each of your nodes so that they are accessible to be re-imaged in a later step:

ardana > cd ~/openstack/ardana/ansible
ardana > ansible-playbook -i hosts/localhost bm-power-status.yml

Run the following playbook to deploy Cobbler:

ardana > cd ~/openstack/ardana/ansible
ardana > ansible-playbook -i hosts/localhost cobbler-deploy.yml

29.1.2.2.2 Imaging the Nodes

This phase of the install process goes through a number of distinct steps:

Powers down the nodes to be installed

Sets the nodes' hardware boot order so that the first option is a network boot.

Powers on the nodes. (The nodes will then boot from the network and be installed using the infrastructure set up in the previous phase.)

Waits for the nodes to power themselves down (this indicates a successful install). This can take some time.

Sets the boot order to hard disk and powers on the nodes.

Waits for the nodes to be reachable by SSH and verifies that they have the expected signature.

Deploying nodes has been automated in the Cloud Lifecycle Manager and requires the following:

All of your nodes using SLES must already be installed, either manually or via Cobbler.

Your input model should be configured for your SLES nodes.

You should have run the configuration processor and the ready-deployment.yml playbook.

Execute the following steps to re-image one or more nodes after you have run the ready-deployment.yml playbook.

Run the following playbook, specifying your SLES nodes using the nodelist. This playbook will reconfigure Cobbler for the nodes listed.

ardana > cd ~/openstack/ardana/ansible
ardana > ansible-playbook prepare-sles-grub2.yml -e \
  nodelist=node1[,node2,node3]

Re-image the node(s) with the following command:

ardana > cd ~/openstack/ardana/ansible
ardana > ansible-playbook -i hosts/localhost bm-reimage.yml \
  -e nodelist=node1[,node2,node3]

If a nodelist is not specified, then the set of nodes in Cobbler with netboot-enabled: True is selected. The playbook pauses at the start to give you a chance to review the set of nodes that it is targeting and to confirm that it is correct.

You can use the command below to list all of your nodes with the netboot-enabled: True flag set:

sudo cobbler system find --netboot-enabled=1
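The two commands can be combined to build the nodelist value from the netboot-enabled systems, so that you can review the exact list before re-imaging. This Python sketch assumes that cobbler system find prints one system name per line and that those names are the same server IDs that nodelist expects; verify both on your Cloud Lifecycle Manager. The helper names are hypothetical.

```python
import subprocess


def netboot_enabled_systems(find_output):
    """Parse `cobbler system find` output, assumed to be one system name per line."""
    return [line.strip() for line in find_output.splitlines() if line.strip()]


def nodelist_extra_var(nodes):
    """Build the `nodelist=node1,node2,...` value passed with -e to bm-reimage.yml."""
    if not nodes:
        raise ValueError("refusing to build an empty nodelist")
    return "nodelist=" + ",".join(nodes)


def current_nodelist():
    """Query Cobbler (needs sudo on the Cloud Lifecycle Manager) and build the nodelist."""
    out = subprocess.run(
        ["sudo", "cobbler", "system", "find", "--netboot-enabled=1"],
        check=True, capture_output=True, text=True,
    ).stdout
    return nodelist_extra_var(netboot_enabled_systems(out))
```

Refusing an empty list is a deliberate choice: a nodelist with no names is unlikely to be what you intended.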

29.1.3 Running the Configuration Processor

Once you have your configuration files set up, you need to run the configuration processor to complete your configuration.

When you run the configuration processor, you will be prompted for two passwords. Enter the first password to make the configuration processor encrypt its sensitive data, which consists of the random inter-service passwords that it generates and the Ansible group_vars and host_vars that it produces for subsequent deploy runs. You will need this password for subsequent Ansible deploy and configuration processor runs. If you wish to change an encryption password that you have already used when running the configuration processor, enter the new password at the second prompt; otherwise, just press Enter to bypass this.

Run the configuration processor with this command:

ardana > cd ~/openstack/ardana/ansible
ardana > ansible-playbook -i hosts/localhost config-processor-run.yml

For automated installation (for example CI), you can specify the required passwords on the Ansible command line. For example, the command below will disable encryption by the configuration processor:

ardana > ansible-playbook -i hosts/localhost config-processor-run.yml \
  -e encrypt="" -e rekey=""

If you receive an error during this step, there is probably an issue with one or more of your configuration files. Verify that all information in each of your configuration files is correct for your environment. Then commit those changes to Git using the instructions in the previous section before re-running the configuration processor.

For any troubleshooting information regarding these steps, see Section 36.2, “Issues while Updating Configuration Files”.

29.1.4 Deploying the Cloud

Use the playbook below to create a deployment directory:

cd ~/openstack/ardana/ansible
ansible-playbook -i hosts/localhost ready-deployment.yml

[OPTIONAL] Run the wipe_disks.yml playbook to ensure all of your non-OS partitions on your nodes are completely wiped before continuing with the installation. The wipe_disks.yml playbook is only meant to be run on systems immediately after running bm-reimage.yml. If used for any other case, it may not wipe all of the expected partitions. If you are using fresh machines, this step may not be necessary.

ardana > cd ~/scratch/ansible/next/ardana/ansible
ardana > ansible-playbook -i hosts/verb_hosts wipe_disks.yml

If you have used an encryption password when running the configuration processor, use the command below and enter the encryption password when prompted:

ardana > ansible-playbook -i hosts/verb_hosts wipe_disks.yml --ask-vault-pass

Run the site.yml playbook below:

ardana > cd ~/scratch/ansible/next/ardana/ansible
ardana > ansible-playbook -i hosts/verb_hosts site.yml

If you have used an encryption password when running the configuration processor, use the command below and enter the encryption password when prompted:

ardana > ansible-playbook -i hosts/verb_hosts site.yml --ask-vault-pass

Note: The step above runs osconfig to configure the cloud and ardana-deploy to deploy the cloud. Therefore, this step may run for a while, perhaps 45 minutes or more, depending on the number of nodes in your environment.

Verify that the network is working correctly. Ping each IP in the /etc/hosts file from one of the controller nodes.
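The /etc/hosts check can be scripted. This minimal Python sketch collects the unique addresses from /etc/hosts and reports the ones that do not answer a single ping. It assumes it runs on a controller node with Linux iputils ping installed, and it skips loopback entries because they say nothing about the cloud network. The function names are hypothetical.

```python
import ipaddress
import subprocess


def hosts_ips(hosts_text):
    """Return unique, non-loopback IP addresses from /etc/hosts-style text, in order."""
    ips = []
    for line in hosts_text.splitlines():
        fields = line.split("#", 1)[0].split()  # drop comments, then split columns
        if not fields:
            continue
        try:
            addr = ipaddress.ip_address(fields[0])
        except ValueError:
            continue  # not an address; skip malformed lines
        if addr.is_loopback:
            continue
        if str(addr) not in ips:
            ips.append(str(addr))
    return ips


def unreachable(ips, timeout=2):
    """Ping each address once (iputils options) and return the ones that fail."""
    failed = []
    for ip in ips:
        result = subprocess.run(
            ["ping", "-c", "1", "-W", str(timeout), ip],
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
        )
        if result.returncode != 0:
            failed.append(ip)
    return failed
```

For example, read the file with open("/etc/hosts") and print unreachable(hosts_ips(text)); an empty list means every listed address answered.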

For any troubleshooting information regarding these steps, see Section 36.3, “Issues while Deploying the Cloud”.

29.1.5 Ironic configuration

Run the ironic-cloud-configure.yml playbook below:

cd ~/scratch/ansible/next/ardana/ansible
ansible-playbook -i hosts/verb_hosts ironic-cloud-configure.yml

This step configures the ironic flat network, uploads the glance images, and sets the ironic configuration.

To see the images uploaded to glance, run:

$ source ~/service.osrc
$ openstack image list

This will produce output like the following example, showing three images that have been added by ironic:

+--------------------------------------+--------------------------+
| ID                                   | Name                     |
+--------------------------------------+--------------------------+
| d4e2a0ff-9575-4bed-ac5e-5130a1553d93 | ir-deploy-iso-HOS3.0     |
| b759a1f0-3b33-4173-a6cb-be5706032124 | ir-deploy-kernel-HOS3.0  |
| ce5f4037-e368-46f2-941f-c01e9072676c | ir-deploy-ramdisk-HOS3.0 |
+--------------------------------------+--------------------------+
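To look up the deploy image IDs in a script (for example, to use them later in a node's driver_info), machine-readable output is easier to parse than the table above. This Python sketch assumes that openstack image list -f json returns a list of objects with ID and Name keys and that ~/service.osrc has already been sourced; deploy_images and fetch_deploy_images are hypothetical helpers.

```python
import json
import subprocess


def deploy_images(image_list_json):
    """Map image name to ID for the ironic deploy images (names starting with ir-deploy-)."""
    images = json.loads(image_list_json)
    return {img["Name"]: img["ID"] for img in images if img["Name"].startswith("ir-deploy-")}


def fetch_deploy_images():
    """Run the OpenStack client and return the deploy images; raises on CLI failure."""
    out = subprocess.run(
        ["openstack", "image", "list", "-f", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return deploy_images(out)
```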

To see the network created by ironic, run:

$ openstack network list

This returns details of the "flat-net" generated by the ironic install:

+---------------+----------+-------------------------------------------------------+
| id            | name     | subnets                                               |
+---------------+----------+-------------------------------------------------------+
| f9474...11010 | flat-net | ca8f8df8-12c8-4e58-b1eb-76844c4de7e8 192.168.245.0/24 |
+---------------+----------+-------------------------------------------------------+

29.1.6 Node Configuration

29.1.6.1 DHCP

Once booted, nodes obtain network configuration via DHCP. If multiple interfaces are to be used, you may want to pre-build images with settings to execute DHCP on all interfaces. An easy way to build custom images is with KIWI, the command line utility to build Linux system appliances.

For information about building custom KIWI images, see Section 29.3.13, “Building glance Images Using KIWI”. For more information, see the KIWI documentation at https://osinside.github.io/kiwi/.

29.1.6.2 Configuration Drives

Configuration Drives are stored unencrypted and should not include any sensitive data.

You can use Configuration Drives to store metadata for initial boot setting customization. Configuration Drives are extremely useful for initial machine configuration. However, as a general security practice, they should not include any sensitive data. Configuration Drives should only be trusted upon the initial boot of an instance. cloud-init utilizes a lock file for this purpose. Custom instance images should not rely upon the integrity of a Configuration Drive beyond the initial boot of a host, as an administrative user within a deployed instance can potentially modify a configuration drive once written to disk and released for use.

For more information about Configuration Drives, see http://docs.openstack.org/user-guide/cli_config_drive.html.

29.1.7 TLS Certificates with Ironic Python Agent (IPA) Images

As part of SUSE OpenStack Cloud 9, ironic Python Agent (better known as IPA in the OpenStack community) images are supplied and loaded into glance. Two types of image exist. One is a traditional boot ramdisk, which is used by the agent_ipmitool, pxe_ipmitool, and pxe_ilo drivers. The other is an ISO image that is supplied as virtual media to the host when using the agent_ilo driver.

As these images are built in advance, they are unaware of any private certificate authorities. Users attempting to use self-signed certificates or a private certificate authority will need to inject their signing certificate(s) into the image in order for IPA to be able to boot on a remote node and to trust the TLS endpoints it connects to in SUSE OpenStack Cloud. This is not an issue with publicly signed certificates.
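The trust decision can be illustrated from any host with Python: a client that only knows the default public CAs rejects an endpoint signed by a private CA until that CA certificate is added to its trust store, which is what injecting the certificate into the IPA image achieves. This is a minimal sketch for checking an endpoint, not a way to modify the IPA image; make_context and endpoint_trusted are hypothetical names, and the host and CA file you pass in are your own.

```python
import socket
import ssl


def make_context(ca_bundle=None):
    """Create a verifying client TLS context.

    With ca_bundle=None only the system's default CAs are trusted. Passing the
    path of your private CA certificate adds it to the trust store.
    """
    ctx = ssl.create_default_context()  # CERT_REQUIRED and hostname checking
    if ca_bundle is not None:
        ctx.load_verify_locations(cafile=ca_bundle)
    return ctx


def endpoint_trusted(host, port, ctx):
    """Return True if a TLS handshake with host:port verifies against ctx."""
    try:
        with socket.create_connection((host, port), timeout=5) as sock:
            with ctx.wrap_socket(sock, server_hostname=host):
                return True
    except ssl.SSLCertVerificationError:
        return False
```

If endpoint_trusted returns False with make_context() but True with make_context("your-ca.pem"), the endpoint relies on a private CA and the deploy images need that certificate.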

As two different types of images exist, below are instructions for disassembling the image ramdisk file or the ISO image. Once this has been done, you will need to re-upload the files to glance and update any impacted node's driver_info, for example, the deploy_ramdisk and ilo_deploy_iso settings that were set when the node was first defined. Respectively, this can be done with:

ironic node-update <node> replace driver_info/deploy_ramdisk=<glance_id>

or

ironic node-update <node> replace driver_info/ilo_deploy_iso=<glance_id>

29.1.7.1 Add New Trusted CA Certificate Into Deploy Images

Perform the following steps.

To upload your trusted CA certificate to the Cloud Lifecycle Manager, follow the directions in Section 41.7, “Upload to the Cloud Lifecycle Manager”.

Delete the deploy images.

ardana > openstack image delete ir-deploy-iso-ARDANA5.0
ardana > openstack image delete ir-deploy-ramdisk-ARDANA5.0

On the deployer, run the ironic-reconfigure.yml playbook to re-upload the images that include the new trusted CA bundle.

ardana > cd /var/lib/ardana/scratch/ansible/next/ardana/ansible
ardana > ansible-playbook -i hosts/verb_hosts ironic-reconfigure.yml

Update the existing ironic nodes with the new image IDs accordingly. For example:

ardana > openstack baremetal node set --driver-info \
  deploy_ramdisk=NEW_RAMDISK_ID NODE_ID
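When many nodes are affected, the update commands can be generated from each node's driver. This Python sketch follows the split described in this section (the ISO image for agent_ilo, the ramdisk for the other drivers); the node-to-driver mapping and the helper names are hypothetical, and you would still review and run the generated commands yourself.

```python
# Drivers that boot the deploy ISO as virtual media; all others use the ramdisk.
ISO_DRIVERS = {"agent_ilo"}


def deploy_image_field(driver):
    """Return the driver_info key that holds the deploy image for this driver."""
    return "ilo_deploy_iso" if driver in ISO_DRIVERS else "deploy_ramdisk"


def driver_info_update_cmds(nodes, new_ramdisk_id, new_iso_id):
    """Build one `openstack baremetal node set` command per node.

    nodes maps a node UUID or name to its driver name.
    """
    cmds = []
    for node, driver in sorted(nodes.items()):
        field = deploy_image_field(driver)
        image_id = new_iso_id if field == "ilo_deploy_iso" else new_ramdisk_id
        cmds.append(["openstack", "baremetal", "node", "set", node,
                     "--driver-info", f"{field}={image_id}"])
    return cmds
```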

29.2 ironic in Multiple Control Plane

SUSE OpenStack Cloud 9 introduces the concept of multiple control planes; see Section 5.2.2.1, “Control Planes and Regions” and Section 6.2.3, “Multiple Control Planes” in the Input Model documentation. This section covers the use of an ironic region in a multiple control plane cloud model in SUSE OpenStack Cloud.

29.2.1 Networking for Baremetal in Multiple Control Plane

IRONIC-FLAT-NET is the network configuration for the baremetal control plane.

You need to set the environment variable OS_REGION_NAME to the ironic region in the baremetal control plane. This will set up the ironic flat networking in neutron.

export OS_REGION_NAME=<ironic_region>

To see details of the IRONIC-FLAT-NETWORK created during configuration, use the following command:

openstack network list
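When scripting against the baremetal control plane, the region can be set per command instead of exporting it in your shell. This minimal Python sketch assumes that openstack network list -f json returns objects with a Name key; region_env and network_names are hypothetical helpers, and the region name is whatever your ironic region is called.

```python
import json
import os
import subprocess


def region_env(region, base=None):
    """Return a copy of the environment with OS_REGION_NAME set to the given region."""
    env = dict(os.environ if base is None else base)
    env["OS_REGION_NAME"] = region
    return env


def network_names(region):
    """Equivalent to exporting OS_REGION_NAME and running `openstack network list`."""
    out = subprocess.run(
        ["openstack", "network", "list", "-f", "json"],
        env=region_env(region), check=True, capture_output=True, text=True,
    ).stdout
    return [net["Name"] for net in json.loads(out)]
```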

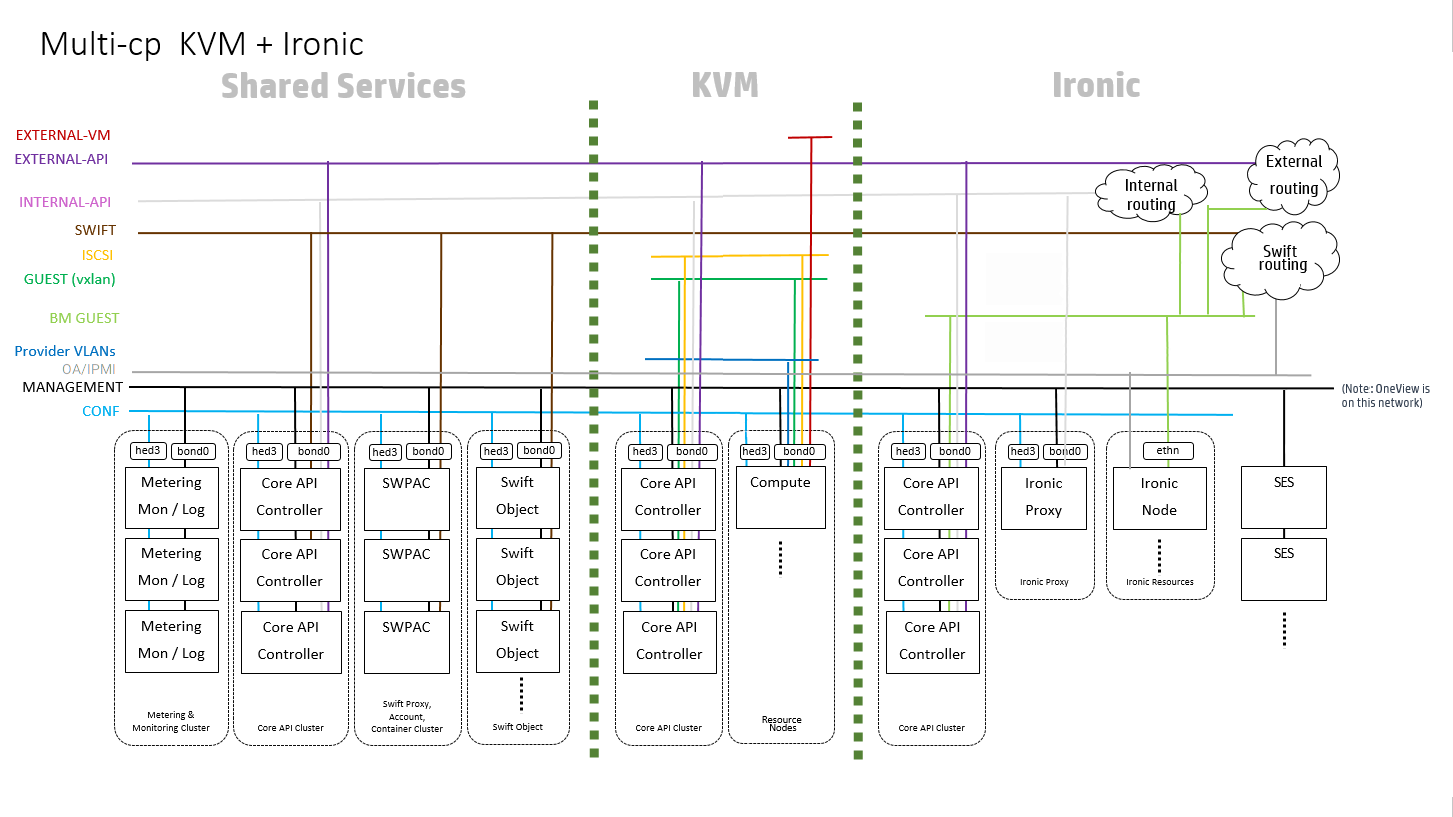

Referring to the diagram above, the Cloud Lifecycle Manager is a shared service that runs in a Core API Controller in a Core API Cluster. The ironic Python Agent (IPA) must be able to make REST API calls to the ironic API (the connection is represented by the green line to Internal routing). IPA connects to swift to get user images (the gray line connecting to swift routing).

29.2.2 Handling Optional swift Service

swift is resource-intensive and as a result, it is now optional in the SUSE OpenStack Cloud control plane. A number of services depend on swift, and if it is not present, they must provide a fallback strategy. For example, glance can use the filesystem in place of swift for its backend store.

In ironic, agent-based drivers require swift. If it is not present, it is necessary to disable access to this ironic feature in the control plane. The enable_agent_driver flag has been added to the ironic configuration data and can have values of true or false. Setting this flag to false will disable swift configurations and the agent-based drivers in the ironic control plane.

29.2.3 Instance Provisioning

In a multiple control plane cloud setup, the glance container name in the swift namespace of ironic-conductor.conf can conflict with the one in glance-api.conf. Provisioning with agent-based drivers requires the container name to be the same in ironic and glance. Hence, when provisioning instances with agent-based (swift-enabled) drivers, the agent is not able to fetch the images from the glance store and fails at that point.

You can resolve this issue using the following steps:

Copy the value of swift_store_container from the file /opt/stack/service/glance-api/etc/glance-api.conf.

Log in to the Cloud Lifecycle Manager and use the value for swift_container in the glance namespace of ~/scratch/ansible/next/ardana/ansible/roles/ironic-common/templates/ironic-conductor.conf.j2.

Run the following playbook:

cd ~/scratch/ansible/next/ardana/ansible
ansible-playbook -i hosts/verb_hosts ironic-reconfigure.yml
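Before and after running ironic-reconfigure.yml, you may want to confirm whether the two values actually differ. This Python sketch compares them with configparser. It assumes the glance value lives in the [glance_store] section of glance-api.conf and the ironic value in the [glance] section of a rendered ironic-conductor.conf (the upstream option locations; the steps above only say "glance namespace"), so check the section names against your files. The function names are hypothetical.

```python
import configparser


def _parse(text):
    # strict=False tolerates duplicate keys; interpolation=None keeps "%" literal.
    cfg = configparser.ConfigParser(interpolation=None, strict=False)
    cfg.read_string(text)
    return cfg


def container_names(glance_api_conf, ironic_conductor_conf):
    """Return (glance container, ironic container) read from the two config texts."""
    glance = _parse(glance_api_conf)
    ironic = _parse(ironic_conductor_conf)
    return (
        glance.get("glance_store", "swift_store_container", fallback=None),
        ironic.get("glance", "swift_container", fallback=None),
    )


def containers_match(glance_api_conf, ironic_conductor_conf):
    """True only if both values are present and identical."""
    glance_c, ironic_c = container_names(glance_api_conf, ironic_conductor_conf)
    return glance_c is not None and glance_c == ironic_c
```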

29.3 Provisioning Bare-Metal Nodes with Flat Network Model

Providing bare-metal resources to an untrusted third party is not advised as a malicious user can potentially modify hardware firmware.

The steps outlined in Section 29.1.7, “TLS Certificates with Ironic Python Agent (IPA) Images” must be performed.

A number of drivers are available to provision and manage bare-metal machines. The drivers are named based on the deployment mode and the power management interface. SUSE OpenStack Cloud has been tested with the following drivers:

agent_ilo

agent_ipmi

pxe_ilo

pxe_ipmi

Redfish

Before you start, you should be aware that:

Node Cleaning is enabled for all the drivers in SUSE OpenStack Cloud 9.

Node parameter settings must have matching flavors in terms of cpus, local_gb, memory_mb, boot_mode, and cpu_arch.

It is advisable that nodes enrolled for ipmitool drivers have their BIOS boot mode settings pre-validated prior to setting capabilities.

Network cabling and interface layout should also be pre-validated for each boot mode or configuration that is registered.

The use of agent_ drivers is predicated upon glance images being backed by a swift image store, specifically the need for the temporary file access features. Using the file system as a glance back-end image store means that the agent_ drivers cannot be used.

Manual Cleaning (RAID) and Node inspection are supported by the ilo drivers (agent_ilo and pxe_ilo).
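The flavor-matching requirement above can be checked before enrolling many nodes. This Python sketch compares a node's properties with a flavor's RAM, disk, vCPUs, and extra specs. It uses exact equality because the text asks for matching values; the dictionary layouts (including the extra-spec keys cpu_arch, capabilities:boot_mode, and capabilities:boot_option, which follow the flavor properties used in this chapter) are assumptions, and flavor_mismatches is a hypothetical helper.

```python
def parse_caps(caps):
    """Split a capabilities string such as "boot_mode:bios,boot_option:local" into a dict."""
    return dict(item.split(":", 1) for item in caps.split(",") if item)


def flavor_mismatches(node_props, flavor):
    """Return human-readable differences between a node and a flavor (empty list = match).

    node_props: cpus, memory_mb (MB), local_gb (GB), cpu_arch, capabilities.
    flavor: vcpus, ram (MB), disk (GB), extra_specs.
    """
    problems = []
    pairs = (("cpus", "vcpus"), ("memory_mb", "ram"), ("local_gb", "disk"))
    for node_key, flavor_key in pairs:
        node_val, flavor_val = node_props.get(node_key), flavor.get(flavor_key)
        if node_val is None or flavor_val is None or int(node_val) != int(flavor_val):
            problems.append(f"{node_key}={node_val} vs {flavor_key}={flavor_val}")
    specs = flavor.get("extra_specs", {})
    if "cpu_arch" in specs and specs["cpu_arch"] != node_props.get("cpu_arch"):
        problems.append(f"cpu_arch={node_props.get('cpu_arch')} vs {specs['cpu_arch']}")
    caps = parse_caps(node_props.get("capabilities", ""))
    for key in ("boot_mode", "boot_option"):
        wanted = specs.get(f"capabilities:{key}")
        if wanted is not None and caps.get(key) != wanted:
            problems.append(f"{key}={caps.get(key)} vs capabilities:{key}={wanted}")
    return problems
```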

29.3.1 Redfish Protocol Support

Redfish is a successor to the Intelligent Platform Management Interface (IPMI) with the ability to scale to larger and more diverse cloud deployments. It has an API that allows users to collect performance data from heterogeneous server installations and more data sources than could be handled previously. It is based on an industry standard protocol with a RESTful interface for managing cloud assets that are compliant with the Redfish protocol.

There are two known limitations to using Redfish.

RAID configuration does not work due to missing HPE Smart Storage Administrator CLI (HPE SSACLI) in the default deploy RAM disk. This is a licensing issue.

The ironic inspector inspect interface is not supported.

Enable the Redfish driver with the following steps:

Install the Sushy library on the ironic-conductor nodes. Sushy is a Python library for communicating with Redfish-based systems with ironic. More information is available at https://opendev.org/openstack/sushy/.

sudo pip install sushy

Add redfish to the list of enabled_hardware_types, enabled_power_interfaces and enabled_management_interfaces in /etc/ironic/ironic.conf as shown below:

[DEFAULT]
...
enabled_hardware_types = ipmi,redfish
enabled_power_interfaces = ipmitool,redfish
enabled_management_interfaces = ipmitool,redfish

Restart the ironic-conductor service:

sudo systemctl restart openstack-ironic-conductor
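If you manage ironic.conf with scripts, the edit can be made idempotent. This Python sketch computes the new values of the three options with redfish appended only when it is missing. It does not write the file, because configparser would discard the comments in ironic.conf. If an option is absent, the sketch does not know ironic's built-in default, so review the result before applying it. add_redfish is a hypothetical helper.

```python
import configparser

# Options from the step above that must list redfish.
OPTIONS = ("enabled_hardware_types", "enabled_power_interfaces", "enabled_management_interfaces")


def add_redfish(ironic_conf_text):
    """Return {option: new comma-separated value} for the [DEFAULT] options above."""
    cfg = configparser.ConfigParser(interpolation=None, strict=False)
    cfg.read_string(ironic_conf_text)
    updated = {}
    for option in OPTIONS:
        current = cfg.get("DEFAULT", option, fallback="")
        values = [v.strip() for v in current.split(",") if v.strip()]
        if "redfish" not in values:
            values.append("redfish")
        updated[option] = ",".join(values)
    return updated
```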

To continue with Redfish, see Section 29.3.4, “Registering a Node with the Redfish Driver”.

29.3.2 Supplied Images

As part of the SUSE OpenStack Cloud Entry-scale ironic Cloud installation, ironic Python Agent (IPA) images are supplied and loaded into glance. To see the images that have been loaded, execute the following commands on the deployer node:

$ source ~/service.osrc
$ openstack image list

This will display three images that have been added by ironic:

Deploy_iso : openstack-ironic-image.x86_64-8.0.0.iso
Deploy_kernel : openstack-ironic-image.x86_64-8.0.0.kernel.4.4.120-94.17-default
Deploy_ramdisk : openstack-ironic-image.x86_64-8.0.0.xz

The ir-deploy-ramdisk image is a traditional boot ramdisk used by the agent_ipmitool, pxe_ipmitool, and pxe_ilo drivers, while ir-deploy-iso is an ISO image that is supplied as virtual media to the host when using the agent_ilo driver.

29.3.3 Provisioning a Node

The information required to provision a node varies slightly depending on the driver used. In general, the following details are required:

Network access information and credentials to connect to the management interface of the node.

Sufficient properties to allow for nova flavor matching.

A deployment image to perform the actual deployment of the guest operating system to the bare-metal node.

A combination of the ironic node-create and ironic node-update commands is used for registering a node's characteristics with the ironic service. In particular, ironic node-update <nodeid> add and ironic node-update <nodeid> replace can be used to modify the properties of a node after it has been created, while ironic node-update <nodeid> remove will remove a property.
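Under the hood, ironic node updates are JSON Patch (RFC 6902) operations sent to the ironic API. This Python sketch shows how add, replace, and remove arguments of the form used with ironic node-update map onto patch operations; node_update_patch is a hypothetical helper, and it keeps values as plain strings, whereas the real client may convert some values.

```python
def node_update_patch(op, assignments):
    """Translate `ironic node-update <node> <op> path=value ...` arguments into JSON Patch.

    For op == "remove", assignments are bare paths without "=value".
    """
    if op not in ("add", "replace", "remove"):
        raise ValueError(f"unsupported op: {op}")
    patch = []
    for item in assignments:
        if op == "remove":
            patch.append({"op": "remove", "path": "/" + item.lstrip("/")})
            continue
        path, sep, value = item.partition("=")  # split on the first "=" only
        if not sep:
            raise ValueError(f"expected path=value, got {item!r}")
        patch.append({"op": op, "path": "/" + path.lstrip("/"), "value": value})
    return patch
```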

29.3.4 Registering a Node with the Redfish Driver

Nodes configured to use the Redfish driver should have the driver property set to redfish.

The following properties are specified in the driver_info field of the node:

redfish_address (required): The URL address to the Redfish controller. It must include the authority portion of the URL, and can optionally include the scheme. If the scheme is missing, HTTPS is assumed.

redfish_system_id (required): The canonical path to the system resource that the driver interacts with. It should include the root service, version and the unique path to the system resource. For example, /redfish/v1/Systems/1.

redfish_username (recommended): User account with admin and server-profile access privilege.

redfish_password (recommended): User account password.

redfish_verify_ca (optional): If redfish_address has the HTTPS scheme, the driver will use a secure (TLS) connection when talking to the Redfish controller. By default (if this is not set or set to True), the driver will try to verify the host certificates. This can be set to the path of a certificate file or directory with trusted certificates that the driver will use for verification. To disable verifying TLS, set this to False.

The openstack baremetal node create command is used to enroll a node with the Redfish driver. For example:

openstack baremetal node create --driver redfish --driver-info \
  redfish_address=https://example.com --driver-info \
  redfish_system_id=/redfish/v1/Systems/CX34R87 --driver-info \
  redfish_username=admin --driver-info redfish_password=password
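The rules for the Redfish driver_info fields can be checked before enrolling a node. This Python sketch applies the requirements listed above: both required fields must be present, and an address without a scheme is made explicit as HTTPS. The check that redfish_system_id starts with /redfish/v1/ is an assumption based on the example path; normalize_redfish_info is a hypothetical helper.

```python
from urllib.parse import urlsplit

REQUIRED = ("redfish_address", "redfish_system_id")


def normalize_redfish_info(info):
    """Validate Redfish driver_info and return a copy with the HTTPS scheme made explicit."""
    missing = [key for key in REQUIRED if not info.get(key)]
    if missing:
        raise ValueError("missing required driver_info: " + ", ".join(missing))
    result = dict(info)
    address = result["redfish_address"]
    if "://" not in address:
        address = "https://" + address  # no scheme given: HTTPS is assumed
    if not urlsplit(address).netloc:
        raise ValueError(f"redfish_address has no authority part: {info['redfish_address']!r}")
    result["redfish_address"] = address
    if not result["redfish_system_id"].startswith("/redfish/v1/"):
        raise ValueError("redfish_system_id should look like /redfish/v1/Systems/1")
    return result
```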

29.3.5 Creating a Node Using agent_ilo

If you want to use a boot mode of BIOS as opposed to UEFI, then you need to ensure that the boot mode has been set correctly on the IPMI:

While the iLO driver can automatically set a node to boot in UEFI mode via the boot_mode defined capability, it cannot set BIOS boot mode once UEFI mode has been set.

Use the ironic node-create command to specify the agent_ilo driver, network access and credential information for the IPMI, properties of the node and the glance ID of the supplied ISO IPA image. Note that memory size is specified in megabytes while disk size is specified in gigabytes.

ironic node-create -d agent_ilo -i ilo_address=IP_ADDRESS -i \
  ilo_username=Administrator -i ilo_password=PASSWORD \
  -p cpus=2 -p cpu_arch=x86_64 -p memory_mb=64000 -p local_gb=99 \
  -i ilo_deploy_iso=DEPLOY_UUID

This will generate output similar to the following:

+--------------+---------------------------------------------------------------+
| Property | Value |
+--------------+---------------------------------------------------------------+
| uuid | NODE_UUID |
| driver_info | {u'ilo_address': u'IP_ADDRESS', u'ilo_password': u'******', |
| | u'ilo_deploy_iso': u'DEPLOY_UUID', |
| | u'ilo_username': u'Administrator'} |
| extra | {} |
| driver | agent_ilo |
| chassis_uuid | |
| properties | {u'memory_mb': 64000, u'local_gb': 99, u'cpus': 2, |
| | u'cpu_arch': u'x86_64'} |
| name | None |
+--------------+---------------------------------------------------------------+

Now update the node with boot_mode and boot_option properties:

ironic node-update NODE_UUID add \
  properties/capabilities="boot_mode:bios,boot_option:local"
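The capabilities value is a comma-separated list of key:value pairs. When you need to change one capability (for example, switching boot_mode), it can help to parse and rebuild the string rather than edit it by hand. These Python helpers are hypothetical and only handle the string format used in the command above.

```python
def parse_capabilities(caps):
    """Split an ironic capabilities string ("k1:v1,k2:v2") into an ordered dict."""
    result = {}
    for item in caps.split(","):
        item = item.strip()
        if not item:
            continue
        key, sep, value = item.partition(":")
        if not sep:
            raise ValueError(f"capability {item!r} is not key:value")
        result[key] = value
    return result


def format_capabilities(caps):
    """Join a dict back into the capabilities string, keeping insertion order."""
    return ",".join(f"{key}:{value}" for key, value in caps.items())
```

For example, parse "boot_mode:bios,boot_option:local", set caps["boot_mode"] = "uefi", and format it again to get "boot_mode:uefi,boot_option:local" for a replace operation.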

The ironic node-update command returns details for all of the node's characteristics.

+------------------------+------------------------------------------------------------------+
| Property | Value |
+------------------------+------------------------------------------------------------------+
| target_power_state | None |
| extra | {} |
| last_error | None |
| updated_at | None |
| maintenance_reason | None |
| provision_state | available |
| clean_step | {} |
| uuid | NODE_UUID |
| console_enabled | False |
| target_provision_state | None |
| provision_updated_at | None |
| maintenance | False |
| inspection_started_at | None |
| inspection_finished_at | None |
| power_state | None |
| driver | agent_ilo |
| reservation | None |
| properties | {u'memory_mb': 64000, u'cpu_arch': u'x86_64', u'local_gb': 99, |
| | u'cpus': 2, u'capabilities': u'boot_mode:bios,boot_option:local'}|
| instance_uuid | None |
| name | None |
| driver_info | {u'ilo_address': u'10.1.196.117', u'ilo_password': u'******', |
| | u'ilo_deploy_iso': u'DEPLOY_UUID', |
| | u'ilo_username': u'Administrator'} |
| created_at | 2016-03-11T10:17:10+00:00 |
| driver_internal_info | {} |
| chassis_uuid | |
| instance_info | {} |
+------------------------+------------------------------------------------------------------+

29.3.6 Creating a Node Using agent_ipmi

Use the ironic node-create command to specify the agent_ipmi driver, network access and credential information for the IPMI, properties of the node and the glance IDs of the supplied kernel and ramdisk images. Note that memory size is specified in megabytes while disk size is specified in gigabytes.

ironic node-create -d agent_ipmitool \
  -i ipmi_address=IP_ADDRESS \
  -i ipmi_username=Administrator -i ipmi_password=PASSWORD \
  -p cpus=2 -p memory_mb=64000 -p local_gb=99 -p cpu_arch=x86_64 \
  -i deploy_kernel=KERNEL_UUID \
  -i deploy_ramdisk=RAMDISK_UUID

This will generate output similar to the following:

+--------------+-----------------------------------------------------------------------+
| Property | Value |
+--------------+-----------------------------------------------------------------------+
| uuid | NODE2_UUID |
| driver_info | {u'deploy_kernel': u'KERNEL_UUID', |
| | u'ipmi_address': u'IP_ADDRESS', u'ipmi_username': u'Administrator', |
| | u'ipmi_password': u'******', |
| | u'deploy_ramdisk': u'RAMDISK_UUID'} |
| extra | {} |
| driver | agent_ipmitool |
| chassis_uuid | |
| properties | {u'memory_mb': 64000, u'cpu_arch': u'x86_64', u'local_gb': 99, |
| | u'cpus': 2} |
| name | None |
+--------------+-----------------------------------------------------------------------+

Now update the node with boot_mode and boot_option properties:

ironic node-update NODE2_UUID add \
  properties/capabilities="boot_mode:bios,boot_option:local"

The ironic node-update command returns details for all of the node's characteristics.

+------------------------+-----------------------------------------------------------------+
| Property | Value |
+------------------------+-----------------------------------------------------------------+
| target_power_state | None |
| extra | {} |
| last_error | None |
| updated_at | None |
| maintenance_reason | None |
| provision_state | available |
| clean_step | {} |
| uuid | NODE2_UUID |
| console_enabled | False |
| target_provision_state | None |
| provision_updated_at | None |
| maintenance | False |
| inspection_started_at | None |
| inspection_finished_at | None |
| power_state | None |
| driver | agent_ipmitool |
| reservation | None |
| properties | {u'memory_mb': 64000, u'cpu_arch': u'x86_64', |
| | u'local_gb': 99, u'cpus': 2, |
| | u'capabilities': u'boot_mode:bios,boot_option:local'} |
| instance_uuid | None |
| name | None |
| driver_info | {u'ipmi_password': u'******', u'ipmi_address': u'IP_ADDRESS', |
| | u'ipmi_username': u'Administrator', u'deploy_kernel': |
| | u'KERNEL_UUID', |
| | u'deploy_ramdisk': u'RAMDISK_UUID'} |
| created_at | 2016-03-11T14:19:18+00:00 |
| driver_internal_info | {} |
| chassis_uuid | |
| instance_info | {} |
+------------------------+-----------------------------------------------------------------+

For more information on node enrollment, see the OpenStack documentation at http://docs.openstack.org/developer/ironic/deploy/install-guide.html#enrollment.

29.3.7 Creating a Flavor

nova uses flavors when fulfilling requests for bare-metal nodes. The nova scheduler attempts to match the requested flavor against the properties of the created ironic nodes. Therefore, an administrator needs to set up flavors that correspond to the available bare-metal nodes using the command openstack flavor create:

openstack flavor create --ram 64000 --disk 99 --vcpus 2 bmtest
+----------------+--------+--------+------+-----------+------+-------+-------------+-----------+
| ID             | Name   | Mem_MB | Disk | Ephemeral | Swap | VCPUs | RXTX_Factor | Is_Public |
+----------------+--------+--------+------+-----------+------+-------+-------------+-----------+
| 645de0...b1348 | bmtest | 64000  | 99   | 0         |      | 2     | 1.0         | True      |
+----------------+--------+--------+------+-----------+------+-------+-------------+-----------+

To see a list of all the available flavors, run openstack flavor list:

openstack flavor list
+-------------+--------------+--------+------+-----------+------+-------+--------+-----------+
| ID | Name | Mem_MB | Disk | Ephemeral | Swap | VCPUs | RXTX | Is_Public |
| | | | | | | | Factor | |
+-------------+--------------+--------+------+-----------+------+-------+--------+-----------+
| 1 | m1.tiny | 512 | 1 | 0 | | 1 | 1.0 | True |
| 2 | m1.small | 2048 | 20 | 0 | | 1 | 1.0 | True |
| 3 | m1.medium | 4096 | 40 | 0 | | 2 | 1.0 | True |
| 4 | m1.large | 8192 | 80 | 0 | | 4 | 1.0 | True |
| 5 | m1.xlarge | 16384 | 160 | 0 | | 8 | 1.0 | True |
| 6 | m1.baremetal | 4096 | 80 | 0 | | 2 | 1.0 | True |
| 645d...1348 | bmtest | 64000 | 99 | 0 | | 2 | 1.0 | True |
+-------------+--------------+--------+------+-----------+------+-------+--------+-----------+

Now set the CPU architecture, boot mode, and boot option capabilities:

openstack flavor set --property cpu_arch=x86_64 645de08d-2bc6-43f1-8a5f-2315a75b1348
openstack flavor set --property capabilities:boot_option="local" 645de08d-2bc6-43f1-8a5f-2315a75b1348
openstack flavor set --property capabilities:boot_mode="bios" 645de08d-2bc6-43f1-8a5f-2315a75b1348
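These extra specs are compared against the node's cpu_arch property and its capabilities string (boot_mode:bios,boot_option:local in the node example). The sketch below illustrates that comparison under simplifying assumptions. It uses plain string equality only, whereas nova's filters can also evaluate operators such as <in>, which are ignored here, and the helper names are hypothetical. The shell removes the quotes, so capabilities:boot_mode="bios" arrives as the value bios.

```python
from typing import Dict


def parse_capabilities(caps: str) -> Dict[str, str]:
    """Split an ironic capabilities string such as 'boot_mode:bios,boot_option:local'."""
    result = {}
    for item in caps.split(","):
        if item.strip():
            key, _, value = item.partition(":")
            result[key.strip()] = value.strip()
    return result


def extra_specs_satisfied(extra_specs: Dict[str, str], node_props: Dict[str, str]) -> bool:
    """Return True if every flavor extra spec equals the corresponding node value.

    Keys starting with 'capabilities:' are looked up in the node's capabilities
    string; any other key (for example cpu_arch) is looked up in the node
    properties directly. Simplified: exact string comparison only.
    """
    caps = parse_capabilities(node_props.get("capabilities", ""))
    prefix = "capabilities:"
    for key, wanted in extra_specs.items():
        if key.startswith(prefix):
            have = caps.get(key[len(prefix):])
        else:
            have = node_props.get(key)
        if have != wanted:
            return False
    return True


if __name__ == "__main__":
    node = {"cpu_arch": "x86_64", "capabilities": "boot_mode:bios,boot_option:local"}
    specs = {"cpu_arch": "x86_64", "capabilities:boot_option": "local",
             "capabilities:boot_mode": "bios"}
    print(extra_specs_satisfied(specs, node))                                        # True
    print(extra_specs_satisfied({**specs, "capabilities:boot_mode": "uefi"}, node))  # False
```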

For more information on flavor creation, see the OpenStack documentation at http://docs.openstack.org/developer/ironic/deploy/install-guide.html#flavor-creation.

29.3.8 Creating a Network Port #

Register the MAC addresses of all connected physical network interfaces intended for use with the bare-metal node.

ironic port-create -a 5c:b9:01:88:f0:a4 -n ea7246fd-e1d6-4637-9699-0b7c59c22e67
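Each port-create call registers one MAC address. When several interfaces are collected from a hardware inventory, it can help to normalize the addresses first. The following hypothetical helper (not part of the ironic client) is a minimal sketch that accepts the common notations and returns the lowercase, colon-separated form used in the example above.

```python
import re


def normalize_mac(mac: str) -> str:
    """Return mac as lowercase, colon-separated hex pairs; raise ValueError otherwise.

    Accepts notations such as 5C:B9:01:88:F0:A4, 5c-b9-01-88-f0-a4 and 5cb9.0188.f0a4.
    """
    digits = re.sub(r"[:.\-]", "", mac.strip()).lower()
    if not re.fullmatch(r"[0-9a-f]{12}", digits):
        raise ValueError(f"not a MAC address: {mac!r}")
    return ":".join(digits[i:i + 2] for i in range(0, 12, 2))


if __name__ == "__main__":
    print(normalize_mac("5C-B9-01-88-F0-A4"))  # 5c:b9:01:88:f0:a4
```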

29.3.9 Creating a glance Image #

You can create a complete disk image using the instructions at Section 29.3.13, “Building glance Images Using KIWI”.

The image you create can then be loaded into glance:

openstack image create --disk-format=raw \
  --container-format=bare \
  --file /tmp/myimage/LimeJeOS-Leap-42.3.x86_64-1.42.3.raw \
  leap

+------------------+--------------------------------------+
| Property | Value |
+------------------+--------------------------------------+
| checksum | 45a4a06997e64f7120795c68beeb0e3c |
| container_format | bare |
| created_at | 2018-02-17T10:42:14Z |
| disk_format | raw |
| id | 17e4915a-ada0-4b95-bacf-ba67133f39a7 |
| min_disk | 0 |
| min_ram | 0 |
| name | leap |
| owner | 821b7bb8148f439191d108764301af64 |
| protected | False |
| size | 372047872 |
| status | active |
| tags | [] |
| updated_at | 2018-02-17T10:42:23Z |
| virtual_size | None |
| visibility | private |
+------------------+--------------------------------------+

This image will subsequently be used to boot the bare-metal node.
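glance records the MD5 digest of the uploaded data in the checksum field. To confirm that the upload matches the local KIWI image, that value can be compared with a digest computed locally. A minimal sketch follows; the function names are illustrative, not part of any OpenStack tool.

```python
import hashlib


def md5_of_file(path: str, chunk_size: int = 1024 * 1024) -> str:
    """Compute the MD5 hex digest of a file without loading it into memory at once."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_glance_checksum(path: str, glance_checksum: str) -> bool:
    """Compare the local digest with the checksum value reported by glance."""
    return md5_of_file(path) == glance_checksum.strip().lower()
```

For example, matches_glance_checksum("/tmp/myimage/LimeJeOS-Leap-42.3.x86_64-1.42.3.raw", "45a4a06997e64f7120795c68beeb0e3c") should return True if the uploaded file is unchanged. Running md5sum on the file gives the same digest.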

29.3.10 Generating a Key Pair #

Create a key pair that you will use to log in to the newly booted node:

openstack keypair create ironic_kp > ironic_kp.pem

29.3.11 Determining the neutron Network ID #

openstack network list
+---------------+----------+----------------------------------------------------+
| id | name | subnets |
+---------------+----------+----------------------------------------------------+
| c0102...1ca8c | flat-net | 709ee2a1-4110-4b26-ba4d-deb74553adb9 192.3.15.0/24 |
+---------------+----------+----------------------------------------------------+

29.3.12 Booting the Node #

Before booting, it is advisable to power down the node:

ironic node-set-power-state ea7246fd-e1d6-4637-9699-0b7c59c22e67 off

You can now boot the bare-metal node with the information compiled in the preceding steps, using the neutron network ID, the whole disk image ID, the matching flavor and the key name:

openstack server create --nic net-id=c010267c-9424-45be-8c05-99d68531ca8c \
  --image 17e4915a-ada0-4b95-bacf-ba67133f39a7 \
  --flavor 645de08d-2bc6-43f1-8a5f-2315a75b1348 \
  --key-name ironic_kp leap

This command returns information about the state of the node that is booting:

+--------------------------------------+------------------------+
| Property | Value |
+--------------------------------------+------------------------+
| OS-EXT-AZ:availability_zone | |
| OS-EXT-SRV-ATTR:host | - |
| OS-EXT-SRV-ATTR:hypervisor_hostname | - |
| OS-EXT-SRV-ATTR:instance_name | instance-00000001 |
| OS-EXT-STS:power_state | 0 |
| OS-EXT-STS:task_state | scheduling |
| OS-EXT-STS:vm_state | building |
| OS-SRV-USG:launched_at | - |
| OS-SRV-USG:terminated_at | - |
| accessIPv4 | |
| accessIPv6 | |
| adminPass | adpHw3KKTjHk |
| config_drive | |
| created | 2018-03-11T11:00:28Z |
| flavor | bmtest (645de...b1348) |
| hostId | |
| id | a9012...3007e |
| image | leap (17e49...f39a7) |
| key_name | ironic_kp |
| metadata | {} |
| name | leap |
| os-extended-volumes:volumes_attached | [] |
| progress | 0 |
| security_groups | default |
| status | BUILD |
| tenant_id | d53bcaf...baa60dd |
| updated | 2016-03-11T11:00:28Z |
| user_id | e580c64...4aaf990 |
+--------------------------------------+------------------------+

The boot process can take up to 10 minutes. Monitor the progress with the IPMI console or with the openstack server list, openstack server show <nova_node_id>, and ironic node-show <ironic_node_id> commands.

openstack server list
+---------------+--------+--------+------------+-------------+----------------------+
| ID | Name | Status | Task State | Power State | Networks |
+---------------+--------+--------+------------+-------------+----------------------+
| a9012...3007e | leap | BUILD | spawning | NOSTATE | flat-net=192.3.15.12 |
+---------------+--------+--------+------------+-------------+----------------------+
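Instead of re-running openstack server list by hand, the status can be polled. The sketch below is an illustration, not a supported tool: wait_for_server and cli_status are hypothetical helpers, cli_status assumes the openstack client is installed and cloud credentials are loaded, and the 600-second default mirrors the 10-minute estimate above.

```python
import subprocess
import time
from typing import Callable


def cli_status(server_id: str) -> str:
    """Read the server status with the openstack client (requires credentials)."""
    result = subprocess.run(
        ["openstack", "server", "show", "-f", "value", "-c", "status", server_id],
        check=True, capture_output=True, text=True,
    )
    return result.stdout.strip()


def wait_for_server(get_status: Callable[[], str], timeout: float = 600.0,
                    interval: float = 15.0,
                    sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll get_status() until it reports ACTIVE or ERROR.

    Returns the final status, or raises TimeoutError once `timeout` seconds
    have elapsed while the server is still in another state (such as BUILD).
    """
    deadline = time.monotonic() + timeout
    while True:
        status = get_status()
        if status in ("ACTIVE", "ERROR"):
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"server still {status} after {timeout} seconds")
        sleep(interval)
```

For example, call wait_for_server(lambda: cli_status(server_id)), where server_id is the ID returned by openstack server create. Passing the status reader as a callable keeps the polling logic independent of the CLI. An ERROR result is covered in Section 29.6, "Troubleshooting ironic Installation".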

During the boot procedure, a SLES login prompt appears.

Ignore this login screen and wait for the login screen of your target operating system to appear.

If you now run the command openstack server list, it should show the node in the running state:

openstack server list
+---------------+--------+--------+------------+-------------+----------------------+
| ID | Name | Status | Task State | Power State | Networks |
+---------------+--------+--------+------------+-------------+----------------------+
| a9012...3007e | leap | ACTIVE | - | Running | flat-net=192.3.15.14 |
+---------------+--------+--------+------------+-------------+----------------------+

You can now log in to the booted node using the key you generated earlier. (You may be prompted to change the permissions of your private key file so that it is not accessible by others.)

ssh leap@192.3.15.14 -i ironic_kp.pem
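OpenSSH refuses to use a private key file that other users can read, which is why the permissions may need to change. The usual fix is chmod 600 ironic_kp.pem. The same operation in Python, as a minimal sketch with a hypothetical helper name, looks like this:

```python
import os
import stat


def restrict_private_key(path: str) -> int:
    """Limit a private key file to owner read/write (mode 0600) and return the new mode."""
    os.chmod(path, stat.S_IRUSR | stat.S_IWUSR)
    return stat.S_IMODE(os.stat(path).st_mode)
```

For example, restrict_private_key("ironic_kp.pem") returns 0o600 (384) once the permissions are tightened.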

29.3.13 Building glance Images Using KIWI #

The following sections show you how to create your own images using KIWI, the command line utility to build Linux system appliances. For information on installing KIWI, see https://osinside.github.io/kiwi/installation.html.

KIWI creates images in a two-step process:

The prepare operation generates an unpacked image tree using the information provided in the image description.

The create operation creates the packed image based on the unpacked image and the information provided in the configuration file (config.xml).


Image creation with KIWI is automated and does not require any user interaction. The information required for the image creation process is provided by the image description.

Using and running KIWI requires:

A recent Linux distribution such as:

openSUSE Leap 42.3

SUSE Linux Enterprise 12 SP4

openSUSE Tumbleweed

Enough free disk space to build and store the image (a minimum of 10 GB is recommended).

Python 2.7, or Python 3.4 or higher. KIWI supports both Python 2 and Python 3.

Git (package git-core) to clone a repository.

Virtualization technology to start the image (QEMU is recommended).

29.3.14 Creating an openSUSE Image with KIWI #

The following example shows how to build an openSUSE Leap image that is ready to run in QEMU.

Retrieve the example image descriptions.

git clone https://github.com/SUSE/kiwi-descriptions

Build the image with KIWI:

sudo kiwi-ng --type vmx system build \
  --description kiwi-descriptions/suse/x86_64/suse-leap-42.3-JeOS \
  --target-dir /tmp/myimage

A .raw image will be built in the /tmp/myimage directory. Test the live image with QEMU:

qemu \
  -drive file=/tmp/myimage/LimeJeOS-Leap-42.3.x86_64-1.42.3.raw,format=raw,if=virtio \
  -m 4096

With a successful test, the image is complete.

By default, KIWI generates a file in the .raw format. The .raw file is a disk image with a structure equivalent to a physical hard disk. .raw images are supported by any hypervisor, but are not compressed and do not offer the best performance.

Virtualization systems support their own formats (such as qcow2 or vmdk) with compression and improved I/O performance. To build an image in a format other than .raw, add the format attribute to the type definition in the preferences section of config.xml. For example, for qcow2:

<image ...>
  <preferences>
    <type format="qcow2" .../>
    ...
  </preferences>
  ...
</image>

More information about KIWI is at https://osinside.github.io/kiwi/.
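When several image descriptions need the same change, the format attribute can also be set programmatically. The following is a minimal sketch using Python's xml.etree.ElementTree. It assumes the <type> elements sit directly under <preferences>, as in the snippet above. set_image_format is a hypothetical helper, and the sample XML is a stripped-down placeholder, not a complete KIWI description.

```python
import xml.etree.ElementTree as ET


def set_image_format(config_xml: str, fmt: str = "qcow2") -> str:
    """Add or overwrite the format attribute on each <preferences>/<type> element.

    Note: ElementTree drops XML comments and the XML declaration when it
    re-serializes, so review the result before replacing config.xml.
    """
    root = ET.fromstring(config_xml)
    type_elements = root.findall("./preferences/type")
    if not type_elements:
        raise ValueError("config.xml has no <preferences>/<type> element")
    for element in type_elements:
        element.set("format", fmt)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # Placeholder description, reduced to the elements the helper touches.
    sample = ('<image name="example-image">'
              '<preferences><type image="vmx"/></preferences>'
              '</image>')
    print(set_image_format(sample))
```

Every <type> under <preferences> receives the new format, so descriptions that define several build types are all changed together.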

29.4 Provisioning Baremetal Nodes with Multi-Tenancy #

To enable ironic multi-tenancy, you must first manually install the python-networking-generic-switch package along with all its dependencies on all neutron nodes.

To manually enable the genericswitch mechanism driver in neutron, the networking-generic-switch package must be installed first. Perform the following steps on each controller where neutron is running:

Comment out the multi_tenancy_switch_config section in ~/openstack/my_cloud/definition/data/ironic/ironic_config.yml.

SSH into the controller node.

Change to root:

ardana > sudo -i

Activate the neutron venv:

root # . /opt/stack/venv/neutron-20180528T093206Z/bin/activate

Install the netmiko package:

root # pip install netmiko

Clone the networking-generic-switch source code into /tmp:

root # cd /tmp
root # git clone https://github.com/openstack/networking-generic-switch.git

Install the networking_generic_switch package from the cloned directory:

root # cd networking-generic-switch
root # python setup.py install

After the networking_generic_switch package is installed, the genericswitch settings must be enabled in the input model. Run the following process again any time a maintenance update is installed that updates the neutron venv.

SSH into the deployer node as the user ardana.

Edit the ironic configuration data in the input model ~/openstack/my_cloud/definition/data/ironic/ironic_config.yml. Make sure the multi_tenancy_switch_config: section is uncommented and has the appropriate settings. driver_type should be genericswitch and device_type should be netmiko_hp_comware.

multi_tenancy_switch_config:
  - id: switch1
    driver_type: genericswitch
    device_type: netmiko_hp_comware
    ip_address: 192.168.75.201
    username: IRONICSHARE
    password: 'k27MwbEDGzTm'

Run the configuration processor to generate the model:

ardana > cd ~/openstack/ardana/ansible
ardana > ansible-playbook -i hosts/localhost config-processor-run.yml
ardana > ansible-playbook -i hosts/localhost ready-deployment.yml

Run neutron-reconfigure.yml:

ardana > cd ~/scratch/ansible/next/ardana/ansible
ardana > ansible-playbook -i hosts/verb_hosts neutron-reconfigure.yml

Run neutron-status.yml to make sure everything is OK:

ardana > ansible-playbook -i hosts/verb_hosts neutron-status.yml
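Mistakes in multi_tenancy_switch_config usually surface only once the playbooks run. A hypothetical pre-flight check, not part of the Ardana configuration processor, could look like the following sketch. It takes the already parsed YAML list (for example from yaml.safe_load) and assumes the key names and the expected driver_type and device_type values shown in the example above.

```python
from typing import Any, Dict, List

# Keys taken from the example entry above (assumption: all are mandatory).
REQUIRED_KEYS = ("id", "driver_type", "device_type", "ip_address", "username", "password")


def check_switch_entries(entries: List[Dict[str, Any]],
                         device_type: str = "netmiko_hp_comware") -> List[str]:
    """Report problems in a parsed multi_tenancy_switch_config list.

    An empty result means every entry has all keys and the expected values.
    """
    problems = []
    seen_ids = set()
    for index, entry in enumerate(entries):
        label = entry.get("id") or f"entry {index}"
        for key in REQUIRED_KEYS:
            if not entry.get(key):
                problems.append(f"{label}: missing {key}")
        if entry.get("driver_type") and entry["driver_type"] != "genericswitch":
            problems.append(f"{label}: driver_type should be genericswitch")
        if entry.get("device_type") and entry["device_type"] != device_type:
            problems.append(f"{label}: device_type should be {device_type}")
        if entry.get("id"):
            if entry["id"] in seen_ids:
                problems.append(f"{label}: duplicate id")
            seen_ids.add(entry["id"])
    return problems
```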

With the networking-generic-switch package installed and enabled, you can proceed with provisioning baremetal nodes with multi-tenancy.

Create a network and a subnet:

$ openstack network create guest-net-1
Created a new network:
+---------------------------+--------------------------------------+
| Field | Value |
+---------------------------+--------------------------------------+
| admin_state_up | True |
| availability_zone_hints | |
| availability_zones | |
| created_at | 2017-06-10T02:49:56Z |
| description | |
| id | 256d55a6-9430-4f49-8a4c-cc5192f5321e |
| ipv4_address_scope | |
| ipv6_address_scope | |
| mtu | 1500 |
| name | guest-net-1 |
| project_id | 57b792cdcdd74d16a08fc7a396ee05b6 |
| provider:network_type | vlan |
| provider:physical_network | physnet1 |
| provider:segmentation_id | 1152 |
| revision_number | 2 |
| router:external | False |
| shared | False |
| status | ACTIVE |
| subnets | |
| tags | |
| tenant_id | 57b792cdcdd74d16a08fc7a396ee05b6 |
| updated_at | 2017-06-10T02:49:57Z |
+---------------------------+--------------------------------------+

$ openstack subnet create guest-net-1 200.0.0.0/24
Created a new subnet:
+-------------------+----------------------------------------------+
| Field | Value |
+-------------------+----------------------------------------------+
| allocation_pools | {"start": "200.0.0.2", "end": "200.0.0.254"} |
| cidr | 200.0.0.0/24 |
| created_at | 2017-06-10T02:53:08Z |
| description | |
| dns_nameservers | |
| enable_dhcp | True |
| gateway_ip | 200.0.0.1 |
| host_routes | |
| id | 53accf35-ae02-43ae-95d8-7b5efed18ae9 |
| ip_version | 4 |
| ipv6_address_mode | |
| ipv6_ra_mode | |
| name | |
| network_id | 256d55a6-9430-4f49-8a4c-cc5192f5321e |
| project_id | 57b792cdcdd74d16a08fc7a396ee05b6 |
| revision_number | 2 |
| service_types | |
| subnetpool_id | |
| tenant_id | 57b792cdcdd74d16a08fc7a396ee05b6 |
| updated_at | 2017-06-10T02:53:08Z |
+-------------------+----------------------------------------------+

Review the glance image list:

$ openstack image list
+--------------------------------------+--------------------------+
| ID | Name |
+--------------------------------------+--------------------------+
| 0526d2d7-c196-4c62-bfe5-a13bce5c7f39 | cirros-0.4.0-x86_64 |
+--------------------------------------+--------------------------+

Create the ironic node:

$ ironic --ironic-api-version 1.22 node-create -d agent_ipmitool \
  -n test-node-1 -i ipmi_address=192.168.9.69 -i ipmi_username=ipmi_user \
  -i ipmi_password=XXXXXXXX --network-interface neutron -p memory_mb=4096 \
  -p cpu_arch=x86_64 -p local_gb=80 -p cpus=2 \
  -p capabilities=boot_mode:bios,boot_option:local \
  -p root_device='{"name":"/dev/sda"}' \
  -i deploy_kernel=db3d131f-2fb0-4189-bb8d-424ee0886e4c \
  -i deploy_ramdisk=304cae15-3fe5-4f1c-8478-c65da5092a2c
+-------------------+-------------------------------------------------------------------+
| Property | Value |
+-------------------+-------------------------------------------------------------------+
| chassis_uuid | |
| driver | agent_ipmitool |
| driver_info | {u'deploy_kernel': u'db3d131f-2fb0-4189-bb8d-424ee0886e4c', |
| | u'ipmi_address': u'192.168.9.69', |
| | u'ipmi_username': u'gozer', u'ipmi_password': u'******', |
| | u'deploy_ramdisk': u'304cae15-3fe5-4f1c-8478-c65da5092a2c'} |
| extra | {} |
| name | test-node-1 |
| network_interface | neutron |
| properties | {u'cpu_arch': u'x86_64', u'root_device': {u'name': u'/dev/sda'}, |
| | u'cpus': 2, u'capabilities': u'boot_mode:bios,boot_option:local', |
| | u'memory_mb': 4096, u'local_gb': 80} |
| resource_class | None |
| uuid | cb4dda0d-f3b0-48b9-ac90-ba77b8c66162 |
+-------------------+-------------------------------------------------------------------+

ipmi_address, ipmi_username and ipmi_password are the IPMI access parameters for the baremetal ironic node. Adjust memory_mb, cpus and local_gb to your node size requirements; they also need to be reflected in the flavor settings (see below). Use the capability boot_mode:bios for baremetal nodes operating in Legacy BIOS mode. For UEFI baremetal nodes, use boot_mode:uefi. Look up the IDs for deploy_kernel and deploy_ramdisk (the ir-deploy-kernel and ir-deploy-ramdisk images) in the output of openstack image list.

Important

Because ironic API version 1.22 is used, the node is created in the initial state enroll. It must be explicitly moved to the available state. This behavior changed in API version 1.11.

Create a port:

$ ironic --ironic-api-version 1.22 port-create --address f0:92:1c:05:6c:40 \
  --node cb4dda0d-f3b0-48b9-ac90-ba77b8c66162 -l switch_id=e8:f7:24:bf:07:2e -l \
  switch_info=hp59srv1-a-11b -l port_id="Ten-GigabitEthernet 1/0/34" \
  --pxe-enabled true
+-----------------------+--------------------------------------------+
| Property | Value |
+-----------------------+--------------------------------------------+
| address | f0:92:1c:05:6c:40 |
| extra | {} |
| local_link_connection | {u'switch_info': u'hp59srv1-a-11b', |
| | u'port_id': u'Ten-GigabitEthernet 1/0/34', |
| | u'switch_id': u'e8:f7:24:bf:07:2e'} |
| node_uuid | cb4dda0d-f3b0-48b9-ac90-ba77b8c66162 |
| pxe_enabled | True |
| uuid | a49491f3-5595-413b-b4a7-bb6f9abec212 |
+-----------------------+--------------------------------------------+

For --address, use the MAC address of the first NIC of the ironic baremetal node, which will be used for PXE boot.

For --node, use the ironic node UUID (see above).

For -l switch_id, use the MAC address of the switch management interface. It can be retrieved by pinging the switch management IP address and looking up its MAC address in the output of the arp -n command.

For -l switch_info, use the id of the switch entry in the data/ironic/ironic_config.yml file. If you have several switch config definitions, use the one for the switch your baremetal node is connected to.

For -l port_id, use the port ID on the switch.
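After the switch has been pinged, its MAC address for -l switch_id can be read from the neighbor table. The sketch below parses text in the column layout printed by the net-tools arp -n command (Address, HWtype, HWaddress, Flags Mask, Iface). That layout, the helper name mac_for_ip, and the sample text are assumptions for illustration; the output of ip neigh uses a different layout.

```python
import re
from typing import Optional

_MAC = re.compile(r"[0-9a-fA-F]{2}(:[0-9a-fA-F]{2}){5}")


def mac_for_ip(arp_output: str, ip_address: str) -> Optional[str]:
    """Return the hardware address listed for ip_address in arp -n style output.

    Returns None when the address is absent or its entry is (incomplete).
    """
    for line in arp_output.splitlines():
        fields = line.split()
        if len(fields) >= 3 and fields[0] == ip_address and _MAC.fullmatch(fields[2]):
            return fields[2].lower()
    return None


if __name__ == "__main__":
    # Made-up sample in the arp -n layout, reusing addresses from this section.
    sample = (
        "Address                  HWtype  HWaddress           Flags Mask            Iface\n"
        "192.168.75.201           ether   e8:f7:24:bf:07:2e   C                     eth0\n"
    )
    print(mac_for_ip(sample, "192.168.75.201"))  # e8:f7:24:bf:07:2e
```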

Move the ironic node to the manageable state and then to the available state:

$ ironic node-set-provision-state test-node-1 manage
$ ironic node-set-provision-state test-node-1 provide
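The two commands walk the node through ironic's provisioning states. The sketch below is a deliberately reduced model of those transitions, not ironic's state machine. Intermediate states such as verifying and cleaning, as well as all failure states, are omitted, and apply_verbs is a hypothetical helper.

```python
from typing import Dict, Iterable, Tuple

# Reduced model (assumption): only the two transitions used in this section.
TRANSITIONS: Dict[Tuple[str, str], str] = {
    ("enroll", "manage"): "manageable",
    ("manageable", "provide"): "available",
}


def apply_verbs(state: str, verbs: Iterable[str]) -> str:
    """Apply provision-state verbs in order and return the resulting state."""
    for verb in verbs:
        try:
            state = TRANSITIONS[(state, verb)]
        except KeyError:
            raise ValueError(f"cannot '{verb}' a node in state '{state}'") from None
    return state
```

In this model, apply_verbs("enroll", ["manage", "provide"]) returns "available", while calling provide directly on an enroll node is rejected. This is why the manage step cannot be skipped.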

Once the node has successfully moved to the available state, its resources should be included in the nova hypervisor statistics:

$ openstack hypervisor stats show
+----------------------+-------+
| Property | Value |
+----------------------+-------+
| count | 1 |
| current_workload | 0 |
| disk_available_least | 80 |
| free_disk_gb | 80 |
| free_ram_mb | 4096 |
| local_gb | 80 |
| local_gb_used | 0 |
| memory_mb | 4096 |
| memory_mb_used | 0 |
| running_vms | 0 |
| vcpus | 2 |
| vcpus_used | 0 |
+----------------------+-------+
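The free resources in these statistics come from nodes that nova can still schedule onto. A simplified model follows. It assumes that only nodes in the available state and not in maintenance contribute, and the helper and its field names are hypothetical. With test-node-1 as input, it gives the 4096 MB, 80 GB and 2 vCPUs shown above.

```python
from typing import Dict, Iterable


def schedulable_resources(nodes: Iterable[Dict]) -> Dict[str, int]:
    """Sum the properties of nodes that are available and not in maintenance.

    Simplified model (assumption): deployed, enrolling or maintenance nodes
    contribute nothing to the free resources.
    """
    totals = {"nodes": 0, "memory_mb": 0, "local_gb": 0, "vcpus": 0}
    for node in nodes:
        if node["provision_state"] != "available" or node.get("maintenance"):
            continue
        props = node["properties"]
        totals["nodes"] += 1
        totals["memory_mb"] += props["memory_mb"]
        totals["local_gb"] += props["local_gb"]
        totals["vcpus"] += props["cpus"]
    return totals


if __name__ == "__main__":
    test_node_1 = {"provision_state": "available", "maintenance": False,
                   "properties": {"memory_mb": 4096, "local_gb": 80, "cpus": 2}}
    print(schedulable_resources([test_node_1]))
    # {'nodes': 1, 'memory_mb': 4096, 'local_gb': 80, 'vcpus': 2}
```

If the values stay at zero after provide, check that the node really reached the available state and is not in maintenance.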

Prepare a key pair to use for logging in to the node:

$ openstack keypair create ironic_kp > ironic_kp.pem

Obtain a user image and upload it to glance. For information on creating user images, see the OpenStack documentation at https://docs.openstack.org/project-install-guide/baremetal/draft/configure-glance-images.html.

Note

The deploy images are already populated by the SUSE OpenStack Cloud installer.

$ openstack image create --disk-format=qcow2 \
  --container-format=bare --file ~/ubuntu-trusty.qcow2 'Ubuntu Trusty 14.04'
+------------------+--------------------------------------+
| Property | Value |
+------------------+--------------------------------------+
| checksum | d586d8d2107f328665760fee4c81caf0 |
| container_format | bare |
| created_at | 2017-06-13T22:38:45Z |
| disk_format | qcow2 |
| id | 9fdd54a3-ccf5-459c-a084-e50071d0aa39 |
| min_disk | 0 |
| min_ram | 0 |
| name | Ubuntu Trusty 14.04 |
| owner | 57b792cdcdd74d16a08fc7a396ee05b6 |
| protected | False |
| size | 371508736 |
| status | active |
| tags | [] |
| updated_at | 2017-06-13T22:38:55Z |
| virtual_size | None |
| visibility | private |
+------------------+--------------------------------------+

$ openstack image list
+--------------------------------------+---------------------------+
| ID | Name |
+--------------------------------------+---------------------------+
| 0526d2d7-c196-4c62-bfe5-a13bce5c7f39 | cirros-0.4.0-x86_64 |
| 83eecf9c-d675-4bf9-a5d5-9cf1fe9ee9c2 | ir-deploy-iso-EXAMPLE |
| db3d131f-2fb0-4189-bb8d-424ee0886e4c | ir-deploy-kernel-EXAMPLE |
| 304cae15-3fe5-4f1c-8478-c65da5092a2c | ir-deploy-ramdisk-EXAMPLE |
| 9fdd54a3-ccf5-459c-a084-e50071d0aa39 | Ubuntu Trusty 14.04 |
+--------------------------------------+---------------------------+

Create a baremetal flavor and set flavor keys specifying the requested node size, architecture and boot mode. A flavor can be reused for several nodes that have the same size, architecture and boot mode:

$ openstack flavor create --id auto --ram 4096 --disk 80 --vcpus 2 m1.ironic
+-------------+-----------+--------+------+---------+------+-------+-------------+-----------+
| ID | Name | Mem_MB | Disk | Ephemrl | Swap | VCPUs | RXTX_Factor | Is_Public |
+-------------+-----------+--------+------+---------+------+-------+-------------+-----------+
| ab69...87bf | m1.ironic | 4096 | 80 | 0 | | 2 | 1.0 | True |
+-------------+-----------+--------+------+---------+------+-------+-------------+-----------+
$ openstack flavor set --property cpu_arch=x86_64 ab6988...e28694c87bf
$ openstack flavor set --property capabilities:boot_option="local" ab6988...e28694c87bf
$ openstack flavor set --property capabilities:boot_mode="bios" ab6988...e28694c87bf

The parameters must match the parameters of the ironic node above. Use capabilities:boot_mode="bios" for Legacy BIOS nodes. For UEFI nodes, use capabilities:boot_mode="uefi".

Boot the node:

$ openstack server create --flavor m1.ironic --image 9fdd54a3-ccf5-459c-a084-e50071d0aa39 \
  --key-name ironic_kp --nic net-id=256d55a6-9430-4f49-8a4c-cc5192f5321e \
  test-node-1
+--------------------------------------+-------------------------------------------------+
| Property | Value |
+--------------------------------------+-------------------------------------------------+
| OS-DCF:diskConfig | MANUAL |
| OS-EXT-AZ:availability_zone | |
| OS-EXT-SRV-ATTR:host | - |
| OS-EXT-SRV-ATTR:hypervisor_hostname | - |
| OS-EXT-SRV-ATTR:instance_name | |
| OS-EXT-STS:power_state | 0 |
| OS-EXT-STS:task_state | scheduling |
| OS-EXT-STS:vm_state | building |
| OS-SRV-USG:launched_at | - |
| OS-SRV-USG:terminated_at | - |
| accessIPv4 | |
| accessIPv6 | |
| adminPass | XXXXXXXXXXXX |
| config_drive | |
| created | 2017-06-14T21:25:18Z |
| flavor | m1.ironic (ab69881...5a-497d-93ae-6e28694c87bf) |
| hostId | |
| id | f1a8c63e-da7b-4d9a-8648-b1baa6929682 |
| image | Ubuntu Trusty 14.04 (9fdd54a3-ccf5-4a0...0aa39) |
| key_name | ironic_kp |
| metadata | {} |
| name | test-node-1 |
| os-extended-volumes:volumes_attached | [] |
| progress | 0 |
| security_groups | default |
| status | BUILD |
| tenant_id | 57b792cdcdd74d16a08fc7a396ee05b6 |
| updated | 2017-06-14T21:25:17Z |
| user_id | cc76d7469658401fbd4cf772278483d9 |
+--------------------------------------+-------------------------------------------------+

For --image, use the ID of the user image created in the previous step.

For --nic net-id, use the ID of the tenant network created at the beginning.

Note

During node provisioning, the following happens in the background:

neutron connects to the switch management interfaces and assigns the provisioning VLAN to the baremetal node port on the switch.

ironic powers up the node using the IPMI interface.

The node boots the IPA image via PXE.

The IPA image writes the provided user image onto the specified root device (/dev/sda) and powers the node down.

neutron connects to the switch management interfaces and assigns the tenant VLAN to the baremetal node port on the switch. A VLAN ID is selected from the provided range.

ironic powers up the node using the IPMI interface.

The node boots the user image from disk.

Once provisioned, the node joins the private tenant network. Access to the private network from other networks is defined by the switch configuration.

29.5 View Ironic System Details #

29.5.1 View details about the server using openstack server show <nova-node-id> #

openstack server show a90122ce-bba8-496f-92a0-8a7cb143007e

+--------------------------------------+-----------------------------------------------+
| Property | Value |
+--------------------------------------+-----------------------------------------------+
| OS-EXT-AZ:availability_zone | nova |
| OS-EXT-SRV-ATTR:host | ardana-cp1-ir-compute0001-mgmt |
| OS-EXT-SRV-ATTR:hypervisor_hostname | ea7246fd-e1d6-4637-9699-0b7c59c22e67 |
| OS-EXT-SRV-ATTR:instance_name | instance-0000000a |
| OS-EXT-STS:power_state | 1 |
| OS-EXT-STS:task_state | - |
| OS-EXT-STS:vm_state | active |
| OS-SRV-USG:launched_at | 2016-03-11T12:26:25.000000 |
| OS-SRV-USG:terminated_at | - |
| accessIPv4 | |
| accessIPv6 | |
| config_drive | |
| created | 2016-03-11T12:17:54Z |
| flat-net network | 192.3.15.14 |
| flavor | bmtest (645de08d-2bc6-43f1-8a5f-2315a75b1348) |
| hostId | ecafa4f40eb5f72f7298...3bad47cbc01aa0a076114f |
| id | a90122ce-bba8-496f-92a0-8a7cb143007e |
| image | ubuntu (17e4915a-ada0-4b95-bacf-ba67133f39a7) |
| key_name | ironic_kp |
| metadata | {} |
| name | ubuntu |
| os-extended-volumes:volumes_attached | [] |
| progress | 0 |
| security_groups | default |
| status | ACTIVE |
| tenant_id | d53bcaf15afb4cb5aea3adaedbaa60dd |
| updated | 2016-03-11T12:26:26Z |
| user_id | e580c645bfec4faeadef7dbd24aaf990 |
+--------------------------------------+-----------------------------------------------+

29.5.2 View detailed information about a node using ironic node-show <ironic-node-id> #

ironic node-show ea7246fd-e1d6-4637-9699-0b7c59c22e67

+------------------------+--------------------------------------------------------------------------+
| Property | Value |
+------------------------+--------------------------------------------------------------------------+
| target_power_state | None |
| extra | {} |
| last_error | None |
| updated_at | 2016-03-11T12:26:25+00:00 |
| maintenance_reason | None |
| provision_state | active |
| clean_step | {} |
| uuid | ea7246fd-e1d6-4637-9699-0b7c59c22e67 |
| console_enabled | False |
| target_provision_state | None |
| provision_updated_at | 2016-03-11T12:26:25+00:00 |
| maintenance | False |
| inspection_started_at | None |
| inspection_finished_at | None |
| power_state | power on |
| driver | agent_ilo |
| reservation | None |
| properties | {u'memory_mb': 64000, u'cpu_arch': u'x86_64', u'local_gb': 99, |
| | u'cpus': 2, u'capabilities': u'boot_mode:bios,boot_option:local'} |
| instance_uuid | a90122ce-bba8-496f-92a0-8a7cb143007e |
| name | None |
| driver_info | {u'ilo_address': u'10.1.196.117', u'ilo_password': u'******', |
| | u'ilo_deploy_iso': u'b9499494-7db3-4448-b67f-233b86489c1f', |
| | u'ilo_username': u'Administrator'} |
| created_at | 2016-03-11T10:17:10+00:00 |
| driver_internal_info | {u'agent_url': u'http://192.3.15.14:9999', |
| | u'is_whole_disk_image': True, u'agent_last_heartbeat': 1457699159} |
| chassis_uuid | |
| instance_info | {u'root_gb': u'99', u'display_name': u'ubuntu', u'image_source': u |
| | '17e4915a-ada0-4b95-bacf-ba67133f39a7', u'capabilities': u'{"boot_mode": |
| | "bios", "boot_option": "local"}', u'memory_mb': u'64000', u'vcpus': |
| | u'2', u'image_url': u'http://192.168.12.2:8080/v1/AUTH_ba121db7732f4ac3a |
| | 50cc4999a10d58d/glance/17e4915a-ada0-4b95-bacf-ba67133f39a7?temp_url_sig |
| | =ada691726337805981ac002c0fbfc905eb9783ea&temp_url_expires=1457699878', |
| | u'image_container_format': u'bare', u'local_gb': u'99', |
| | u'image_disk_format': u'qcow2', u'image_checksum': |
| | u'2d7bb1e78b26f32c50bd9da99102150b', u'swap_mb': u'0'} |
+------------------------+--------------------------------------------------------------------------+

29.5.3 View detailed information about a port using ironic port-show <ironic-port-id> #

ironic port-show a17a4ef8-a711-40e2-aa27-2189c43f0b67

+------------+-----------------------------------------------------------+
| Property | Value |
+------------+-----------------------------------------------------------+
| node_uuid | ea7246fd-e1d6-4637-9699-0b7c59c22e67 |
| uuid | a17a4ef8-a711-40e2-aa27-2189c43f0b67 |
| extra | {u'vif_port_id': u'82a5ab28-76a8-4c9d-bfb4-624aeb9721ea'} |
| created_at | 2016-03-11T10:40:53+00:00 |
| updated_at | 2016-03-11T12:17:56+00:00 |
| address | 5c:b9:01:88:f0:a4 |
+------------+-----------------------------------------------------------+

29.5.4 View detailed information about a hypervisor using openstack hypervisor list and openstack hypervisor show #

openstack hypervisor list
+-----+--------------------------------------+-------+---------+
| ID | Hypervisor hostname | State | Status |
+-----+--------------------------------------+-------+---------+
| 541 | ea7246fd-e1d6-4637-9699-0b7c59c22e67 | up | enabled |
+-----+--------------------------------------+-------+---------+

openstack hypervisor show ea7246fd-e1d6-4637-9699-0b7c59c22e67
+-------------------------+--------------------------------------+
| Property | Value |
+-------------------------+--------------------------------------+
| cpu_info | |
| current_workload | 0 |
| disk_available_least | 0 |
| free_disk_gb | 0 |
| free_ram_mb | 0 |
| host_ip | 192.168.12.6 |
| hypervisor_hostname | ea7246fd-e1d6-4637-9699-0b7c59c22e67 |
| hypervisor_type | ironic |
| hypervisor_version | 1 |
| id | 541 |
| local_gb | 99 |
| local_gb_used | 99 |
| memory_mb | 64000 |
| memory_mb_used | 64000 |
| running_vms | 1 |
| service_disabled_reason | None |
| service_host | ardana-cp1-ir-compute0001-mgmt |
| service_id | 25 |
| state | up |
| status | enabled |
| vcpus | 2 |
| vcpus_used | 2 |
+-------------------------+--------------------------------------+

29.5.5 View a list of all running services using openstack compute service list #

openstack compute service list
+----+------------------+-----------------------+----------+---------+-------+------------+----------+
| Id | Binary | Host | Zone | Status | State | Updated_at | Disabled |
| | | | | | | | Reason |
+----+------------------+-----------------------+----------+---------+-------+------------+----------+
| 1 | nova-conductor | ardana-cp1-c1-m1-mgmt | internal | enabled | up | date:time | - |
| 7 | nova-conductor | " -cp1-c1-m2-mgmt | internal | enabled | up | date:time | - |
| 10 | nova-conductor | " -cp1-c1-m3-mgmt | internal | enabled | up | date:time | - |
| 13 | nova-scheduler | " -cp1-c1-m1-mgmt | internal | enabled | up | date:time | - |
| 16 | nova-scheduler | " -cp1-c1-m3-mgmt | internal | enabled | up | date:time | - |
| 19 | nova-scheduler | " -cp1-c1-m2-mgmt | internal | enabled | up | date:time | - |
| 25 | nova-compute | " -cp1-ir- | nova | enabled | up | date:time | - |
| | | compute0001-mgmt | | | | | |
+----+------------------+-----------------------+----------+---------+-------+------------+----------+

29.6 Troubleshooting ironic Installation #

Sometimes the openstack server create command does not succeed, and openstack server list shows output like the following:

ardana > openstack server list
+------------------+--------------+--------+------------+-------------+----------+
| ID | Name | Status | Task State | Power State | Networks |
+------------------+--------------+--------+------------+-------------+----------+
| ee08f82...624e5f | OpenSUSE42.3 | ERROR | - | NOSTATE | |
+------------------+--------------+--------+------------+-------------+----------+

Run the openstack server show <nova-node-id> and ironic node-show <ironic-node-id> commands to get more information about the error.

29.6.1 Error: No valid host was found. #

The error "No valid host was found. There are not enough hosts available." is typically seen when running openstack server create if there is a mismatch between the properties set on the node and the flavor used. For example, the output of the openstack server show command may look like this:

ardana > openstack server show ee08f82e-8920-4360-be51-a3f995624e5f

+------------------------+------------------------------------------------------------------------------+
| Property | Value |
+------------------------+------------------------------------------------------------------------------+
| OS-EXT-AZ:availability_zone | |
| OS-EXT-SRV-ATTR:host | - |
| OS-EXT-SRV-ATTR:hypervisor_hostname | - |
| OS-EXT-SRV-ATTR:instance_name | instance-00000001 |
| OS-EXT-STS:power_state | 0 |
| OS-EXT-STS:task_state | - |
| OS-EXT-STS:vm_state | error |
| OS-SRV-USG:launched_at | - |
| OS-SRV-USG:terminated_at | - |
| accessIPv4 | |
| accessIPv6 | |
| config_drive | |
| created | 2016-03-11T11:00:28Z |
| fault | {"message": "No valid host was found. There are not enough hosts |
| | available.", "code": 500, "details": " File \"/opt/stack/ |
| | venv/nova-20160308T002421Z/lib/python2.7/site-packages/nova/ |
| | conductor/manager.py\", line 739, in build_instances |
| | request_spec, filter_properties) |
| | File \"/opt/stack/venv/nova-20160308T002421Z/lib/python2.7/ |
| | site-packages/nova/scheduler/utils.py\", line 343, in wrapped |
| | return func(*args, **kwargs) |
| | File \"/opt/stack/venv/nova-20160308T002421Z/lib/python2.7/ |
| | site-packages/nova/scheduler/client/__init__.py\", line 52, |
| | in select_destinations context, request_spec, filter_properties) |
| | File \"/opt/stack/venv/nova-20160308T002421Z/lib/python2.7/ |
| | site-packages/nova/scheduler/client/__init__.py\",line 37,in __run_method |
| | return getattr(self.instance, __name)(*args, **kwargs) |
| | File \"/opt/stack/venv/nova-20160308T002421Z/lib/python2.7/ |
| | site-packages/nova/scheduler/client/query.py\", line 34, |
| | in select_destinations context, request_spec, filter_properties) |
| | File \"/opt/stack/venv/nova-20160308T002421Z/lib/python2.7/ |
| | site-packages/nova/scheduler/rpcapi.py\", line 120, in select_destinations |
| | request_spec=request_spec, filter_properties=filter_properties) |
| | File \"/opt/stack/venv/nova-20160308T002421Z/lib/python2.7/ |
| | site-packages/oslo_messaging/rpc/client.py\", line 158, in call |
| | retry=self.retry) |
| | File \"/opt/stack/venv/nova-20160308T002421Z/lib/python2.7/ |
| | site-packages/oslo_messaging/transport.py\", line 90, in _send |
| | timeout=timeout, retry=retry) |
| | File \"/opt/stack/venv/nova-20160308T002421Z/lib/python2.7/ |
| | site-packages/oslo_messaging/_drivers/amqpdriver.py\", line 462, in send |
| | retry=retry) |
| | File \"/opt/stack/venv/nova-20160308T002421Z/lib/python2.7/ |
| | site-packages/oslo_messaging/_drivers/amqpdriver.py\", line 453, in _send |
| | raise result |
| | ", "created": "2016-03-11T11:00:29Z"} |
| flavor | bmtest (645de08d-2bc6-43f1-8a5f-2315a75b1348) |
| hostId | |
| id | ee08f82e-8920-4360-be51-a3f995624e5f |
| image | opensuse (17e4915a-ada0-4b95-bacf-ba67133f39a7) |
| key_name | ironic_kp |
| metadata | {} |
| name | opensuse |
| os-extended-volumes:volumes_attached | [] |
| status | ERROR |
| tenant_id | d53bcaf15afb4cb5aea3adaedbaa60dd |
| updated | 2016-03-11T11:00:28Z |
| user_id | e580c645bfec4faeadef7dbd24aaf990 |
+------------------------+------------------------------------------------------------------------------+

You can find more information about the error by inspecting the log file at /var/log/nova/nova-scheduler.log, or by viewing the error location in the source files listed in the stack trace above.

To find the mismatch, compare the properties of the ironic node:

ardana > ironic node-show ea7246fd-e1d6-4637-9699-0b7c59c22e67

+------------------------+---------------------------------------------------------------------+
| Property | Value |
+------------------------+---------------------------------------------------------------------+
| target_power_state | None |
| extra | {} |
| last_error | None |
| updated_at | None |
| maintenance_reason | None |
| provision_state | available |
| clean_step | {} |
| uuid | ea7246fd-e1d6-4637-9699-0b7c59c22e67 |
| console_enabled | False |
| target_provision_state | None |
| provision_updated_at | None |
| maintenance | False |
| inspection_started_at | None |
| inspection_finished_at | None |
| power_state | None |
| driver | agent_ilo |
| reservation | None |
| properties | {u'memory_mb': 64000, u'local_gb': 99, u'cpus': 2, u'capabilities': |
| | u'boot_mode:bios,boot_option:local'} |
| instance_uuid | None |
| name | None |
| driver_info | {u'ilo_address': u'10.1.196.117', u'ilo_password': u'******', |
| | u'ilo_deploy_iso': u'b9499494-7db3-4448-b67f-233b86489c1f', |
| | u'ilo_username': u'Administrator'} |
| created_at | 2016-03-11T10:17:10+00:00 |
| driver_internal_info | {} |
| chassis_uuid | |
| instance_info | {} |
+------------------------+---------------------------------------------------------------------+

with the flavor characteristics:

ardana > openstack flavor show bmtest

+----------------------------+-------------------------------------------------------------------+
| Property | Value |
+----------------------------+-------------------------------------------------------------------+
| OS-FLV-DISABLED:disabled | False |
| OS-FLV-EXT-DATA:ephemeral | 0 |
| disk | 99 |
| extra_specs | {"capabilities:boot_option": "local", "cpu_arch": "x86_64", |
| | "capabilities:boot_mode": "bios"} |
| id | 645de08d-2bc6-43f1-8a5f-2315a75b1348 |
| name | bmtest |
| os-flavor-access:is_public | True |
| ram | 64000 |
| rxtx_factor | 1.0 |
| swap | |
| vcpus | 2 |
+----------------------------+-------------------------------------------------------------------+

In this instance, the problem is caused by the absence of the "cpu_arch": "x86_64" property on the ironic node. This can be resolved by updating the ironic node, adding the missing property:

ardana > ironic node-update ea7246fd-e1d6-4637-9699-0b7c59c22e67 \
  add properties/cpu_arch=x86_64

Then re-run the openstack server create command.
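The comparison described above can be sketched in Python. This is a simplified model, not nova's scheduler code: it assumes each capabilities:<name> extra spec must equal the matching entry in the node's comma-separated capabilities string, that every other extra spec (such as cpu_arch) must equal a top-level node property of the same name, and that ram, disk and vcpus must exactly match memory_mb, local_gb and cpus. The function names are hypothetical.

```python
from typing import Any, Dict, List


def parse_capabilities(caps: str) -> Dict[str, str]:
    """Turn 'boot_mode:bios,boot_option:local' into {'boot_mode': 'bios', ...}."""
    result = {}
    for item in filter(None, caps.split(",")):
        key, _, value = item.partition(":")
        result[key.strip()] = value.strip()
    return result


def find_mismatches(node_props: Dict[str, Any], flavor: Dict[str, Any]) -> List[str]:
    """List flavor requirements the node does not satisfy (simplified model)."""
    problems = []
    # Assumption: RAM, disk and vCPUs must match the node exactly.
    for flavor_key, node_key in (("ram", "memory_mb"), ("disk", "local_gb"),
                                 ("vcpus", "cpus")):
        if flavor.get(flavor_key) != node_props.get(node_key):
            problems.append(f"{flavor_key}: flavor wants {flavor.get(flavor_key)!r}, "
                            f"node has {node_props.get(node_key)!r}")
    node_caps = parse_capabilities(node_props.get("capabilities", ""))
    for spec, wanted in flavor.get("extra_specs", {}).items():
        if spec.startswith("capabilities:"):
            have = node_caps.get(spec.split(":", 1)[1])
        else:
            # Assumption: other extra specs map to top-level node properties.
            have = node_props.get(spec)
        if have != wanted:
            problems.append(f"{spec}: flavor wants {wanted!r}, node has {have!r}")
    return problems


# Values copied from the node-show and flavor show output above.
node = {"memory_mb": 64000, "local_gb": 99, "cpus": 2,
        "capabilities": "boot_mode:bios,boot_option:local"}
flavor = {"ram": 64000, "disk": 99, "vcpus": 2,
          "extra_specs": {"capabilities:boot_option": "local",
                          "cpu_arch": "x86_64",
                          "capabilities:boot_mode": "bios"}}
print(find_mismatches(node, flavor))
# ["cpu_arch: flavor wants 'x86_64', node has None"]
```

Once cpu_arch is added to the node properties, the same call returns an empty list.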

29.6.2 Node fails to deploy because it has timed out #

Possible cause: The neutron API session timed out before port creation was completed.

Resolution: Switch response time varies by vendor, so the value of url_timeout may need to be increased to allow for slower switch responses.

Check the ironic conductor log (/var/log/ironic/ironic-conductor.log) for ConnectTimeout errors while connecting to neutron for port creation. For example:

19-03-20 19:09:14.557 11556 ERROR ironic.conductor.utils [req-77f3a7b...1b10c5b - default default] Unexpected error while preparing to deploy to node 557316...84dbdfbe8b0: ConnectTimeout: Request to https://192.168.75.1:9696/v2.0/ports timed out
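One way to gauge how often this happens is to scan the conductor log for such lines. The sketch below assumes each error is written on a single line shaped like the example above; the regular expression and the function name find_connect_timeouts are illustrative, not part of ironic.

```python
import re
from typing import Iterable, List, Tuple

# Assumption: matches lines shaped like the example conductor log entry above.
PATTERN = re.compile(
    r"deploy to node (?P<node>[^\s:]+): ConnectTimeout: "
    r"Request to (?P<url>\S+) timed out"
)


def find_connect_timeouts(lines: Iterable[str]) -> List[Tuple[str, str]]:
    """Return (node, url) pairs for neutron ConnectTimeout errors."""
    hits = []
    for line in lines:
        match = PATTERN.search(line)
        if match:
            hits.append((match.group("node"), match.group("url")))
    return hits
```

For example, open /var/log/ironic/ironic-conductor.log and pass the file object to find_connect_timeouts; each hit is a (node, URL) pair.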

Use the following steps to increase the value of url_timeout.

Log in to the deployer node.

Edit ./roles/ironic-common/defaults/main.yml, increasing the value of url_timeout:

ardana > cd /var/lib/ardana/scratch/ansible/next/ardana/ansible
ardana > vi ./roles/ironic-common/defaults/main.yml

Increase the value of the url_timeout parameter in the ironic_neutron: section. Increase the parameter from the default (60 seconds) to 120, and then in increments of 60 seconds until the node deploys successfully.

Reconfigure ironic:

ardana > ansible-playbook -i hosts/verb_hosts ironic-reconfigure.yml
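The escalation rule in the steps above (start from the 60-second default, try 120, then add 60 seconds per attempt) can be written out as a small generator. The upper bound max_timeout is a hypothetical stopping point added for this sketch; the documentation does not define one.

```python
from typing import Iterator


def url_timeout_candidates(first: int = 120, step: int = 60,
                           max_timeout: int = 300) -> Iterator[int]:
    """Yield url_timeout values (seconds) to try after the 60 s default fails.

    max_timeout is a hypothetical cap for this sketch, not an ironic setting.
    """
    value = first
    while value <= max_timeout:
        yield value
        value += step


print(list(url_timeout_candidates()))  # [120, 180, 240, 300]
```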

29.6.3 Deployment to a node fails and the power_state column for the node in the "ironic node-list" output is "None" #

Possible cause: The IPMI commands to the node take longer than expected to change the power state of the server.

Resolution: Check whether the node power state can be changed using the following command:

ardana > ironic node-set-power-state $NODEUUID on

If the above command succeeds and the power_state column is updated correctly, then the following steps are required to increase the power sync interval time.

On the first controller, reconfigure ironic to increase the power sync interval time. In the example below, it is set to 120 seconds. This value may have to be tuned based on the setup.

Go to the ~/openstack/my_cloud/config/ironic/ directory and edit ironic-conductor.conf.j2 to set the sync_power_state_interval value:

[conductor]
sync_power_state_interval = 120

Save the file and then run the following playbooks:

ardana > cd ~/openstack/ardana/ansible
ardana > ansible-playbook -i hosts/localhost config-processor-run.yml
ardana > ansible-playbook -i hosts/localhost ready-deployment.yml
ardana > cd ~/scratch/ansible/next/ardana/ansible
ardana > ansible-playbook -i hosts/verb_hosts ironic-reconfigure.yml
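To measure how long a power change actually takes, for example after running the ironic node-set-power-state command above, you could poll the node until it reports the target state. In this sketch, get_power_state is a hypothetical hook you would supply yourself (for instance by parsing ironic node-show output), and the "power on" target string and timeout values are assumptions to adjust for your hardware.

```python
import time
from typing import Callable, Optional


def wait_for_power_state(get_power_state: Callable[[], Optional[str]],
                         target: str = "power on",
                         timeout: float = 120.0,
                         interval: float = 5.0,
                         sleep: Callable[[float], None] = time.sleep,
                         clock: Callable[[], float] = time.monotonic) -> float:
    """Poll get_power_state() until it returns target; return elapsed seconds.

    get_power_state is a caller-supplied (hypothetical) hook. Raises
    TimeoutError if the target state is not seen within timeout seconds.
    """
    start = clock()
    while True:
        if get_power_state() == target:
            return clock() - start
        if clock() - start >= timeout:
            raise TimeoutError(f"node did not reach {target!r} within {timeout}s")
        sleep(interval)
```

The sleep and clock parameters exist so the loop can be exercised without real hardware; comparing the elapsed time with the configured interval may help you choose a suitable value.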

29.6.4 Error Downloading Image #

If you encounter the error below during the deployment:

"u'message': u'Error downloading image: Download of image id 77700...96551 failed: Image download failed for all URLs.', u'code': 500, u'type': u'ImageDownloadError', u'details': u'Download of image id 77700b53-9e15-406c-b2d5-13e7d9b96551 failed: Image download failed for all URLs.'"

in the iLO web interface, go to the Single Sign-On Settings on the Security page and change the Single Sign-On Trust Mode setting from the default of "Trust None (SSO disabled)" to "Trust by Certificate".

29.6.5 Using node-inspection can cause temporary claim of IP addresses #

Possible cause: Running node-inspection on a node discovers all the NIC ports, including NICs that do not have any connectivity. This causes a temporary consumption of network IPs and increased usage of the allocated quota. As a result, other nodes are deprived of IP addresses and deployments can fail.

Resolution: Add the node properties manually instead of using the inspection tool.
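When adding properties manually, you can generate the ironic node-update command from a dictionary of values, using the same add properties/<name>=<value> syntax shown in 29.6.1. The helper build_node_update_cmd is hypothetical, it assumes the client accepts several path=value pairs in one call, and the property values below are placeholders taken from the example node that you must replace with your hardware's real values.

```python
import shlex
from typing import Dict, List, Union


def build_node_update_cmd(node_uuid: str,
                          properties: Dict[str, Union[str, int]]) -> List[str]:
    """Build an `ironic node-update <uuid> add properties/<k>=<v> ...` argv list."""
    cmd = ["ironic", "node-update", node_uuid, "add"]
    for key, value in properties.items():
        cmd.append(f"properties/{key}={value}")
    return cmd


# Placeholder values taken from the example node in 29.6.1.
argv = build_node_update_cmd(
    "ea7246fd-e1d6-4637-9699-0b7c59c22e67",
    {"cpus": 2, "memory_mb": 64000, "local_gb": 99, "cpu_arch": "x86_64"},
)
print(shlex.join(argv))  # shell-safe command line to review before running
```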

Note: Upgrade ipmitool to version 1.8.15 or later; earlier versions may not return detailed information about the NICs for node-inspection.
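To check an installed ipmitool against the 1.8.15 minimum, compare version components numerically rather than as strings (as strings, "1.8.9" would sort after "1.8.15"). The sketch assumes the version is reported in text such as "ipmitool version 1.8.18", for example the output of ipmitool -V; the function names are hypothetical.

```python
import re
from typing import Tuple

MINIMUM = (1, 8, 15)


def parse_ipmitool_version(output: str) -> Tuple[int, ...]:
    """Extract a version tuple from text like 'ipmitool version 1.8.18' (format assumed)."""
    match = re.search(r"version\s+(\d+(?:\.\d+)*)", output)
    if not match:
        raise ValueError(f"unrecognised ipmitool output: {output!r}")
    return tuple(int(part) for part in match.group(1).split("."))


def ipmitool_is_recent_enough(output: str) -> bool:
    """True if the reported version is at least 1.8.15."""
    return parse_ipmitool_version(output) >= MINIMUM


print(ipmitool_is_recent_enough("ipmitool version 1.8.9"))  # False
```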

29.6.6 Node permanently stuck in deploying state #

Possible causes:

The ironic conductor service associated with the node may have gone down.

There may be a properties mismatch, or the MAC address registered for the node may be incorrect.

Resolution: To recover from this condition, set the provision state of the node to Error and maintenance to True. Then delete the node and register it again.

29.6.7 The NICs in the baremetal node should come first in boot order #

Possible cause: By default, the boot order of a bare metal node is set to NIC1, HDD, NIC2. If NIC1 fails, the node boots from the HDD and provisioning fails.

Resolution: Set the boot order of the bare metal node so that all the NICs come before the hard disk: NIC1, NIC2, …, HDD.
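The boot order rule amounts to a simple check: no NIC may appear after the first hard disk. The device labels below follow the NIC1/HDD naming used in this section; how you read the actual boot order from the server (for example, from the BIOS or iLO) is outside this sketch, and the function name is hypothetical.

```python
from typing import Sequence


def nics_before_disk(boot_order: Sequence[str]) -> bool:
    """True if every NIC entry comes before the first HDD entry."""
    seen_disk = False
    for device in boot_order:
        label = device.upper()
        if label.startswith("HDD"):
            seen_disk = True
        elif label.startswith("NIC") and seen_disk:
            return False  # a NIC is listed after the disk
    return True


print(nics_before_disk(["NIC1", "HDD", "NIC2"]))  # False (default order)
print(nics_before_disk(["NIC1", "NIC2", "HDD"]))  # True (recommended order)
```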

29.6.8 Increase in the number of nodes can cause power commands to fail #

Possible cause: ironic periodically performs a power state sync with all the bare metal nodes. As the number of nodes increases, ironic does not get sufficient time to perform power operations.

Resolution: The following procedure increases sync_power_state_interval:

Edit the file ~/openstack/my_cloud/config/ironic/ironic-conductor.conf.j2 and navigate to the [conductor] section.

Increase the value of sync_power_state_interval. For example, for 100 nodes, set sync_power_state_interval = 90 and save the file.

Execute the following set of commands to reconfigure ironic:

ardana > cd ~/openstack/ardana/ansible
ardana > ansible-playbook -i hosts/localhost config-processor-run.yml
ardana > ansible-playbook -i hosts/localhost ready-deployment.yml
ardana > cd ~/scratch/ansible/next/ardana/ansible
ardana > ansible-playbook -i hosts/verb_hosts ironic-reconfigure.yml

29.6.9 DHCP succeeds with PXE but times out with iPXE #

If iPXE reports the DHCP error "No configuration methods succeeded" right after the embedded NIC firmware has completed DHCP successfully, there may be an issue with the Spanning Tree Protocol configuration on the switch.

To avoid this error, Rapid Spanning Tree Protocol needs to be enabled on the switch. If this is not an option due to conservative loop detection strategies, use the steps outlined below to install the iPXE binary with increased DHCP timeouts.

Clone the iPXE source code:

tux > git clone git://git.ipxe.org/ipxe.git
tux > cd ipxe/src

Modify lines 22-25 in file