12 Alternative Configurations

In SUSE OpenStack Cloud 9 there are alternative configurations that we recommend for specific purposes.

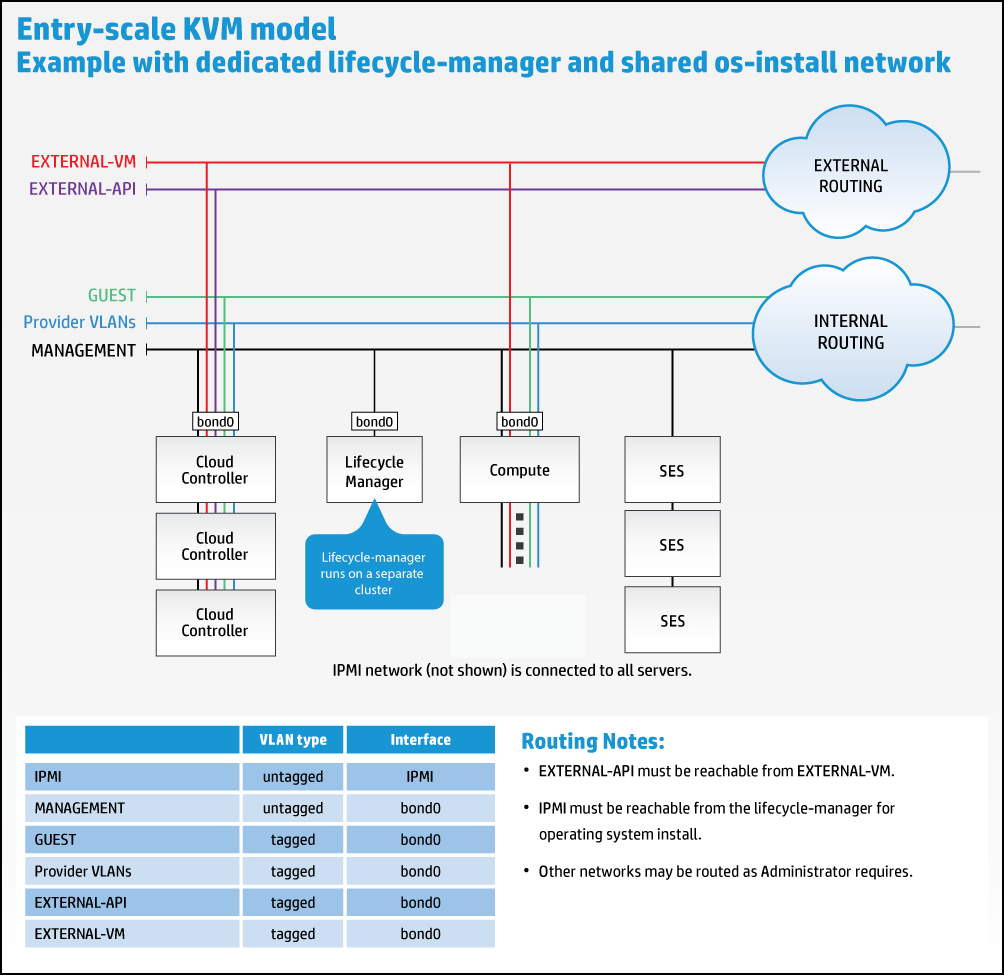

12.1 Using a Dedicated Cloud Lifecycle Manager Node

All of the included example configurations host the Cloud Lifecycle Manager on the first Control Node. It is also possible to deploy this service on a dedicated node. One use case for a dedicated Cloud Lifecycle Manager is testing the deployment of different configurations without having to reinstall the first server. Some administrators also prefer the additional security of keeping all of the configuration data on a server separate from those that users of the cloud connect to (although all of the data can be encrypted and SSH keys can be password protected).

Here is a graphical representation of this setup:

12.1.1 Specifying a dedicated Cloud Lifecycle Manager in your input model

To specify a dedicated Cloud Lifecycle Manager in your input model, make the following edits to your configuration files.

The indentation in each of the input files is significant, and incorrect indentation will cause errors. Use the existing content in each of these files as a reference when adding content for your Cloud Lifecycle Manager.
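
Because YAML does not allow tab characters in indentation, one quick sanity check before running the configuration processor is to scan an input file for tabs in leading whitespace. The following is a minimal sketch only: `find_tab_indents` is a hypothetical helper, not part of SUSE OpenStack Cloud, and it catches just this one class of indentation mistake.

```python
def find_tab_indents(text):
    """Return 1-based line numbers whose leading whitespace contains a tab."""
    bad = []
    for number, line in enumerate(text.splitlines(), start=1):
        # Everything before the first non-whitespace character is indentation.
        indent = line[: len(line) - len(line.lstrip())]
        if "\t" in indent:
            bad.append(number)
    return bad


# Hypothetical sample: the third line is indented with a tab.
sample = "clusters:\n  - name: cluster0\n\tcluster-prefix: c0\n"
print(find_tab_indents(sample))
```

A clean file yields an empty list. The configuration processor remains the authoritative check for the input model.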

- Update `control_plane.yml` to add the Cloud Lifecycle Manager.
- Update `server_roles.yml` to add the Cloud Lifecycle Manager role.
- Update `net_interfaces.yml` to add the interface definition for the Cloud Lifecycle Manager.
- Create a `disks_lifecycle_manager.yml` file to define the disk layout for the Cloud Lifecycle Manager.
- Update `servers.yml` to add the dedicated Cloud Lifecycle Manager node.

`control_plane.yml`: The snippet below shows the addition of a single-node cluster to the control plane to host the Cloud Lifecycle Manager service. Note that, in addition to adding the new cluster, you also have to remove the Cloud Lifecycle Manager component from `cluster1` in the examples:

```yaml
clusters:
  - name: cluster0
    cluster-prefix: c0
    server-role: LIFECYCLE-MANAGER-ROLE
    member-count: 1
    allocation-policy: strict
    service-components:
      - lifecycle-manager
      - ntp-client
  - name: cluster1
    cluster-prefix: c1
    server-role: CONTROLLER-ROLE
    member-count: 3
    allocation-policy: strict
    service-components:
      - lifecycle-manager   # remove this entry from cluster1, as noted above
      - ntp-server
      - tempest
```

This specifies a single node of role `LIFECYCLE-MANAGER-ROLE` hosting the Cloud Lifecycle Manager.
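
The requirement that the Cloud Lifecycle Manager component moves to the new cluster, rather than being listed in both, can be expressed as a small check. This is a sketch under stated assumptions: the clusters are represented as plain Python dictionaries (abbreviated from the snippet), and `clusters_hosting` is a hypothetical helper name.

```python
def clusters_hosting(clusters, component):
    """Return the names of clusters whose service-components list the component."""
    return [
        cluster["name"]
        for cluster in clusters
        if component in cluster.get("service-components", [])
    ]


# Hypothetical in-memory form of the clusters list after the edit,
# with lifecycle-manager already removed from cluster1.
clusters = [
    {"name": "cluster0", "service-components": ["lifecycle-manager", "ntp-client"]},
    {"name": "cluster1", "service-components": ["ntp-server", "tempest"]},
]

hosts = clusters_hosting(clusters, "lifecycle-manager")
print(hosts)
```

If the result lists more than one cluster, the component has not yet been removed from `cluster1`.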

`server_roles.yml`: The snippet below shows the insertion of the new server role definition:

```yaml
server-roles:

  - name: LIFECYCLE-MANAGER-ROLE
    interface-model: LIFECYCLE-MANAGER-INTERFACES
    disk-model: LIFECYCLE-MANAGER-DISKS

  - name: CONTROLLER-ROLE
```

This defines a new server role which references a new interface-model and disk-model to be used when configuring the server.
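
Since the new role only names an interface-model and a disk-model, both must be defined elsewhere in the input model. The sketch below illustrates that dependency with plain Python data; `unresolved_references` is a hypothetical helper and not how the configuration processor is implemented.

```python
def unresolved_references(role, interface_models, disk_models):
    """Return the keys of a server role that point at undefined models."""
    missing = []
    if role["interface-model"] not in interface_models:
        missing.append("interface-model")
    if role["disk-model"] not in disk_models:
        missing.append("disk-model")
    return missing


role = {
    "name": "LIFECYCLE-MANAGER-ROLE",
    "interface-model": "LIFECYCLE-MANAGER-INTERFACES",
    "disk-model": "LIFECYCLE-MANAGER-DISKS",
}

# Before the interface and disk models exist, both references dangle.
before = unresolved_references(role, set(), set())
# Once both models are defined, nothing is missing.
after = unresolved_references(
    role, {"LIFECYCLE-MANAGER-INTERFACES"}, {"LIFECYCLE-MANAGER-DISKS"}
)
print(before, after)
```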

`net_interfaces.yml`: The snippet below shows the insertion of the network interface information:

```yaml
- name: LIFECYCLE-MANAGER-INTERFACES
  network-interfaces:
    - name: BOND0
      device:
        name: bond0
      bond-data:
        options:
          mode: active-backup
          miimon: 200
          primary: hed3
        provider: linux
        devices:
          - name: hed3
          - name: hed4
      network-groups:
        - MANAGEMENT
```

This assumes that the server uses the same physical networking layout as the other servers in the example.
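
In the bond definition, the `primary` option names one of the devices listed under `devices`. If your hardware uses different device names, both places need to change together. A minimal sketch of that consistency check, using a dictionary that mirrors the snippet (`primary_is_member` is a hypothetical helper):

```python
def primary_is_member(interface):
    """True if the bond's primary option names one of its member devices."""
    bond = interface["bond-data"]
    members = {device["name"] for device in bond["devices"]}
    return bond["options"]["primary"] in members


bond0 = {
    "name": "BOND0",
    "bond-data": {
        "options": {"mode": "active-backup", "miimon": 200, "primary": "hed3"},
        "provider": "linux",
        "devices": [{"name": "hed3"}, {"name": "hed4"}],
    },
}
print(primary_is_member(bond0))
```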

`disks_lifecycle_manager.yml`: In the examples, disk-models are provided as separate files (this is just a convention, not a limitation), so the following should be added as a new file named `disks_lifecycle_manager.yml`:

```yaml
---
  product:
    version: 2

  disk-models:
  - name: LIFECYCLE-MANAGER-DISKS
    # Disk model to be used for Cloud Lifecycle Manager nodes
    # /dev/sda_root is used as a volume group for /, /var/log and /var/crash
    # sda_root is a templated value to align with whatever partition is really used
    # This value is checked in os config and replaced by the partition actually used
    # on sda e.g. sda1 or sda5

    volume-groups:
      - name: ardana-vg
        physical-volumes:
          - /dev/sda_root

        logical-volumes:
        # The policy is not to consume 100% of the space of each volume group.
        # 5% should be left free for snapshots and to allow for some flexibility.
          - name: root
            size: 80%
            fstype: ext4
            mount: /
          - name: crash
            size: 15%
            mount: /var/crash
            fstype: ext4
            mkfs-opts: -O large_file
        consumer:
          name: os
```
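
The comments in the disk model state a policy of leaving 5% of each volume group free. With the sizes shown, 80% + 15% = 95%, which is exactly at that limit. If you change the sizes or add logical volumes, the arithmetic can be rechecked with a sketch like the following (`total_percent` is a hypothetical helper, and it assumes every size is given as a percentage):

```python
def total_percent(logical_volumes):
    """Sum the percentage sizes of a volume group's logical volumes."""
    return sum(int(volume["size"].rstrip("%")) for volume in logical_volumes)


logical_volumes = [
    {"name": "root", "size": "80%"},
    {"name": "crash", "size": "15%"},
]

used = total_percent(logical_volumes)
# The policy in the disk model comments leaves 5% free, so at most 95% is used.
print(used, used <= 95)
```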

`servers.yml`: The snippet below shows the insertion of an additional server used for hosting the Cloud Lifecycle Manager. Provide the address information here for the server you are running on, that is, the node where you have installed the SUSE OpenStack Cloud ISO.

```yaml
servers:
  # NOTE: Addresses of servers need to be changed to match your environment.
  #
  #       Add additional servers as required

  # Lifecycle-manager
  - id: lifecycle-manager
    ip-addr: YOUR IP ADDRESS HERE
    role: LIFECYCLE-MANAGER-ROLE
    server-group: RACK1
    nic-mapping: HP-SL230-4PORT
    mac-addr: 8c:dc:d4:b5:c9:e0
    # ipmi information is not needed

  # Controllers
  - id: controller1
    ip-addr: 192.168.10.3
    role: CONTROLLER-ROLE
```

With a stand-alone deployer, the OpenStack CLI and other clients will not be installed automatically. You need to install OpenStack clients to get the desired OpenStack capabilities. For more information and installation instructions, consult Chapter 40, Installing OpenStack Clients.
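
The `ip-addr` placeholder in the server entry must be replaced with a real address before the model is used. A minimal sketch of a pre-flight check, assuming the server entry is available as a Python dictionary; `server_problems` is a hypothetical helper, and the address `192.168.10.2` is only an illustrative value.

```python
import ipaddress
import re

# Six colon-separated pairs of hexadecimal digits, as in the example entry.
MAC_RE = re.compile(r"^[0-9a-f]{2}(:[0-9a-f]{2}){5}$", re.IGNORECASE)


def server_problems(server):
    """Return a list of human-readable problems found in one server entry."""
    problems = []
    try:
        ipaddress.ip_address(server["ip-addr"])
    except ValueError:
        problems.append("ip-addr is not a valid IP address")
    if not MAC_RE.match(server.get("mac-addr", "")):
        problems.append("mac-addr is not a valid MAC address")
    return problems


entry = {
    "id": "lifecycle-manager",
    "ip-addr": "YOUR IP ADDRESS HERE",
    "role": "LIFECYCLE-MANAGER-ROLE",
    "mac-addr": "8c:dc:d4:b5:c9:e0",
}
print(server_problems(entry))
# Hypothetical address, for illustration only.
print(server_problems({**entry, "ip-addr": "192.168.10.2"}))
```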

12.2 Configuring SUSE OpenStack Cloud without DVR

By default in the KVM model, the neutron service utilizes distributed routing (DVR). This is the recommended setup because it allows for high availability. However, if you would like to disable this feature, here are the steps to achieve this.

On your Cloud Lifecycle Manager, make the following changes:

1. In the `~/openstack/my_cloud/config/neutron/neutron.conf.j2` file, change the line below from:

   ```
   router_distributed = {{ router_distributed }}
   ```

   to:

   ```
   router_distributed = False
   ```

2. In the `~/openstack/my_cloud/config/neutron/ml2_conf.ini.j2` file, change the line below from:

   ```
   enable_distributed_routing = {{ enable_distributed_routing }}
   ```

   to:

   ```
   enable_distributed_routing = False
   ```

3. In the `~/openstack/my_cloud/config/neutron/l3_agent.ini.j2` file, change the line below from:

   ```
   agent_mode = {{ neutron_l3_agent_mode }}
   ```

   to:

   ```
   agent_mode = legacy
   ```

4. In the `~/openstack/my_cloud/definition/data/control_plane.yml` file, remove the following values from the Compute resource `service-components` list:

   ```
   - neutron-l3-agent
   - neutron-metadata-agent
   ```

   Warning: If you fail to remove the above values from the Compute resource `service-components` list in `~/openstack/my_cloud/definition/data/control_plane.yml`, you will end up with routers (non-DVR routers) being deployed on the compute host, even though the Cloud Lifecycle Manager is configured for non-distributed routers.

5. Commit your changes to your local git repository:

   ```
   ardana > cd ~/openstack/ardana/ansible
   ardana > git add -A
   ardana > git commit -m "My config or other commit message"
   ```

6. Run the configuration processor:

   ```
   ardana > cd ~/openstack/ardana/ansible
   ardana > ansible-playbook -i hosts/localhost config-processor-run.yml
   ```

7. Run the ready deployment playbook:

   ```
   ardana > cd ~/openstack/ardana/ansible
   ardana > ansible-playbook -i hosts/localhost ready-deployment.yml
   ```

8. Continue installation. More information on cloud deployments is available in Chapter 19, Overview.
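
Each of the three template edits in this procedure replaces the value on a single `key = value` line with a fixed value. The sketch below shows that transformation on an in-memory string; `set_option` is a hypothetical helper, the `[DEFAULT]` section header is an assumed placement, and the sketch does not touch any files on the Cloud Lifecycle Manager.

```python
import re


def set_option(text, key, value):
    """Replace the value on the first 'key = ...' line, leaving other lines alone."""
    pattern = re.compile(
        r"^([ \t]*" + re.escape(key) + r"[ \t]*=[ \t]*).*$", re.MULTILINE
    )
    # A function replacement avoids any backslash interpretation in the value.
    return pattern.sub(lambda match: match.group(1) + value, text, count=1)


# Hypothetical excerpt of a template; the section header is an assumption.
template = "[DEFAULT]\nrouter_distributed = {{ router_distributed }}\n"
edited = set_option(template, "router_distributed", "False")
print(edited)
```

The same call with `agent_mode` and `legacy` covers the `l3_agent.ini.j2` change.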

12.3 Configuring SUSE OpenStack Cloud with Provider VLANs and Physical Routers Only

Another option for configuring neutron is to use provider VLANs and physical routers only. Here are the steps to achieve this.

On your Cloud Lifecycle Manager, make the following changes:

1. In the `~/openstack/my_cloud/config/neutron/neutron.conf.j2` file, change the line below from:

   ```
   router_distributed = {{ router_distributed }}
   ```

   to:

   ```
   router_distributed = False
   ```

2. In the `~/openstack/my_cloud/config/neutron/ml2_conf.ini.j2` file, change the line below from:

   ```
   enable_distributed_routing = {{ enable_distributed_routing }}
   ```

   to:

   ```
   enable_distributed_routing = False
   ```

3. In the `~/openstack/my_cloud/config/neutron/dhcp_agent.ini.j2` file, change the line below from:

   ```
   enable_isolated_metadata = {{ neutron_enable_isolated_metadata }}
   ```

   to:

   ```
   enable_isolated_metadata = True
   ```

4. In the `~/openstack/my_cloud/definition/data/control_plane.yml` file, remove the following values from the Compute resource `service-components` list:

   ```
   - neutron-l3-agent
   - neutron-metadata-agent
   ```
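
The final step, removing two entries from the Compute resource's `service-components` list, can be pictured as a simple list filter. This is a sketch only: the resource is an abbreviated, hypothetical dictionary (the entries other than the two neutron agents are illustrative), and `drop_components` is a hypothetical helper.

```python
def drop_components(resource, names):
    """Return a copy of a resource with the named service components removed."""
    kept = [c for c in resource["service-components"] if c not in names]
    return {**resource, "service-components": kept}


# Hypothetical, abbreviated Compute resource; only the two neutron agents
# come from the procedure, the remaining entries are illustrative.
compute = {
    "name": "compute",
    "service-components": [
        "ntp-client",
        "nova-compute",
        "neutron-l3-agent",
        "neutron-metadata-agent",
    ],
}

trimmed = drop_components(compute, {"neutron-l3-agent", "neutron-metadata-agent"})
print(trimmed["service-components"])
```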

12.4 Considerations When Installing Two Systems on One Subnet

If you wish to install two separate SUSE OpenStack Cloud 9 systems using a single subnet, you will need to consider the following notes.

The `ip_cluster` service includes the `keepalived` daemon, which maintains virtual IPs (VIPs) on cluster nodes. To maintain VIPs, it communicates between cluster nodes using VRRP.

A VRRP virtual routerid identifies a particular VRRP cluster and must be unique within a subnet. If two VRRP clusters on the same subnet use the same virtual routerid, their VRRP traffic clashes. In that case, the VIPs are unlikely to be up or pingable, and you are likely to see the following signature in your `/etc/keepalived/keepalived.log`:

```
Dec 16 15:43:43 ardana-cp1-c1-m1-mgmt Keepalived_vrrp[2218]: ip address associated with VRID not present in received packet : 10.2.1.11
Dec 16 15:43:43 ardana-cp1-c1-m1-mgmt Keepalived_vrrp[2218]: one or more VIP associated with VRID mismatch actual MASTER advert
Dec 16 15:43:43 ardana-cp1-c1-m1-mgmt Keepalived_vrrp[2218]: bogus VRRP packet received on br-bond0 !!!
Dec 16 15:43:43 ardana-cp1-c1-m1-mgmt Keepalived_vrrp[2218]: VRRP_Instance(VI_2) ignoring received advertisment...
```
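
To look for this signature in a longer log, you can filter for the message fragments shown above. The following is a minimal sketch that works on log text already read into a string; `vrid_clash_lines` and the `SIGNATURES` tuple are hypothetical names, and the fragments are taken from the sample output rather than from any keepalived specification.

```python
# Message fragments copied from the sample log output.
SIGNATURES = (
    "ip address associated with VRID not present in received packet",
    "VIP associated with VRID mismatch actual MASTER advert",
    "bogus VRRP packet received",
)


def vrid_clash_lines(log_text):
    """Return the log lines that contain any of the clash signatures."""
    return [
        line
        for line in log_text.splitlines()
        if any(signature in line for signature in SIGNATURES)
    ]


log = (
    "Dec 16 15:43:43 ardana-cp1-c1-m1-mgmt Keepalived_vrrp[2218]: "
    "bogus VRRP packet received on br-bond0 !!!\n"
    "Dec 16 15:43:43 ardana-cp1-c1-m1-mgmt Keepalived_vrrp[2218]: "
    "VRRP_Instance(VI_2) ignoring received advertisment...\n"
)
matches = vrid_clash_lines(log)
print(len(matches))
```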

To resolve this issue, our recommendation is to install your separate SUSE OpenStack Cloud 9 systems with VRRP traffic on different subnets.

If this is not possible, you may also assign a unique routerid to your separate SUSE OpenStack Cloud 9 system by changing the `keepalived_vrrp_offset` service configurable. The routerid is currently derived using the `keepalived_vrrp_index`, which comes from a configuration processor variable, and the `keepalived_vrrp_offset`.

For example,

1. Log in to your Cloud Lifecycle Manager.

2. Edit your `~/openstack/my_cloud/config/keepalived/defaults.yml` file and change the value of the following line:

   ```
   keepalived_vrrp_offset: 0
   ```

   Change the offset value to a number that uniquely identifies a separate VRRP cluster. For example:

   - `keepalived_vrrp_offset: 0` for the 1st VRRP cluster on this subnet.
   - `keepalived_vrrp_offset: 1` for the 2nd VRRP cluster on this subnet.
   - `keepalived_vrrp_offset: 2` for the 3rd VRRP cluster on this subnet.

   Important: The files in the `~/openstack/my_cloud/config/` directory are symlinks to the `~/openstack/ardana/ansible/` directory. For example:

   ```
   ardana > ls -al ~/openstack/my_cloud/config/keepalived/defaults.yml
   lrwxrwxrwx 1 stack stack 55 May 24 20:38 /var/lib/ardana/openstack/my_cloud/config/keepalived/defaults.yml -> ../../../ardana/ansible/roles/keepalived/defaults/main.yml
   ```

   If you are using a tool like `sed` to make edits to files in this directory, you might break the symbolic link and create a new copy of the file. To maintain the link, you will need to force `sed` to follow the link:

   ```
   ardana > sed -i --follow-symlinks \
     's$keepalived_vrrp_offset: 0$keepalived_vrrp_offset: 2$' \
     ~/openstack/my_cloud/config/keepalived/defaults.yml
   ```

   Alternatively, directly edit the target of the link, `~/openstack/ardana/ansible/roles/keepalived/defaults/main.yml`.

3. Commit your configuration to the Git repository (see Chapter 22, Using Git for Configuration Management), as follows:

   ```
   ardana > cd ~/openstack/ardana/ansible
   ardana > git add -A
   ardana > git commit -m "My config or other commit message"
   ```

4. Run the configuration processor with this command:

   ```
   ardana > cd ~/openstack/ardana/ansible
   ardana > ansible-playbook -i hosts/localhost config-processor-run.yml
   ```

5. Use the playbook below to create a deployment directory:

   ```
   ardana > cd ~/openstack/ardana/ansible
   ardana > ansible-playbook -i hosts/localhost ready-deployment.yml
   ```

6. If you are making this change after your initial install, run the following reconfigure playbook to make this change in your environment:

   ```
   ardana > cd ~/scratch/ansible/next/ardana/ansible/
   ardana > ansible-playbook -i hosts/verb_hosts FND-CLU-reconfigure.yml
   ```
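
The note about symbolic links in the procedure above applies to scripted edits in general: a tool that writes a new file and renames it over the link replaces the link, whereas resolving the link first and rewriting its target keeps it intact. The sketch below demonstrates the second approach in a temporary directory on a POSIX system. It does not touch the real configuration tree, and `edit_through_symlink` is a hypothetical helper.

```python
import os
import tempfile


def edit_through_symlink(link_path, old, new):
    """Rewrite the file a symlink points to, leaving the link itself in place."""
    target = os.path.realpath(link_path)
    with open(target, encoding="utf-8") as handle:
        text = handle.read()
    with open(target, "w", encoding="utf-8") as handle:
        handle.write(text.replace(old, new))


with tempfile.TemporaryDirectory() as workdir:
    # Stand-ins for roles/keepalived/defaults/main.yml and the link to it.
    target = os.path.join(workdir, "main.yml")
    link = os.path.join(workdir, "defaults.yml")
    with open(target, "w", encoding="utf-8") as handle:
        handle.write("keepalived_vrrp_offset: 0\n")
    os.symlink(target, link)

    edit_through_symlink(
        link, "keepalived_vrrp_offset: 0", "keepalived_vrrp_offset: 2"
    )

    still_link = os.path.islink(link)
    with open(target, encoding="utf-8") as handle:
        content = handle.read()

print(still_link, content.strip())
```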