9 Example Configurations

The SUSE OpenStack Cloud 9 system ships with a collection of pre-qualified example configurations. These are designed to help you to get up and running quickly with a minimum number of configuration changes.

The SUSE OpenStack Cloud input model allows a wide variety of configuration parameters that can, at first glance, appear daunting. The example configurations are designed to simplify this process by providing pre-built and pre-qualified examples that need only a minimum number of modifications to get started.

9.1 SUSE OpenStack Cloud Example Configurations

This section briefly describes the various example configurations and their capabilities. It also describes in detail, for the mid-size example, how you can adapt the input model to work in your environment.

The following pre-qualified examples are shipped with SUSE OpenStack Cloud 9:

| Name | Location |
|---|---|
| Section 9.3.1, “Entry-Scale Cloud” | ~/openstack/examples/entry-scale-kvm |
| Section 9.3.2, “Entry Scale Cloud with Metering and Monitoring Services” | ~/openstack/examples/entry-scale-kvm-mml |
| Section 9.4.1, “Single-Region Entry-Scale Cloud with a Mix of KVM and ESX Hypervisors” | ~/openstack/examples/entry-scale-kvm-esx |
| Section 9.4.2, “Single-Region Entry-Scale Cloud with Metering and Monitoring Services, and a Mix of KVM and ESX Hypervisors” | ~/openstack/examples/entry-scale-kvm-esx-mml |
| Section 9.5.1, “Entry-scale swift Model” | ~/openstack/examples/entry-scale-swift |
| Section 9.6.1, “Entry-Scale Cloud with Ironic Flat Network” | ~/openstack/examples/entry-scale-ironic-flat-network |
| Section 9.6.2, “Entry-Scale Cloud with Ironic Multi-Tenancy” | ~/openstack/examples/entry-scale-ironic-multi-tenancy |
| Section 9.3.3, “Single-Region Mid-Size Model” | ~/openstack/examples/mid-scale-kvm |

The entry-scale systems are designed to provide an entry-level solution that can be scaled from a small number of nodes to a moderately high node count (approximately 100 compute nodes, for example).

In the mid-scale model, the cloud control plane is subdivided into a number of dedicated service clusters to provide more processing power for individual control plane elements. This enables a greater number of resources to be supported (compute nodes, swift object servers). This model also shows how a segmented network can be expressed in the SUSE OpenStack Cloud model.

9.2 Alternative Configurations

SUSE OpenStack Cloud 9 supports alternative configurations that we recommend for specific purposes. This section outlines them.

The ironic multi-tenancy feature uses neutron to manage the tenant networks. The interaction between neutron and the physical switch is facilitated by neutron's Modular Layer 2 (ML2) plugin. The ML2 plugin supports drivers for interacting with different networks, because each vendor may have its own extensions. These drivers are referred to as neutron ML2 mechanism drivers, or simply mechanism drivers.

The ironic multi-tenancy feature has been validated using the OpenStack genericswitch mechanism driver. However, if your physical switch requires a different mechanism driver, you must update the input model accordingly. To update the input model with a custom ML2 mechanism driver, specify the relevant information in the multi_tenancy_switch_config: section of the data/ironic/ironic_config.yml file.

9.3 KVM Examples

9.3.1 Entry-Scale Cloud

This example deploys an entry-scale cloud.

- Control Plane

Cluster1 - Three nodes of type CONTROLLER-ROLE run the core OpenStack services, such as keystone, nova API, glance API, neutron API, horizon, and heat API.

- Cloud Lifecycle Manager

The Cloud Lifecycle Manager runs on one of the control-plane nodes of type CONTROLLER-ROLE. The IP address of the node that will run the Cloud Lifecycle Manager needs to be included in the data/servers.yml file.

- Resource Nodes

Compute - One node of type COMPUTE-ROLE runs nova Compute and associated services.

Object Storage - Minimal swift resources are provided by the control plane.

Additional resource nodes can be added to the configuration.

- Networking

This example requires the following networks:

IPMI network connected to the lifecycle-manager and the IPMI ports of all servers.

Nodes require a pair of bonded NICs which are used by the following networks:

External API - The network for making requests to the cloud.

External VM - This network provides access to VMs via floating IP addresses.

Cloud Management - This network is used for all internal traffic between the cloud services. It is also used to install and configure the nodes. The network needs to be on an untagged VLAN.

Guest - The network that carries traffic between VMs on private networks within the cloud.

The EXTERNAL API network must be reachable from the EXTERNAL VM network for VMs to be able to make API calls to the cloud.

An example set of networks is defined in data/networks.yml. The file needs to be modified to reflect your environment.

The example uses the devices hed3 and hed4 as a bonded network interface for all services. The name given to a network interface by the system is configured in the file data/net_interfaces.yml. That file needs to be edited to match your system.

- Local Storage

All servers should present a single OS disk, protected by a RAID controller. This disk needs to be at least 512 GB in capacity. In addition, the example configures additional disks depending on the role of the server:

Controllers - /dev/sdb and /dev/sdc are configured to be used by swift.

Compute Servers - /dev/sdb is configured as an additional Volume Group to be used for VM storage.

Additional disks can be configured for any of these roles by editing the corresponding data/disks_*.yml file.
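Before deployment, it can help to confirm that each server actually presents the disks this layout expects. The following Python sketch is illustrative only: it assumes the OS disk is /dev/sda and that you have gathered your own inventory of device names and sizes per server. The names check_server, ROLE_DISKS, and the inventory format are hypothetical and are not part of SUSE OpenStack Cloud.

```python
# Minimal sketch: compare a server's disks with the entry-scale-kvm layout.
# Assumptions (not from the input model): the OS disk is /dev/sda, and the
# inventory is a plain {device: size_gb} dict that you collect yourself.

MIN_OS_DISK_GB = 512  # single RAID-protected OS disk, at least 512 GB

# Devices the example configures in addition to the OS disk, per role.
ROLE_DISKS = {
    "CONTROLLER-ROLE": ["/dev/sdb", "/dev/sdc"],  # used by swift
    "COMPUTE-ROLE": ["/dev/sdb"],                 # extra volume group for VM storage
}


def check_server(role: str, disks: dict[str, int], os_disk: str = "/dev/sda") -> list[str]:
    """Return a list of problems; an empty list means the server fits the layout."""
    problems = []
    size = disks.get(os_disk)
    if size is None:
        problems.append(f"missing OS disk {os_disk}")
    elif size < MIN_OS_DISK_GB:
        problems.append(f"OS disk {os_disk} is {size} GB, need at least {MIN_OS_DISK_GB} GB")
    if role not in ROLE_DISKS:
        problems.append(f"unknown role {role}")
    for device in ROLE_DISKS.get(role, []):
        if device not in disks:
            problems.append(f"{role} expects {device}")
    return problems


if __name__ == "__main__":
    controller = {"/dev/sda": 600, "/dev/sdb": 1000}
    print(check_server("CONTROLLER-ROLE", controller))
```

The check only covers device presence and the OS disk size; device names may still need adjusting to match the probe order of your servers.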

9.3.2 Entry Scale Cloud with Metering and Monitoring Services

This example deploys an entry-scale cloud that provides metering and monitoring services and runs the database and messaging services in their own cluster.

- Control Plane

Cluster1 - Two nodes of type CONTROLLER-ROLE run the core OpenStack services, such as keystone, nova API, glance API, neutron API, horizon, and heat API.

Cluster2 - Three nodes of type MTRMON-ROLE run the OpenStack services for metering and monitoring (for example, ceilometer, monasca and logging).

Cluster3 - Three nodes of type DBMQ-ROLE run clustered database and RabbitMQ services to support the cloud infrastructure. Three nodes are required for high availability.

- Cloud Lifecycle Manager

The Cloud Lifecycle Manager runs on one of the control-plane nodes of type CONTROLLER-ROLE. The IP address of the node that will run the Cloud Lifecycle Manager needs to be included in the data/servers.yml file.

- Resource Nodes

Compute - One node of type COMPUTE-ROLE runs nova Compute and associated services.

Object Storage - Minimal swift resources are provided by the control plane.

Additional resource nodes can be added to the configuration.

- Networking

This example requires the following networks:

IPMI network connected to the lifecycle-manager and the IPMI ports of all servers.

Nodes require a pair of bonded NICs which are used by the following networks:

External API - The network for making requests to the cloud.

External VM - The network that provides access to VMs via floating IP addresses.

Cloud Management - This network is used for all internal traffic between the cloud services. It is also used to install and configure the nodes. The network needs to be on an untagged VLAN.

Guest - The network that carries traffic between VMs on private networks within the cloud.

The EXTERNAL API network must be reachable from the EXTERNAL VM network for VMs to be able to make API calls to the cloud.

An example set of networks is defined in data/networks.yml. The file needs to be modified to reflect your environment.

The example uses the devices hed3 and hed4 as a bonded network interface for all services. The name given to a network interface by the system is configured in the file data/net_interfaces.yml. That file needs to be edited to match your system.

- Local Storage

All servers should present a single OS disk, protected by a RAID controller. This disk needs to be at least 512 GB in capacity. In addition, the example configures additional disks depending on the role of the server:

Core Controllers - /dev/sdb and /dev/sdc are configured to be used by swift.

DBMQ Controllers - /dev/sdb is configured as an additional Volume Group to be used by the database and RabbitMQ.

Compute Servers - /dev/sdb is configured as an additional Volume Group to be used for VM storage.

Additional disks can be configured for any of these roles by editing the corresponding data/disks_*.yml file.

9.3.3 Single-Region Mid-Size Model

The mid-size model is intended as a template for a moderately sized cloud. The control plane is made up of multiple server clusters to provide sufficient computational, network, and IOPS capacity for a mid-size, production-style cloud.

- Control Plane

Core Cluster - Runs core OpenStack services, such as keystone, nova API, glance API, neutron API, horizon, and heat API. The default configuration is two nodes of role type CORE-ROLE.

Metering and Monitoring Cluster - Runs the OpenStack services for metering and monitoring (for example, ceilometer, monasca and logging). The default configuration is three nodes of role type MTRMON-ROLE.

Database and Message Queue Cluster - Runs clustered MariaDB and RabbitMQ services to support the Ardana cloud infrastructure. The default configuration is three nodes of role type DBMQ-ROLE. Three nodes are required for high availability.

swift PAC Cluster - Runs the swift Proxy, Account and Container services. The default configuration is three nodes of role type SWPAC-ROLE.

neutron Agent Cluster - Runs neutron VPN (L3), DHCP, Metadata and Open vSwitch agents. The default configuration is two nodes of role type NEUTRON-ROLE.

- Cloud Lifecycle Manager

The Cloud Lifecycle Manager runs on one of the control-plane nodes of type CONTROLLER-ROLE. The IP address of the node that will run the Cloud Lifecycle Manager needs to be included in the data/servers.yml file.

- Resource Nodes

Compute - Runs nova Compute and associated services on nodes of role type COMPUTE-ROLE. This model lists three nodes; one node is the minimum requirement.

Object Storage - Three nodes of type SWOBJ-ROLE run the swift Object service. The minimum node count should match your swift replica count.

The minimum node count required to run this model unmodified is 19 nodes. This can be reduced by consolidating services on the control plane clusters.
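The 19-node figure is the sum of the default cluster and resource-node sizes listed above: 13 control plane nodes plus 3 compute and 3 object storage nodes. The following Python sketch reproduces that arithmetic and checks the SWOBJ node count against a swift replica count. The replica count of 3 is an assumed example value, and the helper names are hypothetical.

```python
# Default node counts for the mid-size model (mid-scale-kvm), as listed above.
CONTROL_PLANE = {
    "CORE-ROLE": 2,
    "MTRMON-ROLE": 3,
    "DBMQ-ROLE": 3,
    "SWPAC-ROLE": 3,
    "NEUTRON-ROLE": 2,
}
RESOURCE_NODES = {
    "COMPUTE-ROLE": 3,
    "SWOBJ-ROLE": 3,
}


def total_nodes(*groups: dict[str, int]) -> int:
    """Sum node counts across any number of role -> count mappings."""
    return sum(count for group in groups for count in group.values())


def swobj_ok(swobj_nodes: int, replica_count: int) -> bool:
    """The SWOBJ node count should at least match the swift replica count."""
    return swobj_nodes >= replica_count


if __name__ == "__main__":
    print(total_nodes(CONTROL_PLANE, RESOURCE_NODES))  # 19
    # replica_count=3 is an assumed example value, not read from the model.
    print(swobj_ok(RESOURCE_NODES["SWOBJ-ROLE"], replica_count=3))  # True
```

Consolidating services reduces the control plane entries in this tally, which is how the total drops below 19.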

- Networking

This example requires the following networks:

IPMI network connected to the lifecycle-manager and the IPMI ports of all servers.

Nodes require a pair of bonded NICs which are used by the following networks:

External API - The network for making requests to the cloud.

Internal API - This network is used within the cloud for API access between services.

External VM - This network provides access to VMs via floating IP addresses.

Cloud Management - This network is used for all internal traffic between the cloud services. It is also used to install and configure the nodes. The network needs to be on an untagged VLAN.

Guest - The network that carries traffic between VMs on private networks within the cloud.

SWIFT - This network is used for internal swift communications between the swift nodes.

The EXTERNAL API network must be reachable from the EXTERNAL VM network for VMs to be able to make API calls to the cloud.

An example set of networks is defined in data/networks.yml. The file needs to be modified to reflect your environment.

The example uses the devices hed3 and hed4 as a bonded network interface for all services. The name given to a network interface by the system is configured in the file data/net_interfaces.yml. That file needs to be edited to match your system.
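Before replacing the example CIDRs and VLANs in data/networks.yml with site-specific values, a quick offline check can catch overlapping subnets or reused VLAN IDs. The sketch below uses only Python's standard ipaddress module. Every CIDR, VLAN ID, and network key in it is a hypothetical placeholder, and the (cidr, vlan_id, tagged) tuple is not the input model's own schema.

```python
# Minimal sketch: offline sanity check of site-specific network values.
# Every CIDR, VLAN ID and key below is a hypothetical placeholder; the
# (cidr, vlan_id, tagged) tuple is NOT the input model's own schema.
import ipaddress

NETWORKS = {
    "EXTERNAL-API": ("10.0.1.0/24", 101, True),
    "INTERNAL-API": ("10.0.2.0/24", 102, True),
    "EXTERNAL-VM": ("10.0.3.0/24", 103, True),
    "MANAGEMENT": ("192.168.10.0/24", 4, False),  # must stay untagged
    "GUEST": ("10.0.5.0/24", 105, True),
    "SWIFT": ("10.0.6.0/24", 106, True),
}


def check_networks(networks: dict[str, tuple[str, int, bool]]) -> list[str]:
    """Return human-readable problems; an empty list means no conflicts found."""
    problems = []
    parsed = {name: ipaddress.ip_network(cidr) for name, (cidr, _, _) in networks.items()}
    names = sorted(parsed)
    # Pairwise subnet overlap check.
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            if parsed[a].overlaps(parsed[b]):
                problems.append(f"{a} overlaps {b}")
    # All networks share the hed3/hed4 bond here, so tagged networks
    # should not reuse a VLAN ID.
    seen: dict[int, str] = {}
    for name, (_, vlan, tagged) in networks.items():
        if tagged:
            if vlan in seen:
                problems.append(f"{name} reuses VLAN {vlan} from {seen[vlan]}")
            else:
                seen[vlan] = name
    # The Cloud Management network must be on an untagged VLAN.
    mgmt = networks.get("MANAGEMENT")
    if mgmt is not None and mgmt[2]:
        problems.append("MANAGEMENT must be on an untagged VLAN")
    return problems


if __name__ == "__main__":
    print(check_networks(NETWORKS) or "no conflicts found")
```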

9.3.3.1 Adapting the Mid-Size Model to Fit Your Environment

The minimum set of changes you need to make to adapt the model for your environment is:

- Update servers.yml to list the details of your bare metal servers.

- Update the networks.yml file to replace network CIDRs and VLANs with site-specific values.

- Update the nic_mappings.yml file to ensure that network devices are mapped to the correct physical port(s).

- Review the disk models (disks_*.yml) and confirm that the associated servers have the number of disks required by the disk model. The device names in the disk models might need to be adjusted to match the probe order of your servers. The default number of disks for the swift nodes (3 disks) is deliberately low to facilitate deployment on generic hardware. For production-scale swift, the servers should have more disks, for example 6 on SWPAC nodes and 12 on SWOBJ nodes. If you allocate more swift disks, review the ring power in the swift ring configuration, as documented in the swift section. Disk models are provided as follows:

  - DISK SET CONTROLLER: Minimum 1 disk
  - DISK SET DBMQ: Minimum 3 disks
  - DISK SET COMPUTE: Minimum 2 disks
  - DISK SET SWPAC: Minimum 3 disks
  - DISK SET SWOBJ: Minimum 3 disks

- Update the net_interfaces.yml file to match the server NICs used in your configuration. This file has a separate interface model definition for each of the following:

  - INTERFACE SET CONTROLLER
  - INTERFACE SET DBMQ
  - INTERFACE SET SWPAC
  - INTERFACE SET SWOBJ
  - INTERFACE SET COMPUTE
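The disk-set minimums above can be checked against your hardware inventory before you edit the disk models. In this minimal Python sketch, only the minimum counts come from the list above; the inventory tuples and the short_servers helper are hypothetical.

```python
# Minimum disk counts per disk model for the mid-size example (from the list above).
DISK_SET_MINIMUMS = {
    "CONTROLLER": 1,
    "DBMQ": 3,
    "COMPUTE": 2,
    "SWPAC": 3,
    "SWOBJ": 3,
}


def short_servers(inventory: list[tuple[str, str, int]]) -> list[tuple[str, str, int, int]]:
    """Return (server_id, disk_set, found, required) for every under-provisioned server.

    `inventory` is a hypothetical list of (server_id, disk_set, disk_count) tuples
    gathered from your own hardware; an unknown disk_set raises KeyError.
    """
    short = []
    for server_id, disk_set, count in inventory:
        required = DISK_SET_MINIMUMS[disk_set]
        if count < required:
            short.append((server_id, disk_set, count, required))
    return short


if __name__ == "__main__":
    inventory = [("dbmq1", "DBMQ", 3), ("swobj1", "SWOBJ", 2), ("comp1", "COMPUTE", 2)]
    for server_id, disk_set, found, required in short_servers(inventory):
        print(f"{server_id}: DISK SET {disk_set} needs {required} disks, found {found}")
```

These are minimums only; for production-scale swift, the larger per-node disk counts mentioned above still apply.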

9.4 ESX Examples

9.4.1 Single-Region Entry-Scale Cloud with a Mix of KVM and ESX Hypervisors

This example deploys a cloud which mixes KVM and ESX hypervisors.

- Control Plane

Cluster1 - Three nodes of type CONTROLLER-ROLE run the core OpenStack services, such as keystone, nova API, glance API, neutron API, horizon, and heat API.

- Cloud Lifecycle Manager

The Cloud Lifecycle Manager runs on one of the control-plane nodes of type CONTROLLER-ROLE. The IP address of the node that will run the Cloud Lifecycle Manager needs to be included in the data/servers.yml file.

- Resource Nodes

Compute:

KVM - Runs nova Compute and associated services on nodes of role type COMPUTE-ROLE.

ESX - Provides ESX Compute services. The OS and software on this node are installed by the user.

- ESX Resource Requirements

The user needs to supply a vSphere server.

The user needs to deploy the ovsvapp network resources using the vSphere GUI (Section 27.8.2, “Creating ESXi MGMT DVS and Required Portgroup”), by running the neutron-create-ovsvapp-resources.yml playbook (Section 27.8.3, “Configuring OVSvApp Network Resources Using Ansible-Playbook”), or via Python-Networking-vSphere (Section 27.8.4, “Configuring OVSVAPP Using Python-Networking-vSphere”).

The following DVS and DVPGs need to be created and configured for each cluster in each ESX hypervisor that will host an OVSvApp appliance. The settings for each DVS and DVPG are specific to your system and network policies. A JSON file example is provided in the documentation, but it needs to be edited to match your requirements.

| DVS | Port Groups assigned to DVS |
|---|---|
| MGMT | MGMT-PG, ESX-CONF-PG, GUEST-PG |
| TRUNK | TRUNK-PG |

The user needs to deploy the ovsvapp appliance (OVSVAPP-ROLE) and the nova-proxy appliance (ESX-COMPUTE-ROLE).

The user needs to add the required information related to the compute proxy and OVSvApp nodes.

- Networking

This example requires the following networks:

IPMI network connected to the lifecycle-manager and the IPMI ports of all nodes, except the ESX hypervisors.

Nodes require a pair of bonded NICs which are used by the following networks:

External API - The network for making requests to the cloud.

External VM - The network that provides access to VMs via floating IP addresses.

Cloud Management - The network used for all internal traffic between the cloud services. It is also used to install and configure the nodes. The network needs to be on an untagged VLAN.

Guest - This network carries traffic between VMs on private networks within the cloud.

SES - The network that control-plane and compute-node clients use to talk to the external SUSE Enterprise Storage.

TRUNK - The network that is used to apply security group rules on tenant traffic. It is managed by the cloud admin and is restricted to the vCenter environment.

ESX-CONF-NET - This network is used only to configure the ESX compute nodes in the cloud. It should be different from the network used with PXE to stand up the cloud control plane.

This example's set of networks is defined in data/networks.yml. The file needs to be modified to reflect your environment.

The example uses the devices hed3 and hed4 as a bonded network interface for all services. The name given to a network interface by the system is configured in the file data/net_interfaces.yml. That file needs to be edited to match your system.

- Local Storage

All servers should present a single OS disk, protected by a RAID controller. This disk needs to be at least 512 GB in capacity. In addition, the example configures additional disks depending on the node's role:

Controllers - /dev/sdb and /dev/sdc are configured to be used by swift.

Compute Servers - /dev/sdb is configured as an additional Volume Group to be used for VM storage.

Additional disks can be configured for any of these roles by editing the corresponding data/disks_*.yml file.

9.4.2 Single-Region Entry-Scale Cloud with Metering and Monitoring Services, and a Mix of KVM and ESX Hypervisors

This example deploys a cloud which mixes KVM and ESX hypervisors, provides metering and monitoring services, and runs the database and messaging services in their own cluster.

- Control Plane

Cluster1 - Two nodes of type CONTROLLER-ROLE run the core OpenStack services, such as keystone, nova API, glance API, neutron API, horizon, and heat API.

Cluster2 - Three nodes of type MTRMON-ROLE run the OpenStack services for metering and monitoring (for example, ceilometer, monasca and logging).

Cluster3 - Three nodes of type DBMQ-ROLE run clustered database and RabbitMQ services to support the cloud infrastructure. Three nodes are required for high availability.

- Cloud Lifecycle Manager

The Cloud Lifecycle Manager runs on one of the control-plane nodes of type CONTROLLER-ROLE. The IP address of the node that will run the Cloud Lifecycle Manager needs to be included in the data/servers.yml file.

- Resource Nodes

Compute:

KVM - Runs nova Compute and associated services on nodes of role type COMPUTE-ROLE.

ESX - Provides ESX Compute services. The OS and software on this node are installed by the user.

- ESX Resource Requirements

The user needs to supply a vSphere server.

The user needs to deploy the ovsvapp network resources using the vSphere GUI or by running the neutron-create-ovsvapp-resources.yml playbook.

The following DVS and DVPGs need to be created and configured for each cluster in each ESX hypervisor that will host an OVSvApp appliance. The settings for each DVS and DVPG are specific to your system and network policies. A JSON file example is provided in the documentation, but it needs to be edited to match your requirements.

ESX-CONF (DVS and DVPG) - connected to ovsvapp eth0 and compute-proxy eth0.

MANAGEMENT (DVS and DVPG) - connected to ovsvapp eth1, eth2, eth3 and compute-proxy eth1.

The user needs to deploy the ovsvapp appliance (OVSVAPP-ROLE) and the nova-proxy appliance (ESX-COMPUTE-ROLE).

The user needs to add the required information related to the compute proxy and OVSvApp nodes.

- Networking

This example requires the following networks:

IPMI network connected to the lifecycle-manager and the IPMI ports of all nodes, except the ESX hypervisors.

Nodes require a pair of bonded NICs which are used by the following networks:

External API - The network for making requests to the cloud.

External VM - The network that provides access to VMs via floating IP addresses.

Cloud Management - This network is used for all internal traffic between the cloud services. It is also used to install and configure the nodes. The network needs to be on an untagged VLAN.

Guest - The network that carries traffic between VMs on private networks within the cloud.

TRUNK - The network that is used to apply security group rules on tenant traffic. It is managed by the cloud admin and is restricted to the vCenter environment.

ESX-CONF-NET - This network is used only to configure the ESX compute nodes in the cloud. It should be different from the network used with PXE to stand up the cloud control plane.

This example's set of networks is defined in data/networks.yml. The file needs to be modified to reflect your environment.

The example uses the devices hed3 and hed4 as a bonded network interface for all services. The name given to a network interface by the system is configured in the file data/net_interfaces.yml. That file needs to be edited to match your system.

- Local Storage

All servers should present a single OS disk, protected by a RAID controller. This disk needs to be at least 512 GB in capacity. In addition, the example configures additional disks depending on the node's role:

Controllers - /dev/sdb and /dev/sdc are configured to be used by swift.

Compute Servers - /dev/sdb is configured as an additional Volume Group to be used for VM storage.

Additional disks can be configured for any of these roles by editing the corresponding data/disks_*.yml file.

9.5 Swift Examples

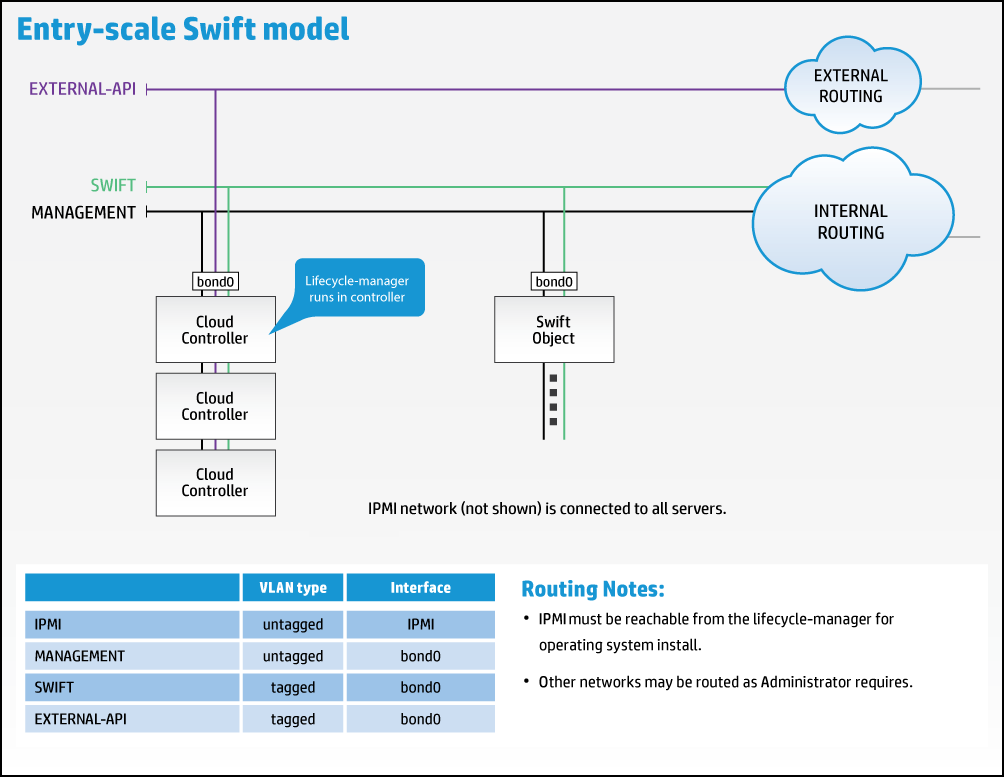

9.5.1 Entry-scale swift Model

This example shows how SUSE OpenStack Cloud can be configured to provide a swift-only configuration, consisting of three controllers and one or more swift object servers.

The example requires the following networks:

External API - The network for making requests to the cloud.

swift - The network for all data traffic between the swift services.

Management - This network is used for all internal traffic between the cloud services, including node provisioning. This network must be on an untagged VLAN.

All of these networks are configured to be presented via a pair of bonded NICs. The example also enables provider VLANs to be configured in neutron on this interface.

In the diagram, "External Routing" refers to whatever routing you want to provide to allow users to access the External API, and "Internal Routing" refers to whatever routing you want to provide to allow administrators to access the Management network.

If you are using SUSE OpenStack Cloud to install the operating system, then an IPMI network connected to the IPMI ports of all servers and routable from the Cloud Lifecycle Manager is also required for BIOS and power management of the node during the operating system installation process.

In the example the controllers use one disk for the operating system and two disks for swift proxy and account storage. The swift object servers use one disk for the operating system and four disks for swift storage. These values can be modified to suit your environment.

These recommended minimums are based on the example configurations included with the base installation and are suitable only for demo environments. For production systems, consider your capacity and performance requirements when making decisions about your hardware.

The entry-scale-swift example runs the swift proxy, account and container services on the three controller servers. However, it is possible to extend the model to run the swift proxy, account and container services on dedicated servers (typically referred to as the swift proxy servers). If you use this model, the recommended specifications for the swift proxy servers are listed in the table below.

Server hardware: minimum requirements and recommendations

| Node Type | Role Name | Required Number | Disk | Memory | Network | CPU |
|---|---|---|---|---|---|---|
| Dedicated Cloud Lifecycle Manager (optional) | Lifecycle-manager | 1 | 300 GB | 8 GB | 1 x 10 Gbit/s with PXE support | 8 CPU (64-bit) cores total (Intel x86_64) |
| Control Plane | Controller | 3 |  | 64 GB | 2 x 10 Gbit/s with one PXE enabled port | 8 CPU (64-bit) cores total (Intel x86_64) |
| swift Object | swobj | 3 | If using x3 replication only: … If using Erasure Codes only or a mix of x3 replication and Erasure Codes: … | 32 GB (see the considerations below) | 2 x 10 Gbit/s with one PXE enabled port | 8 CPU (64-bit) cores total (Intel x86_64) |
| swift Proxy, Account, and Container | swpac | 3 | 2 x 600 GB (minimum, see the considerations below) | 64 GB (see the considerations below) | 2 x 10 Gbit/s with one PXE enabled port | 8 CPU (64-bit) cores total (Intel x86_64) |

The disk speeds (RPM) chosen should be consistent within the same ring or storage policy. Avoid mixing disks of different speeds within the same swift ring.
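One simple way to enforce consistent disk speeds is to group the planned swift disks by ring and flag any ring that contains more than one RPM value. The (server, device, ring, rpm) tuples and the ring names in this Python sketch are hypothetical inventory data, not output from any SUSE OpenStack Cloud tool.

```python
from collections import defaultdict


def mixed_speed_rings(disks: list[tuple[str, str, str, int]]) -> dict[str, list[int]]:
    """Return {ring: sorted RPM values} for every ring that mixes disk speeds.

    `disks` is a hypothetical list of (server, device, ring, rpm) tuples.
    """
    speeds: dict[str, set[int]] = defaultdict(set)
    for _server, _device, ring, rpm in disks:
        speeds[ring].add(rpm)
    # Keep only rings with more than one distinct speed.
    return {ring: sorted(rpms) for ring, rpms in speeds.items() if len(rpms) > 1}


if __name__ == "__main__":
    planned = [
        ("swobj1", "/dev/sdb", "object-0", 7200),
        ("swobj2", "/dev/sdb", "object-0", 10000),
        ("swpac1", "/dev/sdb", "account", 10000),
        ("swpac2", "/dev/sdb", "account", 10000),
    ]
    print(mixed_speed_rings(planned))
```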

Considerations for the RAM and disk capacity needs of your swift object servers and your swift proxy, account, and container servers

swift supports a wide range of hardware configurations. For example, a swift object server may have just a few disks (a minimum of 6 for erasure codes) or 70 and beyond. The memory requirement increases as more disks are added. The general rule of thumb is 0.5 GB of RAM per TB of storage. For example, a system with 24 hard drives at 8 TB each, giving a total capacity of 192 TB, should use 96 GB of RAM. However, this rule does not work well for a system with a small number of small hard drives or a very large number of very large drives. If the calculated value is less than 32 GB, use the 32 GB minimum; if it is more than 256 GB, use 256 GB, because there is no need for more memory than that.
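The memory rule of thumb above, including the 32 GB floor and 256 GB ceiling, can be expressed as a small Python function. It is a sketch of this section's guideline only, not a sizing tool, and the function name is hypothetical.

```python
RAM_GB_PER_TB = 0.5     # rule of thumb: 0.5 GB of RAM per TB of storage
RAM_FLOOR_GB = 32.0     # never go below 32 GB
RAM_CEILING_GB = 256.0  # no need to go above 256 GB


def swift_object_ram_gb(num_drives: int, drive_tb: float) -> float:
    """Apply the 0.5 GB-per-TB rule of thumb, clamped to the 32-256 GB range."""
    raw_tb = num_drives * drive_tb
    estimate = raw_tb * RAM_GB_PER_TB
    return min(max(estimate, RAM_FLOOR_GB), RAM_CEILING_GB)


if __name__ == "__main__":
    print(swift_object_ram_gb(24, 8))  # 96.0
```

For the 24 x 8 TB example this returns 96.0, while a server with six 4 TB drives (24 TB, so 12 GB by the raw rule) is raised to the 32.0 GB floor.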

When considering the capacity needs for the swift proxy, account, and container (PAC) servers, allocate 2% of the total raw storage size of your object servers as the storage required for the PAC servers. For example, using the object server setup from the previous example (24 hard drives at 8 TB each, for a total of 192 TB) with a total of 6 object servers gives a raw total of 1152 TB. 2% of that is approximately 23 TB, so ensure that much storage capacity is available on your swift PAC server cluster. With a cluster of three swift PAC servers, that would be ~8 TB each.
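The PAC capacity example can be reproduced in a few lines of Python. This sketch assumes identical object servers and an even split of PAC storage across the PAC cluster; the function and parameter names are hypothetical.

```python
PAC_FRACTION = 0.02  # PAC storage = 2% of total raw object storage


def pac_capacity_tb(object_servers: int, drives_per_server: int,
                    drive_tb: float, pac_servers: int = 3) -> tuple[float, float, float]:
    """Return (raw object TB, total PAC TB, PAC TB per server)."""
    raw_total = object_servers * drives_per_server * drive_tb
    pac_total = raw_total * PAC_FRACTION
    # Assumes PAC storage is spread evenly across the PAC servers.
    return raw_total, pac_total, pac_total / pac_servers


if __name__ == "__main__":
    raw, pac, per_server = pac_capacity_tb(6, 24, 8)
    print(f"raw={raw} TB, PAC total={pac:.1f} TB, per PAC server={per_server:.1f} TB")
```

With 6 object servers of 24 x 8 TB and three PAC servers, it yields a raw total of 1152 TB, about 23 TB for the PAC cluster, and about 7.7 TB per PAC server, which the text above rounds to ~8 TB.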

Another general rule of thumb: if you expect a container to hold more than a million objects, consider using SSDs rather than HDDs on the swift PAC servers.

9.6 Ironic Examples

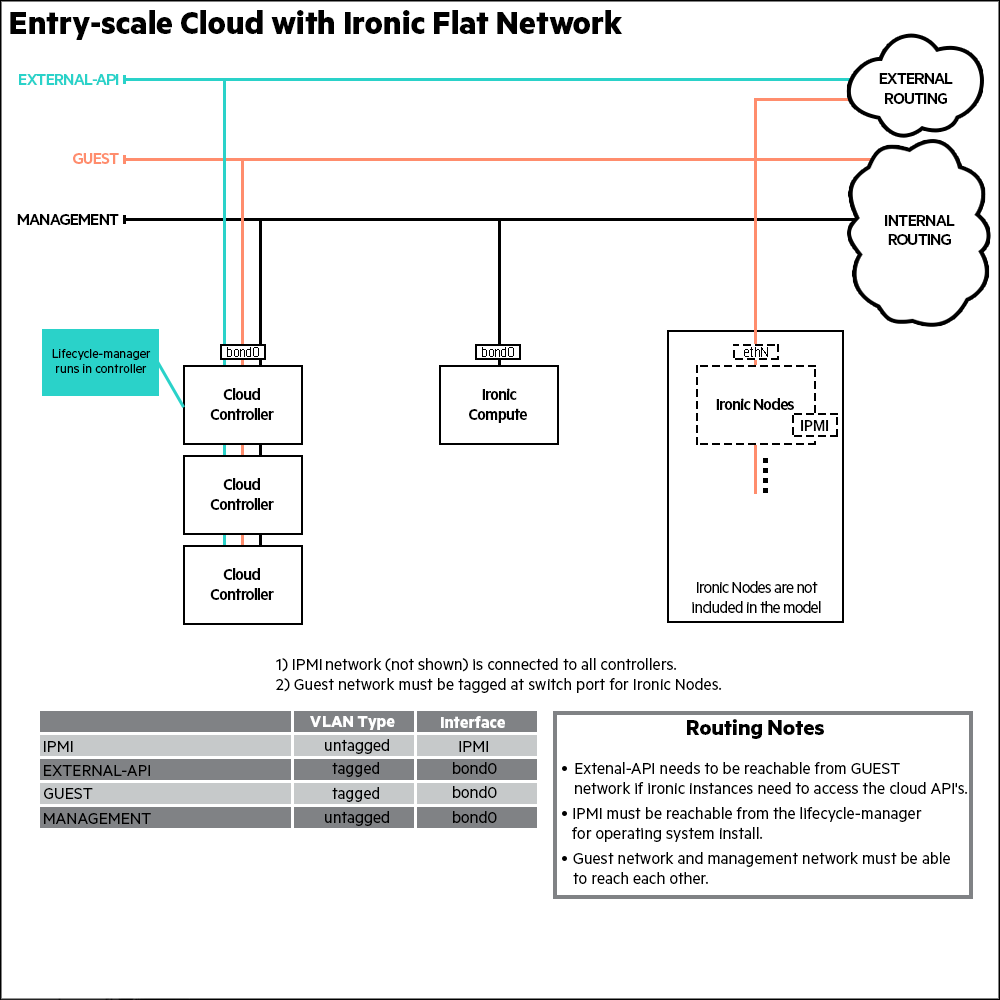

9.6.1 Entry-Scale Cloud with Ironic Flat Network

This example deploys an entry-scale cloud that uses the ironic service to provision physical machines through the Compute services API.

- Control Plane

Cluster1 - Three nodes of type CONTROLLER-ROLE run the core OpenStack services, such as keystone, nova API, glance API, neutron API, horizon, and heat API.

- Cloud Lifecycle Manager

The Cloud Lifecycle Manager runs on one of the control-plane nodes of type CONTROLLER-ROLE. The IP address of the node that will run the Cloud Lifecycle Manager needs to be included in the data/servers.yml file.

- Resource Nodes

ironic Compute - One node of type IRONIC-COMPUTE-ROLE runs nova-compute, nova-compute-ironic, and other supporting services.

Object Storage - Minimal swift resources are provided by the control plane.

- Networking

This example requires the following networks:

IPMI network connected to the lifecycle-manager and the IPMI ports of all servers.

Nodes require a pair of bonded NICs which are used by the following networks:

External API - The network that users use to make requests to the cloud.

Cloud Management - The network used for all internal traffic between the cloud services. This network is also used to install and configure the nodes. The network needs to be on an untagged VLAN.

Guest - The flat network that carries traffic between bare metal instances within the cloud. It is also used to PXE boot those bare metal instances and install the operating system selected by tenants.

The EXTERNAL API network must be reachable from the GUEST network for the bare metal instances to make API calls to the cloud.

An example set of networks is defined in data/networks.yml. The file needs to be modified to reflect your environment.

The example uses the devices hed3 and hed4 as a bonded network interface for all services. The name given to a network interface by the system is configured in the file data/net_interfaces.yml. That file needs to be modified to match your system.

- Local Storage

All servers should present a single OS disk, protected by a RAID controller. This disk needs to be at least 512 GB in capacity. In addition, the example configures additional disks depending on the role of the server:

Controllers - /dev/sdb and /dev/sdc are configured to be used by swift.

Additional disks can be configured for any of these roles by editing the corresponding data/disks_*.yml file.

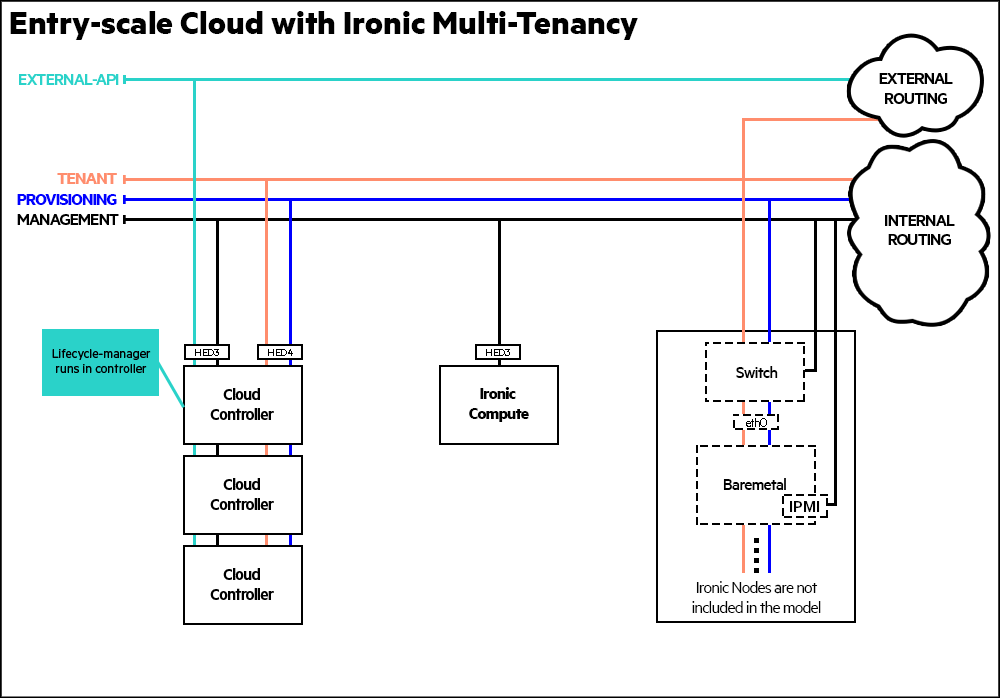

9.6.2 Entry-Scale Cloud with Ironic Multi-Tenancy

This example deploys an entry-scale cloud that uses the ironic service to provision physical machines through the Compute services API and supports multi-tenancy.

- Control Plane

Cluster1 - Three nodes of type CONTROLLER-ROLE run the core OpenStack services, such as keystone, nova API, glance API, neutron API, horizon, and heat API.

- Cloud Lifecycle Manager

The Cloud Lifecycle Manager runs on one of the control-plane nodes of type CONTROLLER-ROLE. The IP address of the node that will run the Cloud Lifecycle Manager needs to be included in the data/servers.yml file.

- Resource Nodes

ironic Compute - One node of type IRONIC-COMPUTE-ROLE runs nova-compute, nova-compute-ironic, and other supporting services.

Object Storage - Minimal swift resources are provided by the control plane.

- Networking

This example requires the following networks:

IPMI network connected to the lifecycle-manager and the IPMI ports of all nodes.

External API - This network is used to make requests to the cloud.

Cloud Management - The network used for all internal traffic between the cloud services. This network is also used to install and configure the controller nodes. The network needs to be on an untagged VLAN.

Provisioning - The network used to PXE boot the ironic nodes and install the operating system selected by tenants. This network needs to be tagged on the switch for the control plane and ironic compute nodes. For ironic bare metal nodes, the VLAN configuration on the switch is set by the neutron driver.

Tenant VLANs - The range of VLAN IDs should be reserved for use by ironic and set in the cloud configuration. Because it is configured as untagged on the control plane nodes, it cannot be combined with the management network on the same network interface.
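Because the tenant VLAN range is reserved for ironic, it should not include VLAN IDs already used by other networks, such as the tagged Provisioning network. The following Python sketch checks a candidate range against IDs already in use. All VLAN IDs and network names in it are hypothetical example values; only the 802.1Q limits (1 to 4094) are standard.

```python
# Valid 802.1Q VLAN IDs are 1-4094 (0 and 4095 are reserved).
VLAN_MIN, VLAN_MAX = 1, 4094


def check_tenant_vlans(start: int, end: int, other_vlans: dict[str, int]) -> list[str]:
    """Return problems with a tenant VLAN range; an empty list means none found.

    `other_vlans` maps hypothetical network names to VLAN IDs already in use.
    """
    problems = []
    if not (VLAN_MIN <= start <= end <= VLAN_MAX):
        problems.append(f"invalid range {start}-{end} (802.1Q IDs are {VLAN_MIN}-{VLAN_MAX})")
    for name, vlan in other_vlans.items():
        if start <= vlan <= end:
            problems.append(f"{name} VLAN {vlan} falls inside tenant range {start}-{end}")
    return problems


if __name__ == "__main__":
    in_use = {"PROVISIONING": 200, "EXTERNAL-API": 101}
    print(check_tenant_vlans(1000, 1100, in_use) or "tenant range is free")
```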

The following access should be allowed by routing/firewall:

Access from the Management network to IPMI. Used during cloud installation and during ironic bare metal node provisioning.

Access from the Management network to the switch management network. Used by the neutron driver.

The EXTERNAL API network must be reachable from the tenant networks if you want bare metal nodes to be able to make API calls to the cloud.

An example set of networks is defined in data/networks.yml. The file needs to be modified to reflect your environment.

The example uses hed3 for Management and External API traffic, and hed4 for provisioning and tenant network traffic. If you need to modify these assignments for your environment, they are defined in data/net_interfaces.yml.

- Local Storage

All servers should present a single OS disk, protected by a RAID controller. This disk needs to be at least 512 GB in capacity. In addition, the example configures additional disks depending on the role of the server:

Controllers - /dev/sdb and /dev/sdc are configured to be used by swift.

Additional disks can be configured for any of these roles by editing the corresponding data/disks_*.yml file.