29 Sharing File Systems with NFS #

The Network File System (NFS) is a protocol that allows access to files on a server in a manner very similar to accessing local files.

29.1 Overview #

The Network File System (NFS) is a standardized, well-proven and widely supported network protocol that allows files to be shared between separate hosts.

The Network Information Service (NIS) can be used to provide centralized user management in the network. Combining NFS and NIS allows using file and directory permissions for access control in the network. NFS with NIS makes a network transparent to the user.

In the default configuration, NFS completely trusts the network and thus any machine that is connected to a trusted network. Any user with administrator privileges on any computer with physical access to any network the NFS server trusts can access any files that the server makes available.

In many cases, this level of security is perfectly satisfactory, such as when the network that is trusted is truly private, often localized to a single cabinet or machine room, and no unauthorized access is possible. In other cases the need to trust a whole subnet as a unit is restrictive and there is a need for more fine-grained trust. To meet the need in these cases, NFS supports various security levels using the Kerberos infrastructure. Kerberos requires NFSv4, which is used by default. For details, see Chapter 6, Network Authentication with Kerberos.

The following are terms used in the YaST module.

- Exports

A directory exported by an NFS server, which clients can integrate into their systems.

- NFS Client

The NFS client is a system that uses NFS services from an NFS server over the Network File System protocol. The TCP/IP protocol is already integrated into the Linux kernel; there is no need to install any additional software.

- NFS Server

The NFS server provides NFS services to clients. A running server depends on the following daemons:

nfsd (worker), idmapd (ID-to-name mapping for NFSv4, needed for certain scenarios only), statd (file locking), and mountd (mount requests).

- NFSv3

NFSv3 is the version 3 implementation, the “old” stateless NFS that supports client authentication.

- NFSv4

NFSv4 is the new version 4 implementation that supports secure user authentication via Kerberos. NFSv4 requires a single port only and thus is better suited for environments behind a firewall than NFSv3.

The protocol is specified as https://datatracker.ietf.org/doc/html/rfc3530.

- pNFS

Parallel NFS, a protocol extension of NFSv4. Any pNFS clients can directly access the data on an NFS server.

In principle, all exports can be made using IP addresses only. To avoid time-outs, you need a working DNS system. DNS is necessary at least for logging purposes, because the mountd daemon does reverse lookups.

29.2 Installing NFS Server #

The NFS server is not part of the default installation. To install the NFS server using YaST, choose › , select , and enable the option in the section. Press to install the required packages.

Like NIS, NFS is a client/server system. However, a machine can be both—it can supply file systems over the network (export) and mount file systems from other hosts (import).

Mounting NFS volumes locally on the exporting server is not supported on SUSE Linux Enterprise Server.

29.3 Configuring NFS Server #

Configuring an NFS server can be done either through YaST or manually. For authentication, NFS can also be combined with Kerberos.

29.3.1 Exporting File Systems with YaST #

With YaST, turn a host in your network into an NFS server—a server that exports directories and files to all hosts granted access to it or to all members of a group. Thus, the server can also provide applications without installing the applications locally on every host.

To set up such a server, proceed as follows:

Start YaST and select › ; see Figure 29.1, “NFS Server Configuration Tool”. You may be prompted to install additional software.

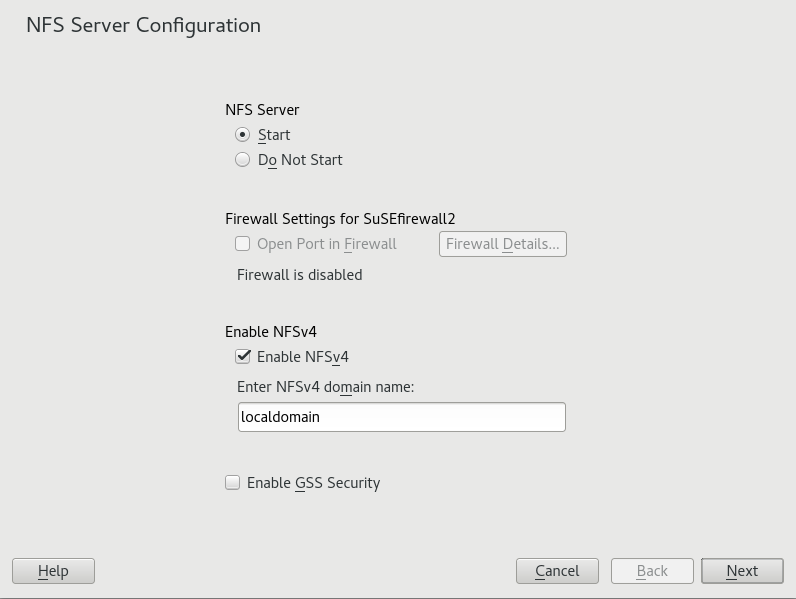

Figure 29.1: NFS Server Configuration Tool #

Activate the radio button.

If a firewall is active on your system (SuSEfirewall2), check . YaST adapts its configuration for the NFS server by enabling the nfs service.

Check whether you want to . If you deactivate NFSv4, YaST will only support NFSv3. For information about enabling NFSv2, see Note: NFSv2.

If NFSv4 is selected, additionally enter the appropriate NFSv4 domain name. This parameter is used by the idmapd daemon that is required for Kerberos setups or if clients cannot work with numeric user names. Leave it as localdomain (the default) if you do not run idmapd or do not have any special requirements. For more information on the idmapd daemon see /etc/idmapd.conf.

Click if you need secure access to the server. A prerequisite for this is to have Kerberos installed on your domain and to have both the server and the clients kerberized. Click to proceed with the next configuration dialog.

Click in the upper half of the dialog to export your directory.

If you have not configured the allowed hosts already, another dialog for entering the client information and options pops up automatically. Enter the host wild card (usually you can leave the default settings as they are).

There are four possible types of host wild cards that can be set for each host: a single host (name or IP address), netgroups, wild cards (such as * indicating all machines can access the server), and IP networks.

For more information about these options, see the exports man page.

Click to complete the configuration.

29.3.2 Exporting File Systems Manually #

The configuration files for the NFS export service are /etc/exports and /etc/sysconfig/nfs. In addition to these files, /etc/idmapd.conf is needed for the NFSv4 server configuration with kerberized NFS or if the clients cannot work with numeric user names.

To start or restart the services, run the command systemctl restart nfsserver. This also restarts the RPC portmapper that is required by the NFS server.

To make sure the NFS server always starts at boot time, run sudo systemctl enable nfsserver.

NFSv4 is the latest version of the NFS protocol available on SUSE Linux Enterprise Server. Configuring directories for export with NFSv4 is now the same as with NFSv3.

On SUSE Linux Enterprise Server 11, the bind mount in /etc/exports was mandatory. It is still supported, but now deprecated.

- /etc/exports

The /etc/exports file contains a list of entries. Each entry indicates a directory that is shared and how it is shared. A typical entry in /etc/exports consists of:

/SHARED/DIRECTORY HOST(OPTION_LIST)

For example:

/export/data 192.168.1.2(rw,sync)

Here the IP address 192.168.1.2 is used to identify the allowed client. You can also use the name of the host, a wild card indicating a set of hosts (*.abc.com, *, etc.), or netgroups (@my-hosts).

For a detailed explanation of all options and their meaning, refer to the man page of exports (man exports).

In case you have modified /etc/exports while the NFS server was running, you need to restart it for the changes to become active: sudo systemctl restart nfsserver.

- /etc/sysconfig/nfs

The /etc/sysconfig/nfs file contains a few parameters that determine NFSv4 server daemon behavior. It is important to set the parameter NFS4_SUPPORT to yes (default). NFS4_SUPPORT determines whether the NFS server supports NFSv4 exports and clients.

In case you have modified /etc/sysconfig/nfs while the NFS server was running, you need to restart it for the changes to become active: sudo systemctl restart nfsserver.

Tip: Mount Options

On SUSE Linux Enterprise Server 11, the --bind mount in /etc/exports was mandatory. It is still supported, but now deprecated. Configuring directories for export with NFSv4 is now the same as with NFSv3.

Note: NFSv2

If NFS clients still depend on NFSv2, enable it on the server in /etc/sysconfig/nfs by setting:

NFSD_OPTIONS="-V2"
MOUNTD_OPTIONS="-V2"

After restarting the service, check whether version 2 is available with the command:

tux > cat /proc/fs/nfsd/versions
+2 +3 +4 +4.1 -4.2

- /etc/idmapd.conf

Starting with SLE 12 SP1, the idmapd daemon is only required if Kerberos authentication is used, or if clients cannot work with numeric user names. Linux clients can work with numeric user names since Linux kernel 2.6.39. The idmapd daemon does the name-to-ID mapping for NFSv4 requests to the server and replies to the client.

If required, idmapd needs to run on the NFSv4 server. Name-to-ID mapping on the client will be done by nfsidmap provided by the package nfs-client.

Make sure that user names and IDs (UIDs) are assigned uniformly across all machines that might share file systems using NFS. This can be achieved by using NIS, LDAP, or any uniform domain authentication mechanism in your domain.

The parameter Domain must be set the same for both client and server in the /etc/idmapd.conf file. If you are not sure, leave the domain as localdomain in the server and client files. A sample configuration file looks like the following:

[General]
Verbosity = 0
Pipefs-Directory = /var/lib/nfs/rpc_pipefs
Domain = localdomain

[Mapping]
Nobody-User = nobody
Nobody-Group = nobody
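Because the Domain value has to match on server and client, it can help to read it out of each configuration file and compare the results. The following is a minimal sketch, not part of the NFS tooling: the function name idmapd_domain is hypothetical, and the parser assumes the simple `Key = Value` layout of the sample file.

```shell
# Print the Domain value from the [General] section of an idmapd.conf-style file.
# Usage: idmapd_domain [FILE]   (FILE defaults to /etc/idmapd.conf)
# Hypothetical helper for illustration only.
idmapd_domain() {
    awk -F= '
        # Track whether the current section is [General].
        /^[ \t]*\[/ { in_general = ($0 ~ /^[ \t]*\[General\]/) }
        # Inside [General], print the trimmed value of the Domain key.
        in_general && $1 ~ /^[ \t]*Domain[ \t]*$/ {
            gsub(/^[ \t]+|[ \t]+$/, "", $2)
            print $2
            exit
        }
    ' "${1:-/etc/idmapd.conf}"
}
```

Run idmapd_domain on the server and on each client; the printed values should be identical.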

To start the idmapd daemon, run systemctl start nfs-idmapd. In case you have modified /etc/idmapd.conf while the daemon was running, you need to restart it for the changes to become active: systemctl restart nfs-idmapd.

For more information, see the man pages of idmapd and idmapd.conf (man idmapd and man idmapd.conf).
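To review which clients and options an exports file grants, the `/SHARED/DIRECTORY HOST(OPTION_LIST)` format described in this section can be split into its parts. The following is a minimal sketch under simplifying assumptions: the function name list_exports is hypothetical, and the parser ignores line continuations, quoted paths, and default option groups that a real exports file may contain.

```shell
# List every (directory, client, options) triple in an exports-style file,
# one per line, separated by tabs.
# Usage: list_exports [FILE]   (FILE defaults to /etc/exports)
# Hypothetical helper for illustration only.
list_exports() {
    awk '
        /^[ \t]*#/ || /^[ \t]*$/ { next }     # skip comments and blank lines
        {
            # Field 1 is the directory; every further field is HOST(OPTION_LIST).
            for (i = 2; i <= NF; i++) {
                client = $i
                opts = ""
                if (match($i, /\(.*\)$/)) {
                    client = substr($i, 1, RSTART - 1)
                    opts = substr($i, RSTART + 1, RLENGTH - 2)
                }
                printf "%s\t%s\t%s\n", $1, client, opts
            }
        }
    ' "${1:-/etc/exports}"
}
```

For the example entry `/export/data 192.168.1.2(rw,sync)`, the function prints the directory, the client 192.168.1.2, and the options rw,sync. On a running server, the output of `sudo exportfs -v` shows what is actually exported and can be compared against this list.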

29.3.3 NFS with Kerberos #

To use Kerberos authentication for NFS, Generic Security Services (GSS) must be enabled. Select in the initial YaST NFS Server dialog. You must have a working Kerberos server to use this feature. YaST does not set up the server but only uses the provided functionality. If you want to use Kerberos authentication in addition to the YaST configuration, complete at least the following steps before running the NFS configuration:

Make sure that both the server and the client are in the same Kerberos domain. They must access the same KDC (Key Distribution Center) server and share their krb5.keytab file (the default location on any machine is /etc/krb5.keytab). For more information about Kerberos, see Chapter 6, Network Authentication with Kerberos.

Start the gssd service on the client with systemctl start rpc-gssd.service.

Start the svcgssd service on the server with systemctl start rpc-svcgssd.service.

Kerberos authentication also requires the idmapd daemon to run on the server. For more information refer to /etc/idmapd.conf.

For more information about configuring kerberized NFS, refer to the links in Section 29.5, “For More Information”.

29.4 Configuring Clients #

To configure your host as an NFS client, you do not need to install additional software. All needed packages are installed by default.

29.4.1 Importing File Systems with YaST #

Authorized users can mount NFS directories from an NFS server into the local file tree using the YaST NFS client module. Proceed as follows:

Start the YaST NFS client module.

Click in the tab. Enter the host name of the NFS server, the directory to import, and the mount point at which to mount this directory locally.

When using NFSv4, select in the tab. Additionally, the must contain the same value as used by the NFSv4 server. The default domain is localdomain.

To use Kerberos authentication for NFS, GSS security must be enabled. Select .

Enable in the tab if you use a Firewall and want to allow access to the service from remote computers. The firewall status is displayed next to the check box.

Click to save your changes.

The configuration is written to /etc/fstab and the specified file systems are mounted. When you start the YaST configuration client at a later time, it also reads the existing configuration from this file.

On (diskless) systems where the root partition is mounted via network as an NFS share, you need to be careful when configuring the network device with which the NFS share is accessible.

When shutting down or rebooting the system, the default processing order is to turn off network connections, then unmount the root partition. With NFS root, this order causes problems: the root partition cannot be cleanly unmounted because the network connection to the NFS share is no longer active. To prevent the system from deactivating the relevant network device, open the network device configuration tab as described in Section 17.4.1.2.5, “Activating the Network Device” and choose in the pane.

29.4.2 Importing File Systems Manually #

The prerequisite for importing file systems manually from an NFS server is a running RPC port mapper. The nfs service takes care to start it properly; thus, start it by entering systemctl start nfs as root. Then remote file systems can be mounted in the file system like local partitions using mount:

tux > sudo mount HOST:REMOTE-PATH LOCAL-PATH

To import user directories from the nfs.example.com machine, for example, use:

tux > sudo mount nfs.example.com:/home /home

The mount command takes several mount options. Bear in mind that the mount options described below are mutually exclusive.

- nconnect

The option defines the number of TCP connections that the client makes to the NFS server. You can specify any number from 1 to 16, where 1 is the default value if the mount option has not been specified.

The nconnect setting is applied only during the first mount process to the particular NFS server. If the same client executes the mount command to the same NFS server, all already established connections will be shared and no new connection will be established. To change the nconnect setting, you have to unmount all client connections to the particular NFS server. Then you can define a new value of the nconnect option.

You can find the current nconnect value in effect in the output of the mount command or in the file /proc/mounts. If there is no value of the mount option, then the option has not been used during mounting and the default value 1 is in use.

The option applies to NFS v2, v3, and all v4.x variants.

Note: Different number of connections than defined by nconnect

As you can close and open connections after the first mount, the actual count of connections does not necessarily have to be the same as the value of nconnect.

- nosharetransport

The option causes the client to use its own isolated TCP connection for the mount. The client will not share the TCP connection with any other mount done before or after.

The option applies to NFS v2 and v3.

- sharetransport

The option is a number that identifies mounts sharing the same TCP connection. If two or more mounts to a particular NFS server have a different value of sharetransport, these mounts will use different connections. If you do not specify the option value for mounts to a particular NFS server, all the mounts will share one TCP connection.

The option applies to NFS v4.x.
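As noted for nconnect, the value in effect can be read from /proc/mounts, and a missing option means the default of 1. The following is a minimal sketch of that lookup; the function name nfs_nconnect is hypothetical, and the code only inspects the option string of each mount, not the actual number of open connections.

```shell
# Print "MOUNTPOINT nconnect=N" for every NFS mount in a /proc/mounts-style file.
# Usage: nfs_nconnect [FILE]   (FILE defaults to /proc/mounts)
# Hypothetical helper for illustration only.
nfs_nconnect() {
    awk '
        $3 == "nfs" || $3 == "nfs4" {
            n = 1                                  # default when the option is absent
            count = split($4, opts, ",")
            for (i = 1; i <= count; i++)
                if (opts[i] ~ /^nconnect=/)
                    n = substr(opts[i], 10)        # text after "nconnect="
            print $2, "nconnect=" n
        }
    ' "${1:-/proc/mounts}"
}
```

Calling nfs_nconnect without arguments reports on the mounts of the running system; non-NFS file systems are skipped.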

29.4.2.1 Using the Automount Service #

The autofs daemon can be used to mount remote file systems automatically.

Add the following entry to the /etc/auto.master file:

/nfsmounts /etc/auto.nfs

Now the /nfsmounts directory acts as the root for all the NFS mounts on the client if the auto.nfs file is filled appropriately. The name auto.nfs is chosen for the sake of convenience; you can choose any name. In auto.nfs add entries for all the NFS mounts as follows:

localdata -fstype=nfs server1:/data
nfs4mount -fstype=nfs4 server2:/

Activate the settings with systemctl start autofs as root. In this example, /nfsmounts/localdata, the /data directory of server1, is mounted with NFS and /nfsmounts/nfs4mount from server2 is mounted with NFSv4.

If the /etc/auto.master file is edited while the service autofs is running, the automounter must be restarted for the changes to take effect with systemctl restart autofs.
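To see how the keys of a map file such as auto.nfs turn into paths below the directory named in /etc/auto.master, the mapping can be printed before the automounter is started. The following is a minimal sketch: the function name autofs_mount_points is hypothetical, and it assumes simple one-line entries of the form `KEY OPTIONS LOCATION` as in the example in this section.

```shell
# Print "ROOT/KEY LOCATION" for each entry of an autofs map file.
# Usage: autofs_mount_points ROOT [MAP_FILE]   (MAP_FILE defaults to /etc/auto.nfs)
# Hypothetical helper for illustration only.
autofs_mount_points() {
    awk -v root="$1" '
        /^[ \t]*#/ || /^[ \t]*$/ { next }    # skip comments and blank lines
        { print root "/" $1, $NF }           # the key becomes a subdirectory of ROOT
    ' "${2:-/etc/auto.nfs}"
}
```

With the two entries from this section, `autofs_mount_points /nfsmounts` lists /nfsmounts/localdata for server1:/data and /nfsmounts/nfs4mount for server2:/.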

29.4.2.2 Manually Editing /etc/fstab #

A typical NFSv3 mount entry in /etc/fstab looks like this:

nfs.example.com:/data /local/path nfs rw,noauto 0 0

For NFSv4 mounts, use nfs4 instead of nfs in the third column:

nfs.example.com:/data /local/pathv4 nfs4 rw,noauto 0 0

The noauto option prevents the file system from being mounted automatically at start-up. If you want to mount the respective file system manually, it is possible to shorten the mount command specifying the mount point only:

tux > sudo mount /local/path

If you do not enter the noauto option, the init scripts of the system will handle the mount of those file systems at start-up.
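To check which NFS entries in /etc/fstab are mounted at start-up and which need a manual mount, the third and fourth columns can be inspected. The following is a minimal sketch; the function name nfs_fstab_entries and the labels "boot" and "manual" are hypothetical and only reflect the presence or absence of noauto.

```shell
# Print "MOUNTPOINT TYPE boot|manual" for each NFS entry of an fstab-style file.
# Usage: nfs_fstab_entries [FILE]   (FILE defaults to /etc/fstab)
# Hypothetical helper for illustration only.
nfs_fstab_entries() {
    awk '
        /^[ \t]*#/ { next }                        # skip comment lines
        $3 == "nfs" || $3 == "nfs4" {
            mode = "boot"                          # mounted at start-up by default
            count = split($4, opts, ",")
            for (i = 1; i <= count; i++)
                if (opts[i] == "noauto")
                    mode = "manual"                # noauto: mount by hand
            print $2, $3, mode
        }
    ' "${1:-/etc/fstab}"
}
```

For the two example entries in this section, both mount points are reported as manual because they carry the noauto option.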

29.4.3 Parallel NFS (pNFS) #

NFS is one of the oldest protocols, developed in the '80s. As such, NFS is usually sufficient if you want to share small files. However, when you want to transfer big files or large numbers of clients want to access data, an NFS server becomes a bottleneck and has a significant impact on the system performance. This is because files are quickly getting bigger, whereas the relative speed of Ethernet has not fully kept up.

When you request a file from a regular NFS server, the server looks up the file metadata, collects all the data and transfers it over the network to your client. However, the performance bottleneck becomes apparent no matter how small or big the files are:

With small files most of the time is spent collecting the metadata.

With big files most of the time is spent on transferring the data from server to client.

pNFS, or parallel NFS, overcomes this limitation as it separates the file system metadata from the location of the data. As such, pNFS requires two types of servers:

A metadata or control server that handles all the non-data traffic

One or more storage server(s) that hold(s) the data

The metadata and the storage servers form a single, logical NFS server. When a client wants to read or write, the metadata server tells the NFSv4 client which storage server to use to access the file chunks. The client can access the data directly on the server.

SUSE Linux Enterprise Server supports pNFS on the client side only.

29.4.3.1 Configuring pNFS Client With YaST #

Proceed as described in Procedure 29.2, “Importing NFS Directories”, but click the check box and optionally . YaST will do all the necessary steps and will write all the required options in the file /etc/exports.

29.4.3.2 Configuring pNFS Client Manually #

Refer to Section 29.4.2, “Importing File Systems Manually” to start. Most of the configuration is done by the NFSv4 server. For pNFS, the only difference is to add the minorversion option and the metadata server MDS_SERVER to your mount command:

tux > sudo mount -t nfs4 -o minorversion=1 MDS_SERVER MOUNTPOINT
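After mounting, it can be useful to confirm whether a pNFS layout is actually in use. The following is a minimal sketch, assuming that the kernel reports a `pnfs=` field on the `nfsv4:` line of /proc/self/mountstats; the function name pnfs_layout is hypothetical, and mount points containing spaces are not handled.

```shell
# Print the pnfs= value reported for a mount point.
# Reads mountstats-formatted text on standard input.
# Usage: pnfs_layout MOUNTPOINT < /proc/self/mountstats
# Hypothetical helper for illustration only.
pnfs_layout() {
    awk -v mp="$1" '
        # "device SERVER:/PATH mounted on MOUNTPOINT with fstype ..." starts a block.
        $1 == "device" { current = $5 }
        # Within the block of the requested mount point, extract the pnfs= field.
        current == mp && match($0, /pnfs=[^,]*/) {
            print substr($0, RSTART + 5, RLENGTH - 5)
            exit
        }
    '
}
```

A value such as "not configured" suggests that the mount works as plain NFSv4.1 without a pNFS layout driver.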

To help with debugging, change the value in the /proc file system:

tux > echo 32767 | sudo tee /proc/sys/sunrpc/nfsd_debug
tux > echo 32767 | sudo tee /proc/sys/sunrpc/nfs_debug

29.5 For More Information #

In addition to the man pages of exports, nfs, and mount, information about configuring an NFS server and client is available in /usr/share/doc/packages/nfsidmap/README. For further documentation online refer to the following Web sites:

Find the detailed technical documentation online at SourceForge.

For instructions for setting up kerberized NFS, refer to NFS Version 4 Open Source Reference Implementation.

If you have questions on NFSv4, refer to the Linux NFSv4 FAQ.