20 RADOS Block Device

A block is a sequence of bytes, for example a 4 MB block of data. Block-based storage interfaces are the most common way to store data with rotating media, such as hard disks, CDs, and floppy disks. The ubiquity of block device interfaces makes a virtual block device an ideal candidate to interact with a mass data storage system like Ceph.

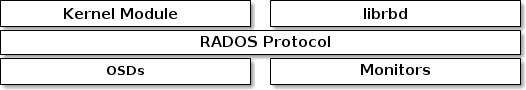

Ceph block devices allow sharing of physical resources, and are resizable. They store data striped over multiple OSDs in a Ceph cluster. Ceph block devices leverage RADOS capabilities such as snapshotting, replication, and consistency. Ceph's RADOS Block Devices (RBD) interact with OSDs using kernel modules or the librbd library.

Ceph's block devices deliver high performance and vast scalability to kernel modules. They support virtualization solutions such as QEMU, and cloud-based computing systems such as OpenStack that rely on libvirt. You can use the same cluster to operate the Object Gateway, CephFS, and RADOS Block Devices simultaneously.

20.1 Block device commands

The rbd command enables you to create, list, introspect, and remove block device images. You can also use it, for example, to clone images, create snapshots, roll back an image to a snapshot, or view a snapshot.

20.1.1 Creating a block device image in a replicated pool

Before you can add a block device to a client, you need to create a related image in an existing pool (see Chapter 18, Manage storage pools):

cephuser@adm > rbd create --size MEGABYTES POOL-NAME/IMAGE-NAME

For example, to create a 1 GB image named 'myimage' that stores information in a pool named 'mypool', execute the following:

cephuser@adm > rbd create --size 1024 mypool/myimage

If you omit a size unit shortcut ('G' or 'T'), the image's size is in megabytes. Use 'G' or 'T' after the size number to specify gigabytes or terabytes.
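The size rule above can be illustrated with a small conversion helper. This is a minimal sketch and is not part of Ceph. `to_megabytes` is a hypothetical name. It assumes 1 G = 1024 MB and 1 T = 1024 G, and it handles only the bare-number, G, and T forms mentioned here.

```shell
#!/bin/sh
# Hypothetical helper: convert a --size argument (N, NG or NT) to megabytes,
# following the rule that a number without a unit is already in megabytes.
to_megabytes() {
  num=${1%[GT]}                          # strip an optional G or T suffix
  case "$num" in
    ''|*[!0-9]*) echo "unsupported size: $1" >&2; return 1 ;;
  esac
  case "$1" in
    *G) echo $(( num * 1024 )) ;;        # gigabytes -> megabytes
    *T) echo $(( num * 1024 * 1024 )) ;; # terabytes -> megabytes
    *)  echo "$num" ;;                   # no unit: megabytes already
  esac
}
```

Under this convention, `to_megabytes 1G` prints 1024. That means `rbd create --size 1G mypool/myimage` and `rbd create --size 1024 mypool/myimage` both request a 1 GB image.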

20.1.2 Creating a block device image in an erasure coded pool

It is possible to store data of a block device image directly in erasure coded (EC) pools. A RADOS Block Device image consists of data and metadata parts. You can store only the data part of a RADOS Block Device image in an EC pool. The pool needs to have the allow_ec_overwrites flag set to true, and that is only possible if all OSDs where the pool is stored use BlueStore.

You cannot store the image's metadata part in an EC pool. You can specify the replicated pool for storing the image's metadata with the --pool= option of the rbd create command, or specify POOL/ as a prefix to the image name.

Create an EC pool:

cephuser@adm > ceph osd pool create EC_POOL 12 12 erasure
cephuser@adm > ceph osd pool set EC_POOL allow_ec_overwrites true

Specify the replicated pool for storing metadata:

cephuser@adm > rbd create IMAGE_NAME --size=1G --data-pool EC_POOL --pool=POOL

Or:

cephuser@adm > rbd create POOL/IMAGE_NAME --size=1G --data-pool EC_POOL

20.1.3 Listing block device images

To list block devices in a pool named 'mypool', execute the following:

cephuser@adm > rbd ls mypool

20.1.4 Retrieving image information

To retrieve information from an image 'myimage' within a pool named 'mypool', run the following:

cephuser@adm > rbd info mypool/myimage

20.1.5 Resizing a block device image

RADOS Block Device images are thin provisioned: they do not actually use any physical storage until you begin saving data to them. However, they do have a maximum capacity that you set with the --size option. If you want to increase (or decrease) the maximum size of the image, run the following:

cephuser@adm > rbd resize --size 2048 POOL_NAME/IMAGE_NAME # to increase
cephuser@adm > rbd resize --size 2048 POOL_NAME/IMAGE_NAME --allow-shrink # to decrease
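Shrinking requires the extra --allow-shrink flag, so a wrapper can add the flag only when the new size is smaller than the current one. That way, a resize meant to grow the image never passes the flag by accident. This is a minimal sketch. `rbd_resize_to` is hypothetical, and the caller is assumed to supply the current size in megabytes, for example as read from rbd info.

```shell
#!/bin/sh
# Hypothetical wrapper: resize IMAGE_SPEC from CURRENT_MB to NEW_MB and add
# --allow-shrink only when the image gets smaller.
rbd_resize_to() {
  spec=$1 current_mb=$2 new_mb=$3
  if [ "$new_mb" -lt "$current_mb" ]; then
    # Shrinking discards data beyond the new size; rbd refuses without the flag.
    rbd resize --size "$new_mb" "$spec" --allow-shrink
  else
    rbd resize --size "$new_mb" "$spec"
  fi
}
```

For example, `rbd_resize_to mypool/myimage 2048 1024` runs the shrinking form. Shrink any file system on the image before shrinking the image itself.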

20.1.6 Removing a block device image

To remove a block device that corresponds to an image 'myimage' in a pool named 'mypool', run the following:

cephuser@adm > rbd rm mypool/myimage

20.2 Mounting and unmounting

After you create a RADOS Block Device, you can use it like any other disk device: format it, mount it to be able to exchange files, and unmount it when done.

The rbd command defaults to accessing the cluster using the Ceph admin user account. This account has full administrative access to the cluster. This runs the risk of accidentally causing damage, similarly to logging into a Linux workstation as root. Thus, it is preferable to create user accounts with fewer privileges and use these accounts for normal read/write RADOS Block Device access.

20.2.1 Creating a Ceph user account

To create a new user account with Ceph Manager, Ceph Monitor, and Ceph OSD capabilities, use the ceph command with the auth get-or-create subcommand:

cephuser@adm > ceph auth get-or-create client.ID mon 'profile rbd' \
  osd 'profile PROFILE_NAME [pool=POOL_NAME] [, profile ...]' \
  mgr 'profile rbd [pool=POOL_NAME]'

For example, to create a user called qemu with read-write access to the pool vms and read-only access to the pool images, execute the following:

cephuser@adm > ceph auth get-or-create client.qemu mon 'profile rbd' \
  osd 'profile rbd pool=vms, profile rbd-read-only pool=images' \
  mgr 'profile rbd pool=images'

The output from the ceph auth get-or-create command is the keyring for the specified user, which can be written to /etc/ceph/ceph.client.ID.keyring.
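You can create the user and save its keyring in one step, because the ceph command accepts -o FILE to write its output to a file. This is a minimal sketch that reproduces the qemu example above, with the pool names passed as parameters. `create_rbd_user` is a hypothetical name. Writing to /etc/ceph normally requires root.

```shell
#!/bin/sh
# Hypothetical helper: create (or fetch) client.ID with the caps from the qemu
# example and write its keyring to /etc/ceph/ceph.client.ID.keyring.
create_rbd_user() {
  id=$1 rw_pool=$2 ro_pool=$3
  ceph auth get-or-create "client.$id" \
    mon 'profile rbd' \
    osd "profile rbd pool=$rw_pool, profile rbd-read-only pool=$ro_pool" \
    mgr "profile rbd pool=$ro_pool" \
    -o "/etc/ceph/ceph.client.$id.keyring"   # mgr cap as in the example above
}
```

For example, `create_rbd_user qemu vms images` creates client.qemu and writes /etc/ceph/ceph.client.qemu.keyring. Commands such as `rbd --id qemu ls vms` can then find the key in the default keyring location.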

When using the rbd command, you can specify the user ID by providing the optional --id ID argument.

For more details on managing Ceph user accounts, refer to Chapter 30, Authentication with cephx.

20.2.2 User authentication

To specify a user name, use --id user-name. If you use cephx authentication, you also need to specify a secret. It may come from a keyring or a file containing the secret:

cephuser@adm > rbd device map --pool rbd myimage --id admin --keyring /path/to/keyring

or

cephuser@adm > rbd device map --pool rbd myimage --id admin --keyfile /path/to/file

20.2.3 Preparing a RADOS Block Device for use

Make sure your Ceph cluster includes a pool with the disk image you want to map. Assume the pool is called mypool and the image is myimage.

cephuser@adm > rbd list mypool

Map the image to a new block device:

cephuser@adm > rbd device map --pool mypool myimage

List all mapped devices:

cephuser@adm > rbd device list
id pool   image   snap device
0  mypool myimage -    /dev/rbd0

In this example, the device to work on is /dev/rbd0.

Tip: RBD device path

Instead of /dev/rbdDEVICE_NUMBER, you can use /dev/rbd/POOL_NAME/IMAGE_NAME as a persistent device path. For example: /dev/rbd/mypool/myimage

Make an XFS file system on the /dev/rbd0 device:

#mkfs.xfs /dev/rbd0
log stripe unit (4194304 bytes) is too large (maximum is 256KiB)
log stripe unit adjusted to 32KiB
meta-data=/dev/rbd0              isize=256    agcount=9, agsize=261120 blks
         =                       sectsz=512   attr=2, projid32bit=1
         =                       crc=0        finobt=0
data     =                       bsize=4096   blocks=2097152, imaxpct=25
         =                       sunit=1024   swidth=1024 blks
naming   =version 2              bsize=4096   ascii-ci=0 ftype=0
log      =internal log           bsize=4096   blocks=2560, version=2
         =                       sectsz=512   sunit=8 blks, lazy-count=1
realtime =none                   extsz=4096   blocks=0, rtextents=0

Replacing /mnt with your mount point, mount the device and check that it is correctly mounted:

#mount /dev/rbd0 /mnt
#mount | grep rbd0
/dev/rbd0 on /mnt type xfs (rw,relatime,attr2,inode64,sunit=8192,...

Now you can move data to and from the device as if it were a local directory.
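The steps above can be collected into one function. This is a minimal sketch that assumes an XFS file system, as in the example, and a caller with root privileges. `map_format_mount` is hypothetical. It uses the device path that rbd device map prints on success (such as /dev/rbd0) instead of guessing the device number, and it calls mkfs -t xfs, which runs mkfs.xfs. mkfs destroys any data already on the image, so use this only for a new image.

```shell
#!/bin/sh
# Hypothetical helper: map POOL/IMAGE, create an XFS file system on it and
# mount it at MOUNTPOINT. Destroys existing data on the image; run as root.
map_format_mount() {
  pool=$1 image=$2 mountpoint=$3
  # rbd device map prints the kernel device it created, e.g. /dev/rbd0
  dev=$(rbd device map --pool "$pool" "$image") || return 1
  mkfs -t xfs "$dev" || return 1
  mount "$dev" "$mountpoint"
}
```

For example, `map_format_mount mypool myimage /mnt` repeats the map, mkfs, and mount steps shown above.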

Tip: Increasing the size of RBD device

If you find that the size of the RBD device is no longer enough, you can easily increase it.

Increase the size of the RBD image, for example to 10000 MB (about 10 GB):

cephuser@adm > rbd resize --size 10000 mypool/myimage
Resizing image: 100% complete...done.

Grow the file system to fill up the new size of the device:

#xfs_growfs /mnt
[...]
data blocks changed from 2097152 to 2560000

After you finish accessing the device, unmount it and then unmap it:

#umount /mnt
cephuser@adm > rbd device unmap /dev/rbd0

An rbdmap script and a systemd unit are provided to make the process of mapping and mounting RBDs after boot, and unmounting them before shutdown, smoother. Refer to Section 20.2.4, “rbdmap: Map RBD devices at boot time”.

20.2.4 rbdmap: Map RBD devices at boot time

rbdmap is a shell script that automates rbd device map and rbd device unmap operations on one or more RBD images. Although you can run the script manually at any time, its main advantage is automatic mapping and mounting of RBD images at boot time (and unmounting and unmapping at shutdown), as triggered by the init system. A systemd unit file, rbdmap.service, is included with the ceph-common package for this purpose.

The script takes a single argument, which can be either map or unmap. In either case, the script parses a configuration file. It defaults to /etc/ceph/rbdmap, but can be overridden via an environment variable RBDMAPFILE. Each line of the configuration file corresponds to an RBD image which is to be mapped, or unmapped.

The configuration file has the following format:

image_specification rbd_options

- image_specification

Path to an image within a pool. Specify as pool_name/image_name.

- rbd_options

An optional list of parameters to be passed to the underlying rbd device map command. These parameters and their values should be specified as a comma-separated string, for example:

PARAM1=VAL1,PARAM2=VAL2,...

The example makes the rbdmap script run the following command:

cephuser@adm > rbd device map POOL_NAME/IMAGE_NAME --PARAM1 VAL1 --PARAM2 VAL2

The following configuration file line shows how to specify a user name and a keyring with a corresponding secret:

mypool/myimage id=rbd_user,keyring=/etc/ceph/ceph.client.rbd.keyring
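The translation from a configuration line to an rbd device map call can be shown with a simplified sketch. It is not the code of the real rbdmap script, and `rbdmap_line_to_cmd` is hypothetical. The sketch assumes that fields are separated by spaces or tabs. It skips empty lines and lines starting with #, and it rejects parameters that lack an =VALUE part instead of guessing how they should be passed.

```shell
#!/bin/sh
# Hypothetical, simplified model of how an rbdmap configuration line becomes
# an rbd device map command: "pool/image P1=V1,P2=V2" -> "... --P1 V1 --P2 V2".
rbdmap_line_to_cmd() {
  # First field is the image spec, the rest (if any) is the option string.
  read -r spec opts <<EOF
$1
EOF
  case "$spec" in ''|'#'*) return 0 ;; esac   # skip blank and comment lines

  cmd="rbd device map $spec"
  status=0
  old_ifs=$IFS
  IFS=,                                       # split options on commas
  set -f                                      # and do not expand globs
  for kv in $opts; do
    case "$kv" in
      *=*) cmd="$cmd --${kv%%=*} ${kv#*=}" ;; # PARAM=VAL -> --PARAM VAL
      *)   status=1 ;;                        # no value: not handled here
    esac
  done
  set +f
  IFS=$old_ifs

  if [ "$status" -ne 0 ]; then
    echo "unsupported option in: $1" >&2
    return 1
  fi
  echo "$cmd"
}
```

For the line `mypool/myimage id=rbd_user,keyring=/etc/ceph/ceph.client.rbd.keyring`, the function prints `rbd device map mypool/myimage --id rbd_user --keyring /etc/ceph/ceph.client.rbd.keyring`.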

When run as rbdmap map, the script parses the configuration file, and for each specified RBD image, it attempts to first map the image (using the rbd device map command) and then mount the image.

When run as rbdmap unmap, images listed in the configuration file will be unmounted and unmapped.

rbdmap unmap-all attempts to unmount and subsequently unmap all currently mapped RBD images, regardless of whether they are listed in the configuration file.

If successful, the rbd device map operation maps the image to a /dev/rbdX device, at which point a udev rule is triggered to create a friendly device name symbolic link /dev/rbd/pool_name/image_name pointing to the real mapped device.

In order for mounting and unmounting to succeed, the 'friendly' device name needs to have a corresponding entry in /etc/fstab.

When writing /etc/fstab entries for RBD images, specify the 'noauto' (or 'nofail') mount option. This prevents the init system from trying to mount the device too early, before the device in question even exists, as rbdmap.service is typically triggered quite late in the boot sequence.
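A matching /etc/fstab entry combines the friendly device path with the noauto option. The following minimal sketch prints such a line. `fstab_line_for`, the default xfs file system type, and the `0 0` dump and pass fields are assumptions chosen for illustration.

```shell
#!/bin/sh
# Hypothetical helper: print an /etc/fstab line for an RBD image that rbdmap
# maps at boot. "noauto" keeps the init system from mounting it before
# rbdmap.service has created /dev/rbd/POOL/IMAGE.
fstab_line_for() {
  pool=$1 image=$2 mountpoint=$3 fstype=${4:-xfs}
  printf '/dev/rbd/%s/%s %s %s noauto 0 0\n' "$pool" "$image" "$mountpoint" "$fstype"
}
```

For example, `fstab_line_for mypool myimage /mnt/rbd` prints `/dev/rbd/mypool/myimage /mnt/rbd xfs noauto 0 0`, which can be appended to /etc/fstab.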

For a complete list of rbd options, see the rbd manual page (man 8 rbd).

For examples of the rbdmap usage, see the rbdmap manual page (man 8 rbdmap).

20.2.5 Increasing the size of RBD devices

If you find that the size of the RBD device is no longer enough, you can easily increase it.

Increase the size of the RBD image, for example to 10000 MB (about 10 GB):

cephuser@adm > rbd resize --size 10000 mypool/myimage
Resizing image: 100% complete...done.

Grow the file system to fill up the new size of the device:

#xfs_growfs /mnt
[...]
data blocks changed from 2097152 to 2560000
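The two steps can be wrapped so that the file system is grown only after the image resize has succeeded, because the file system can only grow into space the image already has. This is a minimal sketch that assumes an XFS file system mounted at MOUNTPOINT (xfs_growfs works on a mounted file system). `grow_rbd` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: grow POOL/IMAGE to NEW_MB, then grow the mounted XFS
# file system so that it fills the new space.
grow_rbd() {
  spec=$1 new_mb=$2 mountpoint=$3
  rbd resize --size "$new_mb" "$spec" || return 1   # enlarge the image first
  xfs_growfs "$mountpoint"                          # then the file system
}
```

`grow_rbd mypool/myimage 10000 /mnt` runs the same two commands as the example above.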

20.3 Snapshots

An RBD snapshot is a snapshot of a RADOS Block Device image. With snapshots, you retain a history of the image's state. Ceph also supports snapshot layering, which allows you to clone VM images quickly and easily. Ceph supports block device snapshots using the rbd command and many higher-level interfaces, including QEMU, libvirt, OpenStack, and CloudStack.

Stop input and output operations and flush all pending writes before snapshotting an image. If the image contains a file system, the file system must be in a consistent state at the time of snapshotting.
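For an image that is mapped and mounted on a Linux host, one way to meet this requirement is to freeze the file system with fsfreeze (from util-linux) while the snapshot is taken. Freezing flushes pending writes and blocks new ones. This is a minimal sketch that assumes it runs as root on the host where the file system is mounted. `consistent_snap` is a hypothetical name. Writers stall while the file system is frozen, so keep that window short.

```shell
#!/bin/sh
# Hypothetical helper: snapshot POOL/IMAGE@SNAP while the file system mounted
# at MOUNTPOINT is frozen, then thaw it again.
consistent_snap() {
  spec=$1 mountpoint=$2
  fsfreeze --freeze "$mountpoint" || return 1
  rbd snap create "$spec"
  rc=$?
  fsfreeze --unfreeze "$mountpoint"     # thaw even if the snapshot failed
  return $rc
}
```

For example, `consistent_snap rbd/image1@snapshot1 /mnt` freezes /mnt, creates the snapshot, and thaws /mnt.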

20.3.1 Enabling and configuring cephx

When cephx is enabled, you must specify a user name or ID and a path to the keyring containing the corresponding key for the user. See Chapter 30, Authentication with cephx for more details. You may also add the CEPH_ARGS environment variable to avoid re-entry of the following parameters.

cephuser@adm > rbd --id user-ID --keyring=/path/to/secret commands
cephuser@adm > rbd --name username --keyring=/path/to/secret commands

For example:

cephuser@adm > rbd --id admin --keyring=/etc/ceph/ceph.keyring commands
cephuser@adm > rbd --name client.admin --keyring=/etc/ceph/ceph.keyring commands

Add the user and secret to the CEPH_ARGS environment variable so that you do not need to enter them each time.
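For example, with the admin credentials used above, a shell session can set the variable once. This is a sketch. Replace the ID and keyring path with those of your own, less privileged user where possible.

```shell
#!/bin/sh
# rbd reads additional command-line arguments from CEPH_ARGS.
export CEPH_ARGS="--id admin --keyring=/etc/ceph/ceph.keyring"
# From now on in this shell, the credentials can be omitted, for example:
#   rbd snap ls rbd/image1
```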

20.3.2 Snapshot basics

The following procedures demonstrate how to create, list, and remove snapshots using the rbd command on the command line.

20.3.2.1 Creating snapshots

To create a snapshot with rbd, specify the snap create option, the pool name, the image name, and the snapshot name.

cephuser@adm > rbd --pool pool-name snap create --snap snap-name image-name
cephuser@adm > rbd snap create pool-name/image-name@snap-name

For example:

cephuser@adm > rbd --pool rbd snap create --snap snapshot1 image1
cephuser@adm > rbd snap create rbd/image1@snapshot1

20.3.2.2 Listing snapshots

To list snapshots of an image, specify the pool name and the image name.

cephuser@adm > rbd --pool pool-name snap ls image-name
cephuser@adm > rbd snap ls pool-name/image-name

For example:

cephuser@adm > rbd --pool rbd snap ls image1
cephuser@adm > rbd snap ls rbd/image1

20.3.2.3 Rolling back snapshots

To roll back to a snapshot with rbd, specify the snap rollback option, the pool name, the image name, and the snapshot name.

cephuser@adm > rbd --pool pool-name snap rollback --snap snap-name image-name
cephuser@adm > rbd snap rollback pool-name/image-name@snap-name

For example:

cephuser@adm > rbd --pool pool1 snap rollback --snap snapshot1 image1
cephuser@adm > rbd snap rollback pool1/image1@snapshot1

Rolling back an image to a snapshot means overwriting the current version of the image with data from a snapshot. The time it takes to execute a rollback increases with the size of the image. It is faster to clone from a snapshot than to roll back an image to a snapshot, and cloning is the preferred method of returning to a pre-existing state.

20.3.2.4 Deleting a snapshot

To delete a snapshot with rbd, specify the snap rm option, the pool name, the image name, and the snapshot name.

cephuser@adm > rbd --pool pool-name snap rm --snap snap-name image-name
cephuser@adm > rbd snap rm pool-name/image-name@snap-name

For example:

cephuser@adm > rbd --pool pool1 snap rm --snap snapshot1 image1
cephuser@adm > rbd snap rm pool1/image1@snapshot1

Ceph OSDs delete data asynchronously, so deleting a snapshot does not free up the disk space immediately.

20.3.2.5 Purging snapshots

To delete all snapshots for an image with rbd, specify the snap purge option and the image name.

cephuser@adm > rbd --pool pool-name snap purge image-name
cephuser@adm > rbd snap purge pool-name/image-name

For example:

cephuser@adm > rbd --pool pool1 snap purge image1
cephuser@adm > rbd snap purge pool1/image1

20.3.3 Snapshot layering

Ceph supports the ability to create multiple copy-on-write (COW) clones of a block device snapshot. Snapshot layering enables Ceph block device clients to create images very quickly. For example, you might create a block device image with a Linux VM written to it, then snapshot the image, protect the snapshot, and create as many copy-on-write clones as you like. A snapshot is read-only, so cloning a snapshot simplifies semantics, making it possible to create clones rapidly.

The terms 'parent' and 'child' mentioned in the command line examples below mean a Ceph block device snapshot (parent) and the corresponding image cloned from the snapshot (child).

Each cloned image (child) stores a reference to its parent image, which enables the cloned image to open the parent snapshot and read it.

A COW clone of a snapshot behaves exactly like any other Ceph block device image. You can read from, write to, clone, and resize cloned images. There are no special restrictions with cloned images. However, the copy-on-write clone of a snapshot refers to the snapshot, so you must protect the snapshot before you clone it.

--image-format 1 not supported

You cannot clone snapshots of images created with the deprecated rbd create --image-format 1 option. Ceph only supports cloning of the default format 2 images.

20.3.3.1 Getting started with layering

Ceph block device layering is a simple process. You must have an image. You must create a snapshot of the image. You must protect the snapshot. After you have performed these steps, you can begin cloning the snapshot.
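Once the image exists, the remaining steps correspond to three rbd subcommands. The following minimal sketch chains them and stops at the first failure. `clone_from` is a hypothetical name. The example names come from the commands in this section.

```shell
#!/bin/sh
# Hypothetical helper: snapshot PARENT (pool/image), protect the snapshot SNAP,
# then clone it into CHILD (pool/image).
clone_from() {
  parent=$1 snap=$2 child=$3
  rbd snap create "$parent@$snap"  &&   # take the snapshot
  rbd snap protect "$parent@$snap" &&   # protect it so it cannot be deleted
  rbd clone "$parent@$snap" "$child"    # create the copy-on-write clone
}
```

`clone_from pool1/image1 snapshot1 pool1/image2` runs rbd snap create, rbd snap protect, and rbd clone, in that order.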

The cloned image has a reference to the parent snapshot, and includes the pool ID, image ID, and snapshot ID. The inclusion of the pool ID means that you may clone snapshots from one pool to images in another pool.

Image Template: A common use case for block device layering is to create a master image and a snapshot that serves as a template for clones. For example, a user may create an image for a Linux distribution (for example, SUSE Linux Enterprise Server), and create a snapshot for it. Periodically, the user may update the image and create a new snapshot (for example, zypper ref && zypper patch followed by rbd snap create). As the image matures, the user can clone any one of the snapshots.

Extended Template: A more advanced use case includes extending a template image that provides more information than a base image. For example, a user may clone an image (a VM template) and install other software (for example, a database, a content management system, or an analytics system), and then snapshot the extended image, which itself may be updated in the same way as the base image.

Template Pool: One way to use block device layering is to create a pool that contains master images that act as templates, and snapshots of those templates. You may then extend read-only privileges to users so that they may clone the snapshots without the ability to write or execute within the pool.

Image Migration/Recovery: One way to use block device layering is to migrate or recover data from one pool into another pool.

20.3.3.2 Protecting a snapshot

Clones access the parent snapshots. All clones would break if a user inadvertently deleted the parent snapshot. To prevent data loss, you need to protect the snapshot before you can clone it.

cephuser@adm > rbd --pool pool-name snap protect \
  --image image-name --snap snapshot-name
cephuser@adm > rbd snap protect pool-name/image-name@snapshot-name

For example:

cephuser@adm > rbd --pool pool1 snap protect --image image1 --snap snapshot1
cephuser@adm > rbd snap protect pool1/image1@snapshot1

You cannot delete a protected snapshot.

20.3.3.3 Cloning a snapshot

To clone a snapshot, you need to specify the parent pool, image, snapshot, the child pool, and the image name. You need to protect the snapshot before you can clone it.

cephuser@adm > rbd clone --pool pool-name --image parent-image \
  --snap snap-name --dest-pool pool-name \
  --dest child-image
cephuser@adm > rbd clone pool-name/parent-image@snap-name \
  pool-name/child-image-name

For example:

cephuser@adm > rbd clone pool1/image1@snapshot1 pool1/image2

You may clone a snapshot from one pool to an image in another pool. For example, you may maintain read-only images and snapshots as templates in one pool, and writable clones in another pool.

20.3.3.4 Unprotecting a snapshot

Before you can delete a snapshot, you must unprotect it first. Additionally, you may not delete snapshots that have references from clones. You need to flatten each clone of a snapshot before you can delete the snapshot.

cephuser@adm > rbd --pool pool-name snap unprotect --image image-name \
  --snap snapshot-name
cephuser@adm > rbd snap unprotect pool-name/image-name@snapshot-name

For example:

cephuser@adm > rbd --pool pool1 snap unprotect --image image1 --snap snapshot1
cephuser@adm > rbd snap unprotect pool1/image1@snapshot1

20.3.3.5 Listing children of a snapshot

To list the children of a snapshot, execute the following:

cephuser@adm > rbd --pool pool-name children --image image-name --snap snap-name
cephuser@adm > rbd children pool-name/image-name@snapshot-name

For example:

cephuser@adm > rbd --pool pool1 children --image image1 --snap snapshot1
cephuser@adm > rbd children pool1/image1@snapshot1

20.3.3.6 Flattening a cloned image

Cloned images retain a reference to the parent snapshot. When you remove the reference from the child clone to the parent snapshot, you effectively 'flatten' the image by copying the information from the snapshot to the clone. The time it takes to flatten a clone increases with the size of the snapshot. To delete a snapshot, you must flatten the child images first.

cephuser@adm > rbd --pool pool-name flatten --image image-name
cephuser@adm > rbd flatten pool-name/image-name

For example:

cephuser@adm > rbd --pool pool1 flatten --image image1
cephuser@adm > rbd flatten pool1/image1

Since a flattened image contains all the information from the snapshot, a flattened image will take up more storage space than a layered clone.
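Sections 20.3.3.4 to 20.3.3.6 together give the order for removing a snapshot that still has clones: flatten every child, unprotect the snapshot, then delete it. The following minimal sketch follows that order. `retire_snapshot` is hypothetical, and it assumes that rbd children prints one child image specification per line. Each flatten copies data into the child, so check the free capacity first.

```shell
#!/bin/sh
# Hypothetical helper: flatten all clones of POOL/IMAGE@SNAP, then unprotect
# and remove the snapshot.
retire_snapshot() {
  snap_spec=$1
  children=$(rbd children "$snap_spec") || return 1
  for child in $children; do            # one pool/image spec per line
    rbd flatten "$child" || return 1    # copy parent data into the clone
  done
  rbd snap unprotect "$snap_spec" &&
  rbd snap rm "$snap_spec"
}
```

For example, `retire_snapshot pool1/image1@snapshot1` flattens each listed child before it runs rbd snap unprotect and rbd snap rm.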

20.4 RBD image mirrors

RBD images can be asynchronously mirrored between two Ceph clusters. This capability is available in two modes:

- Journal-based

This mode uses the RBD journaling image feature to ensure point-in-time, crash-consistent replication between clusters. Every write to the RBD image is first recorded to the associated journal before modifying the actual image. The remote cluster will read from the journal and replay the updates to its local copy of the image. Since each write to the RBD image will result in two writes to the Ceph cluster, expect write latencies to nearly double when using the RBD journaling image feature.

- Snapshot-based

This mode uses periodically-scheduled or manually-created RBD image mirror-snapshots to replicate crash-consistent RBD images between clusters. The remote cluster will determine any data or metadata updates between two mirror-snapshots, and copy the deltas to its local copy of the image. With the help of the RBD fast-diff image feature, updated data blocks can be quickly computed without the need to scan the full RBD image. Since this mode is not point-in-time consistent, the full snapshot delta will need to be synchronized prior to use during a failover scenario. Any partially-applied snapshot deltas will be rolled back to the last fully synchronized snapshot prior to use.

Mirroring is configured on a per-pool basis within peer clusters. It can be configured on a specific subset of images within the pool or, when using journal-based mirroring only, configured to automatically mirror all images within a pool. Mirroring is configured using the rbd command. The rbd-mirror daemon is responsible for pulling image updates from the remote, peer cluster and applying them to the image within the local cluster.

Depending on the desired needs for replication, RBD mirroring can be configured for either one- or two-way replication:

- One-way Replication

When data is only mirrored from a primary cluster to a secondary cluster, the rbd-mirror daemon runs only on the secondary cluster.

- Two-way Replication

When data is mirrored from primary images on one cluster to non-primary images on another cluster (and vice-versa), the rbd-mirror daemon runs on both clusters.

Each instance of the rbd-mirror daemon needs to be able to connect to both the local and remote Ceph clusters simultaneously, that is, to all Monitor and OSD hosts of both clusters. Additionally, the network needs to have sufficient bandwidth between the two data centers to handle the mirroring workload.

20.4.1 Pool configuration

The following procedures demonstrate how to perform the basic administrative tasks to configure mirroring using the rbd command. Mirroring is configured on a per-pool basis within the Ceph clusters.

You need to perform the pool configuration steps on both peer clusters. For clarity, these procedures assume that two clusters, named local and remote, are accessible from a single host.

See the rbd manual page (man 8 rbd) for additional details on how to connect to different Ceph clusters.

The cluster name in the following examples corresponds to a Ceph configuration file of the same name, /etc/ceph/remote.conf, and a Ceph keyring file of the same name, /etc/ceph/remote.client.admin.keyring.

20.4.1.1 Enable mirroring on a pool

To enable mirroring on a pool, specify the mirror pool enable subcommand, the pool name, and the mirroring mode. The mirroring mode can either be pool or image:

- pool

All images in the pool with the journaling feature enabled are mirrored.

- image

Mirroring needs to be explicitly enabled on each image. See Section 20.4.2.1, “Enabling image mirroring” for more information.

For example:

cephuser@adm > rbd --cluster local mirror pool enable POOL_NAME pool
cephuser@adm > rbd --cluster remote mirror pool enable POOL_NAME pool
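The same command has to run against both peers, so it can be looped over the two cluster names. This is a minimal sketch that assumes the local and remote cluster names used in this section. `enable_pool_mirroring` is hypothetical and accepts only the pool and image modes described above.

```shell
#!/bin/sh
# Hypothetical helper: enable mirroring for POOL in MODE on both peer clusters.
enable_pool_mirroring() {
  pool=$1 mode=$2
  case "$mode" in
    pool|image) ;;
    *) echo "mode must be 'pool' or 'image', got '$mode'" >&2; return 1 ;;
  esac
  for cluster in local remote; do
    rbd --cluster "$cluster" mirror pool enable "$pool" "$mode" || return 1
  done
}
```

`enable_pool_mirroring image-pool image` issues the image-mode command first on local, then on remote.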

20.4.1.2 Disable mirroring

To disable mirroring on a pool, specify the mirror pool disable subcommand and the pool name. When mirroring is disabled on a pool in this way, mirroring will also be disabled on any images (within the pool) for which mirroring was enabled explicitly.

cephuser@adm > rbd --cluster local mirror pool disable POOL_NAME
cephuser@adm > rbd --cluster remote mirror pool disable POOL_NAME

20.4.1.3 Bootstrapping peers

In order for the rbd-mirror daemon to discover its peer cluster, the peer needs to be registered to the pool and a user account needs to be created. This process can be automated with rbd and the mirror pool peer bootstrap create and mirror pool peer bootstrap import commands.

To manually create a new bootstrap token with rbd, specify the mirror pool peer bootstrap create command, a pool name, along with an optional friendly site name to describe the local cluster:

cephuser@local > rbd mirror pool peer bootstrap create \
  [--site-name LOCAL_SITE_NAME] POOL_NAME

The output of mirror pool peer bootstrap create will be a token that should be provided to the mirror pool peer bootstrap import command. For example, on the local cluster:

cephuser@local > rbd --cluster local mirror pool peer bootstrap create --site-name local image-pool
eyJmc2lkIjoiOWY1MjgyZGItYjg5OS00NTk2LTgwOTgtMzIwYzFmYzM5NmYzIiwiY2xpZW50X2lkIjoicmJkLW1pcnJvci1wZWVyIiwia2V5IjoiQVFBUnczOWQwdkhvQmhBQVlMM1I4RmR5dHNJQU50bkFTZ0lOTVE9PSIsIm1vbl9ob3N0IjoiW3YyOjE5Mi4xNjguMS4zOjY4MjAsdjE6MTkyLjE2OC4xLjM6NjgyMV0ifQ==

To manually import the bootstrap token created by another cluster with the rbd command, use the following syntax:

rbd mirror pool peer bootstrap import \
  [--site-name LOCAL_SITE_NAME] \
  [--direction DIRECTION] \
  POOL_NAME TOKEN_PATH

Where:

- LOCAL_SITE_NAME

An optional friendly site name to describe the local cluster.

- DIRECTION

A mirroring direction. Defaults to rx-tx for bidirectional mirroring, but can also be set to rx-only for unidirectional mirroring.

- POOL_NAME

Name of the pool.

- TOKEN_PATH

A file path to the created token (or - to read it from the standard input).
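TOKEN_PATH accepts -, and this section assumes that both clusters are reachable from one host. The token can therefore be piped from the create command straight into the import command, without a temporary file. This is a minimal sketch. `bootstrap_peers` is a hypothetical name, and the site names local and remote are assumptions that match the examples.

```shell
#!/bin/sh
# Hypothetical helper: create a bootstrap token on the local cluster and import
# it on the remote cluster, which reads the token from standard input ("-").
bootstrap_peers() {
  pool=$1
  rbd --cluster local mirror pool peer bootstrap create \
      --site-name local "$pool" |
  rbd --cluster remote mirror pool peer bootstrap import \
      --site-name remote "$pool" -
}
```

The exit status of the pipeline is that of the import command. If the create command fails, the import receives no token and fails as well.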

For example, on the remote cluster:

cephuser@remote > cat <<EOF > token
eyJmc2lkIjoiOWY1MjgyZGItYjg5OS00NTk2LTgwOTgtMzIwYzFmYzM5NmYzIiwiY2xpZW50X2lkIjoicmJkLW1pcnJvci1wZWVyIiwia2V5IjoiQVFBUnczOWQwdkhvQmhBQVlMM1I4RmR5dHNJQU50bkFTZ0lOTVE9PSIsIm1vbl9ob3N0IjoiW3YyOjE5Mi4xNjguMS4zOjY4MjAsdjE6MTkyLjE2OC4xLjM6NjgyMV0ifQ==
EOF
cephuser@adm > rbd --cluster remote mirror pool peer bootstrap import \
  --site-name remote image-pool token

20.4.1.4 Adding a cluster peer manually

As an alternative to bootstrapping peers as described in Section 20.4.1.3, “Bootstrapping peers”, you can specify peers manually. The remote rbd-mirror daemon will need access to the local cluster to perform mirroring. Create a new local Ceph user that the remote rbd-mirror daemon will use, for example rbd-mirror-peer:

cephuser@adm > ceph auth get-or-create client.rbd-mirror-peer \
  mon 'profile rbd' osd 'profile rbd'

Use the following syntax to add a mirroring peer Ceph cluster with the rbd command:

rbd mirror pool peer add POOL_NAME CLIENT_NAME@CLUSTER_NAME

For example:

cephuser@adm > rbd --cluster site-a mirror pool peer add image-pool client.rbd-mirror-peer@site-b
cephuser@adm > rbd --cluster site-b mirror pool peer add image-pool client.rbd-mirror-peer@site-a

By default, the rbd-mirror daemon needs access to the Ceph configuration file located at /etc/ceph/CLUSTER_NAME.conf, which provides the IP addresses of the peer cluster’s MONs. It also needs a keyring for a client named CLIENT_NAME located in the default or custom keyring search paths, for example /etc/ceph/CLUSTER_NAME.CLIENT_NAME.keyring.
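Before you start rbd-mirror, it can help to check that these default files exist on the host. This is a minimal sketch based on the paths above. `check_peer_files` is hypothetical. The CEPH_CONF_DIR override is also an assumption, added only so that the check can point at another directory. It is not a Ceph setting.

```shell
#!/bin/sh
# Hypothetical pre-flight check for rbd-mirror: are the peer's configuration
# file and the client keyring readable under the default /etc/ceph layout?
check_peer_files() {
  cluster=$1 client=$2 dir=${CEPH_CONF_DIR:-/etc/ceph}
  missing=0
  for f in "$dir/$cluster.conf" "$dir/$cluster.$client.keyring"; do
    if [ ! -r "$f" ]; then
      echo "missing or unreadable: $f" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `check_peer_files site-b client.rbd-mirror-peer` looks for /etc/ceph/site-b.conf and /etc/ceph/site-b.client.rbd-mirror-peer.keyring.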

Alternatively, the peer cluster’s MON and/or client key can be securely stored within the local Ceph config-key store. To specify the peer cluster connection attributes when adding a mirroring peer, use the --remote-mon-host and --remote-key-file options. For example:

cephuser@adm > rbd --cluster site-a mirror pool peer add image-pool \
  client.rbd-mirror-peer@site-b --remote-mon-host 192.168.1.1,192.168.1.2 \
  --remote-key-file /PATH/TO/KEY_FILE
cephuser@adm > rbd --cluster site-a mirror pool info image-pool --all
Mode: pool
Peers:
  UUID        NAME   CLIENT                  MON_HOST                 KEY
  587b08db... site-b client.rbd-mirror-peer  192.168.1.1,192.168.1.2  AQAeuZdb...

20.4.1.5 Remove cluster peer

To remove a mirroring peer cluster, specify the mirror pool peer remove subcommand, the pool name, and the peer UUID (available from the rbd mirror pool info command):

cephuser@adm > rbd --cluster local mirror pool peer remove POOL_NAME \
  55672766-c02b-4729-8567-f13a66893445
cephuser@adm > rbd --cluster remote mirror pool peer remove POOL_NAME \
  60c0e299-b38f-4234-91f6-eed0a367be08

20.4.1.6 Data pools

When creating images in the destination cluster, rbd-mirror selects a data pool as follows:

- If the destination cluster has a default data pool configured (with the rbd_default_data_pool configuration option), it will be used.

- Otherwise, if the source image uses a separate data pool, and a pool with the same name exists on the destination cluster, that pool will be used.

- If neither of the above is true, no data pool will be set.
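The three rules form a simple precedence order. The following minimal sketch models that order, which can help predict where mirrored data will land. `pick_data_pool` is hypothetical and is not rbd-mirror code. Its arguments are the destination's rbd_default_data_pool value (empty if unset), the source image's data pool (empty if none), and then the names of the pools that exist on the destination cluster.

```shell
#!/bin/sh
# Hypothetical model of the data pool choice for a new destination image.
# Prints the chosen data pool, or nothing when no data pool will be set.
pick_data_pool() {
  dest_default=$1 source_data_pool=$2
  shift 2                                   # remaining args: destination pools
  if [ -n "$dest_default" ]; then
    echo "$dest_default"                    # rule 1: destination default wins
    return
  fi
  if [ -n "$source_data_pool" ]; then
    for p in "$@"; do
      if [ "$p" = "$source_data_pool" ]; then
        echo "$p"                           # rule 2: same-named pool exists
        return
      fi
    done
  fi
  # rule 3: neither applies, so print nothing (no data pool)
}
```

For example, `pick_data_pool '' ec-src rbd ec-src` prints ec-src. `pick_data_pool '' ec-src rbd` prints nothing, because the destination has no pool of that name.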

20.4.2 RBD Image configuration

Unlike pool configuration, image configuration only needs to be performed against a single mirroring peer Ceph cluster.

Mirrored RBD images are designated as either primary or non-primary. This is a property of the image and not the pool. Images that are designated as non-primary cannot be modified.

Images are automatically promoted to primary when mirroring is first enabled on an image. This happens either implicitly, if the pool mirror mode is 'pool' and the image has the journaling image feature enabled, or explicitly by the rbd command (see Section 20.4.2.1, “Enabling image mirroring”).

20.4.2.1 Enabling image mirroring

If mirroring is configured in the image mode, then it is necessary to explicitly enable mirroring for each image within the pool. To enable mirroring for a specific image with rbd, specify the mirror image enable subcommand along with the pool and image name:

cephuser@adm > rbd --cluster local mirror image enable \
  POOL_NAME/IMAGE_NAME

The mirror image mode can either be journal or snapshot:

- journal (default)

When configured in journal mode, mirroring will use the RBD journaling image feature to replicate the image contents. If the RBD journaling image feature is not yet enabled on the image, it will be automatically enabled.

- snapshot

When configured in snapshot mode, mirroring will use RBD image mirror-snapshots to replicate the image contents. Once enabled, an initial mirror-snapshot will automatically be created. Additional RBD image mirror-snapshots can be created by the rbd command.

For example:

cephuser@adm > rbd --cluster local mirror image enable image-pool/image-1 snapshot
cephuser@adm > rbd --cluster local mirror image enable image-pool/image-2 journal

20.4.2.2 Enabling the image journaling feature #

RBD mirroring uses the RBD journaling feature to ensure that the replicated image always remains crash-consistent. When using the image mirroring mode, the journaling feature is automatically enabled when journal-based mirroring is enabled on the image. When using the pool mirroring mode, the RBD image journaling feature must be enabled before an image can be mirrored to a peer cluster. The feature can be enabled at image creation time by providing the --image-feature exclusive-lock,journaling option to the rbd command.

Alternatively, the journaling feature can be dynamically enabled on pre-existing RBD images. To enable journaling, specify the feature enable subcommand, the pool and image name, and the feature name:

cephuser@adm > rbd --cluster local feature enable POOL_NAME/IMAGE_NAME exclusive-lock
cephuser@adm > rbd --cluster local feature enable POOL_NAME/IMAGE_NAME journaling

The journaling feature is dependent on the exclusive-lock feature. If the exclusive-lock feature is not already enabled, you need to enable it before enabling the journaling feature.

You can enable journaling on all new images by default by adding the following line to your Ceph configuration file:

rbd default features = layering,exclusive-lock,object-map,deep-flatten,journaling

20.4.2.3 Creating image mirror-snapshots #

When using snapshot-based mirroring, mirror-snapshots need to be created whenever you want to mirror the changed contents of the RBD image. To create a mirror-snapshot manually with rbd, specify the mirror image snapshot command along with the pool and image name:

cephuser@adm > rbd mirror image snapshot POOL_NAME/IMAGE_NAME

For example:

cephuser@adm > rbd --cluster local mirror image snapshot image-pool/image-1

By default, only three mirror-snapshots are kept per image. When the limit is reached, the oldest mirror-snapshot is automatically pruned. The limit can be overridden with the rbd_mirroring_max_mirroring_snapshots configuration option if required. Additionally, mirror-snapshots are automatically deleted when the image is removed or when mirroring is disabled.

Mirror-snapshots can also be created automatically on a periodic basis if mirror-snapshot schedules are defined. Mirror-snapshots can be scheduled at the global, per-pool, or per-image level. Multiple mirror-snapshot schedules can be defined at any level, but only the most specific snapshot schedules that match an individual mirrored image will run.
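To illustrate the "most specific level wins" rule, the following Python sketch resolves which schedules apply to a single image. The in-memory layout (a dictionary keyed by None for the global level, a pool name, or a pool/image pair) and the function name effective_schedules are hypothetical; rbd does not expose schedules this way, and namespaces are ignored.

```python
from typing import Dict, List, Optional, Tuple, Union

# Hypothetical key layout for this sketch:
#   None              -> global schedules
#   "pool"            -> per-pool schedules
#   ("pool", "image") -> per-image schedules
# Namespaces are ignored.
ScheduleKey = Optional[Union[str, Tuple[str, str]]]


def effective_schedules(
    schedules: Dict[ScheduleKey, List[str]], pool: str, image: str
) -> List[str]:
    """Return the schedules that would run for pool/image.

    Only the most specific level that has at least one schedule is used:
    image level first, then pool level, then global level.
    """
    for key in ((pool, image), pool, None):
        entries = schedules.get(key)
        if entries:
            return list(entries)
    return []
```

With a global 1d schedule, a 24h schedule on image-pool, and 6h and 12h schedules on image-pool/image1, image1 gets only its two image-level schedules, while every other image in image-pool gets the 24h schedule.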

To create a mirror-snapshot schedule with rbd, specify the mirror snapshot schedule add command along with an optional pool or image name, an interval, and an optional start time.

The interval can be specified in days, hours, or minutes using the suffixes d, h, or m respectively. The optional start time can be specified using the ISO 8601 time format. For example:

cephuser@adm > rbd --cluster local mirror snapshot schedule add --pool image-pool 24h 14:00:00-05:00
cephuser@adm > rbd --cluster local mirror snapshot schedule add --pool image-pool --image image1 6h
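The interval and start-time arguments can be modeled as follows. This Python sketch parses an interval with a d, h, or m suffix and computes the next run time, assuming that runs fall on the start time plus a whole number of intervals and, when no start time is given, anchoring at the Unix epoch. Both the alignment rule and the function names are assumptions for illustration, not a description of how the rbd scheduler computes its times.

```python
import re
from datetime import datetime, timedelta, timezone
from typing import Optional

# Interval suffixes as described above: d = days, h = hours, m = minutes.
_UNITS = {"d": "days", "h": "hours", "m": "minutes"}
_EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def parse_interval(text: str) -> timedelta:
    """Parse an interval such as '24h', '1d' or '30m' into a timedelta."""
    match = re.fullmatch(r"(\d+)([dhm])", text)
    if match is None or int(match.group(1)) == 0:
        raise ValueError(f"invalid interval: {text!r}")
    return timedelta(**{_UNITS[match.group(2)]: int(match.group(1))})


def next_run(now: datetime, interval: str,
             start: Optional[datetime] = None) -> datetime:
    """Return the first run time strictly after `now`.

    Assumption: runs happen at start + k * interval for integer k. Without a
    start time, the Unix epoch is used as the anchor. All datetimes must be
    timezone-aware.
    """
    step = parse_interval(interval)
    anchor = start if start is not None else _EPOCH
    # Floor division of timedeltas gives the number of whole intervals elapsed.
    periods = (now - anchor) // step + 1
    return anchor + periods * step
```

For the first example command above, next_run(now, "24h", datetime.fromisoformat("2020-02-26T14:00:00-05:00")) returns the first 14:00:00-05:00 boundary after now.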

To remove a mirror-snapshot schedule with rbd, specify the mirror snapshot schedule remove command with options that match the corresponding add schedule command.

To list all snapshot schedules for a specific level (global, pool, or image) with rbd, specify the mirror snapshot schedule ls command along with an optional pool or image name. Additionally, the --recursive option can be specified to list all schedules at the specified level and below. For example:

cephuser@adm > rbd --cluster local mirror snapshot schedule ls --pool image-pool --recursive
POOL        NAMESPACE IMAGE  SCHEDULE
image-pool  -         -      every 1d starting at 14:00:00-05:00
image-pool            image1 every 6h

To find out when the next snapshots will be created for RBD images that use snapshot-based mirroring, specify the mirror snapshot schedule status command with rbd, along with an optional pool or image name. For example:

cephuser@adm > rbd --cluster local mirror snapshot schedule status
SCHEDULE TIME       IMAGE
2020-02-26 18:00:00 image-pool/image1

20.4.2.4 Disabling image mirroring #

To disable mirroring for a specific image, specify the mirror image disable subcommand along with the pool and image name:

cephuser@adm > rbd --cluster local mirror image disable POOL_NAME/IMAGE_NAME

20.4.2.5 Promoting and demoting images #

In a failover scenario where the primary designation needs to be moved to the image in the peer cluster, you need to stop access to the primary image, demote the current primary image, promote the new primary image, and resume access to the image on the alternate cluster.

Promotion can be forced using the --force option. Forced promotion is needed when the demotion cannot be propagated to the peer cluster (for example, in case of cluster failure or communication outage). This will result in a split-brain scenario between the two peers, and the image will no longer be synchronized until a resync subcommand is issued.

To demote a specific image to non-primary, specify the mirror image demote subcommand along with the pool and image name:

cephuser@adm > rbd --cluster local mirror image demote POOL_NAME/IMAGE_NAME

To demote all primary images within a pool to non-primary, specify the mirror pool demote subcommand along with the pool name:

cephuser@adm > rbd --cluster local mirror pool demote POOL_NAME

To promote a specific image to primary, specify the mirror image promote subcommand along with the pool and image name:

cephuser@adm > rbd --cluster remote mirror image promote POOL_NAME/IMAGE_NAME

To promote all non-primary images within a pool to primary, specify the mirror pool promote subcommand along with the pool name:

cephuser@adm > rbd --cluster remote mirror pool promote POOL_NAME

Since the primary or non-primary status is per-image, it is possible to have two clusters split the I/O load and stage failover or failback.
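A planned failover of a whole pool combines the demote and promote commands above. The following Python sketch only assembles those rbd command lines, using local and remote as the same placeholder cluster names as the examples; the function name failover_commands is hypothetical, and stopping and resuming client access to the images remains a manual step.

```python
from typing import List


def failover_commands(pool: str, old_primary: str = "local",
                      new_primary: str = "remote",
                      force: bool = False) -> List[List[str]]:
    """Build the rbd invocations that move the primary role of every image
    in `pool` from `old_primary` to `new_primary`.

    With force=True the demotion step is skipped (the old primary is assumed
    to be unreachable) and promotion uses --force. This causes a split-brain
    that must later be resolved with 'rbd mirror image resync'.
    """
    commands: List[List[str]] = []
    if not force:
        # Demote first so that the old primary stops being writable.
        commands.append(["rbd", "--cluster", old_primary,
                         "mirror", "pool", "demote", pool])
    promote = ["rbd", "--cluster", new_primary, "mirror", "pool", "promote"]
    if force:
        promote.append("--force")
    promote.append(pool)
    commands.append(promote)
    return commands
```

To execute the commands, pass each list in order to subprocess.run(cmd, check=True) on a host where rbd can reach both clusters.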

20.4.2.6 Forcing image resync #

If a split-brain event is detected by the rbd-mirror daemon, it will not attempt to mirror the affected image until the split-brain is resolved. To resume mirroring for an image, first demote the image determined to be out of date and then request a resync to the primary image. To request an image resync, specify the mirror image resync subcommand along with the pool and image name:

cephuser@adm > rbd mirror image resync POOL_NAME/IMAGE_NAME

20.4.3 Checking the mirror status #

The peer cluster replication status is stored for every primary mirrored image. This status can be retrieved using the mirror image status and mirror pool status subcommands.

To request the mirror image status, specify the mirror image status subcommand along with the pool and image name:

cephuser@adm > rbd mirror image status POOL_NAME/IMAGE_NAME

To request the mirror pool summary status, specify the mirror pool status subcommand along with the pool name:

cephuser@adm > rbd mirror pool status POOL_NAME

Adding the --verbose option to the mirror pool status subcommand additionally outputs status details for every mirroring image in the pool.

20.5 Cache settings #

The user space implementation of the Ceph block device (librbd) cannot take advantage of the Linux page cache. Therefore, it includes its own in-memory caching. RBD caching behaves similarly to hard disk caching. When the OS sends a barrier or a flush request, all 'dirty' data is written to the OSDs. This means that using write-back caching is just as safe as using a well-behaved physical hard disk with a VM that properly sends flushes. The cache uses a Least Recently Used (LRU) algorithm, and in write-back mode it can merge adjacent requests for better throughput.

Ceph supports write-back caching for RBD. To enable it, run

cephuser@adm > ceph config set client rbd_cache true

When caching is disabled, librbd does not perform any caching: writes and reads go directly to the storage cluster, and writes return only when the data is on disk on all replicas. With caching enabled, writes return immediately, unless there are more unflushed bytes than set in the rbd cache max dirty option. In such a case, the write triggers writeback and blocks until enough bytes are flushed.
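The blocking rule can be made concrete with a toy Python model that tracks only the number of dirty bytes. The class name and its return values are hypothetical; the model ignores rbd cache target dirty, rbd cache max dirty age, and the actual transfer to the OSDs, so it illustrates the rule rather than librbd's cache.

```python
class ToyWritebackCache:
    """Toy model of the write-back rule described above.

    Writes are acknowledged immediately while the dirty (unflushed) byte
    count stays within max_dirty. Once it is exceeded, the write triggers
    writeback and waits until the count is back down to max_dirty.
    max_dirty == 0 models write-through mode.
    """

    def __init__(self, max_dirty: int) -> None:
        self.max_dirty = max_dirty
        self.dirty = 0

    def write(self, nbytes: int) -> str:
        if self.max_dirty == 0:
            # Write-through: acknowledged only once stored on all replicas.
            return "write-through"
        self.dirty += nbytes
        if self.dirty > self.max_dirty:
            # Writeback flushes just enough bytes to get back down to max_dirty.
            self.flush(self.dirty - self.max_dirty)
            return "blocked"
        return "immediate"

    def flush(self, nbytes: int) -> None:
        """Simulate nbytes of dirty data being written to the OSDs."""
        self.dirty = max(0, self.dirty - nbytes)
```

With max_dirty set to 24 MB, a first 16 MB write returns immediately, while a second 16 MB write pushes the dirty count to 32 MB and blocks until 8 MB have been flushed.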

Ceph also supports write-through caching for RBD. You can set the size of the cache, and you can set targets and limits to switch from write-back caching to write-through caching. To enable write-through mode, run

cephuser@adm > ceph config set client rbd_cache_max_dirty 0

This means writes return only when the data is on disk on all replicas, but reads may come from the cache. The cache is in memory on the client, and each RBD image has its own cache. Because the cache is local to the client, there is no cache coherency if other clients access the same image. Running GFS or OCFS on top of RBD will not work with caching enabled.

The following parameters affect the caching behavior of RADOS Block Devices. To set them, use the client category (in ceph config set commands, write the option names with underscores, for example rbd_cache_size):

cephuser@adm > ceph config set client PARAMETER VALUE

- rbd cache
  Enable caching for RADOS Block Device (RBD). Default is 'true'.
- rbd cache size
  The RBD cache size in bytes. Default is 32 MB.
- rbd cache max dirty
  The 'dirty' limit in bytes at which the cache triggers write-back. rbd cache max dirty needs to be less than rbd cache size. If set to 0, write-through caching is used. Default is 24 MB.
- rbd cache target dirty
  The 'dirty target' before the cache begins writing data to the data storage. Does not block writes to the cache. Default is 16 MB.
- rbd cache max dirty age
  The number of seconds dirty data is in the cache before writeback starts. Default is 1.
- rbd cache writethrough until flush
  Start out in write-through mode, and switch to write-back after the first flush request is received. Enabling this is a conservative but safe setting in case virtual machines running on rbd are too old to send flushes (for example, the virtio driver in Linux before kernel 2.6.32). Default is 'true'.
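Because rbd cache max dirty must stay below rbd cache size, it can help to check planned values before applying them. The following Python sketch validates byte values and builds the matching ceph config set commands for the client category. The function name is hypothetical, and it checks only the constraint stated in the list above.

```python
from typing import List

MB = 1024 * 1024


def cache_config_commands(size: int = 32 * MB, max_dirty: int = 24 * MB,
                          target_dirty: int = 16 * MB) -> List[str]:
    """Validate RBD cache sizes (in bytes) and return 'ceph config set'
    command lines. max_dirty == 0 selects write-through caching."""
    if min(size, max_dirty, target_dirty) < 0 or size == 0:
        raise ValueError("sizes must be non-negative and the cache size non-zero")
    if max_dirty >= size:
        raise ValueError("rbd cache max dirty must be less than rbd cache size")
    settings = {
        "rbd_cache": "true",
        "rbd_cache_size": str(size),
        "rbd_cache_max_dirty": str(max_dirty),
        "rbd_cache_target_dirty": str(target_dirty),
    }
    return [f"ceph config set client {name} {value}"
            for name, value in settings.items()]
```

For example, print("\n".join(cache_config_commands(size=64 * MB, max_dirty=48 * MB))) prints four commands that can be run on the admin node.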

20.6 QoS settings #

Generally, Quality of Service (QoS) refers to methods of traffic prioritization and resource reservation. It is particularly important for the transportation of traffic with special requirements.

The following QoS settings are used only by the user space RBD implementation librbd, not by the kRBD implementation. Because iSCSI uses kRBD, it does not use the QoS settings. However, for iSCSI you can configure QoS on the kernel block device layer using standard kernel facilities.

- rbd qos iops limit
  The desired limit of I/O operations per second. Default is 0 (no limit).
- rbd qos bps limit
  The desired limit of I/O bytes per second. Default is 0 (no limit).
- rbd qos read iops limit
  The desired limit of read operations per second. Default is 0 (no limit).
- rbd qos write iops limit
  The desired limit of write operations per second. Default is 0 (no limit).
- rbd qos read bps limit
  The desired limit of read bytes per second. Default is 0 (no limit).
- rbd qos write bps limit
  The desired limit of write bytes per second. Default is 0 (no limit).
- rbd qos iops burst
  The desired burst limit of I/O operations. Default is 0 (no limit).
- rbd qos bps burst
  The desired burst limit of I/O bytes. Default is 0 (no limit).
- rbd qos read iops burst
  The desired burst limit of read operations. Default is 0 (no limit).
- rbd qos write iops burst
  The desired burst limit of write operations. Default is 0 (no limit).
- rbd qos read bps burst
  The desired burst limit of read bytes. Default is 0 (no limit).
- rbd qos write bps burst
  The desired burst limit of write bytes. Default is 0 (no limit).
- rbd qos schedule tick min
  The minimum schedule tick (in milliseconds) for QoS. Default is 50.
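The limit and burst options map naturally onto a token bucket: the limit is the refill rate per second and the burst is the bucket capacity. librbd implements its QoS throttling with a token-bucket throttle, but the following Python sketch is a generic, simplified bucket, not librbd's code. The class name is hypothetical, and treating a burst of 0 as "capacity equals one second of the limit" is an assumption.

```python
class TokenBucket:
    """Generic token bucket (not librbd's implementation).

    `limit` tokens are added per second (for example IOPS or bytes per
    second) and at most `capacity` tokens can accumulate. A limit of 0 means
    'no limit', matching the defaults listed above.
    """

    def __init__(self, limit: float, burst: float = 0.0) -> None:
        self.limit = limit
        # Assumption: without an explicit burst, the bucket holds one second's worth of tokens.
        self.capacity = burst if burst > 0 else limit
        self.tokens = self.capacity
        self.last = 0.0

    def try_acquire(self, now: float, amount: float = 1.0) -> bool:
        """Consume `amount` tokens at time `now` (in seconds) if available."""
        if self.limit == 0:
            return True
        # Refill according to the time elapsed since the previous call.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.limit)
        self.last = now
        if self.tokens >= amount:
            self.tokens -= amount
            return True
        return False
```

With a limit of 100 and a burst of 200, a client that has been idle can issue 200 operations at once, after which it is held to 100 operations per second.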

20.7 Read-ahead settings #

RADOS Block Device supports read-ahead/prefetching to optimize small, sequential reads. This should normally be handled by the guest OS in the case of a virtual machine, but boot loaders may not issue efficient reads. Read-ahead is automatically disabled if caching is disabled.

The following read-ahead settings are used only by the user space RBD implementation librbd, not by the kRBD implementation. Because iSCSI uses kRBD, it does not use the read-ahead settings. However, for iSCSI you can configure read-ahead on the kernel block device layer using standard kernel facilities.

- rbd readahead trigger requests
  Number of sequential read requests necessary to trigger read-ahead. Default is 10.
- rbd readahead max bytes
  Maximum size of a read-ahead request. If set to 0, read-ahead is disabled. Default is 512 kB.
- rbd readahead disable after bytes
  After this many bytes have been read from an RBD image, read-ahead is disabled for that image until it is closed. This allows the guest OS to take over read-ahead when it is booted. If set to 0, read-ahead stays enabled. Default is 50 MB.
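How the three options interact can be sketched as a small state machine. In this Python toy model, which uses hypothetical names, a read counts as sequential when it starts where the previous one ended, a prefetch of rbd readahead max bytes is requested once the trigger count is reached, and read-ahead switches off permanently after the disable threshold. How librbd actually detects sequential access and sizes prefetches is not modeled, and reading kB and MB as binary units is an assumption.

```python
from typing import Optional


class ToyReadahead:
    """Toy model of the three read-ahead options (not librbd's algorithm)."""

    def __init__(self, trigger_requests: int = 10,
                 max_bytes: int = 512 * 1024,
                 disable_after_bytes: int = 50 * 1024 * 1024) -> None:
        self.trigger_requests = trigger_requests
        self.max_bytes = max_bytes
        self.disable_after_bytes = disable_after_bytes
        self.sequential = 0
        self.next_offset: Optional[int] = None
        self.total_read = 0

    def read(self, offset: int, length: int) -> int:
        """Record a read and return how many bytes to prefetch (0 = none)."""
        self.total_read += length
        # A read is sequential if it starts where the previous one ended.
        self.sequential = self.sequential + 1 if offset == self.next_offset else 1
        self.next_offset = offset + length
        if self.max_bytes == 0:
            return 0  # read-ahead disabled entirely
        if self.disable_after_bytes and self.total_read >= self.disable_after_bytes:
            return 0  # threshold reached: stays off until the image is closed
        if self.sequential >= self.trigger_requests:
            return self.max_bytes
        return 0
```

With the defaults, ten back-to-back 4 KB reads starting at offset 0 trigger a 512 KB prefetch on the tenth read, and a random read afterward resets the sequential counter.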

20.8 Advanced features #

RADOS Block Device supports advanced features that enhance the functionality of RBD images. You can specify the features either on the command line when creating an RBD image, or in the Ceph configuration file by using the rbd_default_features option.

You can specify the values of the rbd_default_features option in two ways:

As a sum of the features' internal values. Each feature has its own internal value, for example 'layering' has 1 and 'fast-diff' has 16. Therefore, to activate these two features by default, include the following:

rbd_default_features = 17

As a comma-separated list of features. The previous example will look as follows:

rbd_default_features = layering,fast-diff
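Because each internal value is a distinct power of two, the sum is equivalent to a bitwise OR, and a numeric value can be decoded back into feature names. The following Python sketch converts between the two notations. The value table covers only the features listed in this section, the function names are hypothetical, and unknown names raise KeyError.

```python
from typing import Iterable, List

# Internal feature values as listed in this section.
FEATURES = {
    "layering": 1,
    "striping": 2,
    "exclusive-lock": 4,
    "object-map": 8,
    "fast-diff": 16,
    "deep-flatten": 32,
    "journaling": 64,
}


def features_to_value(names: Iterable[str]) -> int:
    """Turn feature names into the numeric rbd_default_features value."""
    value = 0
    for name in names:
        value |= FEATURES[name.strip()]
    return value


def value_to_features(value: int) -> List[str]:
    """Turn a numeric value back into feature names (known bits only)."""
    return [name for name, bit in FEATURES.items() if value & bit]
```

For example, features_to_value("layering,fast-diff".split(",")) returns 17, and value_to_features(17) returns ['layering', 'fast-diff'].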

RBD images with the following features will not be supported by iSCSI: deep-flatten, object-map, journaling, fast-diff, striping

A list of advanced RBD features follows:

- layering
  Layering enables you to use cloning. Internal value is 1, default is 'yes'.
- striping
  Striping spreads data across multiple objects and helps with parallelism for sequential read/write workloads. It prevents single node bottlenecks for large or busy RADOS Block Devices. Internal value is 2, default is 'yes'.
- exclusive-lock
  When enabled, it requires a client to get a lock on an object before making a write. Enable the exclusive lock only when a single client accesses an image at a time. Internal value is 4, default is 'yes'.
- object-map
  Object map support depends on exclusive lock support. Block devices are thin provisioned, meaning that they only store data that actually exists. Object map support helps track which objects actually exist (have data stored on a drive). Enabling object map support speeds up I/O operations for cloning, importing and exporting a sparsely populated image, and deleting. Internal value is 8, default is 'yes'.
- fast-diff
  Fast-diff support depends on object map support and exclusive lock support. It adds another property to the object map, which makes it much faster to generate diffs between snapshots of an image and to calculate the actual data usage of a snapshot. Internal value is 16, default is 'yes'.
- deep-flatten
  Deep-flatten makes rbd flatten (see Section 20.3.3.6, “Flattening a cloned image”) work on all the snapshots of an image, in addition to the image itself. Without it, snapshots of an image will still rely on the parent, therefore you will not be able to delete the parent image until the snapshots are deleted. Deep-flatten makes a clone independent of its parent, even if the clone has snapshots. Internal value is 32, default is 'yes'.
- journaling
  Journaling support depends on exclusive lock support. Journaling records all modifications to an image in the order they occur. RBD mirroring (see Section 20.4, “RBD image mirrors”) uses the journal to replicate a crash-consistent image to a remote cluster. Internal value is 64, default is 'no'.
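The dependencies stated in the list above (object-map, fast-diff, and journaling require exclusive-lock, and fast-diff also requires object-map) can be checked before running rbd create or rbd feature enable. The following Python sketch does that. The function name is hypothetical, and the rule table contains only the dependencies named in this section.

```python
from typing import Dict, List, Set

# Requirements taken from the feature descriptions in this section.
REQUIRES: Dict[str, Set[str]] = {
    "object-map": {"exclusive-lock"},
    "fast-diff": {"object-map", "exclusive-lock"},
    "journaling": {"exclusive-lock"},
}


def missing_dependencies(features: Set[str]) -> List[str]:
    """Return 'feature -> requirement' strings for every unmet dependency."""
    problems = []
    for feature in sorted(features):
        for needed in sorted(REQUIRES.get(feature, set())):
            if needed not in features:
                problems.append(f"{feature} -> {needed}")
    return problems
```

An empty result means the combination is consistent. When enabling features on an existing image, enable the required features first, as in Section 20.4.2.2, “Enabling the image journaling feature”.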

20.9 Mapping RBD using old kernel clients #

Old clients (for example, SLE11 SP4) may not be able to map RBD images because a cluster deployed with SUSE Enterprise Storage 7 forces some features (both RBD image level features and RADOS level features) that these old clients do not support. When this happens, the OSD logs will show messages similar to the following:

2019-05-17 16:11:33.739133 7fcb83a2e700 0 -- 192.168.122.221:0/1006830 >> \
192.168.122.152:6789/0 pipe(0x65d4e0 sd=3 :57323 s=1 pgs=0 cs=0 l=1 c=0x65d770).connect \
protocol feature mismatch, my 2fffffffffff < peer 4010ff8ffacffff missing 401000000000000

If you intend to switch the CRUSH Map bucket types between 'straw' and 'straw2', do it in a planned manner. Expect a significant impact on the cluster load because changing bucket type will cause massive cluster rebalancing.

Disable any RBD image features that are not supported. For example:

cephuser@adm > rbd feature disable pool1/image1 object-map
cephuser@adm > rbd feature disable pool1/image1 exclusive-lock

Change the CRUSH Map bucket types from 'straw2' to 'straw':

Save the CRUSH Map:

cephuser@adm > ceph osd getcrushmap -o crushmap.original

Decompile the CRUSH Map:

cephuser@adm > crushtool -d crushmap.original -o crushmap.txt

Edit the CRUSH Map and replace 'straw2' with 'straw'.

Recompile the CRUSH Map:

cephuser@adm > crushtool -c crushmap.txt -o crushmap.new

Set the new CRUSH Map:

cephuser@adm > ceph osd setcrushmap -i crushmap.new
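The manual edit step can also be scripted. The following Python sketch assumes that the decompiled map states each bucket's algorithm on a line of the form alg straw2, as crushtool -d output does. It rewrites only those lines and leaves comments and all other text untouched; the function name is hypothetical.

```python
import re

# Matches bucket algorithm lines such as "\talg straw2" in crushtool -d output.
_ALG_STRAW2 = re.compile(r"^([ \t]*alg[ \t]+)straw2\b", re.MULTILINE)


def straw2_to_straw(crushmap_text: str) -> str:
    """Return the decompiled CRUSH Map with every 'alg straw2' set to 'alg straw'."""
    return _ALG_STRAW2.sub(r"\1straw", crushmap_text)
```

Read crushmap.txt, pass its content to straw2_to_straw, write the result back to crushmap.txt, and then continue with the crushtool -c step.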

20.10 Enabling block devices and Kubernetes #

You can use Ceph RBD with Kubernetes v1.13 and higher through the ceph-csi driver. This driver dynamically provisions RBD images to back Kubernetes volumes, and maps these RBD images as block devices (optionally mounting a file system contained within the image) on worker nodes running pods that reference an RBD-backed volume.

To use Ceph block devices with Kubernetes, you must install and configure ceph-csi within your Kubernetes environment.

ceph-csi uses the RBD kernel modules by default, which may not support all Ceph CRUSH tunables or RBD image features.

By default, Ceph block devices use the rbd pool. Create a pool for Kubernetes volume storage. Ensure your Ceph cluster is running, then create the pool:

cephuser@adm > ceph osd pool create kubernetes

Use the RBD tool to initialize the pool:

cephuser@adm > rbd pool init kubernetes

Create a new user for Kubernetes and ceph-csi. Execute the following and record the generated key:

cephuser@adm > ceph auth get-or-create client.kubernetes mon 'profile rbd' osd 'profile rbd pool=kubernetes' mgr 'profile rbd pool=kubernetes'
[client.kubernetes]
    key = AQD9o0Fd6hQRChAAt7fMaSZXduT3NWEqylNpmg==

ceph-csi requires a ConfigMap object stored in Kubernetes to define the Ceph monitor addresses for the Ceph cluster. Collect both the Ceph cluster unique fsid and the monitor addresses:

cephuser@adm > ceph mon dump
<...>
fsid b9127830-b0cc-4e34-aa47-9d1a2e9949a8
<...>
0: [v2:192.168.1.1:3300/0,v1:192.168.1.1:6789/0] mon.a
1: [v2:192.168.1.2:3300/0,v1:192.168.1.2:6789/0] mon.b
2: [v2:192.168.1.3:3300/0,v1:192.168.1.3:6789/0] mon.c

Generate a csi-config-map.yaml file similar to the example below, substituting the FSID for clusterID, and the monitor addresses for monitors:

kubectl@adm > cat <<EOF > csi-config-map.yaml
---
apiVersion: v1
kind: ConfigMap
data:
  config.json: |-
    [
      {
        "clusterID": "b9127830-b0cc-4e34-aa47-9d1a2e9949a8",
        "monitors": [
          "192.168.1.1:6789",
          "192.168.1.2:6789",
          "192.168.1.3:6789"
        ]
      }
    ]
metadata:
  name: ceph-csi-config
EOF

When generated, store the new ConfigMap object in Kubernetes:

kubectl@adm > kubectl apply -f csi-config-map.yaml

ceph-csi requires the cephx credentials for communicating with the Ceph cluster. Generate a csi-rbd-secret.yaml file similar to the example below, using the newly created Kubernetes user ID and cephx key:

kubectl@adm > cat <<EOF > csi-rbd-secret.yaml
---
apiVersion: v1
kind: Secret
metadata:
  name: csi-rbd-secret
  namespace: default
stringData:
  userID: kubernetes
  userKey: AQD9o0Fd6hQRChAAt7fMaSZXduT3NWEqylNpmg==
EOF

When generated, store the new secret object in Kubernetes:

kubectl@adm > kubectl apply -f csi-rbd-secret.yaml

Create the required ServiceAccount and RBAC ClusterRole/ClusterRoleBinding Kubernetes objects. These objects do not necessarily need to be customized for your Kubernetes environment, and therefore can be used directly from the ceph-csi deployment YAML files:

kubectl@adm > kubectl apply -f https://raw.githubusercontent.com/ceph/ceph-csi/master/deploy/rbd/kubernetes/csi-provisioner-rbac.yaml
kubectl@adm > kubectl apply -f https://raw.githubusercontent.com/ceph/ceph-csi/master/deploy/rbd/kubernetes/csi-nodeplugin-rbac.yaml

Create the ceph-csi provisioner and node plugins:

kubectl@adm > wget https://raw.githubusercontent.com/ceph/ceph-csi/master/deploy/rbd/kubernetes/csi-rbdplugin-provisioner.yaml
kubectl@adm > kubectl apply -f csi-rbdplugin-provisioner.yaml
kubectl@adm > wget https://raw.githubusercontent.com/ceph/ceph-csi/master/deploy/rbd/kubernetes/csi-rbdplugin.yaml
kubectl@adm > kubectl apply -f csi-rbdplugin.yaml

Important: By default, the provisioner and node plugin YAML files will pull the development release of the ceph-csi container. The YAML files should be updated to use a release version.
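Instead of copying the FSID and monitor addresses by hand, you can derive the config.json content of the ConfigMap from the ceph mon dump output. The following Python sketch assumes the plain-text output format shown in this procedure (an fsid line and one address vector per monitor, IPv4 only) and, like the example ConfigMap, keeps only the v1 (port 6789) addresses. The function name is hypothetical.

```python
import json
import re
from typing import Dict, List


def csi_config_from_mon_dump(mon_dump: str) -> str:
    """Build the ceph-csi config.json array from 'ceph mon dump' text output."""
    fsid_match = re.search(r"^fsid\s+([0-9a-f-]+)\s*$", mon_dump, re.MULTILINE)
    if fsid_match is None:
        raise ValueError("no fsid line found")
    # Monitor lines look like: 0: [v2:IP:3300/0,v1:IP:6789/0] mon.a
    monitors: List[str] = re.findall(r"v1:([^,\]/]+)/\d+", mon_dump)
    if not monitors:
        raise ValueError("no v1 monitor addresses found")
    config: List[Dict[str, object]] = [
        {"clusterID": fsid_match.group(1), "monitors": monitors}
    ]
    return json.dumps(config, indent=2)
```

Paste the returned JSON below the config.json: |- key in csi-config-map.yaml, indented to match the example.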

20.10.1 Using Ceph block devices in Kubernetes #

The Kubernetes StorageClass defines a class of storage. Multiple StorageClass objects can be created to map to different quality-of-service levels and features. For example, NVMe versus HDD-based pools.

To create a ceph-csi StorageClass that maps to the Kubernetes pool created above, the following YAML file can be used, after ensuring that the clusterID property matches your Ceph cluster's FSID:

kubectl@adm > cat <<EOF > csi-rbd-sc.yaml
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-rbd-sc
provisioner: rbd.csi.ceph.com
parameters:
  clusterID: b9127830-b0cc-4e34-aa47-9d1a2e9949a8
  pool: kubernetes
  csi.storage.k8s.io/provisioner-secret-name: csi-rbd-secret
  csi.storage.k8s.io/provisioner-secret-namespace: default
  csi.storage.k8s.io/node-stage-secret-name: csi-rbd-secret
  csi.storage.k8s.io/node-stage-secret-namespace: default
reclaimPolicy: Delete
mountOptions:
  - discard
EOF
kubectl@adm > kubectl apply -f csi-rbd-sc.yaml

A PersistentVolumeClaim is a request for abstract storage resources by a user. The PersistentVolumeClaim would then be associated with a pod resource to provision a PersistentVolume, which would be backed by a Ceph block image. An optional volumeMode can be included to select between a mounted file system (default) or a raw block-device-based volume.

Using ceph-csi, specifying Filesystem for volumeMode can support both ReadWriteOnce and ReadOnlyMany accessMode claims, and specifying Block for volumeMode can support ReadWriteOnce, ReadWriteMany, and ReadOnlyMany accessMode claims.
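The supported combinations can be encoded as a lookup table, for example to check claims before applying them. The following Python sketch uses only the combinations listed above for ceph-csi RBD volumes. The names are hypothetical, and an empty volumeMode is treated as Filesystem, the default.

```python
from typing import Dict, FrozenSet

# volumeMode -> accessModes that ceph-csi RBD volumes support (from the text above).
SUPPORTED_ACCESS_MODES: Dict[str, FrozenSet[str]] = {
    "Filesystem": frozenset({"ReadWriteOnce", "ReadOnlyMany"}),
    "Block": frozenset({"ReadWriteOnce", "ReadWriteMany", "ReadOnlyMany"}),
}


def is_supported(volume_mode: str, access_mode: str) -> bool:
    """Return True if ceph-csi RBD supports this volumeMode/accessMode pair.
    An empty volume_mode falls back to the Filesystem default."""
    mode = volume_mode or "Filesystem"
    return access_mode in SUPPORTED_ACCESS_MODES.get(mode, frozenset())
```

For example, a claim with volumeMode: Filesystem and accessModes: ReadWriteMany is rejected, because only raw block volumes support ReadWriteMany here.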

For example, to create a block-based PersistentVolumeClaim that uses the ceph-csi-based StorageClass created above, the following YAML file can be used to request raw block storage from the csi-rbd-sc StorageClass:

kubectl@adm > cat <<EOF > raw-block-pvc.yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: raw-block-pvc
spec:
  accessModes:
    - ReadWriteOnce
  volumeMode: Block
  resources:
    requests:
      storage: 1Gi
  storageClassName: csi-rbd-sc
EOF
kubectl@adm > kubectl apply -f raw-block-pvc.yaml

The following demonstrates an example of binding the above PersistentVolumeClaim to a pod resource as a raw block device:

kubectl@adm > cat <<EOF > raw-block-pod.yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: pod-with-raw-block-volume
spec:
  containers:
    - name: fc-container
      image: fedora:26
      command: ["/bin/sh", "-c"]
      args: ["tail -f /dev/null"]
      volumeDevices:
        - name: data
          devicePath: /dev/xvda
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: raw-block-pvc
EOF
kubectl@adm > kubectl apply -f raw-block-pod.yaml

To create a file-system-based PersistentVolumeClaim that uses the ceph-csi-based StorageClass created above, the following YAML file can be used to request a mounted file system (backed by an RBD image) from the csi-rbd-sc StorageClass:

kubectl@adm > cat <<EOF > pvc.yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rbd-pvc
spec:
  accessModes:
    - ReadWriteOnce
  volumeMode: Filesystem
  resources:
    requests:
      storage: 1Gi
  storageClassName: csi-rbd-sc
EOF
kubectl@adm > kubectl apply -f pvc.yaml

The following demonstrates an example of binding the above PersistentVolumeClaim to a pod resource as a mounted file system:

kubectl@adm > cat <<EOF > pod.yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: csi-rbd-demo-pod
spec:
  containers:
    - name: web-server
      image: nginx
      volumeMounts:
        - name: mypvc
          mountPath: /var/lib/www/html
  volumes:
    - name: mypvc
      persistentVolumeClaim:
        claimName: rbd-pvc
        readOnly: false
EOF
kubectl@adm > kubectl apply -f pod.yaml