19 Erasure coded pools

Ceph provides an alternative to the normal replication of data in pools, called erasure coded pools (or simply erasure pools). Erasure pools do not provide all the functionality of replicated pools (for example, they cannot store metadata for RBD pools), but they require less raw storage. A default erasure pool capable of storing 1 TB of data requires 1.5 TB of raw storage and tolerates a single disk failure. This compares favorably to a replicated pool with two replicas, which needs 2 TB of raw storage for the same purpose.

For background information on Erasure Code, see https://en.wikipedia.org/wiki/Erasure_code.

For a list of pool values related to EC pools, refer to Erasure coded pool values.

19.1 Prerequisites for erasure coded pools

To make use of erasure coding, you need to:

- Define an erasure rule in the CRUSH Map.
- Define an erasure code profile that specifies the coding algorithm to be used.
- Create a pool using the previously mentioned rule and profile.

Keep in mind that the profile and its settings cannot be changed after the pool has been created and contains data.

Ensure that the CRUSH rules for erasure pools use indep for step. For details, see Section 17.3.2, “firstn and indep”.

19.2 Creating a sample erasure coded pool

The simplest erasure coded pool is equivalent to RAID5 and requires at least three hosts. This procedure describes how to create a pool for testing purposes.

The command

ceph osd pool createis used to create a pool with type erasure. The12stands for the number of placement groups. With default parameters, the pool is able to handle the failure of one OSD.cephuser@adm >ceph osd pool create ecpool 12 12 erasure pool 'ecpool' createdThe string

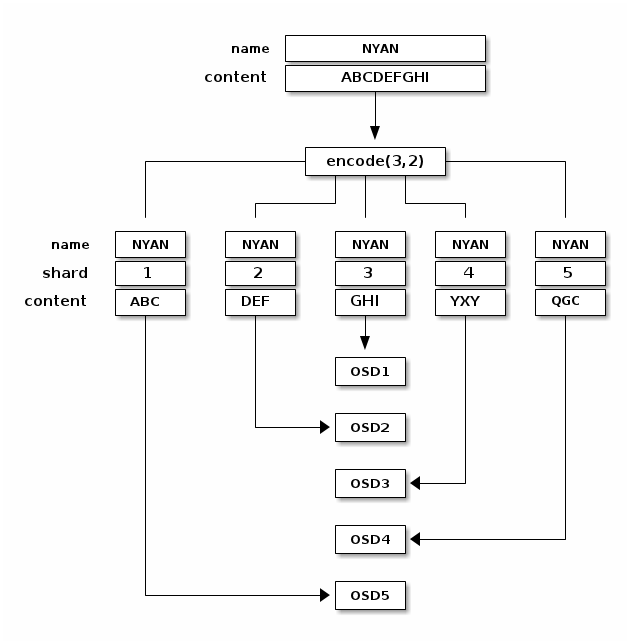

ABCDEFGHIis written into an object calledNYAN.cephuser@adm >echo ABCDEFGHI | rados --pool ecpool put NYAN -For testing purposes OSDs can now be disabled, for example by disconnecting them from the network.

To test whether the pool can handle the failure of devices, the content of the file can be accessed with the

radoscommand.cephuser@adm >rados --pool ecpool get NYAN - ABCDEFGHI

19.3 Erasure code profiles

When the ceph osd pool create command is invoked to create an erasure pool, the default profile is used unless another profile is specified. Profiles define the redundancy of data. This is done by setting two parameters, arbitrarily named k and m: k defines into how many data chunks a piece of data is split, and m defines how many coding chunks are created. The chunks are then stored on different OSDs.

Definitions required for erasure pool profiles:

- chunk

when the encoding function is called, it returns chunks of the same size: data chunks which can be concatenated to reconstruct the original object and coding chunks which can be used to rebuild a lost chunk.

- k

the number of data chunks, that is the number of chunks into which the original object is divided. For example, if k = 2, a 10 kB object will be divided into k chunks of 5 kB each. The default min_size on erasure coded pools is k + 1. However, we recommend setting min_size to k + 2 or more to prevent loss of writes and data.

- m

the number of coding chunks, that is the number of additional chunks computed by the encoding functions. If there are 2 coding chunks, it means 2 OSDs can be out without losing data.

- crush-failure-domain

defines the bucket type across which the chunks are distributed. A bucket type needs to be set as the value. For all bucket types, see Section 17.2, “Buckets”. If the failure domain is rack, the chunks will be stored on different racks to increase resilience in case of rack failures. Keep in mind that this requires at least k+m racks.
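The chunk, k and m definitions above can be illustrated with a toy encoder. This is a minimal Python sketch under a simplifying assumption: it computes a single XOR coding chunk, so it only models m=1 (comparable to RAID 5 parity). Ceph's default jerasure plugin uses Reed-Solomon coding (technique=reed_sol_van) and supports larger values of m. The function names are hypothetical and not part of any Ceph API.

```python
from __future__ import annotations

from functools import reduce
from typing import List, Optional


def split_into_chunks(data: bytes, k: int) -> List[bytes]:
    """Split data into k equally sized data chunks, zero-padding the last one."""
    chunk_size = -(-len(data) // k)  # ceiling division
    padded = data.ljust(chunk_size * k, b"\0")
    return [padded[i * chunk_size:(i + 1) * chunk_size] for i in range(k)]


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equally sized chunks."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> List[bytes]:
    """Return k data chunks followed by one XOR coding chunk (toy m=1)."""
    data_chunks = split_into_chunks(data, k)
    return data_chunks + [reduce(xor_bytes, data_chunks)]


def rebuild(chunks: List[Optional[bytes]]) -> List[bytes]:
    """Recreate at most one lost chunk (marked None) from the survivors."""
    missing = [i for i, chunk in enumerate(chunks) if chunk is None]
    if len(missing) > 1:
        raise ValueError("with m=1 only one chunk may be lost")
    restored = list(chunks)
    if missing:
        survivors = [chunk for chunk in chunks if chunk is not None]
        restored[missing[0]] = reduce(xor_bytes, survivors)
    return restored


def decode(chunks: List[bytes], k: int, length: int) -> bytes:
    """Concatenate the k data chunks and drop the padding."""
    return b"".join(chunks[:k])[:length]


# Usage: "ABCDEFGHI\n" is what `echo ABCDEFGHI` writes (10 bytes).
obj = b"ABCDEFGHI\n"
chunks = encode(obj, k=2)  # two 5-byte data chunks plus one coding chunk
chunks[0] = None           # simulate losing the OSD that stored chunk 0
assert decode(rebuild(chunks), k=2, length=len(obj)) == obj
```

Losing any one of the three chunks is recoverable, which matches the statement that m coding chunks allow m OSDs to be out without losing data.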

With the default erasure code profile used in Section 19.2, “Creating a sample erasure coded pool”, you will not lose cluster data if a single OSD or host fails. To provide this redundancy for 1 TB of data, the pool needs another 0.5 TB of raw storage for the coding chunks, so 1.5 TB of raw storage is required in total (because k=2, m=1). This is equivalent to a common RAID 5 configuration. For comparison, a replicated pool with two replicas needs 2 TB of raw storage to store 1 TB of data.
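The same arithmetic applies to any profile: every k data chunks are stored together with m coding chunks, so the raw storage is the data size multiplied by (k+m)/k. A minimal Python sketch of this calculation, assuming it ignores per-object padding, metadata, and the spare capacity a cluster needs for recovery. The function names are hypothetical.

```python
from fractions import Fraction
from typing import Union

Amount = Union[int, Fraction]  # data size in any unit, for example TB


def ec_raw_storage(data: Amount, k: int, m: int) -> Fraction:
    """Raw capacity used by an erasure coded pool: data * (k + m) / k."""
    return data * Fraction(k + m, k)


def replicated_raw_storage(data: Amount, replicas: int) -> Fraction:
    """Raw capacity used by a replicated pool keeping `replicas` copies."""
    return Fraction(data) * replicas


# 1 TB of data with the default profile (k=2, m=1) versus two replicas:
print(ec_raw_storage(1, k=2, m=1))            # prints 3/2 (1.5 TB)
print(replicated_raw_storage(1, replicas=2))  # prints 2 (2 TB)
```

Exact fractions are used so that profiles such as k=3, m=2 (5/3 of the data size) do not suffer from floating-point rounding.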

The settings of the default profile can be displayed with:

cephuser@adm > ceph osd erasure-code-profile get default
directory=.libs
k=2
m=1
plugin=jerasure
crush-failure-domain=host
technique=reed_sol_van

Choosing the right profile is important because it cannot be modified after the pool is created. A new pool with a different profile needs to be created and all objects from the previous pool moved to the new one (see Section 18.6, “Pool migration”).

The most important parameters of the profile are k, m and crush-failure-domain because they define the storage overhead and the data durability. For example, if the desired architecture must sustain the loss of two racks with a storage overhead of about 66% (m/k = 2/3), the following profile can be defined. Note that this is only valid with a CRUSH Map that has buckets of type 'rack':

cephuser@adm > ceph osd erasure-code-profile set myprofile \
k=3 \
m=2 \
crush-failure-domain=rack

The example in Section 19.2, “Creating a sample erasure coded pool” can be repeated with this new profile:

cephuser@adm > ceph osd pool create ecpool 12 12 erasure myprofile
cephuser@adm > echo ABCDEFGHI | rados --pool ecpool put NYAN -
cephuser@adm > rados --pool ecpool get NYAN -
ABCDEFGHI

The NYAN object will be divided into three data chunks (k=3), and two additional coding chunks will be created (m=2). The value of m defines how many OSDs (or, with this profile, racks) can be lost simultaneously without losing any data. The crush-failure-domain=rack setting creates a CRUSH rule that ensures no two chunks are stored in the same rack.
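Before settling on a profile, it can help to check it against requirements like those in the example above: how many bucket losses to survive, the acceptable overhead, and how many failure-domain buckets exist. The following Python sketch does that arithmetic only. It does not query the cluster, and the function name, field names and requirement values are hypothetical.

```python
from fractions import Fraction
from typing import NamedTuple


class ProfileCheck(NamedTuple):
    overhead: Fraction       # extra raw storage per unit of data (m/k)
    losses_tolerated: int    # failure-domain buckets that can fail at once (m)
    buckets_required: int    # distinct buckets CRUSH needs for the chunks (k+m)
    ok: bool                 # True if all requirements are met


def check_profile(k: int, m: int, available_buckets: int,
                  must_survive: int, max_overhead: Fraction) -> ProfileCheck:
    """Compare a k/m profile against survivability, overhead and bucket count."""
    overhead = Fraction(m, k)
    ok = (m >= must_survive
          and overhead <= max_overhead
          and available_buckets >= k + m)
    return ProfileCheck(overhead, m, k + m, ok)


# myprofile (k=3, m=2) on a cluster with five racks, required to survive
# the loss of two racks with at most 2/3 (about 66%) storage overhead:
print(check_profile(3, 2, available_buckets=5, must_survive=2,
                    max_overhead=Fraction(2, 3)))
```

With only four racks the same profile fails the check, because CRUSH cannot place k+m=5 chunks in distinct racks.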

19.3.1 Creating a new erasure code profile

The following command creates a new erasure code profile:

# ceph osd erasure-code-profile set NAME \
directory=DIRECTORY \
plugin=PLUGIN \
stripe_unit=STRIPE_UNIT \
KEY=VALUE ... \
--force

- DIRECTORY

Optional. Set the directory name from which the erasure code plugin is loaded. Default is /usr/lib/ceph/erasure-code.

- PLUGIN

Optional. The erasure code plugin used to compute coding chunks and recover missing chunks. Available plugins are 'jerasure', 'isa', 'lrc', and 'shec'. Default is 'jerasure'.

- STRIPE_UNIT

Optional. The amount of data in a data chunk, per stripe. For example, a profile with 2 data chunks and stripe_unit=4K would put the range 0-4K in chunk 0, 4K-8K in chunk 1, then 8K-12K in chunk 0 again. This should be a multiple of 4K for best performance. The default value is taken from the monitor configuration option osd_pool_erasure_code_stripe_unit when a pool is created. The 'stripe_width' of a pool using this profile will be the number of data chunks multiplied by this 'stripe_unit'.

- KEY=VALUE

Key/value pairs of options specific to the selected erasure code plugin.

- --force

Optional. Override an existing profile by the same name, and allow setting a non-4K-aligned stripe_unit.
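The round-robin placement described for STRIPE_UNIT can be expressed as a small calculation. A Python sketch, assuming the simple layout of the example: one stripe consists of stripe_unit bytes in each of the k data chunks, and padding of a partial last stripe is ignored. The function names are hypothetical.

```python
from typing import Tuple


def stripe_width(k: int, stripe_unit: int) -> int:
    """Bytes of object data covered by one full stripe (k * stripe_unit)."""
    return k * stripe_unit


def locate(offset: int, k: int, stripe_unit: int) -> Tuple[int, int]:
    """Return (data chunk index, offset inside that chunk) for an object offset."""
    stripe, within_stripe = divmod(offset, stripe_width(k, stripe_unit))
    chunk, within_unit = divmod(within_stripe, stripe_unit)
    return chunk, stripe * stripe_unit + within_unit


K, UNIT = 2, 4096  # 2 data chunks and stripe_unit=4K, as in the example
for offset in (0, 4096, 8192):
    print(offset, locate(offset, K, UNIT))
# prints: 0 (0, 0) / 4096 (1, 0) / 8192 (0, 4096)
```

The offset 8192 lands in chunk 0 again, at the start of that chunk's second stripe unit, which is the "8K-12K in chunk 0 again" case from the description.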

19.3.2 Removing an erasure code profile

The following command removes an erasure code profile as identified by its NAME:

# ceph osd erasure-code-profile rm NAME

If the profile is referenced by a pool, the deletion will fail.

19.3.3 Displaying an erasure code profile's details

The following command displays details of an erasure code profile as identified by its NAME:

# ceph osd erasure-code-profile get NAME

19.3.4 Listing erasure code profiles

The following command lists the names of all erasure code profiles:

# ceph osd erasure-code-profile ls

19.4 Marking erasure coded pools with RADOS Block Device

To mark an EC pool as an RBD pool, tag it accordingly:

cephuser@adm > ceph osd pool application enable ec_pool_name rbd

Because RBD overwrites data in place, overwrites must also be enabled on the EC pool. This is supported only on BlueStore OSDs:

cephuser@adm > ceph osd pool set ec_pool_name allow_ec_overwrites true

RBD can store image data in EC pools. However, the image header and metadata still need to be stored in a replicated pool. Assuming you have the pool named 'rbd' for this purpose:

cephuser@adm > rbd create rbd/image_name --size 1T --data-pool ec_pool_name

You can use the image normally like any other image, except that all of its data will be stored in the ec_pool_name pool instead of the 'rbd' pool.