17 Stored data management #

The CRUSH algorithm determines how to store and retrieve data by computing data storage locations. CRUSH empowers Ceph clients to communicate with OSDs directly rather than through a centralized server or broker. With an algorithmically determined method of storing and retrieving data, Ceph avoids a single point of failure, a performance bottleneck, and a physical limit to its scalability.

CRUSH requires a map of your cluster, and uses the CRUSH Map to pseudo-randomly store and retrieve data in OSDs with a uniform distribution of data across the cluster.

CRUSH maps contain a list of OSDs, a list of 'buckets' for aggregating the devices into physical locations, and a list of rules that tell CRUSH how it should replicate data in a Ceph cluster's pools. By reflecting the underlying physical organization of the installation, CRUSH can model—and thereby address—potential sources of correlated device failures. Typical sources include physical proximity, a shared power source, and a shared network. By encoding this information into the cluster map, CRUSH placement policies can separate object replicas across different failure domains while still maintaining the desired distribution. For example, to address the possibility of concurrent failures, it may be desirable to ensure that data replicas are on devices using different shelves, racks, power supplies, controllers, and/or physical locations.

After you deploy a Ceph cluster, a default CRUSH Map is generated. It is fine for your Ceph sandbox environment. However, when you deploy a large-scale data cluster, you should give significant consideration to developing a custom CRUSH Map, because it will help you manage your Ceph cluster, improve performance and ensure data safety.

For example, if an OSD goes down, a CRUSH Map can help you locate the physical data center, room, row and rack of the host with the failed OSD in the event you need to use on-site support or replace hardware.

Similarly, CRUSH may help you identify faults more quickly. For example, if all OSDs in a particular rack go down simultaneously, the fault may lie with a network switch or the power supply to the rack rather than with the OSDs themselves.

A custom CRUSH Map can also help you identify the physical locations where Ceph stores redundant copies of data when the placement group(s) (refer to Section 17.4, “Placement groups”) associated with a failed host are in a degraded state.

There are three main sections to a CRUSH Map.

- OSD devices consist of any object storage device corresponding to a ceph-osd daemon.

- Buckets consist of a hierarchical aggregation of storage locations (for example rows, racks, hosts, etc.) and their assigned weights.

- Rule sets consist of the manner of selecting buckets.

17.1 OSD devices #

To map placement groups to OSDs, a CRUSH Map requires a list of OSD devices (the name of the OSD daemon). The list of devices appears first in the CRUSH Map.

#devices
device NUM osd.OSD_NAME class CLASS_NAME

For example:

#devices
device 0 osd.0 class hdd
device 1 osd.1 class ssd
device 2 osd.2 class nvme
device 3 osd.3 class ssd

As a general rule, an OSD daemon maps to a single disk.
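To make the layout of the devices section concrete, the following minimal Python sketch reads device lines of the form shown above into a dictionary. The function name `parse_devices` and the sample text are illustrative assumptions, not part of any Ceph tooling.

```python
def parse_devices(crush_text):
    """Collect 'device NUM osd.NAME class CLASS' lines from a decompiled CRUSH Map.

    Returns a dict mapping the device number to (OSD name, device class).
    The class is None when a line carries no 'class' field.
    """
    devices = {}
    for line in crush_text.splitlines():
        fields = line.split()
        if len(fields) >= 3 and fields[0] == "device":
            device_id = int(fields[1])
            name = fields[2]
            has_class = len(fields) >= 5 and fields[3] == "class"
            devices[device_id] = (name, fields[4] if has_class else None)
    return devices


# Sample input copied from the example in this section.
SAMPLE = """\
#devices
device 0 osd.0 class hdd
device 1 osd.1 class ssd
device 2 osd.2 class nvme
device 3 osd.3 class ssd
"""

if __name__ == "__main__":
    for device_id, (name, device_class) in parse_devices(SAMPLE).items():
        print(device_id, name, device_class)
```

The sketch only understands the devices section; buckets and rules are ignored.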

17.1.1 Device classes #

The flexibility of the CRUSH Map in controlling data placement is one of Ceph's strengths. It is also one of the most difficult parts of the cluster to manage. Device classes automate the most common changes to CRUSH Maps that administrators previously had to make manually.

17.1.1.1 The CRUSH management problem #

Ceph clusters are frequently built with multiple types of storage devices: HDD, SSD, NVMe, or even mixed classes of the above. We call these different types of storage devices device classes to avoid confusion with the type property of CRUSH buckets (for example, host, rack, row; see Section 17.2, “Buckets” for more details). Ceph OSDs backed by SSDs are much faster than those backed by spinning disks, making them better suited for certain workloads. Ceph makes it easy to create RADOS pools for different data sets or workloads and to assign different CRUSH rules to control data placement for those pools.

However, setting up the CRUSH rules to place data only on a certain class of device is tedious. Rules work in terms of the CRUSH hierarchy, but if the devices are mixed into the same hosts or racks (as in the sample hierarchy above), they will (by default) be mixed together and appear in the same sub-trees of the hierarchy. In previous versions of SUSE Enterprise Storage, separating them manually into separate trees involved creating multiple versions of each intermediate node, one for each device class.

17.1.1.2 Device classes #

An elegant solution that Ceph offers is to add a property called device class to each OSD. By default, OSDs will automatically set their device classes to either 'hdd', 'ssd', or 'nvme' based on the hardware properties exposed by the Linux kernel. These device classes are reported in a new column of the ceph osd tree command output:

cephuser@adm > ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 83.17899 root default

-4 23.86200 host cpach

2 hdd 1.81898 osd.2 up 1.00000 1.00000

3 hdd 1.81898 osd.3 up 1.00000 1.00000

4 hdd 1.81898 osd.4 up 1.00000 1.00000

5 hdd 1.81898 osd.5 up 1.00000 1.00000

6 hdd 1.81898 osd.6 up 1.00000 1.00000

7 hdd 1.81898 osd.7 up 1.00000 1.00000

8 hdd 1.81898 osd.8 up 1.00000 1.00000

15 hdd 1.81898 osd.15 up 1.00000 1.00000

10 nvme 0.93100 osd.10 up 1.00000 1.00000

0 ssd 0.93100 osd.0 up 1.00000 1.00000

9 ssd 0.93100 osd.9 up 1.00000 1.00000

If the automatic device class detection fails, for example because the device driver is not properly exposing information about the device via /sys/block, you can adjust device classes from the command line:

cephuser@adm > ceph osd crush rm-device-class osd.2 osd.3
done removing class of osd(s): 2,3
cephuser@adm > ceph osd crush set-device-class ssd osd.2 osd.3
set osd(s) 2,3 to class 'ssd'

17.1.1.3 Setting CRUSH placement rules #

CRUSH rules can restrict placement to a specific device class. For example, you can create a 'fast' replicated pool that distributes data only over SSD disks by running the following command:

cephuser@adm > ceph osd crush rule create-replicated RULE_NAME ROOT FAILURE_DOMAIN_TYPE DEVICE_CLASS

For example:

cephuser@adm > ceph osd crush rule create-replicated fast default host ssd

Create a pool named 'fast_pool' and assign it to the 'fast' rule:

cephuser@adm > ceph osd pool create fast_pool 128 128 replicated fast

The process for creating erasure code rules is slightly different. First, you create an erasure code profile that includes a property for your desired device class. Then, use that profile when creating the erasure coded pool:

cephuser@adm > ceph osd erasure-code-profile set myprofile \
 k=4 m=2 crush-device-class=ssd crush-failure-domain=host
cephuser@adm > ceph osd pool create mypool 64 erasure myprofile

In case you need to manually edit the CRUSH Map to customize your rule, the syntax has been extended to allow the device class to be specified. For example, the CRUSH rule generated by the above commands looks as follows:

rule ecpool {

id 2

type erasure

min_size 3

max_size 6

step set_chooseleaf_tries 5

step set_choose_tries 100

step take default class ssd

step chooseleaf indep 0 type host

step emit

}

The important difference here is that the 'take' command includes the additional 'class CLASS_NAME' suffix.
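As a rough illustration of what restricting a 'take' step to a device class means, the following Python sketch filters a small hierarchy down to the OSDs of one class. The host names, OSD names, and the `take_class` helper are hypothetical. Ceph maintains such per-class views internally, and this sketch only mimics the idea.

```python
# Hypothetical hierarchy: host name -> list of (OSD name, device class).
HIERARCHY = {
    "host-a": [("osd.0", "ssd"), ("osd.2", "hdd")],
    "host-b": [("osd.9", "ssd"), ("osd.3", "hdd")],
    "host-c": [("osd.4", "hdd")],
}


def take_class(hierarchy, device_class):
    """Return the part of the hierarchy that contains only OSDs of one class.

    Hosts without any OSD of the requested class are left out, so a rule
    working on the result can never pick a device of another class.
    """
    filtered = {}
    for host, osds in hierarchy.items():
        matching = [name for name, cls in osds if cls == device_class]
        if matching:
            filtered[host] = matching
    return filtered


if __name__ == "__main__":
    print(take_class(HIERARCHY, "ssd"))
```

In this toy data set, 'host-c' disappears from the 'ssd' view because it holds no SSD-backed OSD.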

17.1.1.4 Additional commands #

To list device classes used in a CRUSH Map, run:

cephuser@adm > ceph osd crush class ls

[

"hdd",

"ssd"

]

To list existing CRUSH rules, run:

cephuser@adm > ceph osd crush rule ls

replicated_rule

fast

To view details of the CRUSH rule named 'fast', run:

cephuser@adm > ceph osd crush rule dump fast

{

"rule_id": 1,

"rule_name": "fast",

"ruleset": 1,

"type": 1,

"min_size": 1,

"max_size": 10,

"steps": [

{

"op": "take",

"item": -21,

"item_name": "default~ssd"

},

{

"op": "chooseleaf_firstn",

"num": 0,

"type": "host"

},

{

"op": "emit"

}

]

}

To list OSDs that belong to an 'ssd' class, run:

cephuser@adm > ceph osd crush class ls-osd ssd

0

1

17.1.1.5 Migrating from a legacy SSD rule to device classes #

In SUSE Enterprise Storage prior to version 5, you needed to manually edit the CRUSH Map and maintain a parallel hierarchy for each specialized device type (such as SSD) in order to write rules that apply to these devices. Since SUSE Enterprise Storage 5, the device class feature has enabled this transparently.

You can transform a legacy rule and hierarchy to the new class-based rules by using the crushtool command. There are several types of transformation possible:

- crushtool --reclassify-root ROOT_NAME DEVICE_CLASS

This command takes everything in the hierarchy beneath ROOT_NAME and adjusts any rules that reference that root via

take ROOT_NAME

to instead

take ROOT_NAME class DEVICE_CLASS

It renumbers the buckets so that the old IDs are used for the specified class's 'shadow tree'. As a consequence, no data movement occurs.

Example 17.1: crushtool --reclassify-root #

Consider the following existing rule:

rule replicated_ruleset {
 id 0
 type replicated
 min_size 1
 max_size 10
 step take default
 step chooseleaf firstn 0 type rack
 step emit
}

If you reclassify the root 'default' as class 'hdd', the rule will become

rule replicated_ruleset {
 id 0
 type replicated
 min_size 1
 max_size 10
 step take default class hdd
 step chooseleaf firstn 0 type rack
 step emit
}

- crushtool --set-subtree-class BUCKET_NAME DEVICE_CLASS

This method marks every device in the subtree rooted at BUCKET_NAME with the specified device class.

--set-subtree-class is normally used in conjunction with the --reclassify-root option to ensure that all devices in that root are labeled with the correct class. However, some of those devices may intentionally have a different class, and therefore you do not want to relabel them. In such cases, exclude the --set-subtree-class option. Keep in mind that such remapping will not be perfect, because the previous rule is distributed across devices of multiple classes but the adjusted rules will only map to devices of the specified device class.

- crushtool --reclassify-bucket MATCH_PATTERN DEVICE_CLASS DEFAULT_PATTERN

This method allows merging a parallel type-specific hierarchy with the normal hierarchy. For example, many users have CRUSH Maps similar to the following one:

Example 17.2: crushtool --reclassify-bucket #

host node1 {
 id -2 # do not change unnecessarily
 # weight 109.152
 alg straw
 hash 0 # rjenkins1
 item osd.0 weight 9.096
 item osd.1 weight 9.096
 item osd.2 weight 9.096
 item osd.3 weight 9.096
 item osd.4 weight 9.096
 item osd.5 weight 9.096
 [...]
}
host node1-ssd {
 id -10 # do not change unnecessarily
 # weight 2.000
 alg straw
 hash 0 # rjenkins1
 item osd.80 weight 2.000
 [...]
}
root default {
 id -1 # do not change unnecessarily
 alg straw
 hash 0 # rjenkins1
 item node1 weight 110.967
 [...]
}
root ssd {
 id -18 # do not change unnecessarily
 # weight 16.000
 alg straw
 hash 0 # rjenkins1
 item node1-ssd weight 2.000
 [...]
}

This function reclassifies each bucket that matches a given pattern. The pattern can look like %suffix or prefix%. In the above example, you would use the pattern %-ssd. For each matched bucket, the remaining portion of the name that matches the '%' wild card specifies the base bucket. All devices in the matched bucket are labeled with the specified device class and then moved to the base bucket. If the base bucket does not exist (for example, if 'node12-ssd' exists but 'node12' does not), then it is created and linked underneath the specified default parent bucket. The old bucket IDs are preserved for the new shadow buckets to prevent data movement. Rules with take steps that reference the old buckets are adjusted.

- crushtool --reclassify-bucket BUCKET_NAME DEVICE_CLASS BASE_BUCKET

You can use the --reclassify-bucket option without a wild card to map a single bucket. For example, in the previous example, we want the 'ssd' bucket to be mapped to the default bucket.

The final command to convert the map composed of the above fragments would be as follows:

cephuser@adm > ceph osd getcrushmap -o original
cephuser@adm > crushtool -i original --reclassify \
 --set-subtree-class default hdd \
 --reclassify-root default hdd \
 --reclassify-bucket %-ssd ssd default \
 --reclassify-bucket ssd ssd default \
 -o adjusted

To verify that the conversion is correct, there is a --compare option that tests a large sample of inputs to the CRUSH Map and checks whether the same results come back. These inputs are controlled by the same options that apply to the --test option. For the above example, the command would be as follows:

cephuser@adm > crushtool -i original --compare adjusted
rule 0 had 0/10240 mismatched mappings (0)
rule 1 had 0/10240 mismatched mappings (0)
maps appear equivalent

Tip: If there were differences, you would see the ratio of remapped inputs in the parentheses.

If you are satisfied with the adjusted CRUSH Map, you can apply it to the cluster:

cephuser@adm > ceph osd setcrushmap -i adjusted
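The wild card matching described for --reclassify-bucket can be pictured with a short Python sketch. It is not the crushtool implementation. The `base_bucket` helper is hypothetical, and it assumes that the '%' wild card must match at least one character.

```python
def base_bucket(name, pattern):
    """Return the base bucket name if `name` matches `pattern`, else None.

    `pattern` is either '%suffix' or 'prefix%'. The part of the bucket name
    covered by '%' is taken as the name of the base bucket.
    Assumption: '%' has to match at least one character.
    """
    if pattern.startswith("%"):
        suffix = pattern[1:]
        if suffix and name.endswith(suffix) and len(name) > len(suffix):
            return name[: -len(suffix)]
    elif pattern.endswith("%"):
        prefix = pattern[:-1]
        if prefix and name.startswith(prefix) and len(name) > len(prefix):
            return name[len(prefix):]
    return None


if __name__ == "__main__":
    for bucket in ("node1-ssd", "node1", "ssd"):
        print(bucket, "->", base_bucket(bucket, "%-ssd"))
```

With the pattern %-ssd, the bucket 'node1-ssd' maps to the base bucket 'node1', while the bucket named 'ssd' does not match, which is consistent with the separate --reclassify-bucket ssd ssd default argument in the command above.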

17.1.1.6 For more information #

Find more details on CRUSH Maps in Section 17.5, “CRUSH Map manipulation”.

Find more details on Ceph pools in general in Chapter 18, Manage storage pools.

Find more details about erasure coded pools in Chapter 19, Erasure coded pools.

17.2 Buckets #

CRUSH maps contain a list of OSDs, which can be organized into a tree-structured arrangement of buckets for aggregating the devices into physical locations. Individual OSDs comprise the leaves on the tree.

| ID | Name | Description |
|---|---|---|
| 0 | osd | A specific device or OSD ([...] |
| 1 | host | The name of a host containing one or more OSDs. |
| 2 | chassis | Identifier for which chassis in the rack contains the [...] |
| 3 | rack | A computer rack. The default is [...] |
| 4 | row | A row in a series of racks. |
| 5 | pdu | Abbreviation for "Power Distribution Unit". |
| 6 | pod | Abbreviation for "Point of Delivery": in this context, a group of PDUs, or a group of rows of racks. |
| 7 | room | A room containing rows of racks. |
| 8 | datacenter | A physical data center containing one or more rooms. |
| 9 | region | Geographical region of the world (for example, NAM, LAM, EMEA, APAC etc.) |
| 10 | root | The root node of the tree of OSD buckets (normally set to [...] |

You can modify the existing types and create your own bucket types.

Ceph's deployment tools generate a CRUSH Map that contains a bucket for each host, and a root named 'default', which is useful for the default rbd pool. The remaining bucket types provide a means for storing information about the physical location of nodes/buckets, which makes cluster administration much easier when OSDs, hosts, or network hardware malfunction and the administrator needs access to physical hardware.

A bucket has a type, a unique name (string), a unique ID expressed as a negative integer, a weight relative to the total capacity/capability of its item(s), the bucket algorithm (straw2 by default), and the hash (0 by default, reflecting CRUSH Hash rjenkins1). A bucket may have one or more items. The items may consist of other buckets or OSDs. Items may have a weight that reflects the relative weight of the item.

[bucket-type] [bucket-name] {

id [a unique negative numeric ID]

weight [the relative capacity/capability of the item(s)]

alg [the bucket type: uniform | list | tree | straw2 | straw ]

hash [the hash type: 0 by default]

item [item-name] weight [weight]

}

The following example illustrates how you can use buckets to aggregate a pool and physical locations like a data center, a room, a rack and a row.

host ceph-osd-server-1 {

id -17

alg straw2

hash 0

item osd.0 weight 0.546

item osd.1 weight 0.546

}

row rack-1-row-1 {

id -16

alg straw2

hash 0

item ceph-osd-server-1 weight 2.00

}

rack rack-3 {

id -15

alg straw2

hash 0

item rack-3-row-1 weight 2.00

item rack-3-row-2 weight 2.00

item rack-3-row-3 weight 2.00

item rack-3-row-4 weight 2.00

item rack-3-row-5 weight 2.00

}

rack rack-2 {

id -14

alg straw2

hash 0

item rack-2-row-1 weight 2.00

item rack-2-row-2 weight 2.00

item rack-2-row-3 weight 2.00

item rack-2-row-4 weight 2.00

item rack-2-row-5 weight 2.00

}

rack rack-1 {

id -13

alg straw2

hash 0

item rack-1-row-1 weight 2.00

item rack-1-row-2 weight 2.00

item rack-1-row-3 weight 2.00

item rack-1-row-4 weight 2.00

item rack-1-row-5 weight 2.00

}

room server-room-1 {

id -12

alg straw2

hash 0

item rack-1 weight 10.00

item rack-2 weight 10.00

item rack-3 weight 10.00

}

datacenter dc-1 {

id -11

alg straw2

hash 0

item server-room-1 weight 30.00

item server-room-2 weight 30.00

}

root data {

id -10

alg straw2

hash 0

item dc-1 weight 60.00

item dc-2 weight 60.00

}

17.3 Rule sets #

CRUSH maps support the notion of 'CRUSH rules', which are the rules that determine data placement for a pool. For large clusters, you will likely create many pools where each pool may have its own CRUSH ruleset and rules. The default CRUSH Map has a rule for the default root. If you want more roots and more rules, you need to create them later or they will be created automatically when new pools are created.

In most cases, you will not need to modify the default rules. When you create a new pool, its default ruleset is 0.

A rule takes the following form:

rule rulename {

ruleset ruleset

type type

min_size min-size

max_size max-size

step step

}

- ruleset

An integer. Classifies a rule as belonging to a set of rules. Activated by setting the ruleset in a pool. This option is required. Default is 0.

- type

A string. Describes a rule for either a 'replicated' or 'erasure' coded pool. This option is required. Default is replicated.

- min_size

An integer. If a pool group makes fewer replicas than this number, CRUSH will NOT select this rule. This option is required. Default is 2.

- max_size

An integer. If a pool group makes more replicas than this number, CRUSH will NOT select this rule. This option is required. Default is 10.

- step take bucket

Takes a bucket specified by a name, and begins iterating down the tree. This option is required. For an explanation about iterating through the tree, see Section 17.3.1, “Iterating the node tree”.

- step target mode num type bucket-type

target can either be choose or chooseleaf. When set to choose, a number of buckets is selected. chooseleaf directly selects the OSDs (leaf nodes) from the sub-tree of each bucket in the set of buckets.

mode can either be firstn or indep. See Section 17.3.2, “firstn and indep”.

Selects the number of buckets of the given type. Where N is the number of options available: if num > 0 and num < N, choose that many buckets; if num < 0, choose N - |num| buckets; and if num == 0, choose N buckets (all available). Follows step take or step choose.

- step emit

Outputs the current value and empties the stack. Typically used at the end of a rule, but may also be used to form different trees in the same rule. Follows step choose.
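The handling of num in a choose step can be summarized in a few lines of Python. This is a sketch of the description above, not Ceph code. The `resolve_num` name is hypothetical, the sketch reads a negative num as "that many fewer than N", and capping values larger than N at N is an added assumption.

```python
def resolve_num(num, n):
    """Translate the num argument of a choose step into a bucket count.

    n is the number of options available (N in the text above).
    """
    if num == 0:
        return n                 # choose all N available
    if num < 0:
        return max(n + num, 0)   # |num| fewer than N, never below zero
    return min(num, n)           # assumption: never more than N


if __name__ == "__main__":
    for num in (0, 2, -1):
        print(num, "->", resolve_num(num, 3))
```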

17.3.1 Iterating the node tree #

The structure defined with the buckets can be viewed as a node tree. Buckets are nodes and OSDs are leaves in this tree.

Rules in the CRUSH Map define how OSDs are selected from this tree. A rule starts with a node and then iterates down the tree to return a set of OSDs. It is not possible to define which branch needs to be selected. Instead, the CRUSH algorithm ensures that the set of OSDs fulfills the replication requirements and evenly distributes the data.

With step take bucket, the iteration through the node tree begins at the given bucket (not bucket type). If OSDs from all branches in the tree are to be returned, the bucket must be the root bucket. Otherwise the following steps only iterate through a sub-tree.

After step take, one or more step choose entries follow in the rule definition. Each step choose chooses a defined number of nodes (or branches) from the previously selected upper node.

In the end, the selected OSDs are returned with step emit.

step chooseleaf is a convenience function that directly selects OSDs from branches of the given bucket.
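The take, choose, chooseleaf, and emit steps described above can be walked through with a toy model in Python. This is only a sketch: the tree, the `score` function (a SHA-256 stand-in for the CRUSH hash), and the rule of thumb that a count of 0 selects every child are simplifying assumptions, and real CRUSH additionally takes weights and failures into account.

```python
import hashlib

# Hypothetical toy hierarchy: one rack with three hosts of two OSDs each.
TREE = {
    "rack1": ["host1", "host2", "host3"],
    "host1": ["osd.0", "osd.1"],
    "host2": ["osd.2", "osd.3"],
    "host3": ["osd.4", "osd.5"],
}


def score(key, item):
    """Deterministic pseudo-random score (stand-in for the CRUSH hash)."""
    digest = hashlib.sha256(f"{key}:{item}".encode()).hexdigest()
    return int(digest, 16)


def choose(key, parents, count):
    """From each parent, pick `count` children (all children if count is 0)."""
    picked = []
    for parent in parents:
        children = sorted(TREE[parent], key=lambda c: score(key, c), reverse=True)
        picked.extend(children if count == 0 else children[:count])
    return picked


def chooseleaf(key, parents, count):
    """Pick buckets as choose() does, then one leaf below each of them."""
    return choose(key, choose(key, parents, count), 1)


def two_step_rule(key):
    nodes = ["rack1"]               # step take rack1
    nodes = choose(key, nodes, 0)   # choose the hosts
    nodes = choose(key, nodes, 1)   # choose one OSD per host
    return nodes                    # step emit


def chooseleaf_rule(key):
    return chooseleaf(key, ["rack1"], 0)  # take, chooseleaf, emit


if __name__ == "__main__":
    print(two_step_rule("object-a"))
    print(chooseleaf_rule("object-a"))
```

In this model both rules return one OSD from each of the three hosts, which is the sense in which chooseleaf is a shortcut for two consecutive choose steps.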

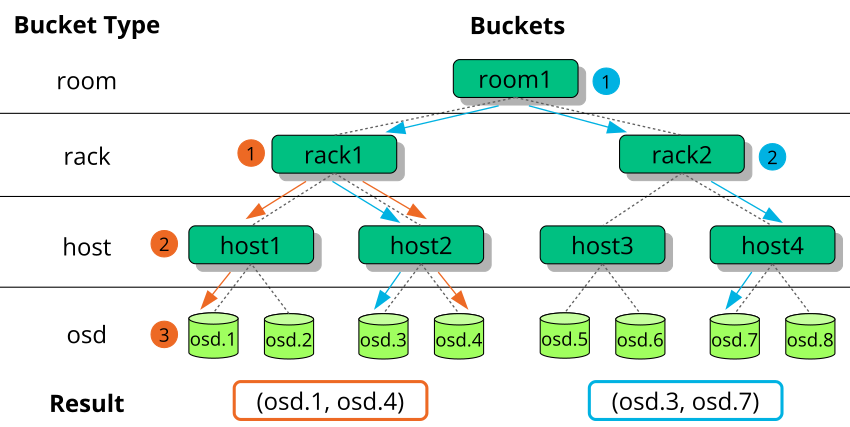

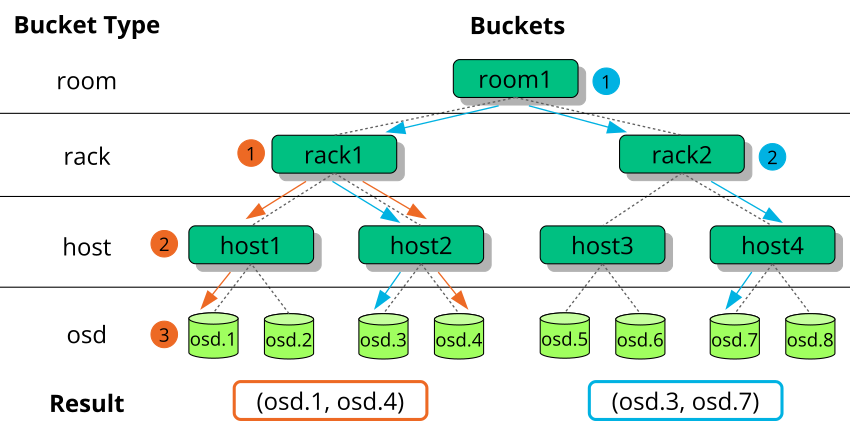

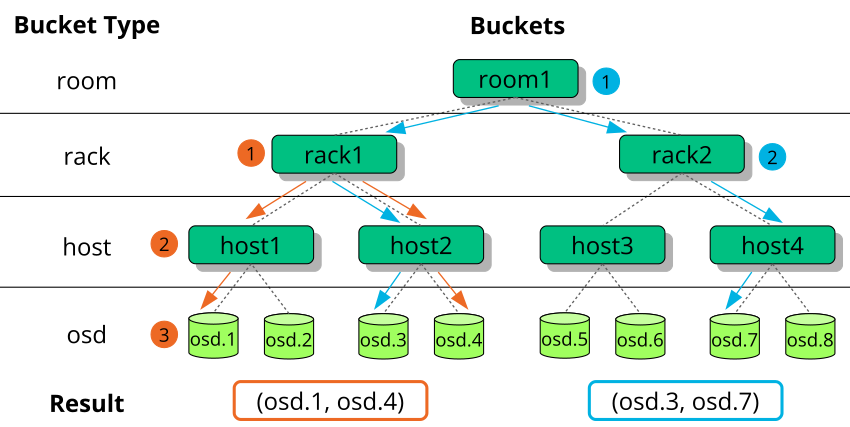

Figure 17.2, “Example tree” provides an example of how step is used to iterate through a tree. The orange arrows and numbers correspond to example1a and example1b, while blue corresponds to example2 in the following rule definitions.

# orange arrows

rule example1a {

ruleset 0

type replicated

min_size 2

max_size 10

# orange (1)

step take rack1

# orange (2)

step choose firstn 0 type host

# orange (3)

step choose firstn 1 type osd

step emit

}

rule example1b {

ruleset 0

type replicated

min_size 2

max_size 10

# orange (1)

step take rack1

# orange (2) + (3)

step chooseleaf firstn 0 type host

step emit

}

# blue arrows

rule example2 {

ruleset 0

type replicated

min_size 2

max_size 10

# blue (1)

step take room1

# blue (2)

step chooseleaf firstn 0 type rack

step emit

}

17.3.2 firstn and indep #

A CRUSH rule defines replacements for failed nodes or OSDs (see Section 17.3, “Rule sets”). The keyword step requires either firstn or indep as a parameter. Figure 17.3, “Node replacement methods” provides an example.

firstn adds replacement nodes to the end of the list of active nodes. In case of a failed node, the following healthy nodes are shifted to the left to fill the gap of the failed node. This is the default and desired method for replicated pools, because a secondary node already has all data and therefore can take over the duties of the primary node immediately.

indep selects fixed replacement nodes for each active node. The replacement of a failed node does not change the order of the remaining nodes. This is desired for erasure coded pools. In erasure coded pools, the data stored on a node depends on its position in the node selection. When the order of nodes changes, all data on affected nodes needs to be relocated.
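The difference between the two modes can be shown on a plain list of node IDs. The following Python sketch only models the ordering behavior described above. The function names and the node numbers are hypothetical, and the way CRUSH actually picks the replacement node is not modeled.

```python
def firstn_replace(active, failed, replacement):
    """firstn: drop the failed node, shift the rest left, append the new node."""
    survivors = [node for node in active if node != failed]
    return survivors + [replacement]


def indep_replace(active, failed, replacement):
    """indep: put the new node into the failed node's position, keep the order."""
    return [replacement if node == failed else node for node in active]


if __name__ == "__main__":
    nodes = [1, 2, 3, 4, 5]
    print(firstn_replace(nodes, failed=2, replacement=6))  # [1, 3, 4, 5, 6]
    print(indep_replace(nodes, failed=2, replacement=6))   # [1, 6, 3, 4, 5]
```

With firstn, nodes 3, 4, and 5 all change position, which is harmless for replicas that hold identical data. With indep, only the failed position changes, so the position-dependent chunks of an erasure coded pool stay where they are.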

17.4 Placement groups #

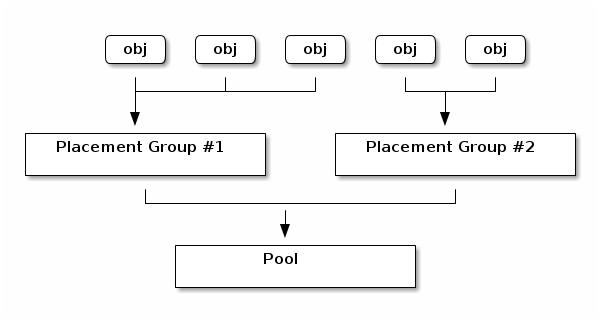

Ceph maps objects to placement groups (PGs). Placement groups are shards or fragments of a logical object pool that place objects as a group into OSDs. Placement groups reduce the amount of per-object metadata when Ceph stores the data in OSDs. A larger number of placement groups—for example, 100 per OSD—leads to better balancing.

17.4.1 Using placement groups #

A placement group (PG) aggregates objects within a pool. The main reason is that tracking object placement and metadata on a per-object basis is computationally expensive. For example, a system with millions of objects cannot track placement of each of its objects directly.

The Ceph client calculates which placement group an object belongs to. It does this by hashing the object ID and applying an operation based on the number of PGs in the defined pool and the ID of the pool.
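The calculation can be sketched in Python as follows. This is an illustration under stated assumptions rather than the Ceph client code: `zlib.crc32` stands in for the hash function that Ceph actually uses, the helper names are hypothetical, and the 'stable modulo' step is a simplified model of how a hash can be reduced to a PG number when the PG count is not a power of two.

```python
import zlib


def stable_mod(value, pg_num, mask):
    """Reduce a hash to the range [0, pg_num), also for non-power-of-two counts."""
    if (value & mask) < pg_num:
        return value & mask
    return value & (mask >> 1)


def object_to_pg(pool_id, object_name, pg_num):
    """Return a PG identifier of the form POOL_ID.HEX_PG for an object name."""
    # Stand-in hash: the real client uses a different hash function.
    digest = zlib.crc32(object_name.encode("utf-8"))
    # Smallest all-ones bit mask that covers pg_num - 1.
    mask = (1 << (pg_num - 1).bit_length()) - 1
    pg = stable_mod(digest, pg_num, mask)
    return f"{pool_id}.{pg:x}"


if __name__ == "__main__":
    for name in ("image-0001", "image-0002", "backup.tar"):
        print(name, "->", object_to_pg(1, name, 128))
```

Because the result depends only on the object name, the pool ID, and the number of PGs, every client arrives at the same placement group without consulting a central lookup table.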

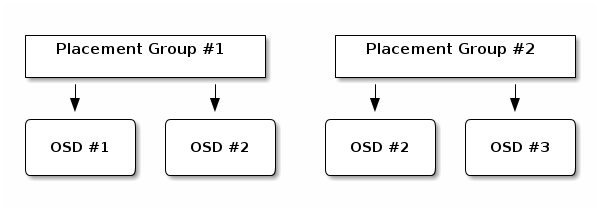

The object's contents within a placement group are stored in a set of OSDs. For example, in a replicated pool of size two, each placement group will store objects on two OSDs:

If OSD #2 fails, another OSD will be assigned to placement group #1 and will be filled with copies of all objects in OSD #1. If the pool size is changed from two to three, an additional OSD will be assigned to the placement group and will receive copies of all objects in the placement group.

Placement groups do not own the OSD. They share it with other placement groups from the same pool or even other pools. If OSD #2 fails, placement group #2 will also need to restore copies of objects, using OSD #3.

When the number of placement groups increases, the new placement groups will be assigned OSDs. The result of the CRUSH function will also change and some objects from the former placement groups will be copied over to the new placement groups and removed from the old ones.

17.4.2 Determining the value of PG_NUM #

Since Ceph Nautilus (v14.x), you can use the Ceph Manager pg_autoscaler module to auto-scale the PGs as needed. If you want to enable this feature, refer to Section 7.1.1.1, “Default PG and PGP counts”.

When creating a new pool, you can still choose the value of PG_NUM manually:

# ceph osd pool create POOL_NAME PG_NUM

PG_NUM cannot be calculated automatically. Following are a few commonly used values, depending on the number of OSDs in the cluster:

- Fewer than 5 OSDs:

Set PG_NUM to 128.

- Between 5 and 10 OSDs:

Set PG_NUM to 512.

- Between 10 and 50 OSDs:

Set PG_NUM to 1024.

As the number of OSDs increases, choosing the right value for PG_NUM becomes more important. PG_NUM strongly affects the behavior of the cluster as well as the durability of the data in case of OSD failure.

17.4.2.1 Calculating placement groups for more than 50 OSDs #

If you have fewer than 50 OSDs, use the preselection described in Section 17.4.2, “Determining the value of PG_NUM”. If you have more than 50 OSDs, we recommend approximately 50 to 100 placement groups per OSD to balance resource usage, data durability, and distribution. For a single pool of objects, you can use the following formula to get a baseline:

total PGs = (OSDs * 100) / POOL_SIZE

Where POOL_SIZE is either the number of replicas for replicated pools, or the 'k'+'m' sum for erasure coded pools as returned by the ceph osd erasure-code-profile get command. You should round the result up to the nearest power of 2. Rounding up is recommended for the CRUSH algorithm to evenly balance the number of objects among placement groups.

As an example, for a cluster with 200 OSDs and a pool size of 3 replicas, you would estimate the number of PGs as follows:

(200 * 100) / 3 = 6667

Rounded up to the nearest power of 2, the result is 8192.
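The same baseline calculation, expressed as a small Python helper. The function name `baseline_pg_count` and the `pgs_per_osd` parameter are illustrative choices. The value of 100 comes from the formula above.

```python
def baseline_pg_count(osd_count, pool_size, pgs_per_osd=100):
    """Baseline PG count for a single pool, rounded up to a power of 2.

    pool_size is the replica count for replicated pools or k+m for
    erasure coded pools.
    """
    raw = osd_count * pgs_per_osd / pool_size
    power = 1
    while power < raw:
        power *= 2
    return power


if __name__ == "__main__":
    # 200 OSDs, 3 replicas: (200 * 100) / 3 = 6667, rounded up to 8192.
    print(baseline_pg_count(200, 3))
```

For an erasure coded pool with k=4 and m=2, you would pass 6 as the pool size.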

When using multiple data pools for storing objects, you need to ensure that you balance the number of placement groups per pool with the number of placement groups per OSD. You need to reach a reasonable total number of placement groups that provides reasonably low variance per OSD without taxing system resources or making the peering process too slow.

For example, a cluster of 10 pools, each with 512 placement groups on 10 OSDs, is a total of 5,120 placement groups spread over 10 OSDs, that is 512 placement groups per OSD. Such a setup does not use too many resources. However, if 1000 pools were created with 512 placement groups each, the OSDs would handle approximately 50,000 placement groups each and it would require significantly more resources and time for peering.

17.4.3 Setting the number of placement groups #

Since Ceph Nautilus (v14.x), you can use the Ceph Manager pg_autoscaler module to auto-scale the PGs as needed. If you want to enable this feature, refer to Section 7.1.1.1, “Default PG and PGP counts”.

If you still need to specify the number of placement groups in a pool manually, you need to specify them at the time of pool creation (see Section 18.1, “Creating a pool”). Once you have set placement groups for a pool, you may increase the number of placement groups by running the following command:

# ceph osd pool set POOL_NAME pg_num PG_NUM

After you increase the number of placement groups, you also need to increase the number of placement groups for placement (PGP_NUM) before your cluster will rebalance. PGP_NUM is the number of placement groups that the CRUSH algorithm considers for placement. Increasing PG_NUM splits the placement groups, but data is not migrated to the newer placement groups until PGP_NUM is increased. PGP_NUM should be equal to PG_NUM. To increase the number of placement groups for placement, run the following:

# ceph osd pool set POOL_NAME pgp_num PGP_NUM

17.4.4 Finding the number of placement groups #

To find out the number of placement groups in a pool, run the following get command:

# ceph osd pool get POOL_NAME pg_num

17.4.5 Finding a cluster's PG statistics #

To find out the statistics for the placement groups in your cluster, run the following command:

# ceph pg dump [--format FORMAT]

Valid formats are 'plain' (default) and 'json'.

17.4.6 Finding statistics for stuck PGs #

To find out the statistics for all placement groups stuck in a specified state, run the following:

# ceph pg dump_stuck STATE \

[--format FORMAT] [--threshold THRESHOLD]

STATE is one of 'inactive' (PGs cannot process reads or writes because they are waiting for an OSD with the most up-to-date data to come up), 'unclean' (PGs contain objects that are not replicated the desired number of times), 'stale' (PGs are in an unknown state: the OSDs that host them have not reported to the monitor cluster in a time interval specified by the mon_osd_report_timeout option), 'undersized', or 'degraded'.

Valid formats are 'plain' (default) and 'json'.

The threshold defines the minimum number of seconds the placement group is stuck before including it in the returned statistics (300 seconds by default).

17.4.7 Searching a placement group map #

To search for the placement group map for a particular placement group, run the following:

# ceph pg map PG_ID

Ceph will return the placement group map, the placement group, and the OSD status:

# ceph pg map 1.6c
osdmap e13 pg 1.6c (1.6c) -> up [1,0] acting [1,0]

17.4.8 Retrieving a placement group's statistics #

To retrieve statistics for a particular placement group, run the following:

# ceph pg PG_ID query

17.4.9 Scrubbing a placement group #

To scrub (Section 17.6, “Scrubbing placement groups”) a placement group, run the following:

# ceph pg scrub PG_ID

Ceph checks the primary and replica nodes, generates a catalog of all objects in the placement group, and compares them to ensure that no objects are missing or mismatched and their contents are consistent. Assuming the replicas all match, a final semantic sweep ensures that all of the snapshot-related object metadata is consistent. Errors are reported via logs.

17.4.10 Prioritizing backfill and recovery of placement groups #

You may run into a situation where several placement groups require recovery and/or back-fill, while some groups hold data more important than others. For example, those PGs may hold data for images used by running machines and other PGs may be used by inactive machines or less relevant data. In that case, you may want to prioritize recovery of those groups so that performance and availability of data stored on those groups is restored earlier. To mark particular placement groups as prioritized during backfill or recovery, run the following:

# ceph pg force-recovery PG_ID1 [PG_ID2 ... ]
# ceph pg force-backfill PG_ID1 [PG_ID2 ... ]

This will cause Ceph to perform recovery or backfill on specified placement groups first, before other placement groups. This does not interrupt currently ongoing backfills or recovery, but causes specified PGs to be processed as soon as possible. If you change your mind or prioritize the wrong groups, cancel the prioritization:

# ceph pg cancel-force-recovery PG_ID1 [PG_ID2 ... ]
# ceph pg cancel-force-backfill PG_ID1 [PG_ID2 ... ]

The cancel-* commands remove the 'force' flag from the PGs so that they are processed in default order. Again, this does not affect placement groups currently being processed, only those that are still queued. The 'force' flag is cleared automatically after recovery or backfill of the group is done.

17.4.11 Reverting lost objects #

If the cluster has lost one or more objects and you have decided to abandon the search for the lost data, you need to mark the unfound objects as 'lost'.

If the objects are still lost after having queried all possible locations, you may need to give up on the lost objects. This is possible given unusual combinations of failures that allow the cluster to learn about writes that were performed before the writes themselves are recovered.

Currently the only supported option is 'revert', which will either roll back to a previous version of the object, or forget about it entirely in case of a new object. To mark the 'unfound' objects as 'lost', run the following:

cephuser@adm > ceph pg PG_ID mark_unfound_lost revert|delete

17.4.12 Enabling the PG auto-scaler #

Placement groups (PGs) are an internal implementation detail of how Ceph distributes data. By enabling pg-autoscaling, you can allow the cluster to either make recommendations or automatically tune PGs based on how the cluster is used.

Each pool in the system has a pg_autoscale_mode property. The autoscaler is configured on a per-pool basis, and can run in one of three modes:

- off

Disable autoscaling for this pool. It is up to the administrator to choose an appropriate PG number for each pool.

- on

Enable automated adjustments of the PG count for the given pool.

- warn

Raise health alerts when the PG count should be adjusted.

To set the autoscaling mode for existing pools:

cephuser@adm > ceph osd pool set POOL_NAME pg_autoscale_mode MODE
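If the mode is set from a script, it helps to reject anything other than the three documented values before calling ceph. The following is a minimal sketch: build_autoscale_command is a hypothetical helper, and the pool name in the example is made up. It only builds the argument list.

```python
# Sketch only: build_autoscale_command is a hypothetical helper, not a Ceph API.

VALID_MODES = ("off", "on", "warn")  # the three modes listed above


def build_autoscale_command(pool_name, mode):
    """Return the argv list that sets pg_autoscale_mode for one pool."""
    if not pool_name:
        raise ValueError("pool_name must not be empty")
    if mode not in VALID_MODES:
        raise ValueError(f"mode must be one of {VALID_MODES}, got {mode!r}")
    return ["ceph", "osd", "pool", "set", pool_name, "pg_autoscale_mode", mode]


if __name__ == "__main__":
    # "mypool" is a made-up pool name.
    print(" ".join(build_autoscale_command("mypool", "warn")))
```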

You can also configure the default pg_autoscale_mode that is applied to any pools that are created in the future with:

cephuser@adm > ceph config set global osd_pool_default_pg_autoscale_mode MODE

You can view each pool, its relative utilization, and any suggested changes to the PG count with this command:

cephuser@adm > ceph osd pool autoscale-status

17.5 CRUSH Map manipulation #

This section introduces basic ways to manipulate a CRUSH Map, such as editing a CRUSH Map, changing CRUSH Map parameters, and adding, moving, or removing an OSD.

17.5.1 Editing a CRUSH Map #

To edit an existing CRUSH map, do the following:

Get a CRUSH Map. To get the CRUSH Map for your cluster, execute the following:

cephuser@adm > ceph osd getcrushmap -o compiled-crushmap-filename

Ceph will output (-o) a compiled CRUSH Map to the file name you specified. Since the CRUSH Map is in a compiled form, you must decompile it before you can edit it.

Decompile a CRUSH Map. To decompile a CRUSH Map, execute the following:

cephuser@adm > crushtool -d compiled-crushmap-filename \
 -o decompiled-crushmap-filename

Ceph will decompile (-d) the compiled CRUSH Map and output (-o) it to the file name you specified.

Edit at least one of the Devices, Buckets, and Rules parameters.

Compile a CRUSH Map. To compile a CRUSH Map, execute the following:

cephuser@adm > crushtool -c decompiled-crushmap-filename \
 -o compiled-crushmap-filename

Ceph will store a compiled CRUSH Map to the file name you specified.

Set a CRUSH Map. To set the CRUSH Map for your cluster, execute the following:

cephuser@adm > ceph osd setcrushmap -i compiled-crushmap-filename

Ceph will input the compiled CRUSH Map of the file name you specified as the CRUSH Map for the cluster.

Use a versioning system, such as git or svn, for the exported and modified CRUSH Map files. It makes a possible rollback simple.

Test the new adjusted CRUSH Map using the crushtool --test command, and compare to the state before applying the new CRUSH Map. You may find the following command switches useful: --show-statistics, --show-mappings, --show-bad-mappings, --show-utilization, --show-utilization-all, --show-choose-tries
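The whole edit cycle described above can be laid out as an ordered list of commands. The following is a minimal sketch: crush_edit_commands is a hypothetical helper and the file names are placeholders. The -i switch used to hand the compiled map to crushtool --test is taken from crushtool's own usage, not from the steps above. The helper only returns the commands; it does not run them, and the manual edit still happens between steps 2 and 3.

```python
# Sketch only: crush_edit_commands is a hypothetical helper; file names are placeholders.

def crush_edit_commands(compiled="crushmap.bin",
                        decompiled="crushmap.txt",
                        new_compiled="crushmap-new.bin"):
    """Return the commands of the edit workflow, in order, as argv lists.

    Editing the decompiled text file is done by hand between steps 2 and 3.
    """
    return [
        # 1. Export the compiled CRUSH Map from the cluster.
        ["ceph", "osd", "getcrushmap", "-o", compiled],
        # 2. Decompile it into an editable text file.
        ["crushtool", "-d", compiled, "-o", decompiled],
        # 3. Compile the edited text file into a new binary map.
        ["crushtool", "-c", decompiled, "-o", new_compiled],
        # 4. Test the new map before applying it.
        ["crushtool", "-i", new_compiled, "--test", "--show-bad-mappings"],
        # 5. Apply the new map to the cluster.
        ["ceph", "osd", "setcrushmap", "-i", new_compiled],
    ]


if __name__ == "__main__":
    for step, argv in enumerate(crush_edit_commands(), start=1):
        print(step, " ".join(argv))
```

Keeping the original and the new compiled map under separate names, as in this sketch, leaves the exported map available for a rollback with ceph osd setcrushmap -i.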

17.5.2 Adding or moving an OSD #

To add or move an OSD in the CRUSH Map of a running cluster, execute the following:

cephuser@adm > ceph osd crush set id_or_name weight root=pool-name \
 bucket-type=bucket-name ...

- id

An integer. The numeric ID of the OSD. This option is required.

- name

A string. The full name of the OSD. This option is required.

- weight

A double. The CRUSH weight for the OSD. This option is required.

- root

A key/value pair. By default, the CRUSH hierarchy contains the pool default as its root. This option is required.

- bucket-type

Key/value pairs. You may specify the OSD's location in the CRUSH hierarchy.

The following example adds osd.0 to the hierarchy, or moves the OSD from a previous location.

cephuser@adm > ceph osd crush set osd.0 1.0 root=data datacenter=dc1 room=room1 \
 row=foo rack=bar host=foo-bar-1

17.5.3 Difference between ceph osd reweight and ceph osd crush reweight #

There are two similar commands that change the 'weight' of a Ceph OSD. The context of their usage is different and may cause confusion.

17.5.3.1 ceph osd reweight #

Usage:

cephuser@adm > ceph osd reweight OSD_NAME NEW_WEIGHT

ceph osd reweight sets an override weight on the Ceph OSD. This value is in the range of 0 to 1, and forces CRUSH to reposition the data that would otherwise live on this drive. It does not change the weights assigned to the buckets above the OSD, and is a corrective measure in case the normal CRUSH distribution is not working out quite right. For example, if one of your OSDs is at 90% and the others are at 40%, you could reduce this weight to try and compensate for it.

Note that ceph osd reweight is not a persistent setting. When an OSD gets marked out, its weight will be set to 0 and when it gets marked in again, the weight will be changed to 1.

17.5.3.2 ceph osd crush reweight #

Usage:

cephuser@adm > ceph osd crush reweight OSD_NAME NEW_WEIGHT

ceph osd crush reweight sets the CRUSH weight of the OSD. This weight is an arbitrary value (generally the size of the disk in TB) and controls how much data the system tries to allocate to the OSD.
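To make the difference between the two weights concrete, the sketch below computes a CRUSH weight from a disk size and suggests an override weight for an overfull OSD. Both functions are hypothetical helpers, not Ceph code. Treating 1 TB as 10**12 bytes and scaling the override by the ratio of target to current utilization are assumptions made for illustration; Ceph does not necessarily compute either value this way.

```python
# Sketch only: both helpers are hypothetical and illustrate the two kinds of weight.

def crush_weight_from_bytes(size_bytes, bytes_per_unit=10**12):
    """CRUSH weight as the disk size in TB.

    Assumption: 1 TB = 10**12 bytes. Pass 2**40 to count in TiB instead.
    """
    return size_bytes / bytes_per_unit


def suggest_override_weight(osd_utilization, target_utilization,
                            current_override=1.0):
    """Suggest a value for 'ceph osd reweight' for one OSD.

    Assumption (simple heuristic): scale the current override by
    target/actual utilization and clamp the result to the range 0 to 1.
    """
    if osd_utilization <= 0:
        return current_override
    suggested = current_override * target_utilization / osd_utilization
    return max(0.0, min(1.0, suggested))


if __name__ == "__main__":
    # A 4 TB disk gets a CRUSH weight of 4.0.
    print(crush_weight_from_bytes(4 * 10**12))
    # An OSD at 90% utilization with a target of 45%: lower the override.
    print(suggest_override_weight(0.90, 0.45))
    # An OSD below the target keeps the maximum override of 1.0.
    print(suggest_override_weight(0.30, 0.45))
```

The CRUSH weight describes the capacity of the device and stays fixed as long as the disk does not change, while the override weight is a temporary correction that is reset when the OSD is marked out and in again.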

17.5.4 Removing an OSD #

To remove an OSD from the CRUSH Map of a running cluster, execute the following:

cephuser@adm > ceph osd crush remove OSD_NAME

17.5.5 Adding a bucket #

To add a bucket to the CRUSH Map of a running cluster, execute the ceph osd crush add-bucket command:

cephuser@adm > ceph osd crush add-bucket BUCKET_NAME BUCKET_TYPE

17.5.6 Moving a bucket #

To move a bucket to a different location or position in the CRUSH Map hierarchy, execute the following:

cephuser@adm > ceph osd crush move BUCKET_NAME BUCKET_TYPE=BUCKET_NAME [...]

For example:

cephuser@adm > ceph osd crush move bucket1 datacenter=dc1 room=room1 row=foo rack=bar host=foo-bar-1

17.5.7 Removing a bucket #

To remove a bucket from the CRUSH Map hierarchy, execute the following:

cephuser@adm > ceph osd crush remove BUCKET_NAME

A bucket must be empty before removing it from the CRUSH hierarchy.

17.6 Scrubbing placement groups #

In addition to making multiple copies of objects, Ceph ensures data integrity by scrubbing placement groups (find more information about placement groups in Section 1.3.2, “Placement groups”). Ceph scrubbing is analogous to running fsck on the object storage layer. For each placement group, Ceph generates a catalog of all objects and compares each primary object and its replicas to ensure that no objects are missing or mismatched. Daily light scrubbing checks the object size and attributes, while weekly deep scrubbing reads the data and uses checksums to ensure data integrity.

Scrubbing is important for maintaining data integrity, but it can reduce performance. You can adjust the following settings to increase or decrease scrubbing operations:

- osd max scrubs

The maximum number of simultaneous scrub operations for a Ceph OSD. Default is 1.

- osd scrub begin hour, osd scrub end hour

The hours of day (0 to 24) that define a time window during which the scrubbing can happen. By default, begins at 0 and ends at 24.

Important: If the placement group's scrub interval exceeds the osd scrub max interval setting, the scrub will happen no matter what time window you define for scrubbing.

- osd scrub during recovery

Allows scrubs during recovery. Setting this to 'false' will disable scheduling new scrubs while there is an active recovery. Already running scrubs will continue. This option is useful for reducing load on busy clusters. Default is 'true'.

- osd scrub thread timeout

The maximum time in seconds before a scrub thread times out. Default is 60.

- osd scrub finalize thread timeout

The maximum time in seconds before a scrub finalize thread times out. Default is 60*10.

- osd scrub load threshold

The normalized maximum load. Ceph will not scrub when the system load (as defined by the ratio of getloadavg() / number of online cpus) is higher than this number. Default is 0.5.

- osd scrub min interval

The minimal interval in seconds for scrubbing Ceph OSD when the Ceph cluster load is low. Default is 60*60*24 (once a day).

- osd scrub max interval

The maximum interval in seconds for scrubbing Ceph OSD, irrespective of cluster load. Default is 7*60*60*24 (once a week).

- osd scrub chunk min

The minimum number of object store chunks to scrub during a single operation. Ceph blocks writes to a single chunk during a scrub. Default is 5.

- osd scrub chunk max

The maximum number of object store chunks to scrub during a single operation. Default is 25.

- osd scrub sleep

Time to sleep before scrubbing the next group of chunks. Increasing this value slows down the whole scrub operation, while client operations are less impacted. Default is 0.

- osd deep scrub interval

The interval for 'deep' scrubbing (fully reading all data). The osd scrub load threshold option does not affect this setting. Default is 60*60*24*7 (once a week).

- osd scrub interval randomize ratio

Add a random delay to the osd scrub min interval value when scheduling the next scrub job for a placement group. The delay is a random value smaller than the result of osd scrub min interval * osd scrub interval randomize ratio. Therefore, the default setting practically randomly spreads the scrubs out in the allowed time window of [1, 1.5] * osd scrub min interval. Default is 0.5.

- osd deep scrub stride

Read size when doing a deep scrub. Default is 524288 (512 kB).
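How the time window, load threshold, and interval settings interact can be sketched as a small model. This is an illustration of the rules described above, not Ceph's scheduler: all function names are hypothetical, and the handling of a window that wraps past midnight (for example 22 to 6) and of equal begin and end hours is an assumption.

```python
# Sketch only: a simplified model of the scrub settings, not Ceph's scheduler.
import random

DAY = 60 * 60 * 24  # seconds


def in_scrub_window(hour, begin_hour=0, end_hour=24):
    """True if `hour` (0 to 23) falls inside the scrub time window.

    Assumptions: begin > end wraps past midnight, and begin == end
    means there is no restriction.
    """
    if begin_hour == end_hour:
        return True
    if begin_hour < end_hour:
        return begin_hour <= hour < end_hour
    return hour >= begin_hour or hour < end_hour


def load_allows_scrub(loadavg, online_cpus, threshold=0.5):
    """True unless the normalized load (loadavg / online CPUs) is above the threshold."""
    return loadavg / online_cpus <= threshold


def next_scrub_delay(min_interval=DAY, randomize_ratio=0.5, rng=random):
    """Seconds until the next scheduled scrub.

    min_interval plus a random delay below min_interval * randomize_ratio,
    which lands in [1, 1.5] * min_interval with the default ratio.
    """
    return min_interval + rng.random() * min_interval * randomize_ratio


def scrub_permitted(hour, loadavg, online_cpus, seconds_since_last_scrub,
                    begin_hour=0, end_hour=24, load_threshold=0.5,
                    max_interval=7 * DAY):
    """Combine the rules: past max_interval, the window and load are ignored."""
    if seconds_since_last_scrub > max_interval:
        return True
    return (in_scrub_window(hour, begin_hour, end_hour)
            and load_allows_scrub(loadavg, online_cpus, load_threshold))


if __name__ == "__main__":
    # Noon, window 22 to 6, low load, last scrub one day ago: outside the window.
    print(scrub_permitted(12, 0.4, 4, DAY, begin_hour=22, end_hour=6))
    # Same time of day, but the last scrub was eight days ago: the scrub runs anyway.
    print(scrub_permitted(12, 3.0, 4, 8 * DAY, begin_hour=22, end_hour=6))
```

The model shows why a narrow scrub window does not prevent scrubs indefinitely: once a placement group has gone longer than osd scrub max interval without a scrub, the window no longer applies.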